Recently, researchers from MIT, Adobe Research and Tsinghua University introduced a technique that improves the data efficiency of GAN models by applying various types of differentiable augmentations to both real and fake samples. The method is called Differentiable Augmentation, or DiffAugment.

Generative Adversarial Networks (GANs) have advanced rapidly over the past few years. From creating artistic paintings to generating realistic faces of people who don’t exist, GANs have produced several groundbreaking results.

However, the success of these models comes at a cost in both computation and data. GANs are data-hungry: they rely heavily on vast quantities of diverse, high-quality training examples to generate high-fidelity natural images across many categories.

Collecting such large-scale datasets takes a long time and considerable human effort, on top of prohibitive annotation costs. This is why the researchers set out to remove the need for immense datasets in GAN training. However, simply training on less data has consequences such as overfitting and degraded image quality.

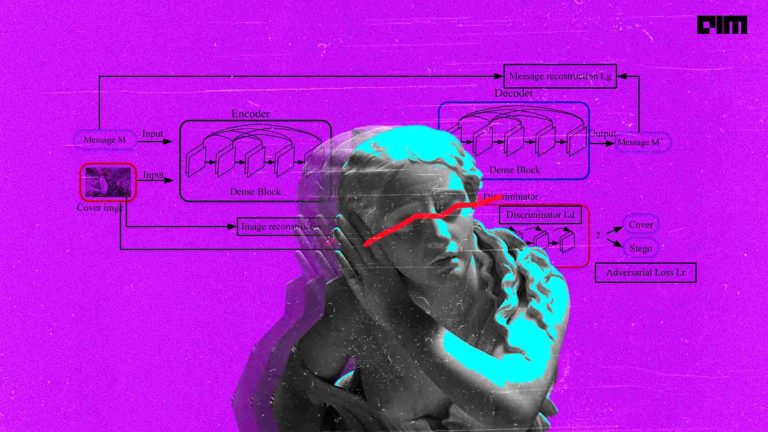

To mitigate these issues, the researchers introduced DiffAugment, which applies the same differentiable augmentation to both real and fake images during both generator and discriminator training.
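Written out, and as we read the paper's formulation, the idea amounts to placing a random differentiable transformation T in front of every call to the discriminator D. With f_D and f_G standing for whichever loss functions the base GAN uses (for the hinge loss, f_D(u) = max(0, 1 + u) and f_G(u) = u; for the non-saturating loss, both are log(1 + e^u)), the two objectives become:

```latex
\begin{aligned}
L_D &= \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[f_D\big(-D(T(x))\big)\big]
     + \mathbb{E}_{z \sim p(z)}\big[f_D\big(D(T(G(z)))\big)\big], \\
L_G &= \mathbb{E}_{z \sim p(z)}\big[f_G\big(-D(T(G(z)))\big)\big].
\end{aligned}
```

Because T also appears in the generator loss, the generator's gradient has to pass through T, which is why T must be differentiable. Applying T to real images only would instead reward the generator for reproducing the augmented distribution rather than the real one.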

Behind The Model

The researchers presented DiffAugment for data-efficient GAN training. The method applies various types of differentiable augmentations to both real and fake samples. Because the augmentations are differentiable, gradients can propagate through them back to the generator. The method also regularises the discriminator without manipulating the target distribution and maintains the balance of training dynamics.
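To make "gradients propagate through the augmentation" more concrete, here is a minimal NumPy sketch of three augmentations of the kind the paper describes: brightness, translation with zero padding, and cutout. The function names (`sample_params`, `apply_augment`, `diff_augment`) are hypothetical, and the parameter ranges (brightness offsets in [-0.5, 0.5], shifts of up to 1/8 of the image size, a cutout square of half the image size) are assumptions modeled on the paper's description, not taken from the released code. NumPy has no automatic differentiation, so the sketch instead checks the property that matters: once the random draws are fixed, each step is an addition, an index shift or a multiplication by a mask, so the whole map is affine in its input and has a well-defined gradient that a framework such as PyTorch or TensorFlow could back-propagate through.

```python
import numpy as np


def sample_params(rng, n, h, w, shift_ratio=0.125, cutout_ratio=0.5):
    """Draw random augmentation parameters for n images of size h x w.

    Ranges are assumptions modeled on the paper's description.
    """
    max_dy, max_dx = int(h * shift_ratio + 0.5), int(w * shift_ratio + 0.5)
    cut_h, cut_w = int(h * cutout_ratio + 0.5), int(w * cutout_ratio + 0.5)
    return {
        "brightness": rng.uniform(-0.5, 0.5, size=(n, 1, 1, 1)),
        "dy": rng.integers(-max_dy, max_dy + 1, size=n),
        "dx": rng.integers(-max_dx, max_dx + 1, size=n),
        # Simplification: the cutout square always lies fully inside the image.
        "cy": rng.integers(0, h - cut_h + 1, size=n),
        "cx": rng.integers(0, w - cut_w + 1, size=n),
        "cut_h": cut_h,
        "cut_w": cut_w,
    }


def apply_augment(x, p):
    """Apply brightness, translation and cutout to x of shape (N, C, H, W)."""
    n, _, h, w = x.shape
    out = x + p["brightness"]  # brightness: add one random constant per image

    # Translation: shift each image by (dy, dx) pixels, filling the gap with zeros.
    shifted = np.zeros_like(out)
    for i in range(n):
        dy, dx = int(p["dy"][i]), int(p["dx"][i])
        src = out[i, :, max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
        shifted[i, :, max(0, dy):h - max(0, -dy), max(0, dx):w - max(0, -dx)] = src

    # Cutout: multiply by a binary mask that zeroes one square per image.
    mask = np.ones((n, 1, h, w), dtype=out.dtype)
    for i in range(n):
        cy, cx = int(p["cy"][i]), int(p["cx"][i])
        mask[i, :, cy:cy + p["cut_h"], cx:cx + p["cut_w"]] = 0
    return shifted * mask


def diff_augment(x, rng):
    """Sample fresh random parameters and augment the batch x."""
    n, _, h, w = x.shape
    return apply_augment(x, sample_params(rng, n, h, w))


rng = np.random.default_rng(0)
real = rng.uniform(-1.0, 1.0, size=(4, 3, 32, 32))
fake = rng.uniform(-1.0, 1.0, size=(4, 3, 32, 32))  # stand-in for G(z)
# Same augmentation policy for both batches, independent random draws per call.
real_aug, fake_aug = diff_augment(real, rng), diff_augment(fake, rng)

# With the draws held fixed, the map is affine in its input:
# T(a*x1 + (1-a)*x2) == a*T(x1) + (1-a)*T(x2).
p = sample_params(rng, 4, 32, 32)
mixed = apply_augment(0.3 * real + 0.7 * fake, p)
print(np.allclose(mixed, 0.3 * apply_augment(real, p) + 0.7 * apply_augment(fake, p)))  # True
```

Separating parameter sampling from application is a design choice made here only so the affine property can be tested; in training, each discriminator call would simply draw new parameters.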

The researchers ran experiments on popular datasets such as ImageNet, CIFAR-10 and CIFAR-100, building on two leading architectures: DeepMind's class-conditional BigGAN and NVIDIA's unconditional StyleGAN2.

They used two common evaluation metrics: Fréchet Inception Distance (FID), which measures how similar two sets of images are, typically real and generated (lower is better), and Inception Score (IS), a popular metric for judging the image outputs of Generative Adversarial Networks (higher is better). In addition, the researchers applied the method to few-shot generation both with and without pre-training.
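Both metrics have compact definitions. FID fits a Gaussian to Inception-v3 features of the real images (mean μ_r, covariance Σ_r) and of the generated images (μ_g, Σ_g), then reports ‖μ_r − μ_g‖² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^(1/2)). IS passes generated images through an Inception classifier and reports exp(E_x[KL(p(y|x) ‖ p(y))]), which is high when each image is classified confidently and the images cover many classes. The NumPy sketch below computes both from precomputed features or class probabilities; the function names are hypothetical, running the Inception network itself is left out, and standard evaluation details (sample counts, averaging IS over splits) are not reproduced.

```python
import numpy as np


def _sqrtm_psd(a):
    """Square root of a symmetric positive semi-definite matrix via eigh."""
    vals, vecs = np.linalg.eigh(a)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T


def fid_from_stats(mu_r, sigma_r, mu_g, sigma_g):
    """Frechet distance between Gaussians N(mu_r, sigma_r) and N(mu_g, sigma_g)."""
    root_r = _sqrtm_psd(sigma_r)
    # Tr((S_r S_g)^(1/2)) equals the sum of square roots of the eigenvalues of
    # the symmetric PSD matrix S_r^(1/2) S_g S_r^(1/2), avoiding a complex sqrtm.
    eig = np.linalg.eigvalsh(root_r @ sigma_g @ root_r)
    tr_covmean = np.sqrt(np.clip(eig, 0.0, None)).sum()
    diff = mu_r - mu_g
    return float(diff @ diff + np.trace(sigma_r) + np.trace(sigma_g) - 2.0 * tr_covmean)


def fid_from_features(feat_r, feat_g):
    """FID from two (num_images, dim) feature arrays."""
    stats = [(f.mean(axis=0), np.atleast_2d(np.cov(f, rowvar=False)))
             for f in (feat_r, feat_g)]
    return fid_from_stats(*stats[0], *stats[1])


def inception_score(probs, eps=1e-12):
    """IS from an (N, K) array whose rows are class probabilities p(y|x)."""
    p_y = probs.mean(axis=0, keepdims=True)  # marginal class distribution p(y)
    kl = (probs * (np.log(probs + eps) - np.log(p_y + eps))).sum(axis=1)
    return float(np.exp(kl.mean()))


# Worked 1-D case: N(0, 1) vs N(1, 4) gives 1 + (1 + 4 - 2 * 2) = 2.
print(fid_from_stats(np.array([0.0]), np.array([[1.0]]),
                     np.array([1.0]), np.array([[4.0]])))
# Confident predictions spread evenly over 10 classes give an IS of about 10.
print(inception_score(np.eye(10)))
```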

Benefits of DiffAugment

According to the researchers, the technique makes it possible to apply differentiable augmentation to the generated samples as well as the real ones, which effectively stabilises training and leads to better convergence. It can significantly improve the data efficiency of GAN training. They stated that the method can generate high-fidelity images from only 100 images without pre-training, performing on par with existing transfer learning algorithms.

Wrapping Up

The DiffAugment method achieved state-of-the-art performance on popular benchmarks and can generate high-quality images from only 100 examples. With DiffAugment, the researchers improved BigGAN to reach a state-of-the-art Fréchet Inception Distance (FID) of 6.80 with an Inception Score (IS) of 100.8 on ImageNet 128×128 without the truncation trick.

They also matched the top performance on CIFAR-10 and CIFAR-100 using only 20% of the training data. According to the researchers, even without any pre-training, DiffAugment achieves performance competitive with existing transfer learning algorithms.

The code is available on GitHub.

The paper, "Differentiable Augmentation for Data-Efficient GAN Training", is available on arXiv.