A black box (flight recorder) is an electronic device placed in an aircraft to facilitate the investigation of accidents and incidents. It is often the last device still working in a critical situation, and because it is installed in a well-protected place it is rarely destroyed. In computer programming the term has a related meaning: a black-box function is a function whose internals cannot be accessed or inspected, so all we can do is observe how its output depends on the inputs we give it. Optimizing such functions is called black-box optimization (BBO). Tuning the hyperparameters of a large neural network is a typical application of black-box optimization.

The basic goal of BBO is to find, as rapidly as possible, a configuration of the black-box function's inputs that improves the performance of the whole algorithm.
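To make the idea concrete, here is a minimal sketch of the simplest BBO strategy, random search. The function `black_box` and its bounds are hypothetical stand-ins for an expensive function; the optimizer only calls it and never looks inside.

```python
import random

def black_box(x: float, y: float) -> float:
    """Stand-in for an expensive function we can only evaluate, not inspect."""
    return (x - 1.0) ** 2 + (y + 2.0) ** 2

def random_search(objective, bounds, n_trials=200, seed=0):
    """Evaluate random configurations and keep the one with the lowest value."""
    rng = random.Random(seed)
    best_config, best_value = None, float("inf")
    for _ in range(n_trials):
        # Sample one configuration uniformly inside the given bounds.
        config = {name: rng.uniform(low, high) for name, (low, high) in bounds.items()}
        value = objective(**config)  # the only interaction with the black box
        if value < best_value:
            best_config, best_value = config, value
    return best_config, best_value

bounds = {"x": (-5.0, 5.0), "y": (-5.0, 5.0)}
best_config, best_value = random_search(black_box, bounds)
print(best_config, best_value)
```

Random search spends its budget blindly. Tools such as the ones discussed in this article try to reach a good configuration with far fewer evaluations.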

Some basic applications of traditional BBO are:

- Automatic hyperparameter tuning.

- Automatic bucket testing.

- Database knob tuning.

- Experimental design.

As generalized BBO has emerged, the range of applications has grown to include areas such as:

- Resource allocation.

- Automatic chemical design.

- Circuit design.

- Processor architecture design.

Generalized BBO requires additional functionality, such as multiple objectives and constraints, which traditional single-objective BBO may not support. Various open-source BBO systems are available. Some of them are:

- BoTorch

- GPflowOpt

- Spearmint

- HyperMapper

- SMAC3

- HyperOpt

OpenBox is another open-source BBO tool, and it supports BBO for a variety of tasks.
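Generalized BBO, as described above, adds multiple objectives and constraints. The sketch below illustrates what that means for the result of an optimization: instead of a single best value, we keep the feasible configurations that no other feasible configuration beats on every objective (the Pareto front). The data and the convention that a constraint value of at most 0 means "satisfied" are assumptions of this sketch, not taken from any particular library.

```python
def is_feasible(constraints):
    """A configuration is feasible when every constraint value is <= 0."""
    return all(c <= 0 for c in constraints)

def dominates(a, b):
    """True if a is no worse than b on every objective and better on one (minimization)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def pareto_front(results):
    """Return the feasible results that no other feasible result dominates."""
    feasible = [r for r in results if is_feasible(r["constraints"])]
    return [
        r for r in feasible
        if not any(dominates(other["objs"], r["objs"]) for other in feasible)
    ]

# Hypothetical evaluations: objs = (error, latency), constraints = (memory_gb - 4,)
results = [
    {"name": "a", "objs": (0.10, 30.0), "constraints": (-1.0,)},
    {"name": "b", "objs": (0.08, 45.0), "constraints": (-0.5,)},
    {"name": "c", "objs": (0.12, 35.0), "constraints": (-2.0,)},  # dominated by "a"
    {"name": "d", "objs": (0.05, 20.0), "constraints": (1.5,)},   # violates the constraint
]
front = pareto_front(results)
print([r["name"] for r in front])  # → ['a', 'b']
```

Configuration "d" has the best objectives but is excluded because it breaks the constraint, which is exactly the situation a single-objective, unconstrained optimizer cannot express.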

OpenBox

OpenBox is a standalone, open-source Python package for efficient generalized black-box optimization. It is designed to meet the following demands in the field of BBO and generalized BBO:

- Ease of use.

- Consistent performance.

- Resource-aware management.

- Scalability.

- High efficiency.

- Data privacy protection.

In addition, it provides visualization functions for tracking and managing BBO tasks, gives users a choice of state-of-the-art (SOTA) optimization algorithms, and makes effective use of transfer learning and multi-fidelity methods to speed up optimization.

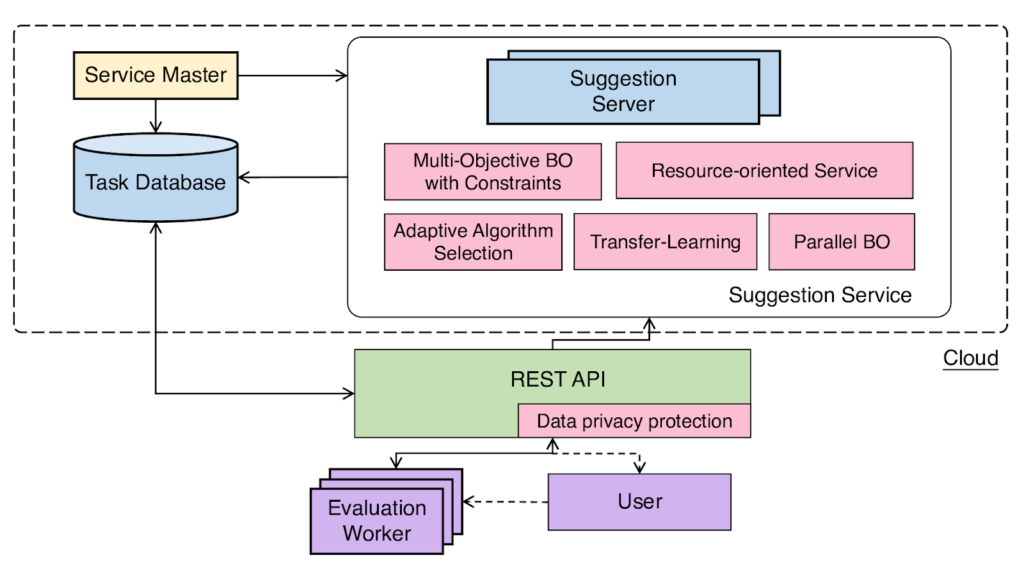

The following image can show the architecture of Open-Box.

OpenBox has five main components:

- Service master.

- Task database.

- Suggestion server.

- REST API.

- Evaluation workers.

The service master manages the nodes and balances the load, the task database stores the history of all tasks, the suggestion server generates configurations, and the evaluation workers fetch these configurations through the REST API.
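The interaction between these components can be sketched as a simple loop. This is an in-process illustration only: the class and method names are hypothetical, the REST calls are replaced by plain method calls, the service master is left out, and the suggestion server samples at random instead of using a real optimization algorithm.

```python
import random

class TaskDatabase:
    """Keeps the history of every evaluated configuration for each task."""
    def __init__(self):
        self.history = {}

    def record(self, task_id, config, loss):
        self.history.setdefault(task_id, []).append((config, loss))

class SuggestionServer:
    """Proposes the next configuration; here it simply samples at random."""
    def __init__(self, database, seed=0):
        self.database = database
        self.rng = random.Random(seed)

    def get_suggestion(self, task_id):
        return {"learning_rate": self.rng.uniform(0.001, 0.3)}

    def update_observation(self, task_id, config, loss):
        self.database.record(task_id, config, loss)

def evaluation_worker(config):
    """Pretend training run: the loss is lowest near learning_rate = 0.1."""
    return abs(config["learning_rate"] - 0.1)

database = TaskDatabase()
server = SuggestionServer(database)
for _ in range(20):
    config = server.get_suggestion("demo_task")            # worker asks for a configuration
    loss = evaluation_worker(config)                        # worker evaluates it
    server.update_observation("demo_task", config, loss)   # result is reported and stored

best_config, best_loss = min(database.history["demo_task"], key=lambda item: item[1])
print(best_config, best_loss)
```

Separating suggestion from evaluation in this way is what allows many workers to evaluate configurations in parallel while a single service keeps the full history.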

Next in the article, we are going to tune a LightGBM model using OpenBox.

Installing the OpenBox

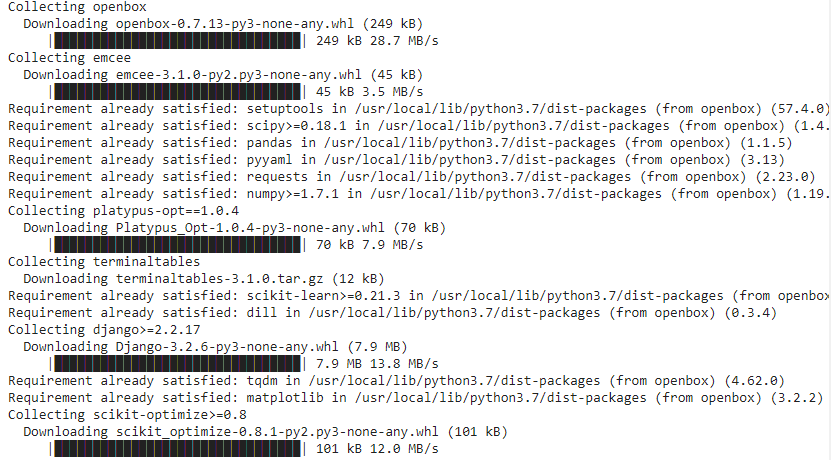

!pip install openboxOutput:

After completion of installation, we can start using the system.

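Importing some basic libraries and OpenBox functions:

    import matplotlib.pyplot as plt
    from lightgbm import LGBMClassifier
    from sklearn.datasets import load_digits
    from sklearn.metrics import balanced_accuracy_score
    from sklearn.model_selection import train_test_split
    from openbox import get_config_space, get_objective_function
    from openbox import Optimizer
    from openbox import space as sp  # needed for the custom search space and objective

Preparing the data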

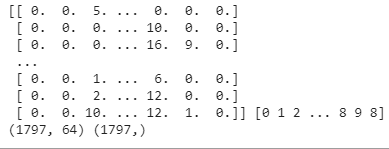

X, y = load_digits(return_X_y=True)

print(X,y)

print(X.shape,y.shape)Output:

Here we have loaded a data set provided by scikit-learn. The data has 10 classes, and each feature is an integer between 0 and 16. To know more about the data set, readers can visit this link.

Splitting the data into training and validation sets:

    x_train, x_val, y_train, y_val = train_test_split(X, y, test_size=0.2, stratify=y, random_state=1)

Defining a configuration space using the get_config_space function provided by the OpenBox package:

    config_space = get_config_space('lightgbm')

Or we can define the configuration space ourselves using the following code:

    def get_configspace():
        space = sp.Space()
        n_estimators = sp.Int("n_estimators", 100, 1000, default_value=500, q=50)
        num_leaves = sp.Int("num_leaves", 31, 2047, default_value=128)
        max_depth = sp.Constant('max_depth', 15)
        learning_rate = sp.Real("learning_rate", 1e-3, 0.3, default_value=0.1, log=True)
        min_child_samples = sp.Int("min_child_samples", 5, 30, default_value=20)
        subsample = sp.Real("subsample", 0.7, 1, default_value=1, q=0.1)
        colsample_bytree = sp.Real("colsample_bytree", 0.7, 1, default_value=1, q=0.1)
        space.add_variables([n_estimators, num_leaves, max_depth, learning_rate,
                             min_child_samples, subsample, colsample_bytree])
        return space

    # To use this space instead of the built-in one: config_space = get_configspace()

Defining the objective function using get_objective_function, provided by the OpenBox package:

    objective_function = get_objective_function('lightgbm', x_train, x_val, y_train, y_val)

Or, if you want to customize the objective, you can define it yourself using the following code:

    def objective_function(config: sp.Configuration):
        params = config.get_dictionary()
        params['n_jobs'] = 2
        params['random_state'] = 47
        model = LGBMClassifier(**params)
        model.fit(x_train, y_train)
        # Score on the validation split created above (x_val, y_val).
        y_pred = model.predict(x_val)
        loss = 1 - balanced_accuracy_score(y_val, y_pred)  # minimize
        return dict(objs=(loss, ))

The input of the objective function is a Configuration instance sampled from the space.
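To illustrate what "sampled from the space" means, here is a small standard-library sketch that mimics a few of the hyperparameters above. It is not OpenBox code: the helper names are hypothetical, a plain dict stands in for the Configuration instance, and the interpretation of `q` as a step size counted from the lower bound is an assumption of this sketch.

```python
import math
import random

def sample_int(rng, low, high, q=1):
    """Sample an integer in [low, high] in steps of size q starting at low."""
    steps = (high - low) // q
    return low + q * rng.randint(0, steps)

def sample_real_log(rng, low, high):
    """Sample a float between low and high uniformly on a logarithmic scale."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))

def sample_configuration(rng):
    """Return one configuration as a plain dict (a stand-in for a Configuration)."""
    return {
        "n_estimators": sample_int(rng, 100, 1000, q=50),
        "learning_rate": sample_real_log(rng, 1e-3, 0.3),
        "max_depth": 15,  # a constant has the same value in every sample
    }

def toy_objective(config):
    """Hypothetical loss; a real objective would train and score a model."""
    loss = abs(math.log10(config["learning_rate"]) + 1.0) + config["n_estimators"] / 1e4
    return dict(objs=(loss,))  # same return shape as the objective in this article

rng = random.Random(0)
config = sample_configuration(rng)
result = toy_objective(config)
print(config, result)
```

Sampling the learning rate on a logarithmic scale gives values such as 0.001, 0.01 and 0.1 a similar chance of being tried, which is usually what we want for a parameter that spans several orders of magnitude.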

After defining these functions, we can create an Optimizer instance to run the optimization process.

    opt = Optimizer(
        objective_function,
        config_space,
        max_runs=100,
        surrogate_type='prf',
        time_limit_per_trial=180,
        task_id='tuning_lightgbm',
    )

This instance will run 100 rounds, that is, it will evaluate the objective function 100 times. It uses a probabilistic random forest ('prf') as the surrogate model and limits each evaluation to 180 seconds.
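The role of a surrogate model in such a loop can be sketched in a few lines. This is a deliberately crude illustration, not how OpenBox works internally: a nearest-neighbour predictor is swapped in for the random forest surrogate, there is no acquisition function or uncertainty estimate, and the one-dimensional `objective` is hypothetical.

```python
import random

def objective(x):
    """Hypothetical expensive loss with its minimum at x = 0.3."""
    return (x - 0.3) ** 2

def surrogate_predict(x, history):
    """Predict the loss at x as the loss of the nearest point evaluated so far."""
    _, nearest_loss = min(history, key=lambda item: abs(item[0] - x))
    return nearest_loss

def optimize(max_runs=100, n_initial=5, n_candidates=50, seed=0):
    """Spend max_runs real evaluations, guided by the cheap surrogate."""
    rng = random.Random(seed)
    history = []
    for run in range(max_runs):
        if run < n_initial:
            x = rng.random()  # initial design: purely random points
        else:
            # Score many random candidates with the surrogate, evaluate only the best.
            candidates = [rng.random() for _ in range(n_candidates)]
            x = min(candidates, key=lambda c: surrogate_predict(c, history))
        history.append((x, objective(x)))  # one real evaluation per run
    return history

history = optimize()
best_x, best_loss = min(history, key=lambda item: item[1])
print(best_x, best_loss)
```

The key point is that the surrogate is cheap to query, so many candidates can be screened for every single call to the expensive objective.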

Running the optimizing function:

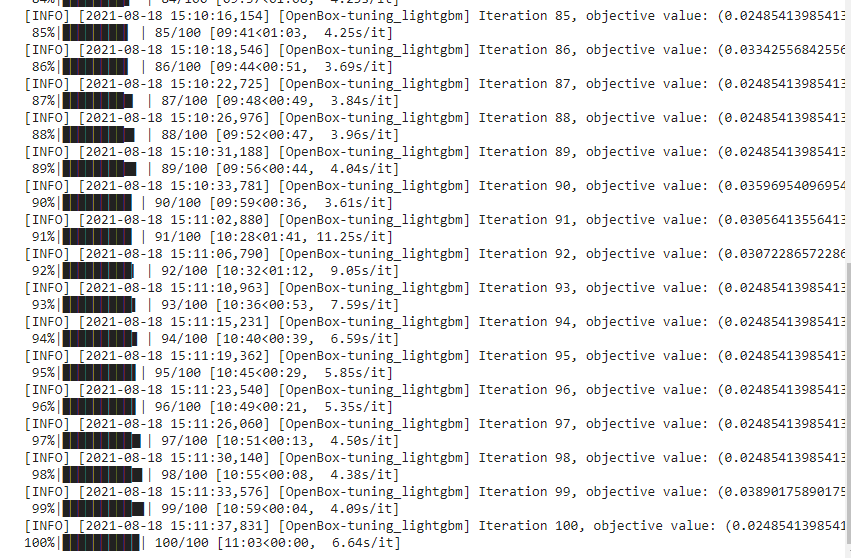

history = opt.run() Output:

Here the result of the run is stored in history, which we can use to inspect and visualize the optimization.

After the execution of the opt.run function, we can check for the history and also visualize the optimization:

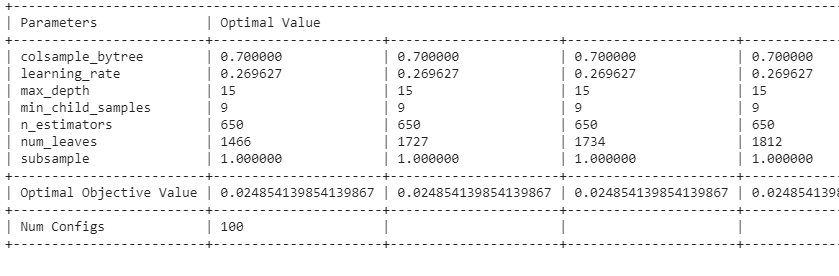

print(history)

Output:

Here we can see the tuned hyperparameter values, such as the learning rate, together with the optimal objective value, which we can compare to select the best configuration.

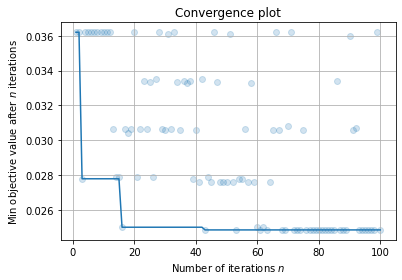

We can also visualize the minimum number of optimization after n iteration.

history.plot_convergence()

plt.show()Output:
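A convergence curve of this kind is just the running minimum of the objective values. The sketch below computes it by hand for a short, made-up list of losses; the function name `best_so_far` is hypothetical and is not part of OpenBox.

```python
from itertools import accumulate

def best_so_far(losses):
    """Running minimum: the lowest loss seen up to and including each trial."""
    return list(accumulate(losses, min))

losses = [0.30, 0.25, 0.28, 0.19, 0.22, 0.19, 0.15]
curve = best_so_far(losses)
print(curve)  # → [0.3, 0.25, 0.25, 0.19, 0.19, 0.19, 0.15]
```

The curve never rises, and flat stretches show runs of trials that did not improve on the best configuration found so far.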

Here we have seen how to use OpenBox to tune a model and find good hyperparameter values. In the results, we can see how the tuned LightGBM model performs better. BBO can also be deployed conveniently as a service through the REST API; to learn more about deployment, readers can visit this link.

Finally, a few personal impressions of OpenBox. I found it to be one of the easiest ways to implement BBO, and in my experience the results compare favorably with other BBO tools. It is a well-developed library that provides features to perform BBO easily and efficiently. I encourage you to explore more with this open-source package.

References:

- OpenBox documentation.

- Google Colab notebook for the above code.