NVIDIA has been surprising the world at a breakneck pace. “It’s certainly a good time to be NVIDIA,” declared xAI chief Elon Musk in a recent interview with Peter Diamandis at the 2024 Abundance360 Summit.

Musk said that AI compute is growing exponentially, increasing by a factor of 10 every six months, and that most data centres are transitioning from conventional to AI compute. ‘AI factories’ are a step in that direction, and NVIDIA is obsessed with the idea.
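To see how quickly Musk’s figure compounds, here is a minimal Python sketch. It assumes smooth exponential growth at exactly the quoted rate of 10x every six months; the function name `compute_growth_factor` is purely illustrative.

```python
def compute_growth_factor(months: float, factor: float = 10.0, period_months: float = 6.0) -> float:
    """Return the cumulative growth multiple after `months`.

    Assumes (for illustration only) that compute multiplies by `factor`
    every `period_months`, compounding smoothly in between.
    """
    return factor ** (months / period_months)


if __name__ == "__main__":
    # Compare the growth multiple at six months, one year and two years.
    for months in (6, 12, 24):
        print(f"{months} months: {compute_growth_factor(months):,.0f}x")
```

Under that assumption, the quoted rate works out to 10x after six months, 100x after a year and 10,000x after two years.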

At the recent GTC 2024, NVIDIA CEO Jensen Huang drew parallels between today’s data centres and the factories of the industrial revolution. He explained that data centres now take in data and electricity as raw materials and produce tokens, much as plants during the industrial revolution took in raw energy and turned out electricity.

This marks a significant shift in perspective: data centres are no longer seen as cost-guzzling infrastructure but as revenue generators.

In October last year, the most valuable chip company joined hands with Foxconn to build ‘AI factories’ that would use NVIDIA chips to power a “wide range” of autonomous vehicles, robotics platforms, and LLM training.

This time, Huang stressed that AI factories are a necessity: “Anyone developing chatbots and generative AI will require an AI factory.” He also drew attention to NVIDIA’s collaboration with Dell, a company he described as “skilled in building end-to-end systems for enterprises at scale”.

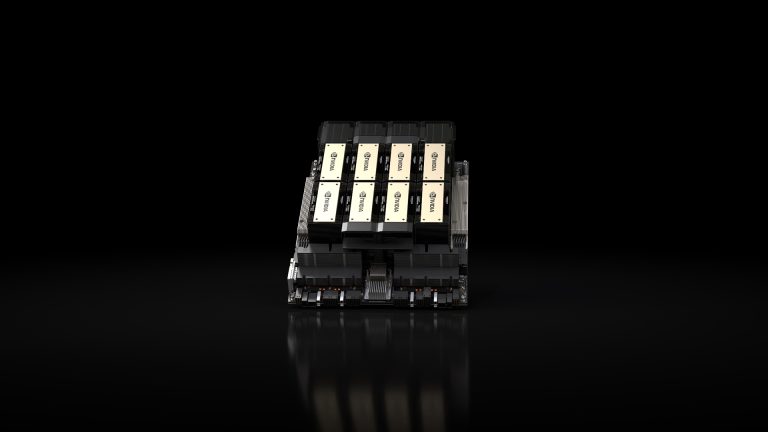

Additionally, major cloud providers and data centre operators, including AWS, Microsoft Azure, Google Cloud, and Oracle Cloud, have announced plans to offer NVIDIA’s new Blackwell GPUs and systems in their data centres. Hardware partners like Cisco, Dell, HPE, Lenovo, and Supermicro will deliver Blackwell-optimised servers and systems.

AWS and Microsoft Azure plan to offer Blackwell-based instances, and AWS is co-developing the Project Ceiba AI supercomputer with NVIDIA. Google Cloud will incorporate NVIDIA’s GB200 NVL72 systems, while Oracle Cloud Infrastructure (OCI) will adopt the GB200 Grace Blackwell Superchip and host an NVL72 cluster of 72 Blackwell GPUs.

Other cloud providers, such as Lambda, CoreWeave, IBM Cloud, and NexGen Cloud, also intend to offer Blackwell hardware.

Data centre operators, including YTL Power in Malaysia and Singtel in Singapore, are preparing to host Blackwell-powered systems.

NVIDIA has also drawn interest from AI leaders such as Meta, Microsoft, OpenAI, Oracle, and Tesla, which are expected to adopt the Blackwell platform, signalling widespread adoption across hyperscale public clouds, specialised GPU cloud providers, data centre operators, and sovereign clouds.

Challenges Galore

However, the AI revolution, powered by Blackwell, is not without challenges. As computational demands soar, the data centre industry faces mounting pressure to enhance energy efficiency and meet renewable energy goals.

Pedro Domingos, a professor of computer science at the University of Washington, also noted in a post on X that since generative AI models became popular, data centres seem to have become single-purpose rather than general-purpose.

If this trend continues, each use case or function may need its own data centre infrastructure, which could prove challenging. Experts have therefore called for a move to low-cost data centres powered by renewable energy, which would ease that burden.

“If you’re not building data centres adjacent to low-cost renewable power, you’re not going to have a fruitful conversation with the hyperscalers and cloud players,” said Marc Ganzi, CEO of DigitalBridge.

Musk said that last year, the primary constraint was AI chips. “This year, however, one of the major constraints, if not the biggest, is voltage step-down transformers,” he added. The challenge, he explained, lies in stepping the voltage down from a utility’s 300 kilovolts to less than one volt for computers.

Musk said that this massive voltage reduction is critical. On a lighter note, he quipped that we almost need transformers for the transformers: voltage transformers for our AI’s neural-net transformers. “That’s the main issue we’re facing this year,” he added.
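The scale of the step-down Musk describes can be checked with simple arithmetic. The Python sketch below assumes a hypothetical conversion chain: only the 300 kV utility feed and the sub-one-volt chip supply come from Musk’s remarks, while the intermediate levels (34.5 kV, 480 V, 12 V) and the 0.8 V end point are illustrative assumptions, not figures from any real facility. It shows that the per-stage ratios multiply out to the overall ratio.

```python
from math import prod

# Hypothetical conversion chain in volts. Only the 300 kV start and the
# sub-1 V end come from Musk's remarks; the intermediate levels and the
# 0.8 V end point are illustrative assumptions.
STAGES_V = [300_000.0, 34_500.0, 480.0, 12.0, 0.8]


def stage_ratios(voltages: list[float]) -> list[float]:
    """Step-down ratio of each stage (input voltage / output voltage)."""
    return [v_in / v_out for v_in, v_out in zip(voltages, voltages[1:])]


def overall_ratio(voltages: list[float]) -> float:
    """End-to-end step-down ratio from the first voltage to the last."""
    return voltages[0] / voltages[-1]


if __name__ == "__main__":
    ratios = stage_ratios(STAGES_V)
    # Print each stage's input, output and step-down ratio.
    for (v_in, v_out), r in zip(zip(STAGES_V, STAGES_V[1:]), ratios):
        print(f"{v_in:>9,.1f} V -> {v_out:>7,.1f} V : {r:,.1f}:1")
    print(f"Overall: {overall_ratio(STAGES_V):,.0f}:1")
    print(f"Product of stage ratios: {prod(ratios):,.0f}:1")
```

With these assumed values the end-to-end reduction is 375,000:1; even stopping at exactly one volt, the ratio would be 300,000:1.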

What’s Next?

Looking ahead to next year and beyond, Musk said the main constraint is likely to be the availability of electrical power. With AI’s substantial power demands and the shift to sustainable energy, especially electric vehicles, he noted, the need for electrical power is becoming increasingly significant.

OpenAI co-founder and chief scientist Ilya Sutskever has repeatedly envisioned the first AGIs as “very large data centres packed with specialised neural network processors working in parallel”.

Given recent developments in the field, Sutskever’s insights are particularly noteworthy.

A short while ago, Microsoft and OpenAI were reported to be planning Stargate, an AI supercomputer data centre project that could cost over $115 billion and is slated to launch in 2028. Additionally, AWS plans to invest over $150 billion in data centres over the next 15 years to accommodate increasing demand.

While all these players race ahead, NVIDIA seems better positioned than the rest to develop AGI chips and, eventually, AGI factories, given its recent strides in defying Moore’s Law with its Blackwell infrastructure.