Despite tremendous advances, modern image inpainting systems frequently struggle with large missing regions, complex geometric structures, and high-resolution images. Recently, Roman Suvorov et al. proposed a state-of-the-art technique called LaMa, which accepts a mask of any size over an object in an image and returns a restored image with the masked object removed. In this post we will discuss the approach theoretically and then see how it works in practice. The major points to be discussed in this article are as follows.

Table Of Contents

- About Image Inpainting

- How does LaMa Address the Issue?

- Implementing LaMa

Let’s start the discussion by understanding what image inpainting is.

About Image Inpainting

The process of reconstructing missing areas of an image so that viewers cannot tell that these regions have been restored is known as image inpainting. It is frequently used to remove unwanted objects from images or to restore damaged areas of old photographs. The images below show some examples of image inpainting.

Image inpainting is a centuries-old technique that once required human painters working by hand. More recently, researchers have proposed various automatic inpainting approaches. In addition to the image, most of these algorithms take as input a mask that marks the regions to inpaint. Prior work has compared the outcomes of nine automatic inpainting systems with those of skilled artists.

Inpainting is also a conservation technique that involves filling in damaged, deteriorated, or missing areas of an artwork to present a complete image. It applies to both physical and digital media, including oil and acrylic paintings, chemical photographic prints, sculptures, and digital photos and video.

Solving the image inpainting problem, that is, realistically filling in missing regions, requires both “understanding” the large-scale structure of natural images and performing image synthesis. The topic was studied before the advent of deep learning, and progress has accelerated in recent years thanks to deep and wide neural networks and adversarial learning.

Inpainting systems are often trained on a large, automatically generated dataset built by randomly masking real images. Complicated two-stage models with intermediate predictions, such as smoothed images, edges, and segmentation maps, are frequently used.
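To make the idea of building a dataset by randomly masking real images concrete, here is a minimal sketch in NumPy. It is not taken from the LaMa code base: the function names, the box-shaped masks, and the size ranges are assumptions chosen for illustration only.

```python
import numpy as np

def random_box_mask(height, width, rng, max_boxes=3):
    """Return a float mask of shape (height, width): 1 = missing pixel, 0 = known pixel."""
    mask = np.zeros((height, width), dtype=np.float32)
    for _ in range(rng.integers(1, max_boxes + 1)):
        # Box sizes are illustrative assumptions, not values from the paper.
        box_h = rng.integers(height // 8, height // 2)
        box_w = rng.integers(width // 8, width // 2)
        top = rng.integers(0, height - box_h + 1)
        left = rng.integers(0, width - box_w + 1)
        mask[top:top + box_h, left:left + box_w] = 1.0
    return mask

def make_training_pair(image, rng):
    """Hide random regions of a real image; the untouched image is the training target."""
    mask = random_box_mask(image.shape[0], image.shape[1], rng)
    masked_image = image * (1.0 - mask[..., None])  # zero out the hidden pixels
    return masked_image, mask, image

rng = np.random.default_rng(0)
image = rng.random((64, 64, 3), dtype=np.float32)  # random stand-in for a real photo
masked_image, mask, target = make_training_pair(image, rng)
print(f'{mask.mean():.0%} of the pixels are hidden')
```

Because the masks are generated on the fly, every real image can yield many different training examples without any manual annotation.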

How does LaMa Address the Issue?

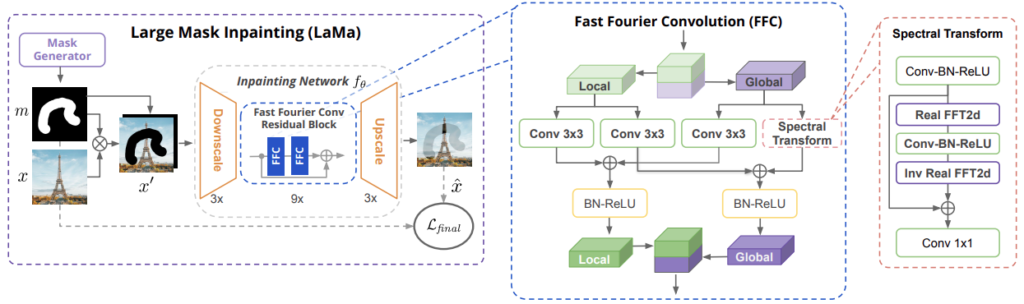

LaMa's inpainting network is built on the recently proposed Fast Fourier Convolutions (FFCs). Even in the early layers of the network, FFCs provide a receptive field that spans the full image. According to the authors, this property improves both perceptual quality and parameter efficiency. Interestingly, the inductive bias of FFCs also allows the network to generalize to high resolutions that were never seen during training. This has major practical implications, as it reduces the amount of training data and computation required.
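The image-wide receptive field can be illustrated with a toy experiment. The sketch below is a strong simplification and not the FFC layer itself: it replaces the learned operations that an FFC applies in the frequency domain with a fixed per-frequency scaling (the `weights` array is a random stand-in), and compares it with an ordinary 3x3 filter. Perturbing a single input pixel changes essentially every output of the Fourier branch, but only a small neighbourhood of the local filter's output.

```python
import numpy as np

def spectral_transform(feature_map, weights):
    """Toy Fourier unit: real 2D FFT -> per-frequency scaling -> inverse FFT."""
    spectrum = np.fft.rfft2(feature_map)
    return np.fft.irfft2(spectrum * weights, s=feature_map.shape)

def local_filter3x3(feature_map, kernel):
    """Plain 3x3 filter with zero padding, for comparison."""
    height, width = feature_map.shape
    padded = np.pad(feature_map, 1)
    out = np.zeros_like(feature_map)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + height, dx:dx + width]
    return out

rng = np.random.default_rng(0)
x = rng.standard_normal((32, 32))
# Random stand-ins for learned parameters (assumption for illustration).
weights = rng.standard_normal((32, 17)) + 1j * rng.standard_normal((32, 17))
kernel = rng.standard_normal((3, 3))

x_poked = x.copy()
x_poked[0, 0] += 1.0  # perturb a single input pixel

changed_fourier = np.abs(spectral_transform(x_poked, weights) - spectral_transform(x, weights)) > 1e-9
changed_local = np.abs(local_filter3x3(x_poked, kernel) - local_filter3x3(x, kernel)) > 1e-9
print('outputs affected (Fourier branch):', int(changed_fourier.sum()), 'of', x.size)
print('outputs affected (3x3 filter):   ', int(changed_local.sum()), 'of', x.size)
```

A stack of 3x3 filters needs many layers before one pixel can influence a distant one, whereas a single frequency-domain operation already mixes information from the whole image.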

It also employs a perceptual loss based on a semantic segmentation network with a large receptive field. This follows from the finding that an insufficient receptive field hurts both the inpainting network and the perceptual loss. The loss promotes consistency of global structures and shapes.
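As a rough sketch of how a perceptual loss is computed, the snippet below compares two images in the feature space of a network rather than pixel by pixel. The `toy_feature_extractor` is a hypothetical stand-in (simple block averages at growing scales) used only so the example runs without a pretrained model; in LaMa the features come from a pretrained segmentation network with a large receptive field.

```python
import numpy as np

def perceptual_loss(predicted, target, feature_extractor):
    """Mean squared distance between feature maps, averaged within and then across layers."""
    layer_losses = [
        np.mean((feat_p - feat_t) ** 2)
        for feat_p, feat_t in zip(feature_extractor(predicted), feature_extractor(target))
    ]
    return float(np.mean(layer_losses))

def toy_feature_extractor(image):
    """Hypothetical stand-in for a pretrained network: block averages at growing scales."""
    features = []
    for block in (2, 4, 8):
        h, w = image.shape[0] // block, image.shape[1] // block
        pooled = image[:h * block, :w * block].reshape(h, block, w, block, -1).mean(axis=(1, 3))
        features.append(pooled)
    return features

rng = np.random.default_rng(0)
target = rng.random((64, 64, 3))
prediction = target + 0.1 * rng.standard_normal(target.shape)

print('loss(target, target)     =', perceptual_loss(target, target, toy_feature_extractor))
print('loss(prediction, target) =', perceptual_loss(prediction, target, toy_feature_extractor))
```

The wider the area each feature summarises, the more the loss reflects global structure instead of local texture, which is the motivation for using a network with a large receptive field.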

Finally, LaMa uses an aggressive training mask generation strategy to harness the high receptive fields of the first two components. The procedure generates wide and large masks, forcing the network to fully exploit the high receptive field of both the model and the loss function.

All of this leads to large mask inpainting (LaMa), a novel single-stage image inpainting technique. Its core components are (i) the high receptive field architecture, (ii) the high receptive field loss function, and (iii) the aggressive training mask generation algorithm. The authors rigorously compare LaMa to current baselines and assess the impact of each proposed component.

The image above shows the scheme for large-mask inpainting (LaMa). As can be seen, LaMa is based on a feed-forward ResNet-like inpainting network that employs the following techniques: the recently proposed fast Fourier convolution (FFC), a multi-component loss that combines an adversarial loss with a high receptive field perceptual loss, and a training-time procedure for generating large masks.
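One way to produce wide masks is to draw a random chain of very thick strokes. The sketch below does this with NumPy only; it is an illustration of the idea rather than the authors' generator, and the number of segments and the stroke widths are assumed values.

```python
import numpy as np

def draw_thick_segment(mask, start, end, half_width):
    """Mark every pixel within half_width of the segment start->end as missing."""
    ys, xs = np.mgrid[0:mask.shape[0], 0:mask.shape[1]]
    pixels = np.stack([ys, xs], axis=-1).astype(np.float64)
    a, b = np.asarray(start, dtype=float), np.asarray(end, dtype=float)
    ab = b - a
    # Position of the closest point on the segment, as a fraction in [0, 1].
    t = np.clip(((pixels - a) @ ab) / max(ab @ ab, 1e-12), 0.0, 1.0)
    nearest = a + t[..., None] * ab
    distance = np.linalg.norm(pixels - nearest, axis=-1)
    mask[distance <= half_width] = 1.0

def wide_stroke_mask(height, width, rng, num_segments=4,
                     min_width_frac=0.10, max_width_frac=0.25):
    """Random chain of thick strokes; widths are fractions of the shorter image side."""
    mask = np.zeros((height, width), dtype=np.float32)
    point = rng.uniform([0, 0], [height, width])
    for _ in range(num_segments):
        next_point = rng.uniform([0, 0], [height, width])
        half_width = 0.5 * rng.uniform(min_width_frac, max_width_frac) * min(height, width)
        draw_thick_segment(mask, point, next_point, half_width)
        point = next_point
    return mask

rng = np.random.default_rng(0)
mask = wide_stroke_mask(64, 64, rng)
print(f'{mask.mean():.0%} of the image is masked')
```

Thin strokes can be filled in from nearby pixels alone; strokes this wide cannot, so the network is pushed to use context from far away.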

Implementing LaMa

In this section, we will take a look at the official implementation of LaMa and see how effectively it removes an object marked by the user.

- Let’s set up the environment by installing and importing all the dependencies.

# Cloning the repo
!git clone https://github.com/saic-mdal/lama.git

# Installing the dependencies
!pip install -r lama/requirements.txt --quiet
!pip install wget --quiet

# Change the directory
%cd /content/lama

# Download the model
!curl -L $(yadisk-direct https://disk.yandex.ru/d/ouP6l8VJ0HpMZg) -o big-lama.zip
!unzip big-lama.zip

# Importing dependencies
import base64, os
from IPython.display import HTML, Image
from google.colab.output import eval_js
from base64 import b64decode
import matplotlib.pyplot as plt
import numpy as np
import wget
from shutil import copyfile
import shutil

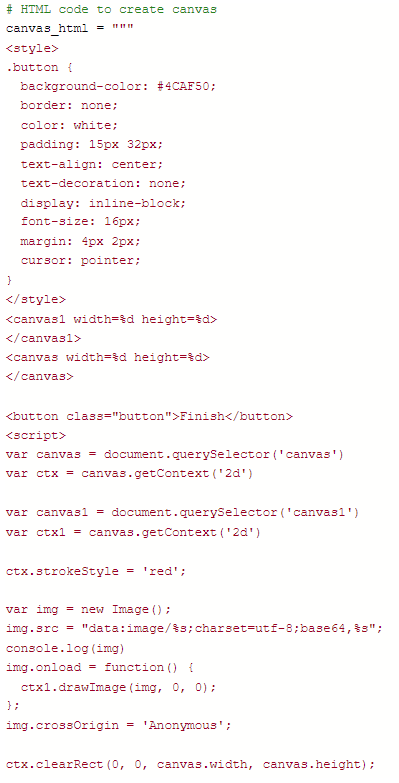

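- To let users mask the desired object in the given image, we need some HTML code. In the official Colab notebook, this HTML and JavaScript canvas code is wrapped in the draw helper that the next step calls; it is not reproduced here.

- Now we will provide the image containing the object to remove. Set fname=None to upload a file from your machine; otherwise fname is treated as a URL and downloaded with wget. The drawing canvas then lets us paint the mask over the object.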

fname = None  # or set to an image URL to download instead of uploading

if fname is None:
    # No URL given: upload an image from the local machine
    from google.colab import files
    files = files.upload()
    fname = list(files.keys())[0]
else:
    # Otherwise download the image from the given URL
    fname = wget.download(fname)

shutil.rmtree('./data_for_prediction', ignore_errors=True)
! mkdir data_for_prediction

copyfile(fname, f'./data_for_prediction/{fname}')
os.remove(fname)
fname = f'./data_for_prediction/{fname}'

image64 = base64.b64encode(open(fname, 'rb').read())
image64 = image64.decode('utf-8')

print(f'Will use {fname} for inpainting')
img = np.array(plt.imread(f'{fname}')[:,:,:3])

draw(image64, filename=f"./{fname.split('.')[1]}_mask.png", w=img.shape[1], h=img.shape[0], line_width=0.04*img.shape[1])
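The mask file name above is derived with `fname.split('.')[1]`, which works only when the path contains exactly two dots: the leading `./` and the one before the extension. A file name with an extra dot would produce a mask name that no longer matches the image. As a hedged alternative, the sketch below derives the same name with `pathlib`; the helper name `mask_path_for` and the example file names are hypothetical and not part of the official notebook.

```python
from pathlib import Path

def mask_path_for(image_path):
    """Return '<stem>_mask.png' next to the image, e.g. photo.jpg -> photo_mask.png."""
    image_path = Path(image_path)
    return image_path.with_name(f'{image_path.stem}_mask.png')

# Hypothetical file names, used only to show the behaviour.
print(mask_path_for('./data_for_prediction/deer.jpg'))
print(mask_path_for('./data_for_prediction/deer.v2.jpg'))
```

Only the final extension is stripped, so dots elsewhere in the file name are preserved in the mask name.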

Now we will mask the deer in the image, just as we would paint over it in a drawing application.

Below we can see the mask drawn over the original image.

- Now we will do the inference.

print('Run inpainting')
if '.jpeg' in fname:
    !PYTHONPATH=. TORCH_HOME=$(pwd) python3 bin/predict.py model.path=$(pwd)/big-lama indir=$(pwd)/data_for_prediction outdir=/content/output dataset.img_suffix=.jpeg > /dev/null
elif '.jpg' in fname:
    !PYTHONPATH=. TORCH_HOME=$(pwd) python3 bin/predict.py model.path=$(pwd)/big-lama indir=$(pwd)/data_for_prediction outdir=/content/output dataset.img_suffix=.jpg > /dev/null
elif '.png' in fname:
    !PYTHONPATH=. TORCH_HOME=$(pwd) python3 bin/predict.py model.path=$(pwd)/big-lama indir=$(pwd)/data_for_prediction outdir=/content/output dataset.img_suffix=.png > /dev/null
else:
    print(f'Error: unknown suffix .{fname.split(".")[-1]} use [.png, .jpeg, .jpg]')

plt.rcParams['figure.dpi'] = 200
plt.imshow(plt.imread(f"/content/output/{fname.split('.')[1].split('/')[2]}_mask.png"))
_=plt.axis('off')
_=plt.title('inpainting result')
plt.show()
fname = None
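The three branches above differ only in the value passed to `dataset.img_suffix`. As a sketch of how the dispatch could be condensed, the helper below builds the same command string from the file extension. The function name `build_predict_command` and the example file name are assumptions for illustration; the command-line arguments are exactly those used above.

```python
import os

SUPPORTED_SUFFIXES = ('.jpeg', '.jpg', '.png')

def build_predict_command(fname, outdir='/content/output'):
    """Build the predict.py command line for one image, based on its extension."""
    suffix = os.path.splitext(fname)[1]
    if suffix not in SUPPORTED_SUFFIXES:
        raise ValueError(f'unknown suffix {suffix!r}, use one of {SUPPORTED_SUFFIXES}')
    return (
        'PYTHONPATH=. TORCH_HOME=$(pwd) python3 bin/predict.py '
        'model.path=$(pwd)/big-lama indir=$(pwd)/data_for_prediction '
        f'outdir={outdir} dataset.img_suffix={suffix}'
    )

# Hypothetical file name, used only to show the resulting command.
command = build_predict_command('./data_for_prediction/deer.jpg')
print(command)
```

In a notebook cell, the resulting string could then be executed with `!{command}`. Checking the real extension also avoids false matches of the substring test, for example a file whose name merely contains `.png` somewhere before a different extension.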

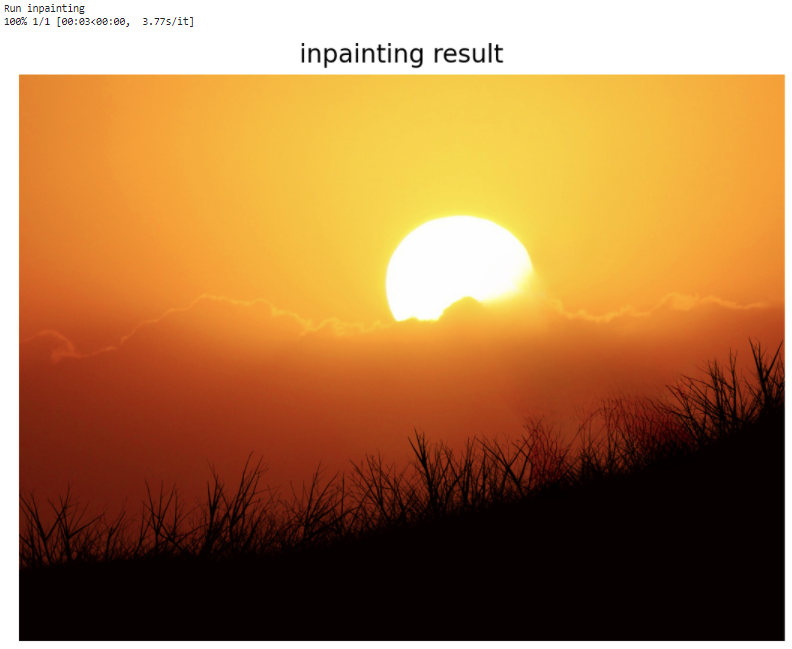

And here is the inpainted image:

Really mind-blowing result.

Final Words

In this post, we discussed a simple, single-stage approach to inpainting images with large masked regions. We have seen how, with the right architecture, loss function, and mask generation method, such an approach can be very competitive and push the state of the art in image inpainting. In particular, the approach produces excellent results on repetitive structures. I encourage you to experiment further with your own photographs, or to look up additional details in the paper.