SQL and Pandas are the two different tools that have a great role to play when handling the data. These are not only the basic tools for any data-related tasks, but also they are very easy to use and implement even by a novice user. Where SQL works in a database environment so need an understanding of the databases as well, Pandas can be used very quickly in simple environments like notebooks or browser-based editors where one can start with a simple CSV file. In this article, we will go through a list of operations that can be performed on data and will compare how the same task can be done using SQL and using Pandas. In the end, we will be in the position to conclude the ease of using the Pandas. The major points to be covered in this article are listed below.

Table of contents

- About SQL and Pandas

- Where to use SQL and Pandas?

- Comparing the data operations

- Pandas and SQL together

Let’s begin by having a quick look at both SQL and Pandas.

About SQL and Pandas

Let’s have a brief introduction to both SQL and Pandas.

SQL: SQL is a programming language, more accurately, it is a Query language that can be used for performing database operations. SQL is the de-facto language used by most of the RDBMSs. SQL is a programming language to store, query, update and modify data.

Pandas: Deep down, Pandas is a library in python language that helps us in many operations using data such as manipulation, conversion, etc. the data type we use with Pandas is mostly tabular. This can also be used for data warehousing and using the tools of Pandas we can inspect data while moving it in or out.

Using the above points we find that Pandas is a library or toolkit in python language where SQL is itself a language and more appropriately it is a Query language.

Where to use SQL and Pandas?

SQL and Pandas can be used in a variety of applications. Let’s have a look at their key usage.

SQL: We can consider SQL as the first place for data handling where we use it to manage several types of relational databases. Using this language we can query a data warehouse or distributed database. Although we can also perform data analysis using SQL and also it is a high-performing language with high dimensional data.

Pandas: We can consider Pandas as the last place for the data to be that enables us to transform the data. This library is a better place for complicated transformation and EDA of data. Most of the time we use Pandas to handle data in data frame format which is a tidy form of data. We use Pandas for the analysis of data into python.

By seeing the above points we can say that using SQL we can manage the movement of data and in a pipeline, we require SQL to ask for data. When things come in the analysis of data we can analyze the data using SQL and import required features out of it and then using Pandas we can analyze data deeper and process it for machine learning modelling. Since there are many tools available in python for data modelling, we need to use the Pandas library before using these tools.

Talking about business logic, one of the basic advantages of using SQL is that we can distribute data to everyone. SQL can help in every situation like importing data to python, building dashboards in Tableau, displaying web reports, etc, and ensures that the performance of the process will be higher. Using Pandas for data manipulation has some advantages like we can store data into a single machine, lower I/O cost and latency than repeatedly querying a database, and the ability to apply arbitrary functions in every segment of data.

Comparing the data operations

Many data science practitioners have to use SQL and Pandas on a regular basis for various data-related tasks. So this section of the article will let us know about how we can perform different operations using the SQL language and Pandas library.

To explain the process we are going to use the titanic data set. We will perform operations using the Pandas library and will see how we can perform them using the SQL language.

import Pandas as pd

path = 'https://web.stanford.edu/class/archive/cs/cs109/cs109.1166/stuff/'

data = pd.read_csv(path + "titanic.csv")

data.head()

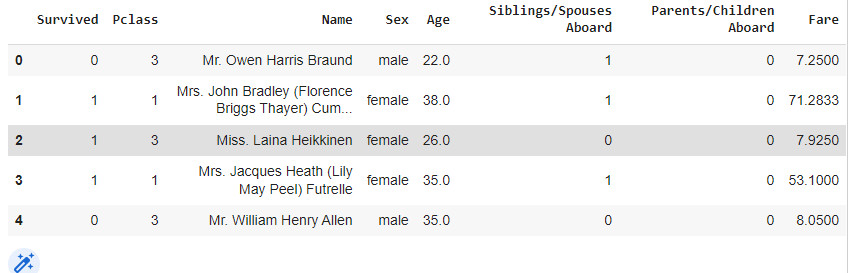

Output:

In the above output, we can see the names of the different columns. Now, let’s move toward our first operation.

Select

Select operation is used to fetch a required piece of information from the given data. Using the SQL language we can perform selection operations using some of the variables in the following way:

SELECT Survived, Pclass, Name

FROM data

LIMIT 5;Talking about the Pandas library, we can perform the selection of variables in the following way:

data[['Survived', 'Pclass', 'Name']].head()

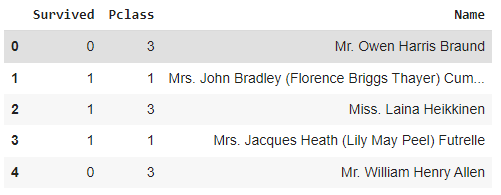

Output:

Where

This is a conditional operation we mostly use to find data values from the data that follows some condition. Using the SQL we can find data points where the sex variable has the value male in the following way:

SELECT *

FROM data

WHERE Sex = 'male'

LIMIT 5Using Pandas, we can perform the same in the following way:

data[data['Sex'] == 'male'].head()

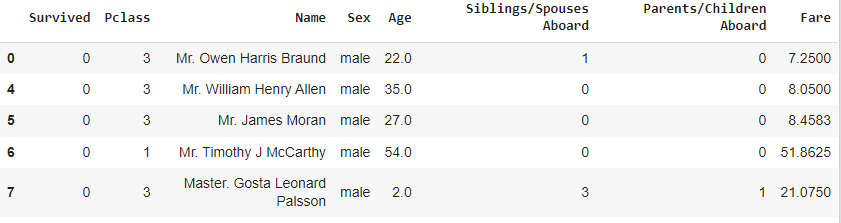

Output:

Please note that we use * (asterisk) in SQL to display all columns from the data.

OR and AND operation

These are also conditional operations that merge two conditions into one. Using the SQL language, we can find values where variable sex is male and the age of the person is more than five in the following way:

SELECT *

FROM data

WHERE Sex = 'Male' AND Age > 5.00;We can perform the same operations using Pandas in the following way:

data[(data['Sex'] == 'male') & (data['Age'] > 5.00)]

Output:

Group by

We perform the group-by operation to make groups of the data values using some categories. With this data, we can make groups of males and females. In SQL we can do this in the following way:

SELECT Sex, count(*)

FROM data

GROUP BY Sex;

Note: This query will give us the number of records for every Sex.

The same procedure can be performed using Pandas in the following way:

data.groupby('Sex').size()

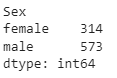

Output:

Join

Join operations are the most used operations using SQL because it mainly helps in making new data using two or more data using one variable. We join data in different the following manners:

- Inner join

This join provides common values from the variable on which we decided to join, using the SQL we can perform this operation in the following manner:

SELECT *

FROM df1

INNER JOIN df2

ON df1.key = df2.key;

Where we have two data frames(df1 and df2) and one common variable(key). To perform this operation we are required to have two or more datasets. We can make a data frame in Pandas using the following way:

import numpy as np

df1 = pd.DataFrame({'key': ['A', 'B', 'C', 'D'],

'value': np.random.randn(4)})

df2 = pd.DataFrame({'key': ['B', 'D', 'D', 'E'],

'value': np.random.randn(4)})Now we can inner join datasets in the following four ways as given below.

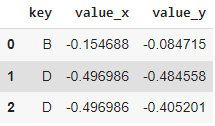

pd.merge(df1, df2, on=’key’)

- Left outer join

This operation helps us join the datasets using a clause. With the help of this, we can preserve the unmatched rows of the left data and join them with a NULL row in the shape of the right table. In SQL we can perform this operation in the following way:

SELECT *

FROM df1

LEFT OUTER JOIN df2

ON df1.key = df2.key;

the same operation can be performed using pandas in the following way:

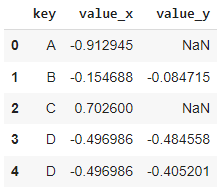

pd.merge(df1, df2, on='key', how='left')

Output:

- Right outer join

This operation helps us in joining data using a clause using which we preserve rows from the right data and join them with a null in the shape of the first (left) table. Using the SQL we can perform this operation in the following way:

SELECT *

FROM df1

RIGHT OUTER JOIN df2

ON df1.key = df2.key;

The same operation Using the Pandas can be performed in the following way

pd.merge(df1, df2, on='key', how='right')

Output:

- Full join

This operation preserves all the rows of every data while joining them. This operation

can be performed using the SQL in the following way:

SELECT *

FROM df1

FULL OUTER JOIN df2

ON df1.key = df2.key;

This same operation can be performed using Pandas in the following way

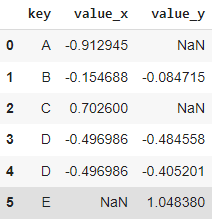

pd.merge(df1, df2, on='key', how='outer')

Output:

Here in the above, we have seen some of the most important operations that can be performed using the Pandas library and SQL language.

In the above sections, we have seen that performing operations using both Pandas and SQL are easy and we can also say that converting one from Pandas to SQL or vice-versa is not a difficult task.

Pandas and SQL together

Deep down, we know that procedures and programs are required to be robust every time, for which we need powerful development environments. In the above points, we have seen that Pandas are less costly and SQL is more robust. So in most of the organizations, we find a combination of both of them. Since both of them have proven their capabilities, without any doubt we can say both of them are very good in their work and provide great benefits and they have proven themselves as being incredibly valuable. We can make them function together where handling data from a warehouse can be done using the SQL and after SQL we can use Pandas for further procedures where data has lower dimensions.

Final words

In this article, we have seen the comparison between Pandas and SQL, where we have seen comparisons according to what are these and what are the situations where we use them. Also, we have seen differences between them in the context of programming or coding when a piece of information is required to be extracted from the given data.