Global technology player Intel has been a catalyst for some of the most significant technology transformations in the last 50 years, preparing its partners, customers and enterprise users for a digital era. In the area of artificial intelligence (AI) and deep learning (DL), Intel is at the forefront of providing end-to-end solutions that are creating immense business value.

But there’s one more area where the technology giant is playing a central role. Intel is going to the heart of the developer community by providing a wealth of software and developer tools that simplify the building and deployment of DL-driven solutions and take care of the underlying computing requirements. This lets data scientists, machine learning engineers and practitioners focus on delivering solutions that create real business value. The company’s software offerings provide a range of options to meet the needs of data scientists, developers and researchers at different levels of AI expertise.

So, why are AI software development tools more important now than ever? As architectural diversity increases and the compute environment becomes more sophisticated, the developer community needs access to a comprehensive suite of tools that lets them build applications faster, more easily and more reliably without worrying about the underlying architecture. Intel’s primary aim is to make coders, data scientists and researchers more productive by taking away code complexity.

Intel Makes AI More Accessible For The Developer Community

In more ways than one, software has become the last mile between the developers and the underlying hardware infrastructure, enabling them to utilise the optimization capabilities of processors. Analytics India Magazine spoke to Akanksha Bilani, Country Lead – India, Singapore, ANZ at Intel Software to understand why, in today’s world, transformation of software is key to driving effective business, usage models and market opportunity.

“Gone are the days where adding more racks to existing platforms helped drive productivity. Moore’s law and AI advocates that the way to take advantage of hardware is by driving innovation on software that runs on top of it. Studies show that modernization, parallelisation and optimization of software on the hardware helps in doubling the performance of our hardware,” she emphasizes.

Going forward, the convergence of architecture innovation and optimized software for platforms will be the only way to harness the potential of future paradigms of AI, High Performance Computing (HPC) and the Internet of Everything (IoE).

Naveen Rao, Corporate Vice President and General Manager of the Artificial Intelligence Products Group at Intel Corporation, made the same point at the recently concluded AI Hardware Summit [1]. It is not just about a ‘fast chip’, but about a portfolio of products with a software roadmap that enables the developer community to leverage the capabilities of the new AI hardware. “AI models are growing by 2x every 3 months. So it will take a village of technologies to meet the demands: 2x by software, 2x by architecture, 2x by silicon process and 4x by interconnect,” he stated.
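To put the figures in that quote side by side, here is a rough back-of-the-envelope sketch in Python. It rests on two assumptions that are not in the quote itself: that the four gains multiply independently, and that "2x every 3 months" compounds evenly over a year. The function and variable names are illustrative only.

```python
# Back-of-the-envelope arithmetic for the figures in the quote.
# Assumption: the per-layer gains are independent and multiply together.
from math import prod


def yearly_growth(factor_per_period: float, months_per_period: float) -> float:
    """Compound growth over 12 months for a given growth factor per period."""
    return factor_per_period ** (12 / months_per_period)


# Gains attributed in the quote to each layer of the stack.
gains = {"software": 2, "architecture": 2, "silicon process": 2, "interconnect": 4}

combined_gain = prod(gains.values())   # 2 * 2 * 2 * 4
model_growth = yearly_growth(2, 3)     # 2x every 3 months, compounded for a year

print(f"combined gain: {combined_gain}x")
print(f"model growth per year: {model_growth:.0f}x")
```

Under these assumptions the four layers together give a 32x gain, while models doubling every three months grow 16x in a year. The quote does not say over what period the gains are expected, so the comparison is indicative only.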

Simplifying AI Workflows With Intel® Software Development Tools

As the global technology major leads the way forward in data-driven transformation, Intel® Software [2] solutions are opening up a new set of possibilities across multiple sectors. In retail, the Intel® Distribution of OpenVINO™ Toolkit is helping business leaders [3] take advantage of near real-time insights to make better decisions faster. Wipro [4] has built groundbreaking edge AI solutions on server-class Intel® Xeon® Scalable Processors and the Intel® Distribution of OpenVINO™ Toolkit.

Today, data scientists who are building cutting-edge AI algorithms rely heavily on Intel® Distribution for Python for higher performance. While stock Python packages bring a great deal of performance to the table, the Intel performance libraries that come built into Intel® Distribution for Python help programs gain more significant speed-ups compared with the stock open source scikit-learn.

Those working in distributed environments leverage BigDL, a distributed DL library for Apache Spark that helps data scientists accelerate DL inference on CPUs in their Spark environment. “BigDL is an add-on to the machine learning pipeline and delivers an incredible amount of performance gains,” Bilani elaborates.

Then there’s also Intel® Data Analytics Acceleration Library (Intel® DAAL), widely used by data scientists for its algorithms, which range from basic descriptive statistics for datasets to more advanced data mining and machine learning. The library provides APIs for every stage of the development pipeline and can be used with other popular data platforms such as Hadoop, MATLAB, Spark and R.

Intel also caters to another audience: the tuning experts who really understand their programs and want to get the maximum performance out of their architecture. For these users, the company offers the Intel Math Kernel Library for Deep Neural Networks (Intel MKL-DNN), an open source performance library that abstracts low-level details so that developers can use DL frameworks with optimized performance on Intel hardware. The library accelerates DL frameworks on Intel architecture, and developers can learn more about it through tutorials.

The developer community is also excited about yet another ambitious undertaking from Intel, which will soon be out in beta and aims to take away the complexity brought on by heterogeneous architectures. OneAPI, one of Intel’s most ground-breaking multi-year software projects, offers a single programming methodology across heterogeneous architectures.

The end benefit to application developers is that they no longer need to maintain separate code bases, multiple programming languages, and different tools and workflows, and can still get maximum performance out of their hardware. As Prakash Mallya, Vice President and Managing Director, Sales and Marketing Group, Intel India, explains, “The magic of OneAPI is that it takes away the complexity of the programme and developers can take advantage of the heterogeneity of architectures which implies they can use the architecture that best fits their usage model or use case. It is an ambitious multi-year project and we are committed to working through it every single day to ensure we simplify and not compromise our performance.”

According to Bilani, the bottom line is that OneAPI provides an abstracted, unified programming model that delivers a single view across all the various architectures. OneAPI will be out in beta in October.

How Intel Is Reimagining Computing

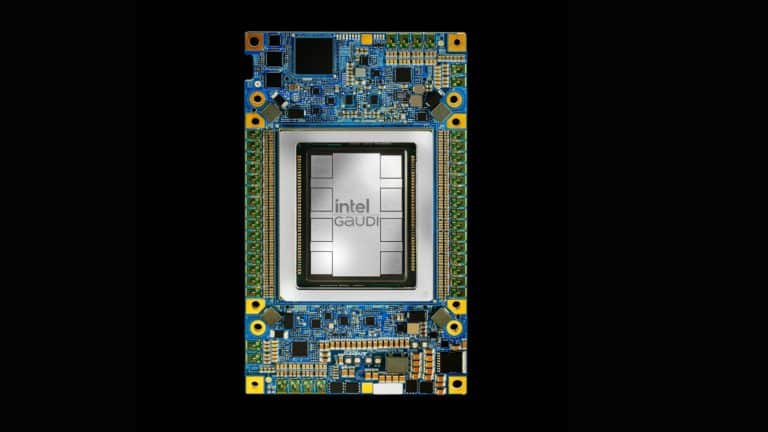

As architectures get more diverse, Intel is doubling down on a broader roadmap for domain-specific architectures coupled with simplified software tools (libraries and frameworks) that enable abstraction and faster prototyping across its comprehensive AI solutions stack. The company is also scaling adoption of its hardware assets: CPUs, FPGAs, VPUs and the soon-to-be-released Intel Nervana™ Neural Network Processor product line.

As Mallya puts it, “Hardware is foundational to our company. We have been building architectures for the last 50 years and we are committed to doing that in the future but if there is one thing I would like to reinforce, it is that in an AI-driven world, as data-centric workloads become more diverse, there’s no single architecture that can fit in.”

That’s why Intel focuses on multiple architectures, whether scalar (CPU), vector (GPU), matrix (AI) or spatial (FPGA). The Intel team is working towards tighter synchrony between the hardware layers and the software. For example, Intel Xeon Scalable processors have undergone generational improvements and are now seeing a shift towards instructions that are specific to AI.

Vector Neural Network Instructions (VNNI), built into the 2nd Generation Intel Xeon Scalable processors, deliver enhanced AI performance, particularly for low-precision (INT8) inference. Advanced Vector Extensions (AVX), on the other hand, have already been a part of Intel Xeon technology for the last five years. While AVX allows engineers to get the performance they need on a Xeon processor, VNNI enables data scientists and machine learning engineers to maximize AI performance.
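For readers curious about what such an AI-specific instruction does, the sketch below emulates in plain Python the kind of fused 8-bit multiply-accumulate step that VNNI performs in hardware: four unsigned 8-bit values are multiplied by four signed 8-bit values, and the sum of the products is added to a 32-bit accumulator. It is an illustration only. The function names are hypothetical, 32-bit overflow is not modelled, and real applications would reach the instruction through an optimized library or compiler rather than code like this.

```python
# Plain-Python emulation of one lane of a VNNI-style int8 dot-product step.
# Illustrative only: names are hypothetical and 32-bit overflow is ignored.


def dot4_accumulate(acc: int, activations: list[int], weights: list[int]) -> int:
    """Add the sum of four (unsigned 8-bit x signed 8-bit) products to acc."""
    assert len(activations) == len(weights) == 4
    assert all(0 <= a <= 255 for a in activations)   # unsigned 8-bit range
    assert all(-128 <= w <= 127 for w in weights)    # signed 8-bit range
    return acc + sum(a * w for a, w in zip(activations, weights))


def int8_dot(activations: list[int], weights: list[int]) -> int:
    """Dot product of two equal-length int8 vectors, four elements per step."""
    assert len(activations) == len(weights)
    assert len(activations) % 4 == 0
    acc = 0
    for i in range(0, len(activations), 4):
        acc = dot4_accumulate(acc, activations[i:i + 4], weights[i:i + 4])
    return acc


result = int8_dot([1, 2, 3, 4, 5, 6, 7, 8], [1, -1, 1, -1, 2, 2, -2, -2])
print(result)
```

Dot products like this one dominate the arithmetic in quantized neural network inference, which is why folding the multiply and accumulate into one instruction matters for AI workloads.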

Here’s where Intel is upping the game in terms of heterogeneity: moving from general-purpose CPUs (2nd Gen Intel Xeon Scalable processors) running AI-specific instructions to complete products built for both training and inference. Earlier in August, at Hot Chips 2019, Intel announced the Intel Nervana Neural Network Processors [4], designed from the ground up to run full AI workloads that cannot run on GPUs, which are more general purpose.

The Bottom Line:

a) Deploy AI anywhere with unprecedented hardware choice

b) Software capabilities that sit on top of hardware

c) Enriching community support to get up to speed with the latest tools

Winning the AI Race

For Intel, the winning factor has been staying closely aligned with its ‘no one size fits all’ strategy and ensuring its evolving portfolio of solutions and products stays AI-relevant. The technology behemoth has been at the forefront of the AI revolution, helping enterprises and startups operationalize AI by reimagining computing and offering full-stack AI solutions, spanning software and hardware, that add value for end customers. Intel has also built up a complete ecosystem of partnerships and has made significant inroads into specific industry verticals and applications like telecom, healthcare and retail, helping the company drive long-term growth. As Mallya sums up, the way forward is through meaningful collaborations and making the vision of AI for India a reality using powerful best-in-class tools.

Sources

[1] AI Hardware Summit: https://twitter.com/karlfreund

[2] Intel Software Solutions: https://software.intel.com/en-us

[3] Accelerate Vision Anywhere With OpenVINO™ Toolkit: https://www.intel.in/content/www/in/en/internet-of-things/openvino-toolkit.html

[4] At Hot Chips, Intel Pushes ‘AI Everywhere’: https://newsroom.intel.com/news/hot-chips-2019/#gs.8w7pme