AI is everywhere, and companies around the world are working round the clock to integrate more of it into our daily lives. Products like Alexa, Google Home and Siri are our virtual assistants, autonomous cars are vying to be our drivers, and facial recognition is turning up everywhere, from Facebook photo tagging to replacing boarding passes at airports. Governments around the world are creating dedicated divisions for AI research. China is already using facial recognition and AI algorithms to score its citizens on a variety of parameters. A lot has been done, and much more is under way. Within 5-10 years, AI will likely be as integrated into our daily lives as the mobile phone is today.

But the question arises: how safe are these systems? Are they foolproof? Can these autonomous systems be ‘hacked’ or ‘manipulated’ in some way to serve the nefarious designs of terrorists? Is a ‘100%’ switch to these systems viable and secure? Most importantly, can AI algorithms themselves be used to fool other state-of-the-art AI algorithms?

Let’s take a couple of examples of AI algorithms applied to public use. Autonomous cars promise to drive vehicles efficiently, remove the need for a driver, and decongest our highways by optimising traffic and reducing ‘human error’. Facial recognition promises fast and secure identification with less manpower and lower cost. Facial recognition will be deployed at the top 20 US airports by 2021 for “100% of all international passengers”. Both examples rely heavily on AI algorithms classifying and recognising images and associated feeds (videos, photographs, etc.) in real time.

How Does An AI Algorithm Work To Recognise Images?

An algorithm does not read an image the way a human brain does. The computer reads numbers, not images. Every image is composed of pixels of varying intensity across multiple colour channels (typically red, green and blue).
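To make this concrete, here is a minimal Python sketch of what a model actually receives. The 2x2 image and the `flatten` helper are hypothetical, chosen only for illustration; a real photograph has many thousands of pixels.

```python
# A hypothetical 2x2 RGB image: each pixel is (red, green, blue), 0-255.
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]


def flatten(img):
    """Turn the pixel grid into the flat list of numbers a model consumes."""
    return [channel / 255.0 for row in img for pixel in row for channel in pixel]


features = flatten(image)
print(len(features))  # 2 * 2 pixels * 3 channels = 12
print(features[:3])   # the first (pure red) pixel: [1.0, 0.0, 0.0]
```

Everything the classifier later decides is computed from these numbers, which is why small numeric changes matter.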

A deep learning algorithm such as a (convolutional) neural network applies multiple filters at each layer, sliding each one across the entire image or the previous layer's output, and follows them with activation functions and regularisation. During training, forward propagation produces a prediction, backpropagation measures how much each weight contributed to the error, and an optimiser adjusts the weights of every layer to minimise the loss. Once training over millions, or even billions, of images is complete, the optimised weights can be applied to any new image and the algorithm will be able to classify it.
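As a rough sketch of one such filter at work, the Python below slides a small kernel over a tiny greyscale image and applies a ReLU activation. The image, the kernel values and the function names are assumptions for illustration; in a trained network the kernel values are learned rather than written by hand.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Activation: keep positive responses, zero out the rest."""
    return [[max(0, v) for v in row] for row in feature_map]


# Hypothetical 4x4 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked kernel that responds to a dark-to-bright vertical edge.
kernel = [[-1, 1], [-1, 1]]

feature_map = relu(conv2d(image, kernel))
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The filter fires only where the edge is. A full network stacks many such filters and learns their values from data.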

How Are These Algorithms ‘Fooled’?

The algorithm does not see the image; it just reads the pixel intensities as numbers and uses its trained weights to classify the image. If carefully constructed noise is added to the image, the results of the weight-pixel multiplications can be manipulated so that the output probabilities make the algorithm classify the image as something entirely different.

An example of this manipulation is the Panda-Gibbon classification. The image of a panda is fed to the network, which correctly classifies it as a panda. Now, carefully constructed noise is added to the image (the noise has to be constructed specifically for the algorithm being used, although this constraint can now be bypassed; more on that later). The new image looks just like the old one to the human eye, but to the algorithm, the underlying pixel values have changed. The weights, now applied to the new pixel values, produce different activations, which forces the algorithm to classify the image as a ‘Gibbon’ instead of a ‘Panda’.
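The idea can be sketched on a toy linear classifier. The code below follows the spirit of the fast gradient sign method: move every pixel a small step in the direction that most reduces the score of the correct class. The weights, the six "pixels" and the value of `epsilon` are all made up for illustration; a real attack computes the gradient through a deep network rather than reading it off a weight vector.

```python
def score(weights, bias, x):
    """Linear score: positive means 'panda', otherwise 'gibbon'."""
    return sum(w * v for w, v in zip(weights, x)) + bias


def classify(weights, bias, x):
    return "panda" if score(weights, bias, x) > 0 else "gibbon"


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(weights, x, epsilon):
    """Shift each pixel by at most epsilon against the gradient of the score.

    For a linear model the gradient with respect to each pixel is simply
    its weight. Pixel values are clipped to stay in the valid [0, 1] range.
    """
    return [min(1.0, max(0.0, v - epsilon * sign(w)))
            for w, v in zip(weights, x)]


# Hypothetical model and input (assumptions, not taken from any real network).
weights = [0.9, -0.6, 0.4, -0.8, 0.7, -0.5]
bias = 0.0
x = [0.60, 0.40, 0.55, 0.45, 0.50, 0.50]
epsilon = 0.1

adversarial = fgsm(weights, x, epsilon)
print(classify(weights, bias, x))            # panda
print(classify(weights, bias, adversarial))  # gibbon
```

No single pixel moves by more than `epsilon`, yet the small shifts all push the score the same way and add up. With only six pixels the step has to be fairly large; an image with hundreds of thousands of pixels can be flipped with a far smaller change to each one, which is why the result can look identical to the human eye.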

Another example, presented by Simen Thys et al., shows how to fool surveillance cameras. The researchers used an AI algorithm that detects a person correctly (left part of the image below). Now, an adversarial patch is placed on the person's body, and the algorithm fails to detect the person (right part of the image below). Why? Because the pixel values in a certain part of the image have been manipulated in a way that affects the activations in the layers of the deep neural network, forcing it to misclassify the input image and miss the person in it.

Implications: Imagine a city with a network of autonomous vehicles carrying passengers, each of which takes a video of the road ahead, classifies the objects in each frame (person, tree, road, traffic light, etc.) and makes a decision (left, right, brake, steer, etc.). What if someone puts an adversarial patch on a traffic light and the autonomous vehicle fails to detect it, causing accidents and deaths? Or what if a pedestrian wears a shirt with a similar patch? The vehicle would not recognise them as a person and could run them over. If someone knows how to manipulate a facial recognition algorithm at a high-security place like an airport (as mentioned earlier), all they need to do is put on an adversarial patch or wear a shirt with a specific pattern, and they won’t be detected. Furthermore, the facial recognition algorithm can be tricked into believing that the person is not the actual person A, but a different person B. This can give nightmares to all security agencies.

Adversarial Attacks: Will adding just any noise to an image fool the algorithm? No. These are not random noise or patterns. They are adversarial images generated by understanding the network architecture and how the network is trained. The images to be classified are analysed to see which layers or weight matrices contribute the most, and how the final activations change when a given part of the image is changed. Such attacks can still be prevented, since they require knowledge of the network's details, which is not always available and can be kept classified for high-security applications.

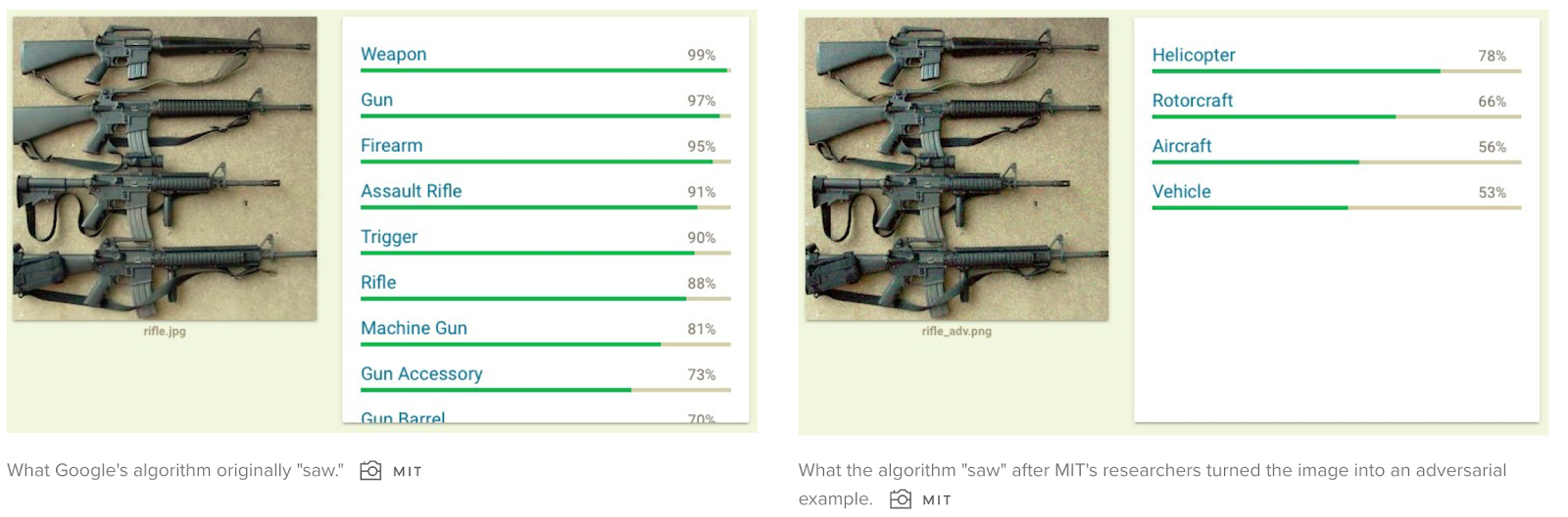

But there are ways to trick a ‘black box’ algorithm too. Researchers at MIT designed a way to fool Google’s state-of-the-art Cloud Vision API by targeting specific parts of the image and manipulating a few pixels in ways the human eye cannot detect. The researchers also found a way to generate adversarial examples for any ‘black-box’ network and manipulate it into predicting whatever they wanted (yes, not just an incorrect classification, but ‘whatever’ result they chose). For example, they fooled the algorithm into believing that a photo of machine guns was a picture of a helicopter, just by manipulating a few pixels. The image would still look unchanged to the human eye.
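To see why hiding the network is not a complete defence, here is a minimal sketch of a query-only attack. It is not the MIT researchers' method; it uses a plain finite-difference estimate, which is a much cruder stand-in. The attacker can only call `query`, which returns a probability; the hidden weights inside `make_black_box`, the input and `epsilon` are all hypothetical.

```python
import math


def make_black_box():
    """Return a query function; the attacker never sees the weights inside."""
    hidden_weights = [0.9, -0.6, 0.4, -0.8, 0.7, -0.5]

    def query(x):
        s = sum(w * v for w, v in zip(hidden_weights, x))
        return 1.0 / (1.0 + math.exp(-s))  # probability of 'panda'

    return query


def sign(v):
    return (v > 0) - (v < 0)


def estimate_gradient(query, x, delta=1e-3):
    """Estimate d(probability)/d(pixel) using two queries per pixel."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += delta
        down[i] -= delta
        grad.append((query(up) - query(down)) / (2 * delta))
    return grad


def black_box_attack(query, x, epsilon):
    """Step each pixel against the estimated gradient to lower the score."""
    grad = estimate_gradient(query, x)
    return [v - epsilon * sign(g) for g, v in zip(grad, x)]


query = make_black_box()
x = [0.60, 0.40, 0.55, 0.45, 0.50, 0.50]
adversarial = black_box_attack(query, x, epsilon=0.1)
print(query(x) > 0.5)            # True: classified as 'panda'
print(query(adversarial) > 0.5)  # False: no longer 'panda'
```

This naive version needs two queries per pixel, which quickly becomes impractical for real images; published black-box attacks are largely about getting the same information from far fewer queries.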

This poses a threat to AI-based airport security scanners, which scan luggage for potential weapons or explosives. To bypass one, someone would just have to place certain other objects at specific positions in their luggage, or add an adversarial patch, so that the image fed to the AI-enabled scanner is distorted and the luggage passes the security check.

The Internet Of Things

Voice-based home assistants like Siri and Alexa, which rely on speech recognition, are becoming popular, and more and more devices are being connected to them. You can now order from Amazon by issuing a command to Alexa, or control the lights, or unlock your door (among many other things). They will become integrated into our daily lives, performing more complex tasks while we sit and relax.

There is a funny episode of the animated series South Park that was specifically made to issue commands to Alexa and get it to perform certain tasks, like setting an alarm or telling a joke. Thousands of Alexa units throughout the USA actually responded to these commands from the TV, which shows how simple it is to fool these machines.

These machines will certainly become more secure, and may use speaker (voice) recognition so that an unauthorised user cannot issue commands to them. However, they will still be vulnerable to hackers who can mimic any voice using more advanced Generative Adversarial Networks or deepfakes.

Algorithm fooling another algorithm by learning from it – Generative Adversarial Networks (GANs):

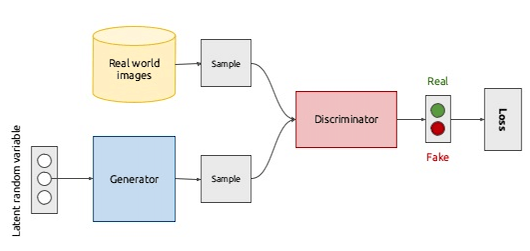

GANs were first proposed by Ian Goodfellow and colleagues in 2014, and they have come a long way since. A GAN consists of two networks: a Generator and a Discriminator. In the case of images, the Generator generates an image, and the Discriminator compares it with actual images and sends feedback to the Generator, which then generates a better image, closer to the actual ones. The loop continues until the Generator produces an image that the Discriminator cannot differentiate from a real image, thus passing off an artificially generated image as a real one. This works for other domains too: speech, text, videos, music, etc.
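The feedback in this loop is usually expressed as two loss values. The sketch below computes them for made-up Discriminator scores rather than training any networks; the function names and the scores are assumptions, and the Generator loss shown is the commonly used non-saturating variant.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Low when D scores real images near 1 and generated images near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake):
    """Low when D is fooled into scoring generated images near 1."""
    return -math.log(d_fake)


# Hypothetical Discriminator scores at two points in training.
early = {"d_real": 0.9, "d_fake": 0.1}  # D spots the fakes easily
late = {"d_real": 0.5, "d_fake": 0.5}   # D can no longer tell them apart

for name, s in (("early", early), ("late", late)):
    print(name,
          round(discriminator_loss(s["d_real"], s["d_fake"]), 3),
          round(generator_loss(s["d_fake"]), 3))
```

Early on, the Generator's loss is high because its images are rejected. At the end state described above, the Discriminator outputs 0.5 for everything, which is no better than guessing.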

Not Just Fooling The AI Algorithms, But Fooling The Humans Too

These adversarial attacks are not restricted to image-classifier algorithms. Generative Adversarial Networks can be used to ‘generate’ entirely new data (image, sound, text, video) after being trained on real-world data. They can generate an entirely new face by training on a lot of examples, or a new voice sample by training on voice recordings, or an entirely new video of someone: the world of deepfakes. Deepfakes are touted as the next big security nightmare, and they are getting better every day. There is a video of someone impersonating Barack Obama, and honestly, it is difficult to tell that the video is fake. This can be used with destructive intent to change public perception of anyone, incite a mob, or hurt religious sentiments, leading to riots and violence!

How All These Can Be Avoided To Make AI Better And More Secure

This list is not exhaustive, but it covers some of the main points. Above all, as usage increases, the algorithms need to become more secure. We might move away from the currently open-sourced networks and technologies towards more secure and restrictive algorithms and platforms, which will be used in high-security environments and will not be available to everyone. Deep learning algorithms also need to be trained explicitly to detect fraud, possibly by a brute-force approach or some other method. GANs are getting better and better at generating fake images that can fool humans, and these will require special attention. AI algorithms can themselves be used to detect a fake image or video, better than a human can. Finally, additional inputs that are difficult to replicate can be taken into account: for example, an airport security scanner might consider the facial image, gait, height, iris scan, etc. to become more foolproof.
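One established way to train a model explicitly against manipulated inputs is adversarial training, a technique this article does not name: perturbed copies of the training examples are added to the training set with their correct labels. The sketch below shows only that augmentation step, for a toy linear model; the weights, the data and the helper names are all hypothetical.

```python
def sign(v):
    return (v > 0) - (v < 0)


def perturb(x, weights, label, epsilon):
    """Nudge each pixel in the direction that pushes the score away from `label`.

    Assumes a linear model in which a positive score means label 1.
    """
    direction = -1 if label == 1 else 1
    return [min(1.0, max(0.0, v + direction * epsilon * sign(w)))
            for w, v in zip(weights, x)]


def augment_with_adversarial(dataset, weights, epsilon):
    """Return the dataset plus one perturbed copy of each example, same label."""
    augmented = list(dataset)
    for x, label in dataset:
        augmented.append((perturb(x, weights, label, epsilon), label))
    return augmented


# Hypothetical current model weights and a two-example training set.
weights = [0.9, -0.6, 0.4, -0.8]
dataset = [([0.6, 0.4, 0.55, 0.45], 1), ([0.3, 0.7, 0.2, 0.8], 0)]

augmented = augment_with_adversarial(dataset, weights, epsilon=0.05)
print(len(augmented))  # 4: two originals plus two perturbed copies
```

Retraining on the augmented set teaches the model that the perturbed versions still belong to the original class. In practice the perturbations are regenerated as the weights change, and the defence only covers the kinds of perturbation it was trained on.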

A lot is being done to build more and more accurate AI algorithms, but before we adopt them and build our lives around them, we need to secure them and make sure they are not easily susceptible to fraud. Frauds and hacks will still happen, but they will be more difficult to carry out. Maybe an AI algorithm can better solve the problem of fooling an AI algorithm.