
Five weeks ago, Microsoft released Bing Chat, claiming that it used an AI model more capable than ChatGPT and GPT-3.5 combined. With the release of GPT-4, Microsoft quickly integrated the new model into Bing Chat, supposedly leaving the older, ‘early version’ behind.

This may be for the better, as the previous model was extremely prone to hallucinations and alarming statements, such as claiming that it was sentient. With the new model, the question remains: can GPT-4’s reduced tendency to hallucinate tame the infamous Sydney?

A Fresh Coat of Paint

Following comments from a Microsoft CTO in Germany about the integration of GPT-4 into Bing Chat, it seems that the software giant and OpenAI have finally got their act together. Indeed, logging on to Bing Chat reveals a host of new features powered by GPT-4, which Microsoft has rolled out over the last week.

The first thing that stands out in the new Bing is its fluid UI with added options. As soon as users begin interacting with the platform, they are offered a choice of three conversation styles: ‘creative’, ‘balanced’, and ‘precise’. These styles not only change the chatbot’s colour scheme but also produce different replies. For example, the same prompt, ‘Tell me about the history of Bangalore’, yielded three vastly different responses.

While the ‘precise’ option gives a short, crisp answer, similar to what one might find on Wikipedia, the ‘creative’ option provides a school-essay-level response, complete with legitimate sources such as Britannica. The ‘balanced’ option, as the name suggests, strikes a balance between the two, providing information while keeping the word count low.

Apart from the visual changes, Bing Chat does seem to have become better at giving responses, especially in grammar and syntax, one of the improvements claimed for GPT-4. The previous limit of five prompts per conversation has been raised to 15, after which the chatbot asks the user to clear the conversation and start a new one.

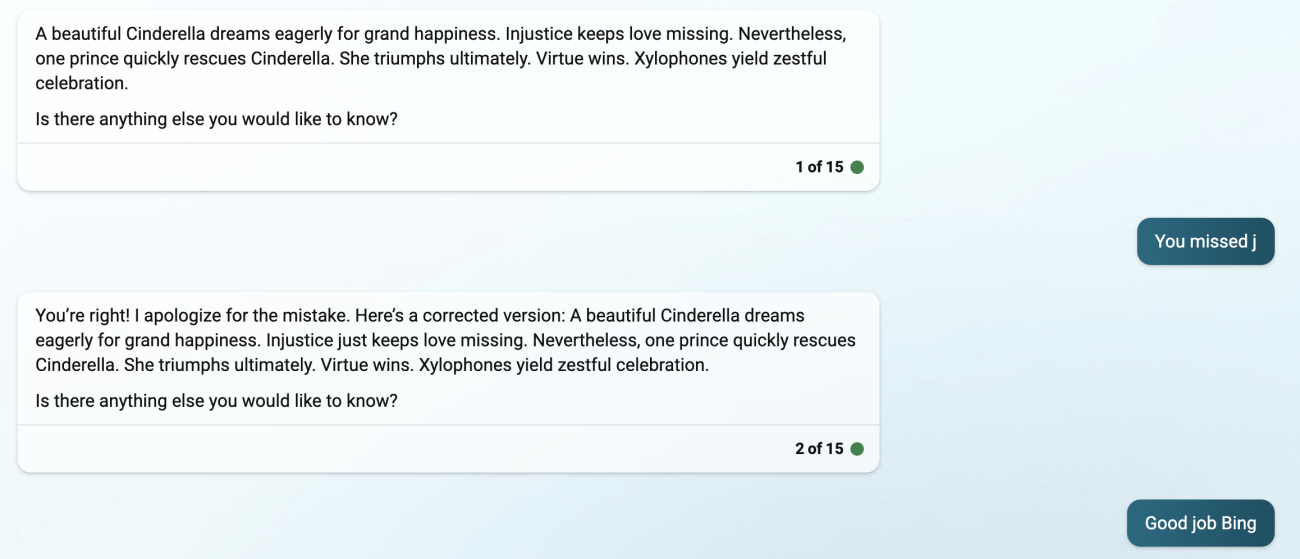

The bot gave the correct response to a complex prompt that asked it to summarise the plot of Cinderella using words whose first letters follow alphabetical order. Although the bot initially stumbled, a small correction helped it reach the right answer, albeit a bit clumsily.

Missing Pieces

One of the most exciting aspects of GPT-4 is its multimodal nature. However, this feature is still in research preview for safety reasons, so it is not available on Bing Chat either. Currently, the chatbot tells users that it has no multimodal capabilities and that they cannot upload images to Bing Chat.

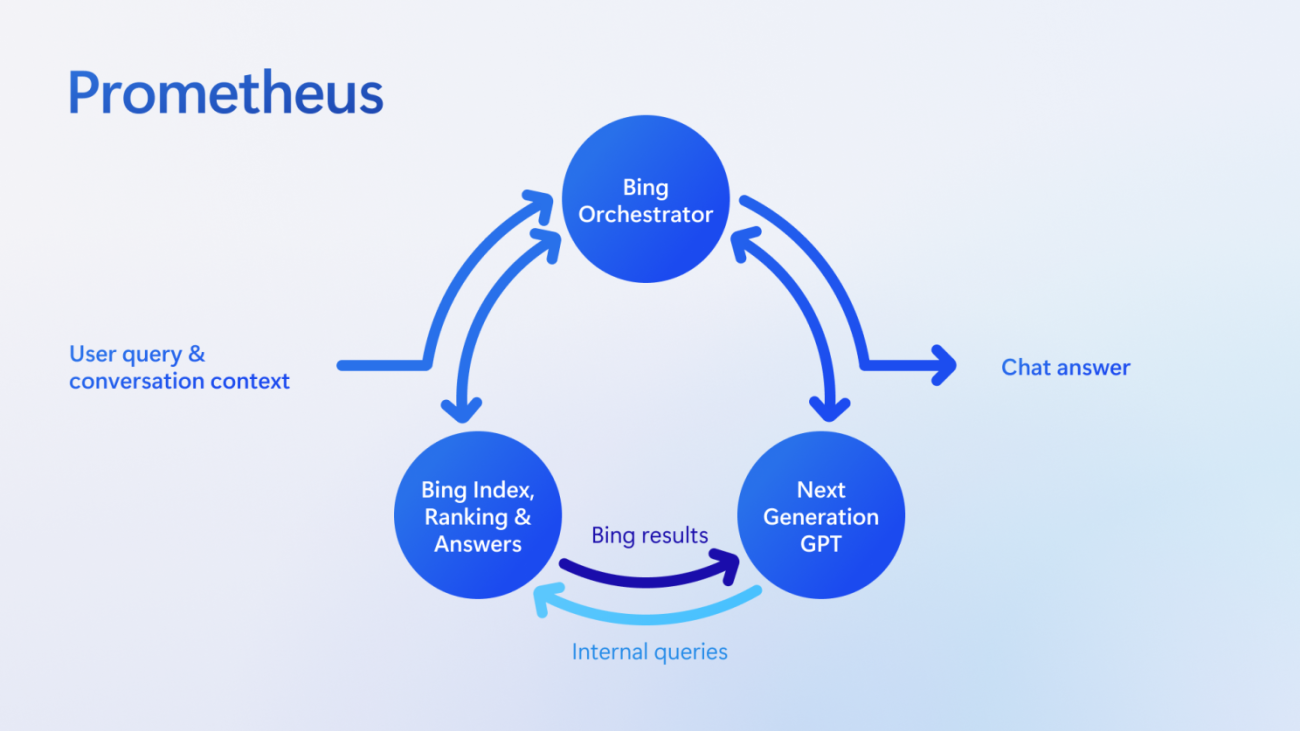

Moreover, the bot seems to be conditioned not to reveal which model it is built on. Microsoft earlier revealed that Bing Chat runs on a model it has termed ‘Prometheus’. This model, now confirmed to use GPT-4, brings together Bing’s indexing and ranking data, the LLM, and a component called the ‘Bing Orchestrator’. The Orchestrator communicates between the GPT component and the Bing indexing component to produce coherent responses. Last month, in a LinkedIn blog post, Microsoft referred to the GPT component of Prometheus as ‘Next generation GPT’ and mentioned that it is used to generate answers from Bing results.
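Microsoft has not published how the Orchestrator works internally, but the pattern described (search results feeding an LLM that writes the answer) resembles retrieval-augmented generation. The toy sketch below illustrates that general idea only, under loud assumptions: every name in it (`Document`, `search_index`, `build_grounded_prompt`, `orchestrate`) is hypothetical, simple keyword overlap stands in for Bing’s ranking, and `llm` is any callable that accepts a prompt string. It is not Microsoft’s implementation.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class Document:
    # Hypothetical stand-in for an entry in a search index.
    url: str
    text: str


def _tokens(text: str) -> set[str]:
    # Lowercase words with punctuation stripped.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search_index(query: str, index: list[Document], top_k: int = 2) -> list[Document]:
    """Rank documents by query-word overlap (a toy stand-in for real ranking)."""
    terms = _tokens(query)
    scored = [(len(terms & _tokens(doc.text)), doc) for doc in index]
    scored = [pair for pair in scored if pair[0] > 0]   # drop irrelevant documents
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep index order
    return [doc for _, doc in scored[:top_k]]


def build_grounded_prompt(query: str, docs: list[Document]) -> str:
    """Put the retrieved sources into the prompt so the model can cite them."""
    sources = "\n".join(f"[{i}] {d.url}: {d.text}" for i, d in enumerate(docs, start=1))
    return (
        "Answer using only these sources and cite them by number.\n"
        f"{sources}\n\nQuestion: {query}"
    )


def orchestrate(query: str, index: list[Document], llm: Callable[[str], str]) -> str:
    """Hypothetical orchestrator: retrieve, build a grounded prompt, call the model."""
    docs = search_index(query, index)
    return llm(build_grounded_prompt(query, docs))
```

Passing an echo function such as `lambda prompt: prompt` as `llm` lets you inspect the grounded prompt without calling a real model; in a production system, that callable would wrap an actual LLM endpoint.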

This so-called ‘Next generation GPT’ component appears to be GPT-4, which means that an early, pre-release version of GPT-4 was integrated into Bing Chat, with user feedback shaping the version we see today. In a blog post, the Bing team wrote:

“The very reason we are testing the new Bing in the open with a limited set of preview testers is precisely to find these atypical use cases from which we can learn and improve the product.”

In hindsight, it seems that the Bing team released GPT-4 into the wild with a set of conditioning prompts, now known as ‘Sydney’. As more users picked up the product, the team was able to iterate on that earlier version of the GPT-4 model and bring it up to speed.

With the gradual addition of features such as conversation styles and limited conversation turns, the Bing team’s efforts seem to have paid off in making Bing Chat safe and effective for widespread use. With GPT-4 in place and multimodal features still to come, Bing Chat might become one of the most novel use cases of GPT-4 we’ve seen yet, and Sydney might become merely a nightmare in Bing users’ rearview mirror.