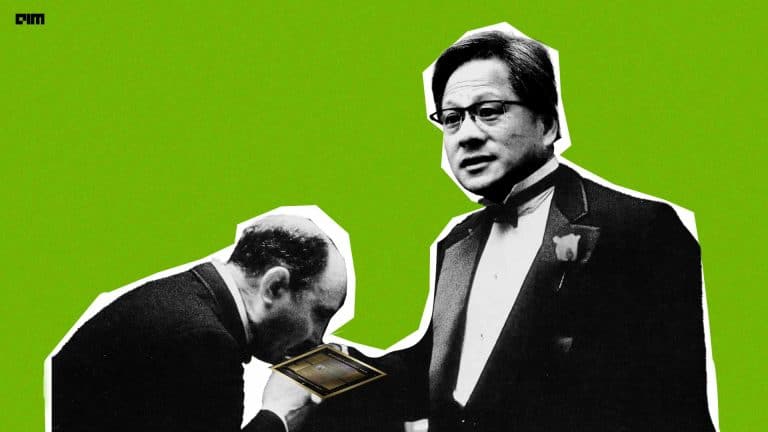

NVIDIA CEO Jensen Huang hand-delivered the first NVIDIA DGX H200 to OpenAI. In a post shared by Greg Brockman, president of OpenAI, Huang is seen posing with Brockman and OpenAI chief Sam Altman, with the DGX H200 in the middle.

“First @NVIDIA DGX H200 in the world, hand-delivered to OpenAI and dedicated by Jensen ‘to advance AI, computing, and humanity’,” wrote Brockman on X.

First @NVIDIA DGX H200 in the world, hand-delivered to OpenAI and dedicated by Jensen "to advance AI, computing, and humanity": pic.twitter.com/rEJu7OTNGT

— Greg Brockman (@gdb) April 24, 2024

Huang delivering the first unit of a new DGX system to OpenAI appears to have become a tradition. Back in 2016, Huang donated the first DGX-1 AI supercomputer to OpenAI in support of democratizing AI technology. At that time, Elon Musk was the one who received it.

Would like to thank @nvidia and Jensen for donating the first DGX-1 AI supercomputer to @OpenAI in support of democratizing AI technology

— Elon Musk (@elonmusk) August 9, 2016

The new system could be a much-needed addition to OpenAI’s arsenal, as the organization is currently working on GPT-5 and plans to make Sora publicly available this year.

NVIDIA introduced the H200 last year. The upgraded GPU, which succeeds the highly sought-after H100, offers 1.4 times the memory bandwidth and 1.8 times the memory capacity of its predecessor, improving its ability to handle demanding generative AI workloads.

Moreover, the H200 uses a faster memory specification, HBM3e, which raises its memory bandwidth to 4.8 terabytes per second from the H100’s 3.35 terabytes per second. Its total memory capacity also rises to 141GB, up from the 80GB of its predecessor.
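The multipliers quoted for the H200 can be checked against the raw figures with a quick calculation. The snippet below is only an illustrative sketch: the constant and function names are made up for this example, and the numbers are the ones cited in this article, not independently measured values.

```python
# Figures as quoted in the article (names are illustrative, not from NVIDIA).
H100_BANDWIDTH_TBPS = 3.35   # H100 memory bandwidth, terabytes per second
H200_BANDWIDTH_TBPS = 4.8    # H200 memory bandwidth, terabytes per second
H100_MEMORY_GB = 80          # H100 memory capacity, gigabytes
H200_MEMORY_GB = 141         # H200 memory capacity, gigabytes


def improvement_ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


bandwidth_ratio = improvement_ratio(H200_BANDWIDTH_TBPS, H100_BANDWIDTH_TBPS)
capacity_ratio = improvement_ratio(H200_MEMORY_GB, H100_MEMORY_GB)

print(f"Memory bandwidth: {bandwidth_ratio:.2f}x the H100")
print(f"Memory capacity:  {capacity_ratio:.2f}x the H100")
```

Rounded to one decimal place, the two ratios come out to roughly 1.4 and 1.8, in line with the multipliers cited above.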

“To create intelligence with generative AI and HPC applications, vast amounts of data must be efficiently processed at high speed using large, fast GPU memory,” said Ian Buck, vice president of hyperscale and HPC at NVIDIA. “With NVIDIA H200, the industry’s leading end-to-end AI supercomputing platform just got faster to solve some of the world’s most important challenges.”

NVIDIA has also launched an AI supercomputer built on its Grace Hopper superchips. The NVIDIA DGX GH200 uses NVLink interconnect technology alongside the NVLink Switch System to combine 256 GH200 superchips so that they perform as a single GPU.

The setup delivers 1 exaflop of performance and offers 144 terabytes of shared memory, a significant leap from the previous-generation NVIDIA DGX A100, introduced in 2020, which had considerably less memory.