
According to the 2011 census, India is home to about 83 million native Marathi speakers, most of them in Maharashtra. This makes Marathi the third most-spoken Indian language after Hindi and Bengali. The state, a hub for startups and large tech firms, accounts for 12.92% of India’s GDP and has a per capita income higher than the national average.

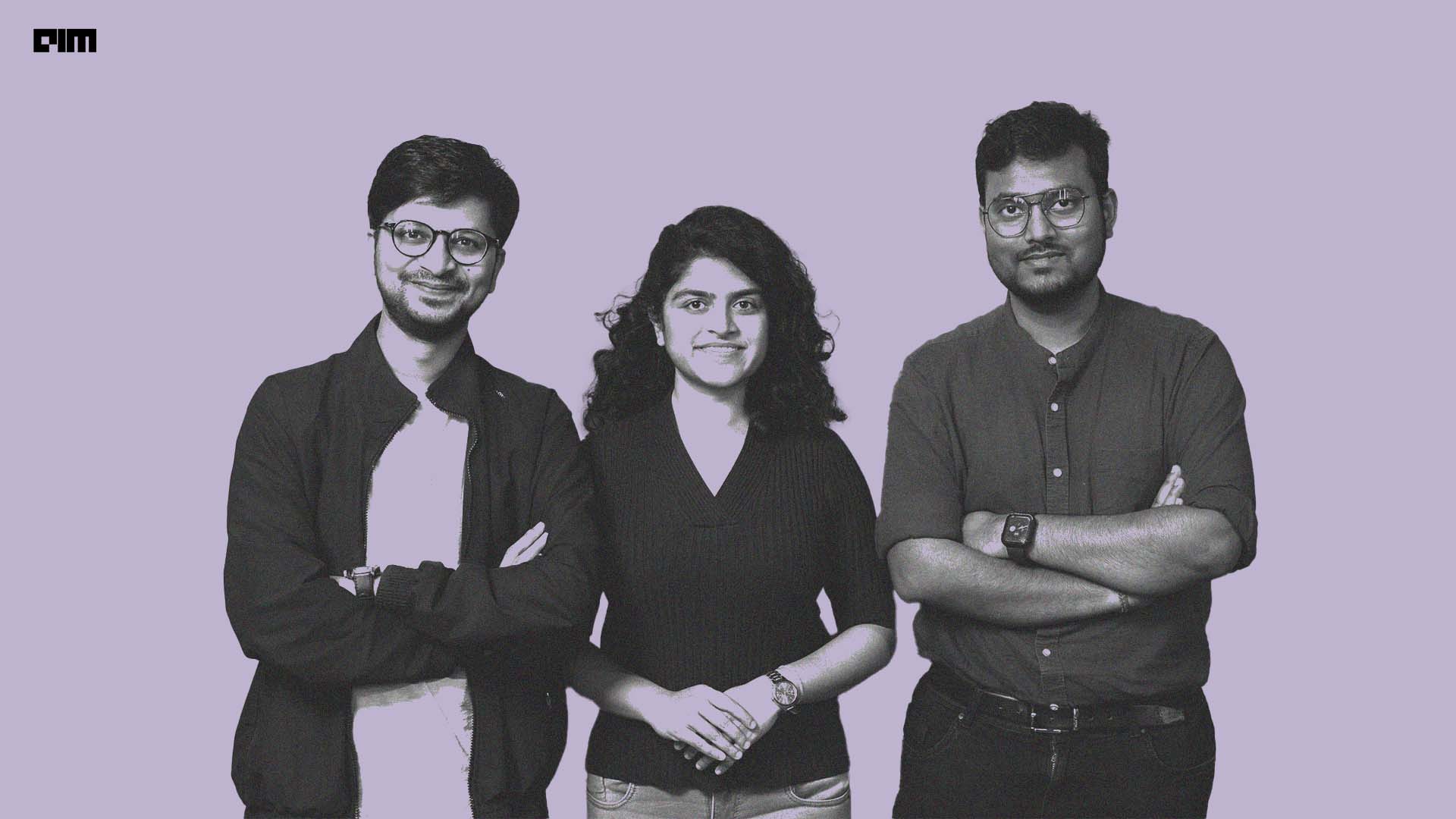

Recognising this importance, a team of US-based Indian researchers (Aakash Patil, a postdoctoral researcher at Stanford University; Mrunmayee Shende, cofounder of CourtEasy AI; and Niraj Kumar Singh, an ML engineer at Inbound Health) built MahaMarathi 7B. Joining the league of Indic LLMs such as the Telugu, Malayalam, Tamil, and Odia Llama models, MahaMarathi has seven billion parameters. It is domain-adapted, continually pre-trained, and instruction fine-tuned, building on Llama 2 and Mistral.

The Inception

“When GPT-4 came out, we realised the importance of doing something for Indian languages. Although it wasn’t our primary focus initially, the release of newer models like Meta’s Llama motivated us to build on top of them. The idea for MahaMarathi started in June last year, initially just as a concept,” Patil told AIM, sharing the inception story of the model.

He said that MahaMarathi would not have been possible without CourtEasy AI, a legal tech startup backed by Microsoft for Startups, which provided all the computing resources and data needed to train the model. The model was trained on NVIDIA A100 GPUs, procured by the startup under the program.

“Why Marathi? It is our mother tongue, and we have spoken it since childhood, so we thought we could contribute to the Indic LLM space,” added Shende. All three were born and raised in Maharashtra: Patil is from Akola, Shende is from Satara, and Singh is from Nagpur.

“Our shared passion for Marathi and the desire to bridge technological gaps motivated us to work on this project,” commented Patil, adding to what Shende said.

Challenges Galore

Building MahaMarathi was no cakewalk, as the team was constrained by limited computing power and data availability. Even though Patil had access to powerful supercomputers through his research, he could not use them for personal projects.

However, things started to change around June last year, when he discovered that Shende and Singh shared his vision of building an indigenous Marathi LLM.

Shende’s CourtEasy AI primarily operates in the legal sector, developing AI tools for lawyers, paralegals, and law firms in India. “Initially focusing on English, we soon recognised the necessity of including Indian languages, as numerous cases in lower courts are conducted in these languages,” said the co-founder.

Shende had therefore been collecting data for quite some time, and by the end of December she had amassed a significant corpus.

Datasets and Training Method

The researchers compiled a large corpus of about five million words in Marathi for their language dataset. This corpus, sourced over six months from publicly available content on websites, blogs, media, and news outlets, formed the basis for the initial pre-training of their model.

The team developed a new tokeniser for the Marathi language to manage this large corpus. After creating the tokeniser and expanding the vocabulary size, they pre-trained the model for next-token prediction tasks.
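As a rough illustration of what expanding a vocabulary involves, the sketch below appends unseen Marathi tokens to an existing token-to-ID map and pads the embedding table to match. It is a toy under stated assumptions, not the team's code: the function names, the tiny vocabulary, and the zero-initialised rows are all hypothetical.

```python
def extend_vocab(base_vocab, new_tokens):
    """Return a copy of base_vocab with unseen tokens appended at fresh IDs."""
    extended = dict(base_vocab)
    next_id = max(extended.values(), default=-1) + 1
    for token in new_tokens:
        if token not in extended:  # keep existing IDs stable
            extended[token] = next_id
            next_id += 1
    return extended


def resize_embeddings(embeddings, new_size, dim):
    """Pad the embedding table with extra rows so it has new_size rows."""
    # Assumption: zeros keep this sketch deterministic. Real training code
    # would usually initialise new rows randomly or from existing rows.
    extra = [[0.0] * dim for _ in range(new_size - len(embeddings))]
    return embeddings + extra


# Hypothetical base vocabulary and candidate Marathi tokens.
base_vocab = {"<s>": 0, "</s>": 1, "the": 2}
marathi_tokens = ["मराठी", "भाषा", "the"]  # "the" is already present

vocab = extend_vocab(base_vocab, marathi_tokens)
embeddings = resize_embeddings([[0.1, 0.2]] * 3, len(vocab), dim=2)
```

Every new token needs its own embedding row, which is why the expanded model has to be pre-trained further before the added tokens carry any meaning.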

During pre-training, after achieving satisfactory results in next-token and next-sequence prediction, the team shifted their focus to fine-tuning. For this phase, they used datasets from Stanford Alpaca and Microsoft Orca, which were translated into Marathi and then cleaned to ensure accurate and contextually appropriate translations.
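One plausible way to clean translated instruction data is to drop records that are empty or still mostly in English. The sketch below assumes Alpaca-style records with `instruction`, `input`, and `output` fields; the 0.8 threshold, the helper names, and the simplified prompt layout are illustrative assumptions rather than the team's actual pipeline.

```python
def is_devanagari(ch):
    """True if ch falls in the Unicode Devanagari block (U+0900 to U+097F)."""
    return "\u0900" <= ch <= "\u097f"


def devanagari_ratio(text):
    """Share of letter-like characters that are Devanagari."""
    letters = [ch for ch in text if ch.isalpha() or is_devanagari(ch)]
    if not letters:
        return 0.0
    return sum(1 for ch in letters if is_devanagari(ch)) / len(letters)


def is_clean(record, min_ratio=0.8):
    """Keep records with non-empty, mostly-Marathi instruction and output."""
    instruction = record.get("instruction", "").strip()
    output = record.get("output", "").strip()
    if not instruction or not output:
        return False
    return min(devanagari_ratio(instruction), devanagari_ratio(output)) >= min_ratio


def build_prompt(record):
    """Format a record as a simplified instruction prompt (assumed layout)."""
    prompt = f"### Instruction:\n{record['instruction']}\n\n"
    if record.get("input", "").strip():
        prompt += f"### Input:\n{record['input']}\n\n"
    return prompt + "### Response:\n"


records = [
    {"instruction": "महाराष्ट्राची राजधानी कोणती आहे?", "input": "", "output": "मुंबई"},
    {"instruction": "What is the capital?", "input": "", "output": "मुंबई"},  # untranslated
    {"instruction": "भाषांतर करा", "input": "", "output": ""},  # empty output
]
cleaned = [r for r in records if is_clean(r)]
prompts = [build_prompt(r) for r in cleaned]
```

A script-based filter like this only catches untranslated text; judging whether a translation is contextually appropriate still needs human or model review.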

Satisfied with the model’s sequence prediction and context generation capability, they further refined the fine-tuning process using various datasets, including translations done with IndicTrans by AI4Bharat. IndicTrans is notable as the first open-source transformer-based multilingual NMT model supporting high-quality translations across all 22 scheduled Indic languages.

When asked about the primary databases used, Patil explained that they stored the tokenised data on a hard disk, while MongoDB Atlas was used to store the fine-tuning pairs, which numbered approximately 60,000. Since vector databases are unsuitable for storing large corpora, the team primarily relied on Amazon S3 due to its extensive storage capacity.

The researchers chose not to use alternatives like the BLOOM family of open-source models or other multilingual models. Instead, they opted for a combination of Mistral and the Llama 2 architecture. This approach involved enhancing the Llama architecture by initialising Transformer layers with Mistral’s weights and modifying the MergeKit library to improve efficiency and multilingual support.
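The idea of initialising one model's layers from another's weights can be pictured with a toy example. The sketch below copies a donor matrix into the target only when both the parameter name and the shape match, and leaves everything else untouched. It is a conceptual illustration with hypothetical parameter names and tiny matrices; it does not reproduce the team's method or the behaviour of any merging library.

```python
def shape(matrix):
    """Return (rows, cols) of a list-of-lists matrix."""
    return (len(matrix), len(matrix[0]) if matrix else 0)


def init_from_donor(target, donor):
    """Copy donor weights into target where name and shape both match."""
    merged, copied = {}, []
    for name, weights in target.items():
        donor_weights = donor.get(name)
        if donor_weights is not None and shape(donor_weights) == shape(weights):
            merged[name] = [row[:] for row in donor_weights]
            copied.append(name)
        else:
            # No compatible donor tensor: keep the target's own weights.
            merged[name] = [row[:] for row in weights]
    return merged, copied


# Hypothetical parameter names and values, for illustration only.
target = {
    "layers.0.attn.q_proj": [[0.0, 0.0], [0.0, 0.0]],
    "embed_tokens": [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]],  # expanded vocabulary
}
donor = {
    "layers.0.attn.q_proj": [[1.0, 2.0], [3.0, 4.0]],
    "embed_tokens": [[9.0, 9.0], [9.0, 9.0]],  # smaller vocabulary, shape differs
}
merged, copied = init_from_donor(target, donor)
```

In this toy, the attention projection is taken from the donor while the embedding table is skipped, because an expanded vocabulary changes its shape.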

What’s Next?

In the three weeks since the release, feedback on MahaMarathi has been overwhelmingly positive, especially from the Marathi community in the Bay Area and from large enterprises, Intel among them, Shende said.

Depending on the feedback for the base model, the trio will also release supervised fine-tuned (SFT) and direct preference optimisation (DPO) models. The team has also noticed a need to educate the public on using pre-trained models and fine-tuning. They plan to release collaborative notebooks to help small and medium-sized businesses integrate AI into their operations.