Rapidly developing sensor technology and software processing have enabled autonomy for trucks, improving fleet operations by decreasing downtime and reducing personnel costs, crashes and fatalities. According to Fortune Business Insights, the global autonomous trucks market is expected to reach $2,013.34 million by 2027, growing at a CAGR of 12.6 percent.

Banking on this booming business, NVIDIA has created end-to-end solutions for software-defined autonomous vehicles (AVs) for the transportation industry, allowing for continuous improvement and deployment via over-the-air upgrades. In addition, it provides everything required to develop AVs at scale.

Building on NVIDIA’s technology, researchers at MIT recently developed a single deep neural network (DNN) to power autonomous vehicles. They ran the network in the vehicle on NVIDIA DRIVE AGX Pegasus, which helped process large amounts of LiDAR data in real time.

A fleet of 50 vehicles can generate as much as 1.6 petabytes of sensor data in just six hours. For safe navigation, self-driving cars need to interpret this data in real time. However, because processing this enormous volume of data with a single DNN is so challenging, most techniques employ several networks and high-definition maps. This combination enables an AV to rapidly determine its location in space and recognise other road users and traffic signs. While this strategy ensures the redundancy and diversity required for safe autonomous driving, it is challenging to deploy in unmapped locations. Additionally, AV systems that rely on LiDAR sensing must process over two million points in their environment every second. Unlike two-dimensional image data, LiDAR points are exceedingly sparse in three dimensions, posing a significant barrier for modern computing hardware, which is not optimised for this type of data.
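To put the figures above in perspective, here is a rough back-of-the-envelope calculation in Python. The fleet size, data volume and point rate come from the text. The 10 Hz sweep rate and the 100 m x 100 m x 4 m grid of 10 cm voxels are illustrative assumptions, not values reported by the researchers.

```python
# Figures quoted in the article.
PETABYTE = 10**15              # decimal petabyte in bytes (assumption: SI units)
fleet_bytes = 1.6 * PETABYTE   # data produced by the whole fleet
vehicles = 50
hours = 6
points_per_second = 2_000_000  # LiDAR points per second

# Per-vehicle volume and sustained data rate.
per_vehicle_bytes = fleet_bytes / vehicles
per_vehicle_rate = per_vehicle_bytes / (hours * 3600)  # bytes per second

# Sparsity estimate under hypothetical assumptions:
# a 10 Hz sweep and a 100 m x 100 m x 4 m grid of 10 cm voxels.
sweeps_per_second = 10                       # assumption
extent_cm = (10_000, 10_000, 400)            # assumption
voxel_cm = 10                                # assumption
points_per_sweep = points_per_second // sweeps_per_second
total_voxels = 1
for side in extent_cm:
    total_voxels *= side // voxel_cm
# Upper bound: every point lands in its own voxel.
max_occupancy = points_per_sweep / total_voxels

print(f"per vehicle: {per_vehicle_bytes / 10**12:.0f} TB in {hours} h")  # 32 TB
print(f"sustained rate: {per_vehicle_rate / 10**9:.2f} GB/s")            # 1.48 GB/s
print(f"occupied voxels per sweep: at most {max_occupancy:.2%}")         # 0.50%
```

Under these assumptions, at least 99.5 percent of the grid is empty in any one sweep, which is why dense, image-style processing wastes most of its work on LiDAR data.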

Efficiency through single DNN

In their paper, published at COMPUTEX, the MIT team described how it is pursuing a new self-driving technique using a single DNN, beginning with the task of processing real-time LiDAR sensor data. Beyond the fundamental architecture, the researchers have created new enhancements that significantly increase speed and energy efficiency. The DNN is intended to execute all the operations of the self-driving system. This comprehensive capability comes from extensive training on massive amounts of human driving data, which teaches the network to approach driving holistically, as a human driver would, rather than breaking it down into specific functions.

While the approach is still in its infancy, it has the potential to yield considerable benefits. A single DNN is significantly more efficient than numerous dedicated networks in the vehicle, freeing up computing headroom for additional features. Additionally, it is more adaptable, as the DNN navigates unknown roadways using its training rather than a map. Finally, the efficiency gain enables the real-time processing of large amounts of rich perceptual data.

Increased performance with NVIDIA DRIVE

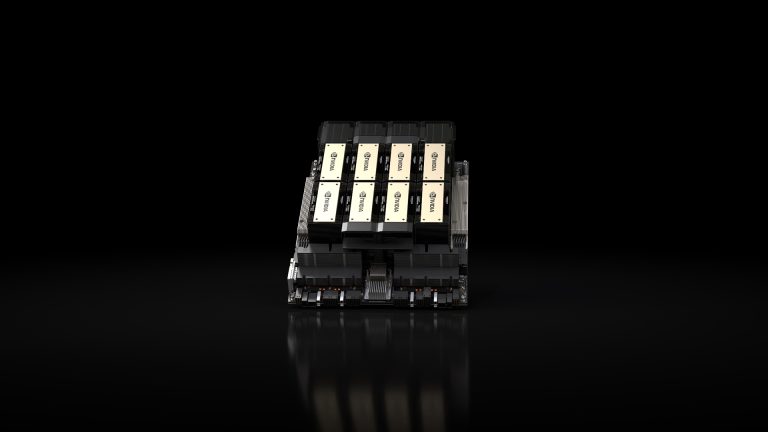

The MIT researchers used NVIDIA DRIVE AGX Pegasus to boost computing performance and achieve full automation. The embedded AI supercomputer is built for level 4 and level 5 autonomous systems, which operate without human involvement. By coupling two Xavier SoCs and two Turing GPUs, it delivers a total of 320 trillion operations per second, enabling quick processing of LiDAR data. To create the DNN, the MIT researchers chose a machine that was not just powerful but also used in many other AV systems. First, when analysing LiDAR-only models, the research team examined how they performed on the lane-stable test. Then, when analysing navigation-enabled models, the team compared the models’ overall performance.

Additionally, when the researchers enabled evidential fusion, the model’s results were on par with LiDAR-only testing. It followed the map’s directions and aligned its trajectory closely to that of a human driver. In their paper, the researchers proposed an efficient and resilient end-to-end navigation framework based on LiDAR. To accomplish faster LiDAR processing, they constructed a new 3D neural architecture and improved the sparse convolution kernel.
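The idea behind sparse convolution can be illustrated with a minimal Python sketch. It is not the researchers' kernel or architecture. It only shows the general principle of computing outputs at occupied voxels and skipping empty space. The function name `sparse_conv3d` and the dictionary-based layout are hypothetical choices made for this illustration.

```python
from itertools import product


def sparse_conv3d(features, weights):
    """Apply a 3D kernel only at occupied voxels (illustrative sketch).

    features: dict mapping (x, y, z) -> float for occupied voxels
    weights:  dict mapping (dx, dy, dz) -> float kernel weight
    """
    out = {}
    # Output sites are restricted to occupied input sites,
    # so empty space costs nothing.
    for (x, y, z) in features:
        acc = 0.0
        for (dx, dy, dz), w in weights.items():
            neighbour = (x + dx, y + dy, z + dz)
            # A dictionary lookup stands in for a dense array read;
            # missing neighbours contribute zero.
            if neighbour in features:
                acc += w * features[neighbour]
        out[(x, y, z)] = acc
    return out


# A 3x3x3 kernel of ones: each output is the sum of occupied neighbours.
weights = {offset: 1.0 for offset in product((-1, 0, 1), repeat=3)}

# Three occupied voxels: two adjacent, one isolated.
features = {(0, 0, 0): 1.0, (1, 0, 0): 2.0, (5, 5, 5): 3.0}

result = sparse_conv3d(features, weights)
print(result)
# {(0, 0, 0): 3.0, (1, 0, 0): 3.0, (5, 5, 5): 3.0}
```

The work done scales with the number of occupied voxels times the kernel size, not with the volume of the scene. Production kernels reach the same effect on GPUs with hash tables and batched matrix multiplies rather than Python dictionaries.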

Not long ago, NuPort Robotics, a self-driving trucking startup, used NVIDIA DRIVE to build autonomous driving systems for middle-mile short-haul routes. The company, headquartered in Canada, is collaborating with the Ontario government and Canadian Tire on a two-year trial project to accelerate the commercialisation of NVIDIA DRIVE technology. It will be interesting to see how startups and NVIDIA come together to develop and implement the findings of this new single DNN research by MIT.