While everyone is still trying to get hold of H100 GPUs, NVIDIA has introduced another platform to chase: the NVIDIA HGX H200. Its centrepiece is the NVIDIA H200 Tensor Core GPU, whose advanced memory is designed to handle the massive datasets used in generative AI and high-performance computing (HPC) workloads.

AWS, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure are among the initial cloud service providers set to deploy H200-based instances in 2024. CoreWeave, Lambda, and Vultr are also part of the early adopters.

Built on the Hopper architecture, the NVIDIA H200 is the first GPU to offer HBM3e, a faster and larger memory that accelerates generative AI and large language models while also advancing scientific computing for HPC workloads.

With HBM3e, the NVIDIA H200 delivers 141 GB of memory at 4.8 terabytes per second, nearly double the capacity and 2.4 times the bandwidth of its predecessor, the NVIDIA A100.
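As a rough sanity check on those ratios, the short Python sketch below works backwards from the quoted figures. The H200 numbers and the 2.4x ratio come from the article. The 80 GB baseline capacity is an assumption (the larger A100 variant) and is labelled as such in the code.

```python
# Figures quoted in the article for the NVIDIA H200.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBPS = 4.8
QUOTED_BANDWIDTH_RATIO = 2.4  # "2.4 times" the baseline bandwidth

# ASSUMPTION (not stated in the article): the comparison baseline
# is the 80 GB variant of the A100.
ASSUMED_BASELINE_MEMORY_GB = 80

# Baseline bandwidth implied by the quoted ratio.
implied_baseline_bandwidth_tbps = H200_BANDWIDTH_TBPS / QUOTED_BANDWIDTH_RATIO

# Capacity ratio under the assumed 80 GB baseline.
capacity_ratio = H200_MEMORY_GB / ASSUMED_BASELINE_MEMORY_GB

print(f"Implied baseline bandwidth: {implied_baseline_bandwidth_tbps:.1f} TB/s")
print(f"Capacity ratio vs assumed baseline: {capacity_ratio:.2f}x")
```

Under that assumption, the capacity ratio comes out at roughly 1.76x, which is consistent with the "nearly double" wording.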

Ian Buck, the Vice President of Hyperscale and HPC at NVIDIA, emphasised the critical role of efficient data processing in creating intelligence with generative AI and HPC applications. He stated, “With NVIDIA H200, the industry’s leading end-to-end AI supercomputing platform just got faster to solve some of the world’s most important challenges.”

The NVIDIA Hopper architecture continues to raise the performance bar through ongoing software enhancements, such as the recently released NVIDIA TensorRT-LLM open-source libraries.

The H200 is expected to nearly double inference speed on Llama 2, a 70-billion-parameter large language model, compared with the H100. Future software updates are expected to bring further performance improvements.

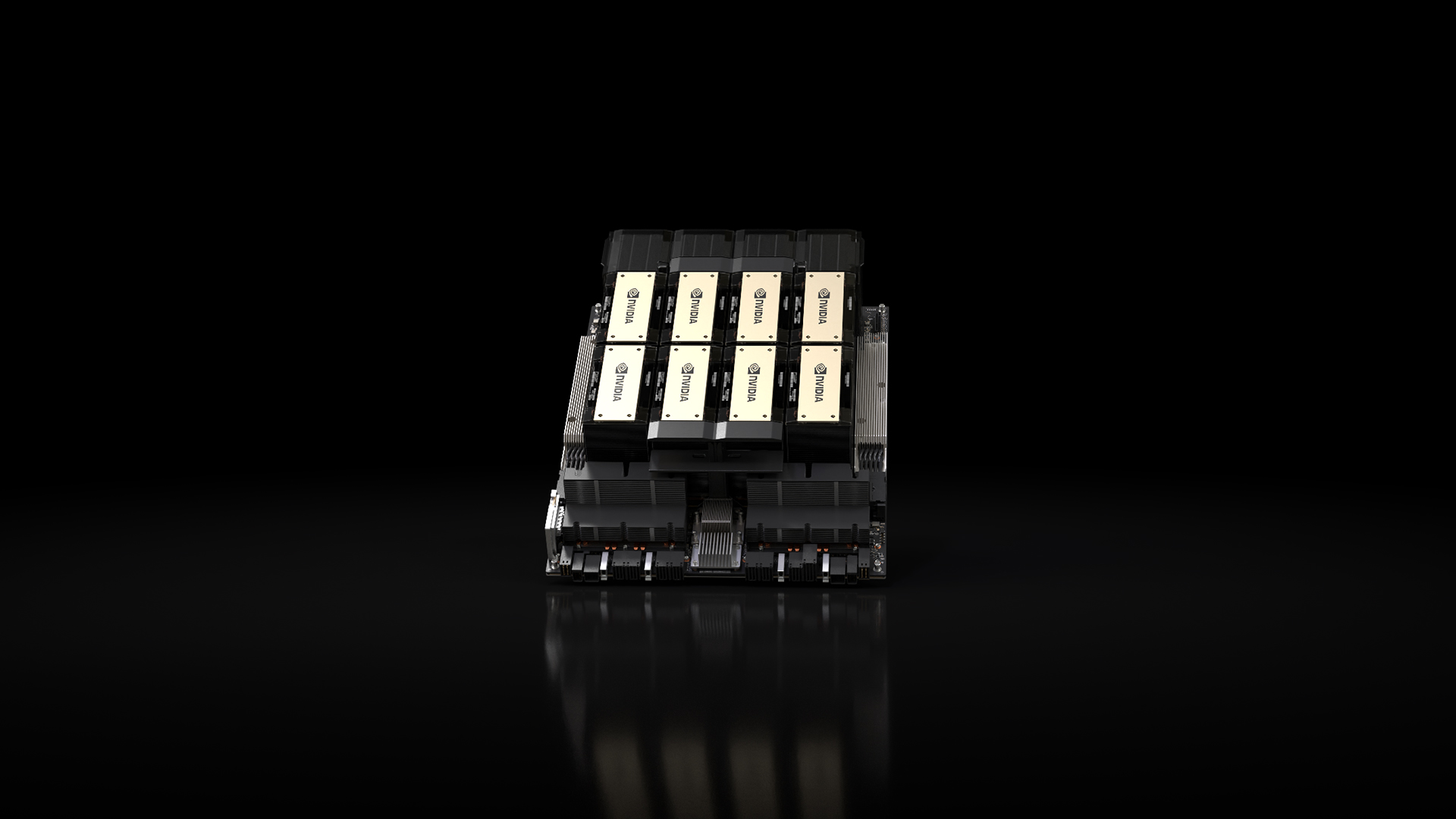

The NVIDIA H200 will be available in NVIDIA HGX H200 server boards in four- and eight-way configurations. These boards are compatible with both the hardware and software of HGX H100 systems. The H200 is also available in the NVIDIA GH200 Grace Hopper Superchip with HBM3e, allowing deployment across various data centre environments, including on-premises, cloud, hybrid-cloud, and edge.

NVIDIA’s extensive global ecosystem of partner server manufacturers, including ASRock Rack, ASUS, Dell Technologies, Eviden, GIGABYTE, Hewlett Packard Enterprise, Ingrasys, Lenovo, QCT, Supermicro, Wistron, and Wiwynn, can upgrade their existing systems with the H200.

The HGX H200, powered by NVIDIA NVLink and NVSwitch high-speed interconnects, is designed for high performance across a range of application workloads, including LLM training and inference for models exceeding 175 billion parameters.

An eight-way HGX H200 provides over 32 petaflops of FP8 deep learning compute and 1.1 TB of aggregate high-bandwidth memory for generative AI and HPC applications.
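The aggregate figures follow from simple arithmetic on the per-GPU numbers, as the Python sketch below illustrates. The helper names are hypothetical. The model footprints are weights-only estimates that assume 1 byte per parameter for FP8 and 2 bytes for FP16, and they ignore KV cache, activations, and any training state, so they are a lower bound rather than a sizing guide.

```python
# Figures quoted in the article.
GPUS_PER_BOARD = 8
H200_MEMORY_GB = 141
BOARD_FP8_PFLOPS = 32  # the article says "over 32", so treat as a lower bound


def aggregate_memory_gb(gpus: int, per_gpu_gb: int) -> int:
    """Total high-bandwidth memory across all GPUs on the board."""
    return gpus * per_gpu_gb


def weights_gb(params_billions: float, bytes_per_param: int) -> float:
    """Weights-only footprint in GB (1 GB = 1e9 bytes). Hypothetical helper."""
    return params_billions * bytes_per_param


total_gb = aggregate_memory_gb(GPUS_PER_BOARD, H200_MEMORY_GB)
per_gpu_pflops = BOARD_FP8_PFLOPS / GPUS_PER_BOARD

print(f"Aggregate memory: {total_gb} GB (~{total_gb / 1000:.1f} TB)")
print(f"FP8 compute per GPU: at least {per_gpu_pflops:.0f} petaflops")

# ASSUMPTION: bytes per parameter for each precision, weights only.
for model, params_b in [("70B model", 70), ("175B model", 175)]:
    for precision, nbytes in [("FP8", 1), ("FP16", 2)]:
        need = weights_gb(params_b, nbytes)
        fits_one = need <= H200_MEMORY_GB
        fits_board = need <= total_gb
        print(f"{model} @ {precision}: {need:.0f} GB "
              f"(fits one GPU: {fits_one}, fits board: {fits_board})")
```

Eight GPUs at 141 GB each give 1,128 GB, which rounds to the 1.1 TB quoted above. On this weights-only basis, a 175-billion-parameter model at FP16 needs about 350 GB, more than a single GPU holds but well within the board's aggregate memory.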

When paired with NVIDIA Grace CPUs over an ultra-fast NVLink-C2C interconnect, the H200 forms the GH200 Grace Hopper Superchip with HBM3e, an integrated module designed for giant-scale HPC and AI applications.