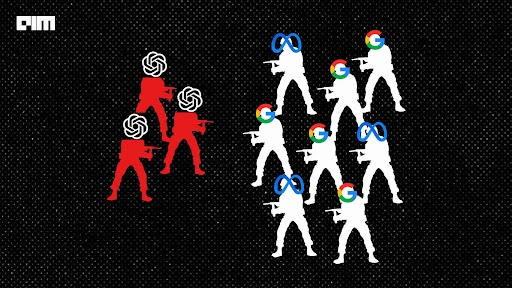

With a lean workforce of about 375 people, San Francisco-based OpenAI has built an impressive record of breakthroughs in AI, especially in the past two years. CEO Sam Altman recently took to Twitter to applaud, and “not brag” about, the company’s talent density. The Microsoft-backed company’s ability to produce such significant results with a relatively small team is a testament to its innovative approach and cutting-edge research.

Small Team, Big Impact

OpenAI gave the world ChatGPT, GPT-3.5, DALL-E, Codex, and MuseNet, among others. The AI research lab also released 13 research publications in 2022, up from nine in 2021.

OpenAI knows how to channel the full potential of its employees towards a common goal. This can be traced to the company culture, which prioritizes teamwork and impact, fostering an environment in which employees are encouraged to take creative risks in pursuit of advancements in AI. The organization places a strong emphasis on collaboration and open communication, and promotes diversity and inclusivity among its workforce.

Deep Research, Low Noise

In contrast, Google’s DeepMind has over 1,000 employees, nearly three times as many as OpenAI. While DeepMind is mostly research-oriented, OpenAI focuses on research as well as on turning its work into monetizable products quickly. With its larger team, DeepMind has produced 30 times as many research papers as OpenAI over the past three years, covering a more diverse range of topics.

DeepMind rose to fame with AlphaGo, its Go-playing program that defeated Lee Sedol, one of the world’s top Go players. It has also moved into health and the life sciences with projects like AlphaFold, its 3D protein-structure prediction model.

However, DeepMind does not offer a publicly available API for general use. Instead, it is known to form partnerships with companies and organizations that give access to its algorithms via APIs or other means, typically for specific, narrow use cases. For example, in 2019, DeepMind announced a partnership with Google Cloud to make some of its AI models available on the Google Cloud platform. These models are available via an API and are intended for use in healthcare and life sciences research.

With DeepMind’s AlphaFold and, later, Meta’s ESM, AI tackled protein folding, a 50-year-old grand challenge in biology.

AlphaFold 2 and similar models use multiple sequence alignments (MSAs) and templates of related proteins to achieve atomic-resolution structure prediction. ESMFold, by contrast, predicts structure from a single input sequence by leveraging the internal representations of its language model. The release of these models has allowed many researchers and developers to build on them. MIT researchers, for instance, used AlphaFold to test whether existing computer models could figure out how antibacterial compounds work.

Meta AI, on the other hand, introduced ‘Galactica’, whose public demo had to be taken down just three days after launch owing to safety and accuracy concerns. According to Meta’s chief AI scientist Yann LeCun, LLMs are reliable as writing assistants but not when used as search engines to answer queries. Reinforcement learning (RL), he added, only mitigates the mistakes without fixing the underlying problem.

Fueling Startups

One thing is certain: Google and Meta have been avid contributors to the open-source community, and their research and technologies have helped thousands of tech startups get off the ground. OpenAI’s flagship chatbot ChatGPT, for instance, still builds on the Transformer architecture, introduced in ‘Attention Is All You Need’, a paper Google researchers published in 2017.

Creating a high-impact research paper is a delicate balance between innovation and validation. It requires not only a novel idea, but also the ability to prove its validity through rigorous experimentation, in the face of numerous failures. In contrast, the development of a successful product depends on technical proficiency and market relevance, with a focus on product-market fit taking precedence over technical innovation.

With ChatGPT and its other offerings, OpenAI has made significant strides in navigating the complex landscape of product development. Often criticized as not particularly open-source friendly, OpenAI makes money by commercializing its models through its APIs. It uses a pay-per-use model, billing developers for the number of tokens (chunks of text) that their requests and the model’s responses consume.
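To make the pay-per-use model concrete, here is a minimal Python sketch of how a per-token bill might be estimated. The function name `estimate_cost` and the prices used are hypothetical placeholders, not OpenAI’s actual rates; the sketch only assumes that prompt (input) and completion (output) tokens are each billed at a fixed rate per 1,000 tokens.

```python
# Hypothetical per-token billing estimate. The rates passed in below are
# placeholders for illustration, not OpenAI's actual prices.
def estimate_cost(prompt_tokens: int, completion_tokens: int,
                  price_per_1k_prompt: float,
                  price_per_1k_completion: float) -> float:
    """Return the estimated charge in dollars for a single API call."""
    if prompt_tokens < 0 or completion_tokens < 0:
        raise ValueError("token counts must be non-negative")
    # Each side of the exchange is billed per 1,000 tokens consumed.
    prompt_cost = prompt_tokens / 1000 * price_per_1k_prompt
    completion_cost = completion_tokens / 1000 * price_per_1k_completion
    return prompt_cost + completion_cost


# Example: 1,500 prompt tokens and 500 completion tokens at a
# hypothetical flat rate of $0.002 per 1,000 tokens.
cost = estimate_cost(1500, 500, 0.002, 0.002)
print(f"${cost:.4f}")  # $0.0040
```

Because the bill scales with tokens rather than with a flat subscription, longer prompts and longer answers cost proportionally more. If a provider quotes rates per million tokens instead, only the divisor would change.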

Interestingly, many employees have left OpenAI to establish their own firms. One prime example is Anthropic, which recently raised over $700 million in investment. Cofounded by former OpenAI employee Dario Amodei, Anthropic has developed an AI system named Claude that is comparable to OpenAI’s ChatGPT but differs from it in several significant ways.

The list of ventures founded by OpenAI alumni also includes Pilot, Covariant, Adept, Living Carbon, Quill (acquired by Twitter), Daedalus, and others. In the last five years, close to 30 employees, dubbed the ‘OpenAI Mafia’, have left to start their own AI ventures. A large majority of them held senior or mid-level leadership roles, though some came from junior positions. In 2021, Anish Athalye, a former research intern at OpenAI, started Cleanlab, a data-centric AI platform that automatically finds and fixes errors in ML datasets. Taehoon Kim, a former research engineer at OpenAI, recently founded Symbiote AI, a real-time 3D avatar generation platform.

OpenAI has also set up a Startup Fund to identify talent early and support founders as they build their companies.

AI Labs are Codependent

While OpenAI leads the pack in product deployment, outpacing contemporaries such as DeepMind and Meta AI, those labs have contributed more to research, which has in turn given rise to several companies, OpenAI among them.

In the world of AI, collaboration is crucial. Microsoft and OpenAI both recognize this and have chosen to use Meta’s PyTorch, while PyTorch in turn uses OpenAI’s Triton (PyTorch 2.0’s compiler generates Triton kernels for GPUs). However, despite the potential benefits of open science, OpenAI’s own approach to sharing its algorithms remains restrictive.

Solving bigger problems requires healthy cooperation, and even the biggest names in AI agree that collaboration is key.

In an exclusive interaction with AIM, Yoshua Bengio, one of the founding fathers of deep learning, said that learning from others’ insights and brainstorming with fellow scientists and his students is very powerful. “If I was doing my thing alone, I don’t think I would have achieved what I have. So, it’s not only like an intellectual thing, but when we’re together trying to understand or figure out something, we are motivated by the energy of others. We want to find solutions to problems. Especially when you’re younger, all of these human social factors of the collaboration really make a difference,” he added.

Sam Altman may question whether AI labs can get along, but OpenAI itself is not open-source friendly. It offers limited to no access to its algorithms, releasing them to the public only through its API.

But does OpenAI actually care?