The era of using a CPU for every computational task is long gone. At Google I/O 2018, the search engine giant announced a new generation of its Tensor Processing Unit (TPU), which will help turbocharge its various products. The TPUs will also be available on Google Cloud Platform to enterprises and machine learning researchers for a fee. Sundar Pichai, the CEO of Google, announced that the new TPUs are at least eight times more powerful than the previous generation and reach up to 100 petaflops in performance. Reportedly, he also underscored that high-performance ML is a key differentiator for Google, and that this holds for its Google Cloud customers as well.

As tech companies realised that Moore’s Law could no longer be relied on for performance gains, they turned to designing their own chips. Google, along with other tech giants like Microsoft and Tesla, has started building its own silicon. Yann LeCun, Facebook’s AI chief, has said, “The amount of infrastructure if we use the current type of CPU is just going to be overwhelming.” LeCun went on to warn chip vendors, saying, “If they don’t, then we’ll have to go with an industry partner who will build hardware to specs, or we’ll build our own.” Google is also giving out free trial accounts, and TPU v3 is available on Google Cloud in alpha.

History And The Need For Tensor Processing Unit

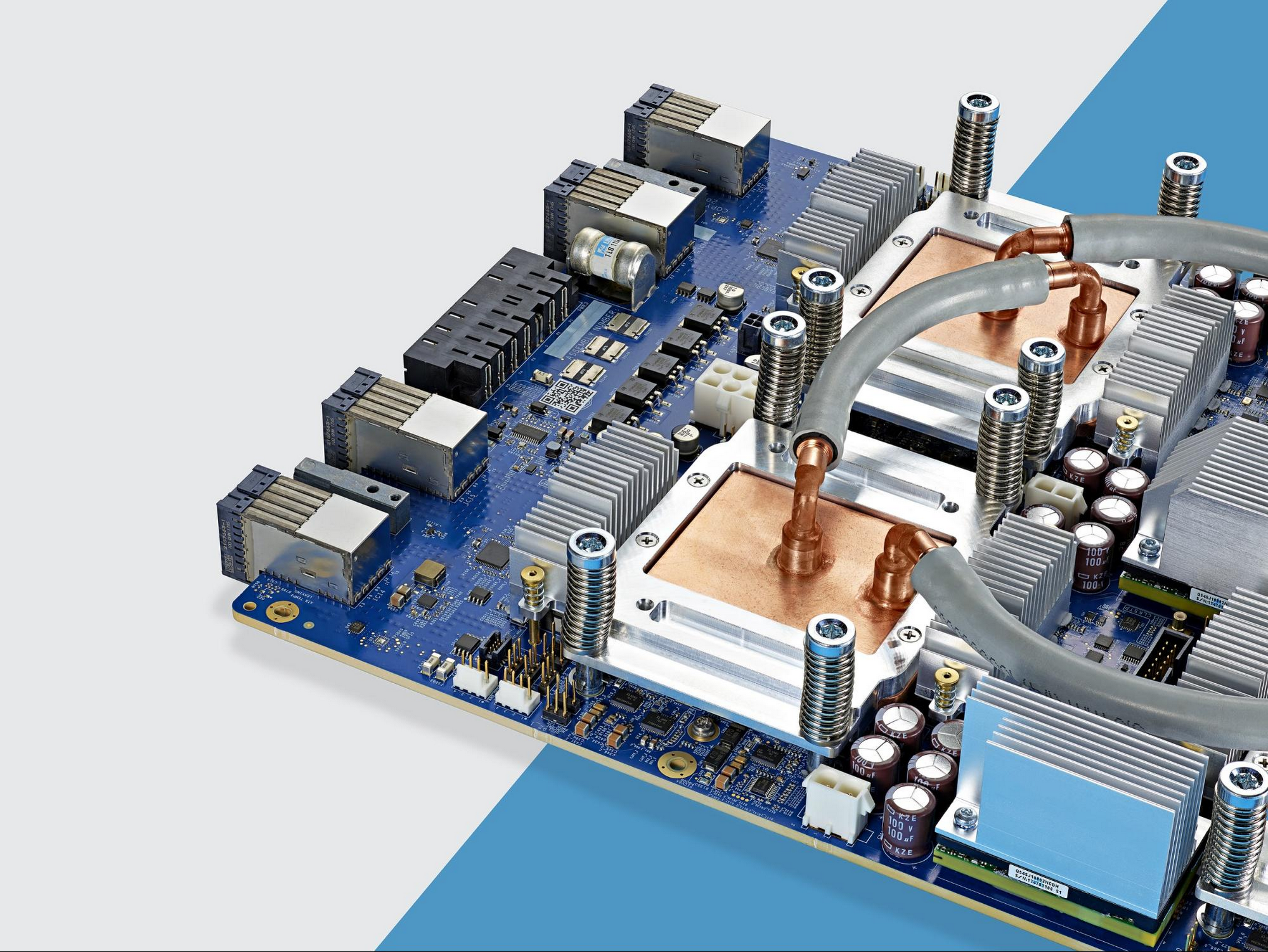

TPUs are application-specific integrated circuits (ASICs) custom-built for the computation-heavy mathematics of ML tasks. They were designed and deployed by an internal team at Google and, as mentioned earlier, are used in multiple Google products. They are designed around how neural networks and other deep learning algorithms work. TPUs also support distributed training, leveraging high-performance computing technologies. John Leroy Hennessy, the legendary computer scientist known for his work in computer architecture, believes that we live in an era where we need to create task-specific chips.

Deep Learning And CPUs

Deep learning is built on neural networks, in which neurons are arranged in many layers. There are three distinct layer groups: the input layer, the hidden layers and the output layer. A “weight” is associated with each connection between neurons. The crux of learning in a neural network is learning these weights. In large networks, the number of weights, or “parameters”, that need to be figured out can run into the millions or even billions.
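To get a feel for how quickly the weights add up, here is a minimal sketch that counts one weight per connection in a fully connected network. The layer sizes are hypothetical, chosen only for illustration, and bias terms are ignored.

```python
def count_weights(layer_sizes):
    """Count connection weights in a fully connected network.

    Every neuron in one layer connects to every neuron in the next,
    so each pair of adjacent layers contributes (size_a * size_b) weights.
    """
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical small network: 784 inputs, two hidden layers, 10 outputs.
small_net = [784, 512, 512, 10]

# Hypothetical large network: 65 layers of 4096 neurons each.
large_net = [4096] * 65

print(count_weights(small_net))  # 668672
print(count_weights(large_net))  # 1073741824
```

Even the small example needs well over half a million weights, and the larger one crosses a billion.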

For decades, ML researchers ran such large computations on CPUs. CPUs are general-purpose processors: they can do simple maths calculations, handle database entries, and even run the engines of an aircraft. But because they must handle so many kinds of tasks, they are not designed around knowing what instruction will come next, and hence are slow for this workload. A neural network follows a well-defined algorithm and its future computations are very predictable, yet a CPU, because of its fundamental design, cannot exploit this predictability and hence fails to provide a speed-up.

Deep Learning And TPUs

Compare this to a TPU, which is purpose-built for ML applications where billions of parameters need to be calculated. Google calls this a “domain-specific architecture”: it replaced the general-purpose CPU with a matrix processor built only for neural network workloads. Unlike CPUs, TPUs are not general-purpose. They can’t run word processors and other everyday applications, but they do one thing well: run billions of multiplications and additions to support neural networks, with an emphasis on power saving. This is possible because TPUs are able to sidestep the von Neumann bottleneck. The TPU does only one thing and hence can predict what kind of operations it will receive in the next instruction.

Google says, “…We were able to place thousands of multipliers and adders and connect them to each other directly to form a large physical matrix of those operators. This is called systolic array architecture. In case of Cloud TPU v2, there are two systolic arrays of 128 x 128, aggregating 32,768 ALUs for 16-bit floating point values in a single processor.”

The TPU first loads the parameters from memory into the matrix of multipliers and adders. Then the TPU reads the data from memory. As execution proceeds, each multiplication result is passed on to the next multiplier, which adds it to a running sum, so the output is the summation of all multiplications between data and parameters. No memory access is needed during this process, which delivers massive improvements in performance.
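The data flow described here can be sketched as a toy simulation. This is an illustrative model under simplifying assumptions, not Google's actual hardware design: each cell of a grid holds one parameter, data values move one cell to the right per cycle, and partial sums move one cell down per cycle. The function name `systolic_matmul` and the register layout are hypothetical.

```python
def systolic_matmul(X, W):
    """Multiply X (M x K) by W (K x N) on a simulated K x N grid of cells.

    Cell (k, n) keeps parameter W[k][n] in place. Intermediate values live
    only in the cells' registers; no memory is touched for them.
    """
    M, K, N = len(X), len(W), len(W[0])
    act = [[0.0] * N for _ in range(K)]   # data value held in each cell
    psum = [[0.0] * N for _ in range(K)]  # partial sum held in each cell
    out = [[0.0] * N for _ in range(M)]

    for t in range(M + K + N - 2):        # cycles until the last sum drains
        new_act = [[0.0] * N for _ in range(K)]
        new_psum = [[0.0] * N for _ in range(K)]
        for k in range(K):
            for n in range(N):
                if n > 0:
                    a_in = act[k][n - 1]          # data from the left neighbour
                else:
                    m = t - k                     # row k is fed k cycles late
                    a_in = X[m][k] if 0 <= m < M else 0.0
                p_in = psum[k - 1][n] if k > 0 else 0.0  # sum from above
                new_act[k][n] = a_in
                new_psum[k][n] = p_in + W[k][n] * a_in   # multiply and add
        act, psum = new_act, new_psum
        for n in range(N):                # finished sums leave the bottom row
            m = t - (K - 1) - n
            if 0 <= m < M:
                out[m][n] = psum[K - 1][n]
    return out


print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
# [[19.0, 22.0], [43.0, 50.0]]

# ALU count from the quoted Cloud TPU v2 figures: two 128 x 128 arrays.
alus_per_processor = 2 * 128 * 128
print(alus_per_processor)  # 32768
```

Each input value is read from memory once and then reused by every cell in its row, which is where the saving in memory traffic comes from.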

Conclusion

The achievements of the TPU underline the impact and importance of “domain-specific architectures”. Besides saving other resources, TPUs can save money for enterprises. Stanford University’s DAWNBench contest, which closed in April 2018, reported that the lowest training cost on non-TPU processors was $72.40 (for the task of training a ResNet-50 to 93% accuracy on ImageNet using spot instances). The same training on Google Cloud TPU v2 cost around $12.87, less than one-fifth of the non-TPU cost. This is the power of domain-specific architecture for you. Hats off, Google.
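For readers who want to check the cost comparison, the arithmetic is a two-line calculation using only the two DAWNBench figures quoted above (the variable names are ours):

```python
non_tpu_cost = 72.40  # lowest reported non-TPU training cost, in USD
tpu_cost = 12.87      # reported Cloud TPU v2 training cost, in USD

ratio = tpu_cost / non_tpu_cost    # fraction of the non-TPU cost
savings = non_tpu_cost / tpu_cost  # how many times cheaper

print(f"{ratio:.3f}")    # 0.178
print(f"{savings:.1f}")  # 5.6
```

A ratio of about 0.178 is indeed below one-fifth (0.2), or roughly 5.6 times cheaper.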