In his ten years as a data scientist, Rajiv Shah has worn multiple hats – a sales engineer at DataRobot, a solutions architect at Snorkel AI, a tech evangelist and now an ML engineer at Hugging Face. Even as he works with enterprise partners to monetise Hugging Face products, Shah continues to serve as adjunct assistant professor at the University of Illinois.

Analytics India Magazine caught up with Rajiv to understand how he keeps up with the breakneck speed of AI innovation, the ethical questions surrounding open-source research and doomsdayers.

AIM: Ever since Meta’s LLaMA, we’ve been seeing a wave of open-source models, and a lot of that is owed to the open-source community. How important is open source in the picture?

Rajiv: I’ve never seen so much participation in the open-source movement around AI. For the last 10 years, we’ve had various open-source AI tools, but the size of the community and the level of interest are so huge now. I see it every time I look at TikTok or LinkedIn. Even at the Hugging Face meetup that happened in San Francisco about a month ago, 5,000 people showed up.

And we’re starting to see that progress in terms of building useful datasets for training these LLMs. Apart from the pre-existing ones, there are a bunch of new organisations now, like Together.xyz, which is starting to build a model and has just shared its RedPajama dataset. There are also H2O.ai, Databricks and now Stability AI, all of which have open-sourced models. So, there are a lot of companies getting involved now to try and improve the state of open source.

AIM: There are ethical concerns around how so many of these models have been trained on recycled data from, say, ShareGPT or the outputs of OpenAI models. How do you view these issues?

Rajiv: There’s a whole can of worms when we start looking at the data that’s being used to train these models. If you step back and look, there’s a host of lawsuits right now: against Stability AI, by people whose content was fed into its models, and against GitHub Copilot, over code that the plaintiffs say should not have been used in its models. This is why there are now a bunch of companies like Reddit and StackOverflow that are holding their data back.

There’s another part where some of these models are released for “educational purposes,” but then you see all these companies jumping on to them and using them. So, you really wonder whether they’re made for educational or commercial purposes.

Then, there’s another category where the outputs of these GPT models are used to train other models because they are high-quality. Needless to say, this is a very grey area. It’s not clear that this is acceptable, either in terms of copyright law or OpenAI’s Terms of Service.

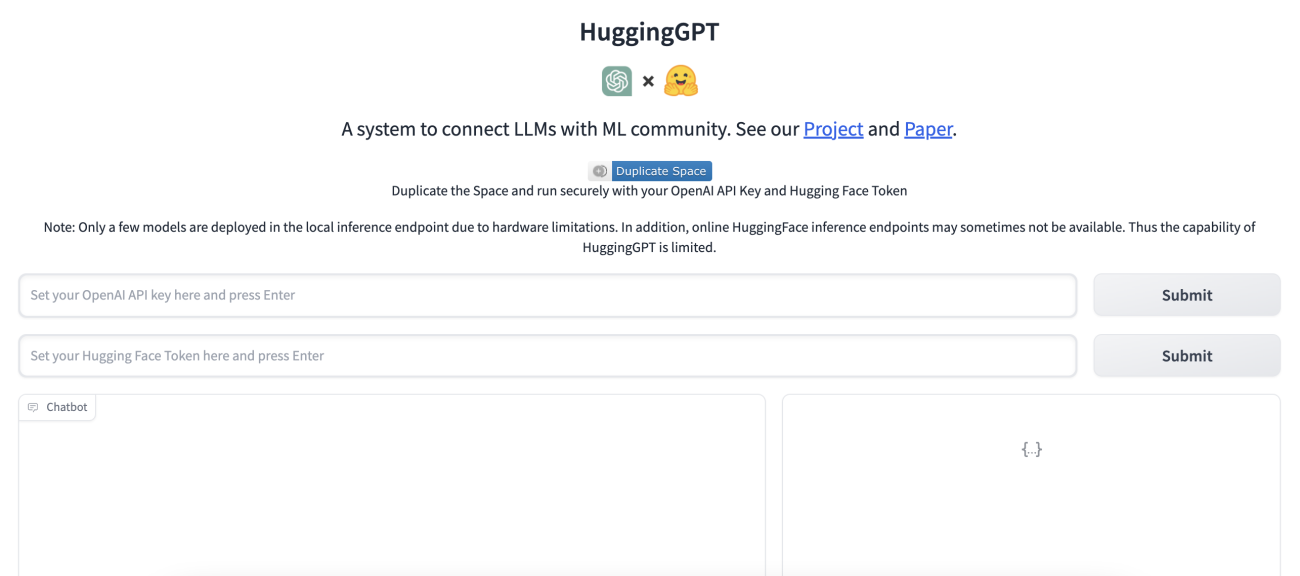

AIM: HuggingChat was just released on the Hugging Face platform. How does the chatbot fill in the gaps left open by ChatGPT?

Rajiv: First off, I think it’s important to clarify that what we really introduced was a UI for chatting, and you can plug any kind of model into it. So, the model actually isn’t trained by Hugging Face; it’s the Open Assistant group that built and trained the model. I know it’s easy to conflate these things because it seems like we are creating a rival to OpenAI products. But what we introduced was an open-source chat interface, so people who want to use it can do so by plugging in their own model. As for hallucinations and all the other issues, it’s pretty well known that all LLMs are very susceptible to these problems.

AIM: The open letter which proposed the six-month pause created a lot of noise. What do you think about that?

Rajiv: I never took the moratorium very seriously. There weren’t policy people involved in it, so it didn’t seem very realistic. On the other hand, what Europe is doing in banning ChatGPT and similar models seems to stand on much firmer ground, because it asks questions around the data, consent for obtaining the data, privacy and other important issues. I see that as way more valid.

These models have just ripped data from the internet wholesale, which has been acceptable in the US so far, but as we can see, Europe and other places will not agree with this kind of behaviour.

AIM: What can you tell us about the kind of partnerships Hugging Face is looking at?

Rajiv: Hugging Face really believes in the democratisation of AI, which is why we don’t want AI models in a moratorium or closed off; we believe there’s value in having transparency. Now, there are many other companies that are aligned with that mission, like AWS, which is one of our core partners. One of our recent partners, Databricks, also leans towards open source. There are also non-profit organisations like EleutherAI that we’ve worked with to build open-source models.

As a whole, we just want to see the open-source community grow, and we will do anything we can to build more of these bridges or enrich these connections.

AIM: There’s an entire section of researchers who are serious doomsdayers and believe that these models shouldn’t even be open-sourced. How do you respond to that?

Rajiv: If we step back and look at what humans have made over the last 100 or 200 years, we’ll find that engineers have built many dangerous technologies across history. So, I think sometimes the AI industry is just a little bit too into itself in that sense.

A lot of the concerns that have been raised are pretty theoretical and presumptuous. I mean, I’m still waiting for my self-driving car, which we were promised 10 years ago! So I think it’s healthy to be a little sceptical. Sure, there’s a probability of real dangers, but compared with all the other dangers and issues we have on the planet right now, AI is pretty low on the list.

AIM: I am really curious to understand how you keep up with everything that’s going on right now.

Rajiv: I know, right! It just feels like every day and every week there’s a new thing. The hard part about this is that we are at an inflection point where things are changing really fast. But we’ve been through events like this before, where there’s a lot of change for a couple of months and then things eventually slow down as we start to understand more. People slowly get used to the tech and aren’t as amazed later.

But like you said, it’s such a radical change that will affect so many different domains and the questions around it are endless. There are legal questions, the impact on enterprises, jobs, society. We could honestly talk about this forever.