Deep learning implements structured machine learning algorithms using artificial neural networks. These algorithms let the machine learn by itself and establish new parameters that help it make and execute decisions. Deep learning is a subset of machine learning that uses multi-layered artificial neural networks. This lets it deliver high accuracy in tasks such as speech recognition, object detection and language translation, among many other modern use cases. One of the most intriguing implementations in artificial intelligence for creating deep learning models is the Boltzmann Machine.

In this article, we will try to understand what exactly a Boltzmann Machine is, how it can be implemented and its uses. The major points that we are going to discuss in this article are given below.

Table Of Contents

- What is a Boltzmann Machine?

- Uses Of Boltzmann Machine

- Components Of Boltzmann Machine

- Creating Boltzmann Machine In Python

- Conclusion

What is a Boltzmann Machine?

A Boltzmann Machine is a type of recurrent neural network in which every node makes a binary (on or off) decision and has its own bias. Several restricted Boltzmann machines can be stacked to build more sophisticated systems such as a deep belief network. The network is named after the Austrian physicist Ludwig Boltzmann, whose Boltzmann distribution dates from the late 19th century. It was introduced in the 1980s by Geoffrey Hinton and Terry Sejnowski. It borrows the idea of energy from statistical physics: the network settles toward low-energy states, which are its most probable ones. It consists of a network of symmetrically connected, neuron-like units that decide stochastically whether to be active or not.
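To make the energy idea concrete, consider binary states s, symmetric weights w_ij and biases b_i. A Boltzmann machine assigns each state an energy E(s) = -Σ_{i<j} w_ij s_i s_j - Σ_i b_i s_i and visits states with probability proportional to exp(-E(s)/T). The sketch below uses made-up weights for a hypothetical three-unit network. It enumerates every state to show that the lowest-energy state is the most probable one. Brute-force enumeration only works for tiny networks, so this is an illustration, not how real Boltzmann machines are run.

```python
import numpy as np

def energy(s, W, b):
    """Energy of binary state s in a fully connected Boltzmann machine.

    W is symmetric with a zero diagonal; the 0.5 factor counts each
    pair (i, j) once, giving -sum_{i<j} w_ij s_i s_j - sum_i b_i s_i.
    """
    return -0.5 * s @ W @ s - b @ s

def boltzmann_distribution(W, b, T=1.0):
    """Enumerate all 2^n binary states and return them with P(s) ∝ exp(-E(s)/T)."""
    n = len(b)
    states = np.array([[(k >> i) & 1 for i in range(n)] for k in range(2 ** n)], dtype=float)
    energies = np.array([energy(s, W, b) for s in states])
    weights = np.exp(-energies / T)
    return states, weights / weights.sum()

# Hypothetical network: units 0 and 1 excite each other, unit 2 inhibits both.
W = np.array([[0.0, 2.0, -1.0],
              [2.0, 0.0, -1.0],
              [-1.0, -1.0, 0.0]])
b = np.array([-0.5, -0.5, 0.5])

states, probs = boltzmann_distribution(W, b)
for s, p in zip(states, probs):
    print(s.astype(int), round(float(p), 3))
```

Raising T flattens the distribution and lowering it concentrates probability on the low-energy states. This is the thermodynamic behaviour the name refers to.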

Boltzmann Machines have a learning algorithm that lets them discover interesting features in datasets composed of binary vectors. The algorithm is slow in networks with many layers of feature detectors. It becomes fast in restricted Boltzmann machines, which have a single layer of feature detectors. Boltzmann machines can be applied to two quite different computational problems:

- **Search problem.** The weights on the connections are fixed and represent the cost function of an optimization problem. The machine's stochastic dynamics then let it sample binary states with low cost.
- **Learning problem.** The machine is shown a set of binary data vectors and must find weights such that those data vectors are good solutions to the optimization problem the weights define.

To solve a learning problem, Boltzmann machines make many small updates to their weights, and each update requires solving many different search problems.
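The search problem can be sketched as follows, assuming fixed symmetric weights with a zero diagonal. Each unit is switched on with probability σ(net input / T), which is Gibbs sampling at temperature T. Gradually lowering T lets the machine settle into low-cost states; this is simulated annealing, a standard companion technique. The function name `anneal` and the temperature schedule are illustrative assumptions, not part of the original article.

```python
import numpy as np

def anneal(W, b, temps, sweeps_per_temp=10, rng=None):
    """Search for a low-energy state of a Boltzmann machine with fixed weights.

    Each unit is updated in turn and switches on with probability
    sigmoid(net_input / T), i.e. Gibbs sampling at temperature T.
    Running through a decreasing list of temperatures is simulated annealing.
    """
    rng = np.random.default_rng(0) if rng is None else rng
    n = len(b)
    s = rng.integers(0, 2, size=n).astype(float)   # random starting state
    for T in temps:
        for _ in range(sweeps_per_temp):
            for i in range(n):
                net = W[i] @ s + b[i]              # zero diagonal: s_i does not feed itself
                p_on = 1.0 / (1.0 + np.exp(-net / T))
                s[i] = 1.0 if rng.random() < p_on else 0.0
    return s

# Hypothetical three-unit cost function (same made-up weights as a toy example).
W = np.array([[0.0, 2.0, -1.0],
              [2.0, 0.0, -1.0],
              [-1.0, -1.0, 0.0]])
b = np.array([-0.5, -0.5, 0.5])
print(anneal(W, b, temps=np.geomspace(5.0, 0.05, 30)))
```

Because the updates are stochastic, different runs can end in different local minima. Cooling more slowly makes reaching the global minimum more likely.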

Uses Of Boltzmann Machine

The main purpose of a Boltzmann Machine is to find good solutions to an optimization problem. It adjusts the weights and other quantities that define the problem assigned to it. Supervised methods are used when the main objective is to learn a mapping from the attributes in the data to target variables. When the objective is instead to identify an underlying structure or pattern within the data, unsupervised learning methods are more useful, and this is how Boltzmann machines are usually applied. Some of the most popular unsupervised learning tasks are clustering, dimensionality reduction, anomaly detection and building generative models.
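For anomaly detection, one common approach is to score inputs with the free energy of a trained restricted Boltzmann machine. This is an assumption here; the article does not specify how its model would be used. The free energy is F(v) = -b·v - Σ_j log(1 + exp(a_j + W_j·v)). Since p(v) is proportional to exp(-F(v)), an unusually high free energy marks an input the model considers unlikely. The function names, the random parameters and the layout are illustrative: W has shape hidden × visible, a is the hidden bias and b is the visible bias.

```python
import numpy as np

def free_energy(v, W, a, b):
    """Free energy of visible vector v in a binary RBM.

    W: (n_hidden, n_visible) weights, a: hidden bias, b: visible bias.
    F(v) = -b.v - sum_j softplus(a_j + W_j.v); lower F means v is more probable.
    np.logaddexp(0, x) computes log(1 + exp(x)) without overflow.
    """
    return -b @ v - np.logaddexp(0.0, a + W @ v).sum()

def anomaly_scores(V, W, a, b):
    """Hypothetical helper: free energy of each row of V (higher = more unusual)."""
    return np.array([free_energy(v, W, a, b) for v in V])

# Illustrative parameters only; in practice W, a, b come from a trained RBM.
rng = np.random.default_rng(1)
W = rng.normal(size=(3, 4))
a = rng.normal(size=3)
b = rng.normal(size=4)
V = np.array([[1.0, 0.0, 1.0, 1.0],
              [0.0, 0.0, 0.0, 0.0]])
print(anomaly_scores(V, W, a, b))
```

A threshold for flagging anomalies would typically be chosen from the scores of known-normal data; that choice depends on the application.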

Each of these techniques detects a different kind of pattern: identifying latent groupings, finding irregularities in the data, or generating new samples that resemble the available data. These networks can also be stacked layer-wise to build deep neural networks that capture highly complicated statistics. Restricted Boltzmann Machines have also gained popularity in imaging and image processing. Variants with real-valued (Gaussian) visible units can model the continuous data common to natural images. They have also been used to solve complicated quantum-mechanical many-particle problems and classical statistical physics problems such as the Ising and Potts models.
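Layer-wise stacking is usually done greedily. One RBM is trained on the data, and its hidden-unit probabilities then become the training data for the next RBM. The sketch below uses NumPy and one-step contrastive divergence (CD-1). The learning rate, epoch count, full-batch updates and random toy data are assumptions chosen for brevity, not settings from the article.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def train_rbm(data, n_hidden, epochs=50, lr=0.1, seed=0):
    """Train a binary RBM with CD-1 on full batches.

    Returns (W, a, b): weights (n_hidden, n_visible), hidden bias, visible bias.
    """
    rng = np.random.default_rng(seed)
    n, n_visible = data.shape
    W = 0.01 * rng.standard_normal((n_hidden, n_visible))
    a = np.zeros(n_hidden)
    b = np.zeros(n_visible)
    for _ in range(epochs):
        v0 = data
        ph0 = sigmoid(v0 @ W.T + a)                       # p(h=1 | data)
        h0 = (rng.random(ph0.shape) < ph0).astype(float)  # sampled hidden states
        pv1 = sigmoid(h0 @ W + b)                         # reconstruction p(v=1 | h0)
        ph1 = sigmoid(pv1 @ W.T + a)                      # p(h=1 | reconstruction)
        # Positive (data) statistics minus negative (reconstruction) statistics.
        W += lr * (ph0.T @ v0 - ph1.T @ pv1) / n
        a += lr * (ph0 - ph1).mean(axis=0)
        b += lr * (v0 - pv1).mean(axis=0)
    return W, a, b

def stack_rbms(data, layer_sizes):
    """Greedy layer-wise stacking: each RBM learns from the layer below it."""
    layers, x = [], data
    for n_hidden in layer_sizes:
        W, a, b = train_rbm(x, n_hidden)
        layers.append((W, a, b))
        x = sigmoid(x @ W.T + a)   # hidden probabilities become the next layer's data
    return layers, x

# Hypothetical toy data: 100 binary vectors of length 12.
data = (np.random.default_rng(42).random((100, 12)) < 0.3).astype(float)
layers, top = stack_rbms(data, [8, 4])
print([W.shape for W, _, _ in layers], top.shape)
```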

Components Of Boltzmann Machine

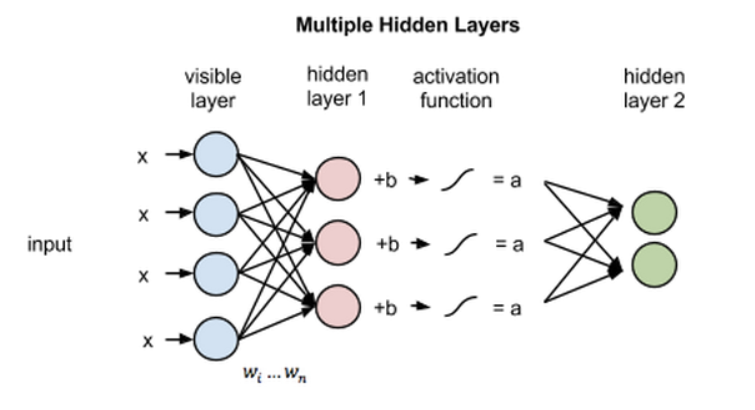

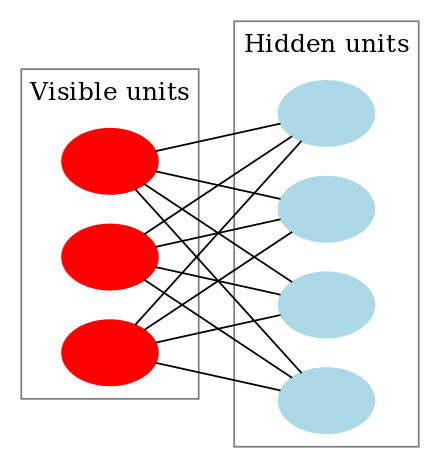

The architecture described here is that of the restricted Boltzmann machine (RBM), the variant most used in practice. It is a shallow, two-layer neural network that also serves as a building block of deep networks. The first layer is called the visible or input layer and the second is the hidden layer. Each layer consists of neuron-like units called nodes, and the nodes are where calculations take place. Every node is connected to every node in the other layer, but no two nodes of the same layer are linked. This absence of intra-layer connections is the restriction that gives the restricted Boltzmann machine its name; a general Boltzmann machine allows them. Each node processes its input and makes a stochastic decision about whether to transmit that input or not. When data is fed in, the network learns the parameters, the patterns in the data and the correlations between them on its own, without labels. This is why a Boltzmann Machine is described as an unsupervised deep learning model.
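The missing intra-layer connections have a useful consequence. With the RBM energy E(v, h) = -b·v - a·h - h·Wv, there are no hidden-hidden terms. Given the visible units, the hidden units are therefore independent, and p(h_j = 1 | v) = σ(a_j + W_j·v). The sketch below checks this numerically by summing over every hidden configuration of a small RBM. Its sizes and random parameters are purely illustrative.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def rbm_energy(v, h, W, a, b):
    """E(v, h) = -b.v - a.h - h.W.v: only visible-hidden pairs interact."""
    return -b @ v - a @ h - h @ W @ v

def p_h_given_v_bruteforce(v, W, a, b):
    """Exact p(h_j = 1 | v) by summing over all 2^n_hidden hidden vectors."""
    n_hidden = len(a)
    hs = np.array([[(k >> j) & 1 for j in range(n_hidden)]
                   for k in range(2 ** n_hidden)], dtype=float)
    weights = np.array([np.exp(-rbm_energy(v, h, W, a, b)) for h in hs])
    weights /= weights.sum()          # p(h | v) for every hidden configuration
    return weights @ hs               # marginal probability that each hidden unit is on

def p_h_given_v_factorized(v, W, a):
    """No hidden-hidden connections, so p(h | v) is a product of independent sigmoids."""
    return sigmoid(W @ v + a)

rng = np.random.default_rng(0)
W = rng.normal(size=(3, 5))           # 3 hidden, 5 visible units (illustrative)
a = rng.normal(size=3)
b = rng.normal(size=5)
v = np.array([1.0, 0.0, 1.0, 1.0, 0.0])
print(p_h_given_v_bruteforce(v, W, a, b))
print(p_h_given_v_factorized(v, W, a))
```

The two printed vectors agree. This factorization is what lets all hidden units be sampled in one matrix operation, as the PyTorch code in this article does.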

Once trained, the model can be used to monitor and flag abnormal behaviour that deviates from what it has learnt. The coefficients (weights) that modify the inputs are randomly initialized. Each visible node takes a low-level feature from an item in the dataset to be learned. At each hidden node, every input is multiplied by its weight, the products are summed and a bias is added. The result of those operations is fed into an activation function, which produces the node's output, also known as the strength of the signal passing through it. In a deep network built by stacking such layers, the outputs of the first hidden layer are passed as inputs to the second hidden layer. They continue through as many hidden layers as were created until they reach a final classifying layer. In a simple feed-forward pass, the nodes act much like an autoencoder. Learning is typically very slow in Boltzmann machines with many hidden layers. Large networks can take a long time to approach their equilibrium distribution, especially when the weights are large and that distribution is highly multimodal.
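The computation at a single layer can be sketched with NumPy. The layer sizes, input values and weight scale below are hypothetical. The point is the sequence of operations:

1. Take a weighted sum of the inputs.
2. Add a bias.
3. Pass the result through a sigmoid.
4. Make a stochastic on/off decision.

The first hidden layer's output then feeds a second layer.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

# Hypothetical sizes: 6 visible inputs, 4 nodes in hidden layer 1, 2 in hidden layer 2.
x = np.array([1.0, 0.0, 1.0, 1.0, 0.0, 1.0])   # low-level features of one item
W1 = rng.normal(scale=0.1, size=(4, 6))        # randomly initialized coefficients
a1 = np.zeros(4)                               # hidden-layer-1 biases
W2 = rng.normal(scale=0.1, size=(2, 4))
a2 = np.zeros(2)                               # hidden-layer-2 biases

# Weighted sum of inputs plus bias, passed through the sigmoid activation.
p_h1 = sigmoid(W1 @ x + a1)                    # node outputs (signal strengths)
h1 = (rng.random(4) < p_h1).astype(float)      # stochastic decision to fire or not
p_h2 = sigmoid(W2 @ h1 + a2)                   # layer 1's output feeds layer 2
print(p_h1, h1, p_h2)
```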

Creating Boltzmann Machine In Python

A Boltzmann Machine can be created in Python with the PyTorch library. Its architecture is defined with the same kind of tensor operations used to build any other neural network. For this example, I created a Restricted Boltzmann Machine and tracked its training and test loss. A Restricted Boltzmann Machine consists of a layer of visible units and a layer of hidden units with no visible-visible or hidden-hidden connections. It received a lot of attention after being proposed as the building block of the multi-layer learning architecture known as the Deep Belief Network.
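In equation form, the RBM energy and the two conditional probabilities used for sampling are given below. The notation follows the names in this article's PyTorch code: W has shape nh × nv, a is the hidden bias and b is the visible bias. The first conditional is what `sample_h` computes before drawing a Bernoulli sample, and the second is what `sample_v` computes.

```latex
E(\mathbf{v}, \mathbf{h}) = -\mathbf{b}^\top \mathbf{v} - \mathbf{a}^\top \mathbf{h} - \mathbf{h}^\top W \mathbf{v}

p(h_j = 1 \mid \mathbf{v}) = \sigma\Big(a_j + \sum_i W_{ji} v_i\Big), \qquad
p(v_i = 1 \mid \mathbf{h}) = \sigma\Big(b_i + \sum_j W_{ji} h_j\Big), \qquad
\sigma(x) = \frac{1}{1 + e^{-x}}
```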

The model is built with PyTorch tensors, and the activation function is the sigmoid. The imports also include `Variable` from `torch.autograd`, PyTorch's automatic-differentiation package. Since PyTorch 0.4, plain tensors support autograd directly, so that wrapper is no longer needed. Here are the main snippets of the example:

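```python
import numpy as np
import pandas as pd
import torch
import torch.nn as nn
import torch.nn.parallel
import torch.optim as optim
import torch.utils.data
from torch.autograd import Variable

class RBM():
    def __init__(self, nv, nh):
        # nv visible units, nh hidden units; parameters drawn from N(0, 1)
        self.W = torch.randn(nh, nv)   # weights, one row per hidden unit
        self.a = torch.randn(1, nh)    # hidden biases
        self.b = torch.randn(1, nv)    # visible biases

    def sample_h(self, x):
        # p(h = 1 | v) = sigmoid(v W^T + a), plus a Bernoulli sample of the hidden units
        wx = torch.mm(x, self.W.t())
        activation = wx + self.a.expand_as(wx)
        p_h_given_v = torch.sigmoid(activation)
        return p_h_given_v, torch.bernoulli(p_h_given_v)

    def sample_v(self, y):
        # p(v = 1 | h) = sigmoid(h W + b), plus a Bernoulli sample of the visible units
        wy = torch.mm(y, self.W)
        activation = wy + self.b.expand_as(wy)
        p_v_given_h = torch.sigmoid(activation)
        return p_v_given_h, torch.bernoulli(p_v_given_h)

    def train(self, v0, vk, ph0, phk):
        # Contrastive-divergence (CD-k) update. The article calls this method
        # without showing its body; this is the standard form of the update.
        self.W += (torch.mm(v0.t(), ph0) - torch.mm(vk.t(), phk)).t()
        self.b += torch.sum((v0 - vk), 0)
        self.a += torch.sum((ph0 - phk), 0)

# Training loop. training_set, nb_users, nb_visible, batch_size, nh=100 and the
# 10 Gibbs steps are hypothetical: the article does not show them. training_set
# is assumed to be a float tensor of 0/1 values with -1 marking missing entries.
rbm = RBM(nv=nb_visible, nh=100)
nb_epoch = 25
for epoch in range(1, nb_epoch + 1):
    train_loss = 0
    s = 0.
    for start in range(0, nb_users - batch_size, batch_size):
        v0 = training_set[start:start + batch_size]
        vk = v0.clone()
        ph0, _ = rbm.sample_h(v0)
        for k in range(10):             # k steps of Gibbs sampling
            _, hk = rbm.sample_h(vk)
            _, vk = rbm.sample_v(hk)
            vk[v0 < 0] = v0[v0 < 0]     # keep missing entries fixed
        phk, _ = rbm.sample_h(vk)
        rbm.train(v0, vk, ph0, phk)
        train_loss += torch.mean(torch.abs(v0[v0 >= 0] - vk[v0 >= 0]))
        s += 1.
    print('epoch: ' + str(epoch) + ' loss: ' + str(train_loss / s))
```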

Output:

```text
epoch: 1 loss: tensor(0.2473)
epoch: 2 loss: tensor(0.2495)
epoch: 3 loss: tensor(0.2440)
epoch: 4 loss: tensor(0.2373)
epoch: 5 loss: tensor(0.2421)
epoch: 6 loss: tensor(0.2445)
epoch: 7 loss: tensor(0.2477)
epoch: 8 loss: tensor(0.2475)
epoch: 9 loss: tensor(0.2456)
epoch: 10 loss: tensor(0.2439)
epoch: 11 loss: tensor(0.2466)
epoch: 12 loss: tensor(0.2467)
epoch: 13 loss: tensor(0.2475)
epoch: 14 loss: tensor(0.2427)
epoch: 15 loss: tensor(0.2484)
epoch: 16 loss: tensor(0.2480)
epoch: 17 loss: tensor(0.2465)
epoch: 18 loss: tensor(0.2475)
epoch: 19 loss: tensor(0.2436)
epoch: 20 loss: tensor(0.2474)
epoch: 21 loss: tensor(0.2438)
epoch: 22 loss: tensor(0.2460)
epoch: 23 loss: tensor(0.2476)
epoch: 24 loss: tensor(0.2459)
epoch: 25 loss: tensor(0.2425)
```

```python
print('test loss: ' + str(test_loss / s))
```

Output:

```text
test loss: tensor(0.2706)
```
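The loss printed above is a masked mean absolute error between the data v0 and the reconstruction vk, computed only over entries where v0 >= 0. Assume the observed entries are binary 0/1 values, which is consistent with the Bernoulli sampling in `sample_v`. Then each |v0 - vk| term is 1 exactly when an entry is reconstructed wrongly, so the loss is the fraction of observed entries the RBM gets wrong. Under that assumption, values around 0.24 to 0.27 mean roughly one in four observed entries is reconstructed incorrectly. The tiny tensors below are made up to show the calculation, with -1 assumed to mark missing entries; `masked_mae` is a hypothetical helper name.

```python
import torch

def masked_mae(v0, vk):
    """Mean absolute difference between data v0 and reconstruction vk,
    counting only observed entries (v0 >= 0); entries equal to -1 are ignored."""
    mask = v0 >= 0
    return torch.mean(torch.abs(v0[mask] - vk[mask]))

v0 = torch.tensor([[1., 0., -1., 1.],
                   [0., -1., 1., 1.]])   # -1 marks a missing entry (assumed encoding)
vk = torch.tensor([[1., 1., 0., 1.],
                   [0., 1., 0., 1.]])
print(masked_mae(v0, vk))  # tensor(0.3333): 2 of the 6 observed entries differ
```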

Conclusion

Boltzmann Machines can be considered an advanced form of neural network, with many useful properties and a wide range of applications in modern technology. Many variants exist, and improvements continue to be developed. Their energy-based formulation makes them useful both for optimization (search) problems and for unsupervised learning of generative models.

In this article, we looked at what a Boltzmann Machine is and what its architecture consists of. We also explored its uses and how it can be implemented in Python with the PyTorch library. I encourage the reader to explore the topic further, as there is still a lot to be discovered in the domain of Boltzmann Machines.

Happy Learning!