Machine learning has become an important component of the rapidly growing field of data science. Using statistical methods, algorithms are trained to build classifiers or prediction models that help uncover key insights in data mining and exploration projects. These insights then drive decision-making within applications and businesses, directly affecting their growth metrics. As the volume of data continues to grow, so does the demand for data scientists, who must identify relevant business questions and then use data exploration tools and techniques to answer them. Machine learning and deep learning methods help here: they can uncover hidden insights and help automate the resulting systems and technologies.

Machine learning, deep learning and neural networks are all subfields of artificial intelligence. More precisely, deep learning is a subfield of machine learning, and neural networks form the backbone of deep learning. As mentioned, machine learning algorithms are generally used to build prediction or classification systems. Based on the input data, which can be labelled or unlabelled, the algorithm estimates a pattern in the data. If the model's fit to the data points in the training set can be improved, its weights are adjusted to reduce the discrepancy between the known labels and the model's estimates. The algorithm repeats this evaluate-and-optimize process, updating the weights automatically until an accuracy threshold has been met.
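The evaluate-and-optimize loop described above can be sketched in plain Python. This is a minimal sketch, assuming a one-feature logistic regression trained by batch gradient descent on log loss; the function name `train_logistic`, the learning rate and the 95% accuracy threshold are illustrative choices, not part of any library.

```python
import math


def train_logistic(xs, ys, lr=0.5, target_acc=0.95, max_epochs=1000):
    """Fit p(y=1|x) = sigmoid(w*x + b), stopping once training accuracy reaches target_acc."""
    w, b = 0.0, 0.0
    acc = 0.0
    for epoch in range(1, max_epochs + 1):
        # Evaluate: accuracy of the current weights on the labelled training data.
        preds = [1 if w * x + b >= 0 else 0 for x in xs]
        acc = sum(p == y for p, y in zip(preds, ys)) / len(ys)
        if acc >= target_acc:
            return w, b, epoch, acc
        # Optimize: one gradient step on the average log loss, which shrinks the
        # gap between the known labels and the model's estimated probabilities.
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            p = 1.0 / (1.0 + math.exp(-(w * x + b)))
            grad_w += (p - y) * x
            grad_b += p - y
        w -= lr * grad_w / len(xs)
        b -= lr * grad_b / len(xs)
    return w, b, max_epochs, acc


xs = [-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
w, b, epochs, acc = train_logistic(xs, ys)
print(f"w={w:.4f}, b={b:.4f}, stopped after {epochs} epochs with accuracy {acc:.2f}")
```

Real libraries add refinements such as regularization, mini-batches and smarter stopping rules, but the evaluate, update, repeat structure is the same.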

Choosing the right machine learning algorithm for a problem depends on several factors, such as the size, quality and diversity of the data, and what answers the business wants to derive from it. Other factors to consider include the required accuracy, the training time, the number of parameters and the number of data points. Choosing the right algorithm is therefore a combination of the problem's needs and specifications, experimentation and the time available. Even experienced data scientists cannot reliably tell which algorithm will perform best without trying several of them.

Machine learning has revolutionized growth strategies in the business world. As emerging technology has created demand for new and better systems, the strengths and uses of machine learning have become self-evident. Classical machine learning techniques are often categorized by how an algorithm learns to become more accurate in its predictions. There are four basic approaches: supervised learning, unsupervised learning, semi-supervised learning and reinforcement learning. Machine learning is used in a wide range of applications; one of the best-known examples is the recommendation system, such as those that power Facebook's news feed and Amazon's product suggestions.
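The advice to experiment can be made concrete: score every candidate algorithm on the same held-out data and compare the results. The sketch below assumes a toy one-feature dataset and two deliberately simple, hypothetical candidates (a majority-class baseline and a 1-nearest-neighbour classifier) written in plain Python; in practice you would compare real learners, ideally with cross-validation.

```python
import random


def majority_class(train_x, train_y, test_x):
    """Baseline: always predict the most frequent training label."""
    majority = max(set(train_y), key=train_y.count)
    return [majority] * len(test_x)


def one_nearest_neighbour(train_x, train_y, test_x):
    """Predict the label of the closest training point (1-D features)."""
    preds = []
    for x in test_x:
        nearest = min(range(len(train_x)), key=lambda j: abs(train_x[j] - x))
        preds.append(train_y[nearest])
    return preds


def accuracy(pred, true):
    return sum(p == t for p, t in zip(pred, true)) / len(true)


def compare(algorithms, xs, ys, test_fraction=0.3, seed=0):
    """Score each algorithm on the same random holdout split."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    n_test = int(len(xs) * test_fraction)
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    train_x = [xs[i] for i in train_idx]
    train_y = [ys[i] for i in train_idx]
    test_x = [xs[i] for i in test_idx]
    test_y = [ys[i] for i in test_idx]
    return {name: accuracy(fit_predict(train_x, train_y, test_x), test_y)
            for name, fit_predict in algorithms.items()}


xs = [i / 100 for i in range(100)]
ys = [1 if x >= 0.7 else 0 for x in xs]
results = compare({"majority": majority_class, "1-NN": one_nearest_neighbour}, xs, ys)
print(results)
```

Using one shared split keeps the comparison fair: every candidate sees exactly the same training and test rows.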

What is FLAML?

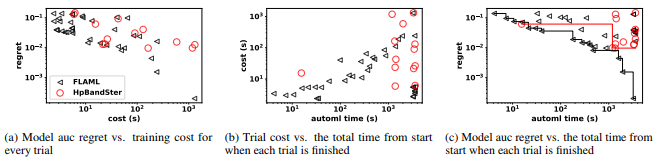

FLAML is an open-source Python library for automated machine learning (AutoML). It leverages the structure of the search space to choose a search order optimized for both cost and error, and it is designed to perform efficiently and robustly without relying on meta-learning, unlike some other AutoML systems. As the search proceeds, it iteratively decides the learner, hyperparameters, sample size and resampling strategy, taking into account their combined impact on both the cost and the error of the model. This makes FLAML easy to use in new application scenarios, because developers do not need to collect many diverse meta-training datasets first. Users can also customize learners, search spaces and optimization metrics right away, without waiting for another expensive round of meta-learning. FLAML favors single learners over ensembles, which gives it advantages in model complexity, inference latency, ease of deployment, debuggability and explainability.
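Part of the idea is that cheap trials come first: FLAML can train on a sample of the data and enlarge the sample as the search proceeds (the FLAML_sample_size entry in the best configuration printed later reflects this). The toy sketch below illustrates only that idea and is not FLAML's algorithm: the decision-stump learner, the doubling schedule and a budget measured in training rows are all assumptions made for illustration.

```python
import random


def fit_stump(xs, ys):
    """Choose threshold t (from the training x values) minimising errors of 'predict 1 if x >= t'."""
    best_t, best_err = None, None
    for t in sorted(set(xs)):
        err = sum((x >= t) != (y == 1) for x, y in zip(xs, ys))
        if best_err is None or err < best_err:
            best_t, best_err = t, err
    return best_t


def error_rate(t, xs, ys):
    return sum((x >= t) != (y == 1) for x, y in zip(xs, ys)) / len(xs)


def grow_sample_search(xs, ys, val_x, val_y, init_size=50, budget=1500):
    """Toy loop: train on a small sample first, then double it while the budget allows.

    'Cost' is just the number of training rows used, a stand-in for training time.
    """
    size, spent, history = init_size, 0, []
    while size <= len(xs) and spent + size <= budget:
        t = fit_stump(xs[:size], ys[:size])
        spent += size
        history.append((size, error_rate(t, val_x, val_y)))
        size *= 2
    return history


rng = random.Random(42)


def make_data(n):
    xs = [rng.random() for _ in range(n)]
    # label is 1 when x (plus a little noise) exceeds 0.5
    ys = [1 if x + rng.gauss(0, 0.1) > 0.5 else 0 for x in xs]
    return xs, ys


train_x, train_y = make_data(1000)
val_x, val_y = make_data(500)
history = grow_sample_search(train_x, train_y, val_x, val_y)
for size, err in history:
    print(f"sample size {size:4d}: validation error {err:.3f}")
```

Early, cheap trials give a usable model quickly; later trials spend more of the budget only once a larger sample is worth it. FLAML's actual strategy is considerably more involved.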

Many AutoML libraries have been developed, but they usually involve many trials of different configurations. Another drawback of existing solutions is that they need a long time or large amounts of resources to produce accurate models on large-scale training datasets. FLAML handles the complex dependencies among the variables mentioned above. It offers an economical approach that accounts for the cost-error trade-off and handles different tasks robustly and efficiently, reducing training time while improving accuracy.
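The cost-error trade-off can be made concrete with a small example. Given a set of trials, each with a training cost and a validation error, the interesting ones lie on the Pareto front: no other trial is at least as cheap and at least as accurate while being strictly better on one of the two. The sketch below computes that front in plain Python for hypothetical trial results; it only illustrates the trade-off and is not how FLAML itself ranks trials.

```python
def pareto_front(trials):
    """Return the trials not dominated in (cost, error); lower is better for both.

    trials: iterable of (name, cost, error) tuples.
    """
    front = []
    # Sort by cost, breaking ties by error, then keep each trial that beats
    # the lowest error seen so far among cheaper (or equally cheap) trials.
    for name, cost, error in sorted(trials, key=lambda t: (t[1], t[2])):
        if not front or error < front[-1][2]:
            front.append((name, cost, error))
    return front


# hypothetical trial results: (configuration, training cost in seconds, validation error)
trials = [
    ("config_a", 1.0, 0.40),
    ("config_b", 2.0, 0.35),
    ("config_c", 3.0, 0.38),
    ("config_d", 5.0, 0.30),
    ("config_e", 5.0, 0.32),
]
for name, cost, error in pareto_front(trials):
    print(f"{name}: cost={cost}s, error={error}")
```

Here config_c and config_e are dominated: something else is at least as cheap and more accurate, so a cost-aware search gains nothing by spending time on them.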

Getting Started with FLAML

This article compares FLAML with default tree-based learners (LightGBM and XGBoost) and explores FLAML's efficiency and accuracy. The following code is adapted from FLAML's official documentation, which can be accessed using the link here. We will work on an airlines dataset to tackle a binary classification problem and check each model's performance; you can download the CSV file from the link here.

Installing the Library

The first step is to install the FLAML library. To do so, we run the following command:

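```
!pip install flaml
# newer FLAML releases (2.x) install the AutoML component with: pip install "flaml[automl]"
# the custom RGF learner used later also needs the rgf_python package
!pip install rgf_python
```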
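To confirm that the packages this walkthrough relies on are available, you can query their installed versions with Python's standard `importlib.metadata` module. This is a minimal sketch; the distribution names listed (`flaml`, `lightgbm`, `xgboost`, `rgf_python`) are the PyPI names we assume for the libraries used in this article.

```python
from importlib import metadata


def installed_versions(distributions):
    """Map each distribution name to its installed version, or None if it is missing."""
    versions = {}
    for name in distributions:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


print(installed_versions(["flaml", "lightgbm", "xgboost", "rgf_python"]))
```

Any entry that comes back as None still needs to be installed before running the corresponding code.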

Loading the Dataset

We load the airlines dataset (dataset ID 1169) from OpenML. FLAML's load_openml_dataset helper fetches it and returns it already split into training and test sets, ready to be fed to the model.

```python
# loading the dataset
from flaml.data import load_openml_dataset

X_train, X_test, y_train, y_test = load_openml_dataset(dataset_id=1169, data_dir='./')
```

Running FLAML

When we run FLAML, it automatically searches over a set of learners and their hyperparameters for the given dataset within the time budget and returns the best model it finds. The default learners for a classification task are 'lgbm', 'xgboost', 'catboost', 'rf', 'extra_tree' and 'lrl1' (the exact list depends on the FLAML version). We will compare FLAML with LightGBM ('lgbm') and XGBoost ('xgboost') in particular.

#import aml class from flaml package

from flaml import AutoML

aml = AutoML()

#setting hyperparameters

settings = {

"time_budget": 300, # total running time in seconds

"metric": 'accuracy',

"task": 'classification', # task type

"log_file_name": 'airlines_experiment.log', # flaml log file

}

#training the models

aml.fit(X_train=X_train, y_train=y_train, **settings)

#determining and comparing the best learning algorithm

print('Best Learner Found:', aml.best_estimator)

print('Ideal hyperparmeter config:', aml.best_config)

print('Highest accuracy on validation data: {0:.4g}'.format(1-aml.best_loss))

print('Training duration of run: {0:.4g} s'.format(aml.best_config_train_time))

Output :

Best Learner Found: lgbm

Ideal hyperparmeter config: {'n_estimators': 164, 'num_leaves': 304, 'min_child_samples': 75, 'learning_rate': 0.21886405778268478, 'subsample': 0.9048064340763577, 'log_max_bin': 9, 'colsample_bytree': 0.632220807242231, 'reg_alpha': 0.03154355161993957, 'reg_lambda': 190.9985711118577, 'FLAML_sample_size': 364083}

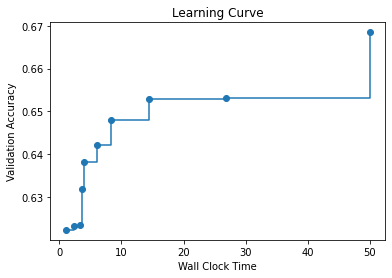

Highest accuracy on validation data: 0.6734

Training duration of run: 8.068 s

```python
# inspect the underlying trained estimator
aml.model.estimator
```

Output:

```
LGBMClassifier(colsample_bytree=0.6711349016933148,
               learning_rate=0.06722830938196284, max_bin=16,
               min_child_samples=19, n_estimators=215, num_leaves=746,
               objective='binary', reg_alpha=0.005566009891732475,
               reg_lambda=48.11469986593752, subsample=0.827351358517848)
```

Next, we compute predictions on the test dataset:

```python
# compute predictions on the test dataset
y_pred = aml.predict(X_test)
print('Predicted labels', y_pred)
print('True labels', y_test)
# probability of the positive class, used for roc_auc and log_loss below
y_pred_proba = aml.predict_proba(X_test)[:, 1]
```

Output:

```
Predicted labels ['1' '0' '1' ... '1' '0' '0']
True labels 118331    0
328182    0
335454    0
520591    1
344651    0
          ..
367080    0
203510    1
254894    0
296512    1
362444    0
Name: Delay, Length: 134846, dtype: category
```

```python
# calculating loss scores
from flaml.ml import sklearn_metric_loss_score

print('accuracy found', '=', 1 - sklearn_metric_loss_score('accuracy', y_pred, y_test))
print('roc_auc found', '=', 1 - sklearn_metric_loss_score('roc_auc', y_pred_proba, y_test))
print('log_loss found', '=', sklearn_metric_loss_score('log_loss', y_pred_proba, y_test))
```

Output:

```
accuracy found = 0.6724559868294202
roc_auc found = 0.726127451035431
log_loss found = 0.6027438636866834
```
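For reference, the three metrics above can be written out in a few lines of plain Python. This is a minimal sketch for binary 0/1 labels, not FLAML's or scikit-learn's implementation. Note that `sklearn_metric_loss_score` returns a loss, which is why the code above reports `1 - loss` for accuracy and ROC AUC.

```python
import math


def accuracy(y_true, y_pred):
    """Fraction of predictions equal to the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def roc_auc(y_true, scores):
    """Probability that a random positive gets a higher score than a random negative (ties count 1/2)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def log_loss(y_true, probs, eps=1e-15):
    """Average negative log-likelihood of the true labels under the predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, probs):
        p = min(max(p, eps), 1 - eps)  # clip so log() never sees 0
        total -= t * math.log(p) + (1 - t) * math.log(1 - p)
    return total / len(y_true)


y_true = [0, 0, 1, 1]
proba = [0.1, 0.4, 0.35, 0.8]  # predicted P(y = 1)
labels = [1 if p >= 0.5 else 0 for p in proba]
print(accuracy(y_true, labels), roc_auc(y_true, proba), log_loss(y_true, proba))
```

The pairwise ROC AUC loop is quadratic in the number of rows, which is fine for illustration but not for a test set of 134,846 rows; library implementations sort the scores instead.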

We can also plot the learning curve of the FLAML model, that is, the validation accuracy reached over the wall-clock time of the search.
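A learning curve of this kind plots, for each point in time, the best validation accuracy found so far. FLAML's example notebooks read the per-trial history from the log file (for example with a `get_output_from_log` helper, whose module path has changed between FLAML releases) and plot it with matplotlib. The sketch below shows only the core computation in plain Python, assuming you already have lists of trial times and validation losses; the sample numbers are made up.

```python
def best_so_far_curve(times, val_losses):
    """Turn per-trial (time, validation loss) pairs into a best-so-far accuracy curve.

    Assumes the metric is accuracy, so the logged loss is 1 - accuracy.
    """
    curve, best_loss = [], float("inf")
    for t, loss in sorted(zip(times, val_losses)):
        best_loss = min(best_loss, loss)
        curve.append((t, 1 - best_loss))
    return curve


# made-up history: wall-clock seconds and validation loss of each trial
times = [1.2, 2.5, 4.0, 7.3]
losses = [0.40, 0.35, 0.38, 0.33]
for t, acc in best_so_far_curve(times, losses):
    print(f"{t:5.1f}s  best accuracy so far: {acc:.2f}")
```

Plotting these pairs as a step function gives the familiar staircase shape: the curve only rises when a trial beats every earlier one.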

Comparing the Models

Now we will focus on the three main models mentioned above (FLAML, LightGBM and XGBoost) and compare their accuracy.

Before the comparison, we register a custom learner, Regularized Greedy Forest (RGF), with FLAML and define its search space:

```python
from flaml import AutoML, tune
from flaml.model import SKLearnEstimator  # in newer FLAML releases: flaml.automl.model
from rgf.sklearn import RGFClassifier, RGFRegressor  # requires the rgf_python package


class RegGreedyFor(SKLearnEstimator):
    """Custom FLAML learner wrapping Regularized Greedy Forest (RGF)."""

    def __init__(self, task='binary:logistic', n_jobs=1, **params):
        super().__init__(task, **params)
        # pick the RGF estimator matching the task type
        if 'regression' in task:
            self.estimator_class = RGFRegressor
        else:
            self.estimator_class = RGFClassifier
        # convert to int for integer hyperparameters
        self.params = {
            "n_jobs": n_jobs,
            'max_leaf': int(params['max_leaf']),
            'n_iter': int(params['n_iter']),
            'n_tree_search': int(params['n_tree_search']),
            'opt_interval': int(params['opt_interval']),
            'learning_rate': params['learning_rate'],
            'min_samples_leaf': int(params['min_samples_leaf']),
        }

    @classmethod
    def search_space(cls, data_size, task):
        # hyperparameter domains; 'init_value' and 'low_cost_init_value' give FLAML
        # its starting points (newer FLAML versions pass data_size as a
        # (rows, columns) tuple, in which case use data_size[0] as the upper bound)
        space = {
            'max_leaf': {'domain': tune.lograndint(lower=4, upper=data_size), 'init_value': 4, 'low_cost_init_value': 4},
            'n_iter': {'domain': tune.lograndint(lower=1, upper=data_size), 'init_value': 1, 'low_cost_init_value': 1},
            'n_tree_search': {'domain': tune.lograndint(lower=1, upper=32768), 'init_value': 1, 'low_cost_init_value': 1},
            'opt_interval': {'domain': tune.lograndint(lower=1, upper=10000), 'init_value': 100},
            'learning_rate': {'domain': tune.loguniform(lower=0.01, upper=20.0)},
            'min_samples_leaf': {'domain': tune.lograndint(lower=1, upper=20), 'init_value': 20},
        }
        return space

    @classmethod
    def size(cls, config):
        # rough estimate of the model's memory size for a given config
        max_leaves = int(round(config['max_leaf']))
        n_estimators = int(round(config['n_iter']))
        return (max_leaves * 3 + (max_leaves - 1) * 4 + 1.0) * n_estimators * 8

    @classmethod
    def cost_relative2lgbm(cls):
        # training cost relative to LightGBM
        return 1.0


# register the custom learner under the name 'RGF'
aml = AutoML()
aml.add_learner(learner_name='RGF', learner_class=RegGreedyFor)
```
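Two pieces of the custom learner are worth unpacking. `tune.lograndint` draws integers whose logarithm is roughly uniform, so small values such as `max_leaf=4` are explored as often per order of magnitude as large ones, and `size` turns a configuration into a rough memory estimate. The plain-Python sketch below mimics both: `sample_lograndint` is a hypothetical stand-in (treating the upper bound as exclusive is our assumption, not a statement about FLAML's implementation), while `rgf_size` repeats the arithmetic of the `size` method above.

```python
import math
import random


def sample_lograndint(lower, upper, rng):
    """Hypothetical stand-in for tune.lograndint: an integer in [lower, upper), log-uniform."""
    value = math.exp(rng.uniform(math.log(lower), math.log(upper)))
    # floor to an integer and guard both ends against floating-point rounding
    return min(max(int(value), lower), upper - 1)


def rgf_size(max_leaf, n_iter):
    """Same arithmetic as RegGreedyFor.size for a config with these two values."""
    max_leaves = int(round(max_leaf))
    n_estimators = int(round(n_iter))
    return (max_leaves * 3 + (max_leaves - 1) * 4 + 1.0) * n_estimators * 8


rng = random.Random(0)
samples = [sample_lograndint(4, 1000, rng) for _ in range(1000)]
# with a log-uniform draw, about half the samples fall below sqrt(4 * 1000), i.e. about 63
print(sum(s < 63 for s in samples), "of 1000 samples are below 63")
print("size of the low-cost initial config:", rgf_size(4, 1))
```

The low-cost starting point (`max_leaf=4`, `n_iter=1`) gives a tiny size estimate, which is consistent with the search beginning from cheap configurations.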

```python
# search settings: the custom RGF learner plus three built-in learners
settings = {
    "time_budget": 60,  # total running time in seconds
    "metric": 'accuracy',
    "estimator_list": ['RGF', 'lgbm', 'rf', 'xgboost'],  # list of ML learners
    "task": 'classification',  # task type
    "log_file_name": 'airlines_experiment_custom.log',  # flaml log file
    "log_training_metric": True,  # whether to log training metric
}

aml.fit(X_train=X_train, y_train=y_train, **settings)
```

Next, we check the accuracy of each model in turn:

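```python
# checking FLAML's accuracy: recompute predictions with the newly fitted AutoML object
y_pred = aml.predict(X_test)
print('flaml accuracy', '=', 1 - sklearn_metric_loss_score('accuracy', y_pred, y_test))
```

Output:

```
flaml accuracy = 0.7065900360411136
```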

```python
# checking default LightGBM accuracy
from lightgbm import LGBMClassifier
from flaml.ml import sklearn_metric_loss_score

lgbm = LGBMClassifier()
lgbm.fit(X_train, y_train)
y_pred = lgbm.predict(X_test)
print('default lgbm accuracy', '=', 1 - sklearn_metric_loss_score('accuracy', y_pred, y_test))
```

Output:

```
default lgbm accuracy = 0.6702346380315323
```

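```python
# checking default XGBoost accuracy
from xgboost import XGBClassifier
from flaml.ml import sklearn_metric_loss_score

# recent XGBoost versions expect numeric features and 0/1 integer labels,
# so encode categorical columns as integer codes first
cat_columns = X_train.select_dtypes(include=['category']).columns
X_train_xgb, X_test_xgb = X_train.copy(), X_test.copy()
X_train_xgb[cat_columns] = X_train_xgb[cat_columns].apply(lambda col: col.cat.codes)
X_test_xgb[cat_columns] = X_test_xgb[cat_columns].apply(lambda col: col.cat.codes)
y_train_xgb, y_test_xgb = y_train.astype('int32'), y_test.astype('int32')

xgb = XGBClassifier()
xgb.fit(X_train_xgb, y_train_xgb)
y_pred = xgb.predict(X_test_xgb)
print('default xgboost accuracy', '=', 1 - sklearn_metric_loss_score('accuracy', y_pred, y_test_xgb))
```

Output:

```
default xgboost accuracy = 0.6676060098186078
```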

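As we can observe, in this run FLAML reached a test accuracy of about 0.707, compared with about 0.670 for default LightGBM and 0.668 for default XGBoost: by searching over learners and hyperparameters, it adapts the model to the data better than the default settings do.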
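To put the gap in perspective, the accuracies reported above can be turned into percentage-point gains and relative error reductions. The sketch below simply does that arithmetic on the numbers from this single run; with one train/test split, these differences should be read as indicative rather than definitive.

```python
# test accuracies reported above for this run
reported = {
    "FLAML": 0.7065900360411136,
    "default LightGBM": 0.6702346380315323,
    "default XGBoost": 0.6676060098186078,
}


def gains_over(results, reference):
    """For each other model: (accuracy gain of `reference` in percentage points,
    relative reduction in error rate achieved by `reference`, in percent)."""
    ref_acc = results[reference]
    gains = {}
    for name, acc in results.items():
        if name == reference:
            continue
        points = (ref_acc - acc) * 100
        error_reduction = ((1 - acc) - (1 - ref_acc)) / (1 - acc) * 100
        gains[name] = (points, error_reduction)
    return gains


for name, (points, error_reduction) in gains_over(reported, "FLAML").items():
    print(f"FLAML vs {name}: +{points:.2f} points accuracy, {error_reduction:.1f}% fewer errors")
```

A gain of a few percentage points looks small, but expressed as errors avoided it is a noticeably larger relative improvement.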

EndNotes

This article tried to explore and understand the importance of Machine Learning algorithms and how they work. We also tried to explore the FLAML library and its components for creating an efficient and accurate model for Machine Learning. The above implementation can be found in a Colab notebook using the link here.

Happy Learning!