
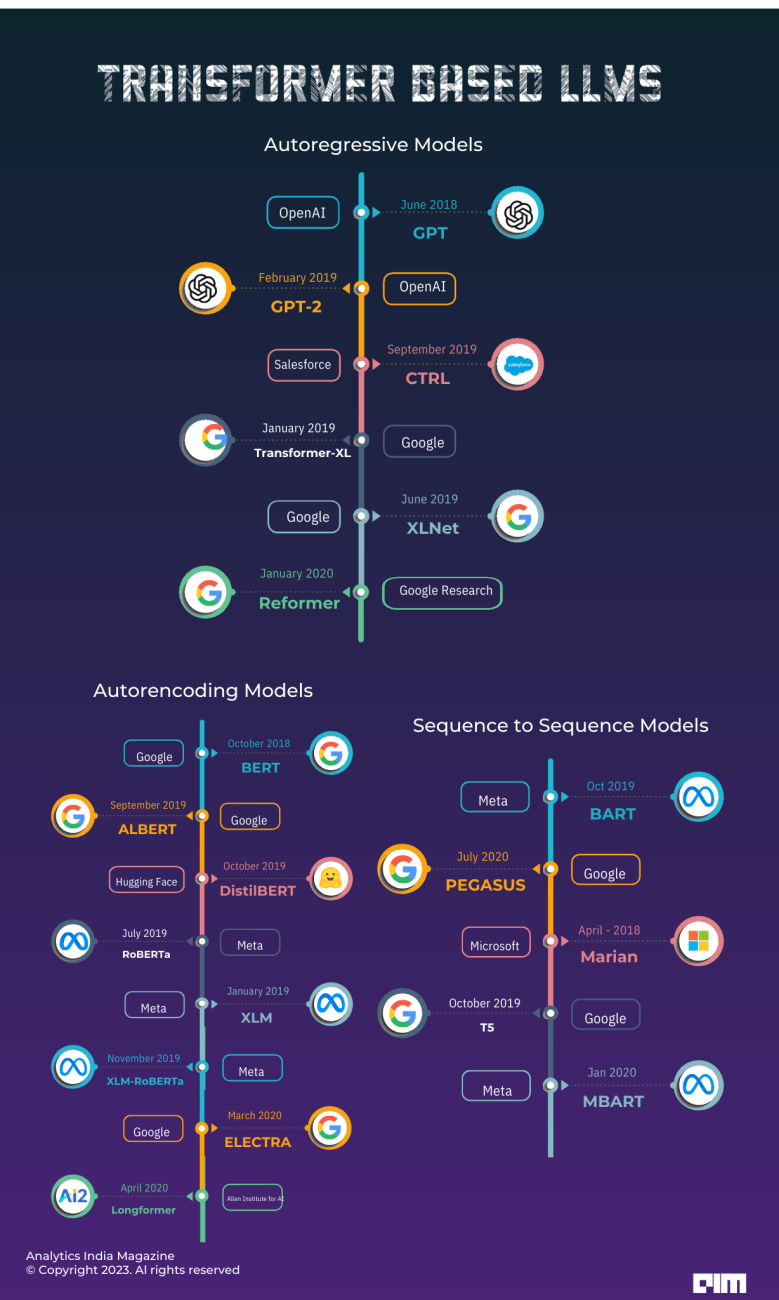

The Transformer architecture, introduced in 2017, reshaped natural language processing. Models such as GPT, BERT, GPT-2, DistilBERT, BART, T5, GPT-3 and now GPT-4 soon followed, each with its own capabilities and improvements. Based on their design, these models fall into three categories: auto-encoding models, autoregressive models, and sequence-to-sequence models.

Autoencoding Models

Auto-encoding models are pre-trained by corrupting the input tokens and then reconstructing the original sentence. They correspond to the encoder of the original transformer model and can access the complete input without any mask. These models build a bidirectional representation of the entire sentence and can be fine-tuned to perform well on many tasks, even text generation.

However, their most suitable use is in sentence or token classification. Here are some of them.

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

In 2018, Google unveiled BERT, which corrupts its input data during the pre-training phase. Typically, 15% of the tokens are selected for masking, and each selected token is handled in one of three ways: it is replaced with a special mask token with a probability of 0.8, replaced with a random token different from the original with a probability of 0.1, or left unchanged with a probability of 0.1.
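The masking rule can be sketched in a few lines of Python. This is a minimal illustration rather than BERT's actual preprocessing code: the function name `bert_mask`, the `[MASK]` string and the toy vocabulary are assumptions made for the example.

```python
import random

MASK_TOKEN = "[MASK]"  # assumed spelling of the special mask token


def bert_mask(tokens, vocab, mask_prob=0.15, rng=None):
    """Return (corrupted_tokens, labels).

    labels[i] holds the original token where a prediction is required
    and None everywhere else.
    """
    if rng is None:
        rng = random.Random()
    corrupted = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # token not selected, left as-is and not predicted
        labels[i] = tok
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK_TOKEN  # 80%: special mask token
        elif roll < 0.9:
            # 10%: a random token different from the original
            corrupted[i] = rng.choice([v for v in vocab if v != tok])
        # remaining 10%: keep the original token unchanged
    return corrupted, labels


if __name__ == "__main__":
    sentence = ["the", "cat", "sat", "on", "the", "mat"]
    toy_vocab = ["the", "cat", "sat", "on", "mat", "dog"]
    print(bert_mask(sentence, toy_vocab, rng=random.Random(0)))
```

Keeping some selected tokens unchanged or swapping in random ones means the model cannot assume that every token it must predict is hidden behind the mask token.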

The model’s main task is to predict the original sentence from the masked input. Additionally, the model is given two sentences A and B with a separation token in between. There’s a 50% chance that these sentences are consecutive in the corpus, and a 50% chance that they are unrelated. The model’s secondary objective is to predict whether the sentences are consecutive or not.
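A rough sketch of how such sentence pairs could be assembled is shown below. The helper names and the `[CLS]`/`[SEP]` strings are illustrative assumptions, and the negative example is drawn from the same small corpus for simplicity, so it is "unrelated" only in the sense of not being the true next sentence.

```python
import random


def make_nsp_example(corpus, index, rng):
    """Build one next-sentence-prediction pair.

    corpus is a list of sentences in document order (assumed to hold at
    least three sentences). Returns (sent_a, sent_b, is_next).
    """
    sent_a = corpus[index]
    if rng.random() < 0.5 and index + 1 < len(corpus):
        # positive pair: B really follows A in the corpus
        return sent_a, corpus[index + 1], True
    # negative pair: any sentence that is neither A nor its true successor
    candidates = [s for j, s in enumerate(corpus) if j not in (index, index + 1)]
    return sent_a, rng.choice(candidates), False


def format_pair(sent_a, sent_b):
    """Join the two sentences with a separation token in between."""
    return f"[CLS] {sent_a} [SEP] {sent_b} [SEP]"


if __name__ == "__main__":
    corpus = ["I went out.", "It was raining.", "I took an umbrella.", "Cats purr."]
    a, b, is_next = make_nsp_example(corpus, 1, random.Random(0))
    print(format_pair(a, b), is_next)
```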

ALBERT: A Lite BERT for Self-supervised Learning of Language Representations

ALBERT, from Google Research and the Toyota Technological Institute at Chicago, is similar to BERT but with a few modifications. The embedding size (E) differs from the hidden size (H): embeddings are context-independent (one vector per token), while hidden states are context-dependent (each one represents a sequence of tokens), so H >> E is more logical. The embedding matrix is large because it is V x E, where V is the vocabulary size, so choosing E < H results in fewer parameters.
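The effect on the parameter count is easy to check with some arithmetic. The sketch below assumes the embedding is factorised into a V x E lookup table followed by an E x H projection, and the sizes used (V = 30,000, H = 768, E = 128) are illustrative values chosen for the example.

```python
def embedding_params(vocab_size, hidden_size, embedding_size=None):
    """Count embedding parameters.

    With embedding_size=None the embedding is tied to the hidden size
    (a single V x H table). Otherwise it is a V x E table plus an
    E x H projection up to the hidden size.
    """
    if embedding_size is None:
        return vocab_size * hidden_size
    return vocab_size * embedding_size + embedding_size * hidden_size


if __name__ == "__main__":
    V, H, E = 30_000, 768, 128  # illustrative sizes
    print(embedding_params(V, H))     # 23040000
    print(embedding_params(V, H, E))  # 3938304
```

With these numbers, decoupling E from H cuts the embedding parameters to roughly a sixth of the tied version.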

Additionally, layers are grouped with shared parameters to save memory. Instead of next-sentence prediction, ALBERT employs sentence ordering prediction, where two consecutive sentences A and B are given as input, and the model predicts if they have been swapped or not.
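Sentence ordering prediction differs from next-sentence prediction only in how the training pair is built. A minimal sketch, with a hypothetical helper name:

```python
import random


def make_sop_example(sent_a, sent_b, rng):
    """sent_a and sent_b are consecutive sentences in corpus order.

    Returns (first, second, swapped); the model is trained to predict
    the swapped flag.
    """
    swapped = rng.random() < 0.5
    if swapped:
        return sent_b, sent_a, True
    return sent_a, sent_b, False


if __name__ == "__main__":
    print(make_sop_example("It was raining.", "I took an umbrella.", random.Random(0)))
```

Both sentences always come from the same passage, so the model cannot solve the task by spotting a change of topic and has to look at the order itself.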

DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter

Hugging Face created this smaller version of BERT through distillation, in which the smaller student model is trained to predict the same probabilities as the larger teacher model. The training objective combines three goals: matching the teacher’s output probabilities, correctly predicting masked tokens (without the next-sentence objective), and keeping the hidden states of the student similar to those of the teacher.
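The three objectives can be written down as three small loss functions. The sketch below uses plain Python lists in place of tensors; the temperature value, the equal loss weights and all function names are assumptions for illustration, not the settings used to train DistilBERT.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Cross-entropy between the softened teacher and student distributions."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return -sum(t * math.log(s) for t, s in zip(p_teacher, p_student))


def mlm_loss(student_logits, target_index):
    """Usual masked-token loss: negative log-probability of the true token."""
    return -math.log(softmax(student_logits)[target_index])


def cosine_loss(student_hidden, teacher_hidden):
    """1 - cosine similarity between student and teacher hidden states."""
    dot = sum(s * t for s, t in zip(student_hidden, teacher_hidden))
    norm_s = math.sqrt(sum(s * s for s in student_hidden))
    norm_t = math.sqrt(sum(t * t for t in teacher_hidden))
    return 1.0 - dot / (norm_s * norm_t)


def total_loss(student_logits, teacher_logits, target_index,
               student_hidden, teacher_hidden, weights=(1.0, 1.0, 1.0)):
    """Weighted sum of the three objectives (weights are illustrative)."""
    w_distil, w_mlm, w_cos = weights
    return (w_distil * distillation_loss(student_logits, teacher_logits)
            + w_mlm * mlm_loss(student_logits, target_index)
            + w_cos * cosine_loss(student_hidden, teacher_hidden))
```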

RoBERTa: A Robustly Optimised BERT Pre-training Approach

RoBERTa is similar to BERT but uses improved pre-training techniques. One notable improvement is dynamic masking, where tokens are masked differently in each training epoch, unlike BERT’s fixed masking. Built by Facebook AI and the Paul G Allen School of Computer Science & Engineering at the University of Washington, the model also drops the next-sentence prediction (NSP) loss, instead packing chunks of contiguous text into sequences of 512 tokens that may span multiple documents.

Moreover, larger batches are used during training, boosting efficiency. Lastly, BPE with bytes as subunits is employed to handle Unicode characters more effectively.
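The difference between fixed and dynamic masking comes down to when the masked positions are chosen. A minimal sketch, with hypothetical function names and a 15% masking rate assumed for illustration:

```python
import random


def choose_mask_positions(num_tokens, rng, mask_prob=0.15):
    """Pick which token positions to mask for one pass over a sequence."""
    count = max(1, round(num_tokens * mask_prob))
    return sorted(rng.sample(range(num_tokens), count))


def static_masks(num_tokens, epochs, seed=0):
    """Fixed masking: positions are chosen once and reused every epoch."""
    positions = choose_mask_positions(num_tokens, random.Random(seed))
    return [positions for _ in range(epochs)]


def dynamic_masks(num_tokens, epochs, seed=0):
    """Dynamic masking: positions are re-sampled for every epoch."""
    rng = random.Random(seed)
    return [choose_mask_positions(num_tokens, rng) for _ in range(epochs)]


if __name__ == "__main__":
    for epoch, positions in enumerate(dynamic_masks(20, 3)):
        print(epoch, positions)
```

With dynamic masking the model sees a different corrupted version of the same sentence each time it comes around, which matters most when training runs for many epochs.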

XLM: Cross-lingual Language Model Pre-training

Built by Meta, XLM is another transformer-based model trained on multiple languages with three types of training: Causal Language Modelling (CLM), Masked Language Modelling (MLM), and a combination of MLM and Translation Language Modelling (TLM). CLM and MLM involve selecting a language for each training sample and processing 256-token sentences that may extend across multiple documents in that language.

TLM combines sentences in two different languages with random masking, allowing the model to use both contexts for predicting masked tokens. The model’s checkpoints are named based on the pre-training method used (clm, mlm, or mlm-tlm), and it incorporates language embeddings alongside positional embeddings to indicate the language used during training.
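A TLM training example can be pictured as one concatenated sequence with a language label on every token. The sketch below is illustrative only: the function name and the `[MASK]` string are assumptions, every selected token is simply replaced by the mask token, and restarting the position indices for the second sentence follows the XLM paper rather than anything stated above.

```python
import random


def build_tlm_example(src_tokens, tgt_tokens, src_lang, tgt_lang, rng, mask_prob=0.15):
    """Concatenate a translation pair and mask tokens on both sides.

    Returns (tokens, langs, positions, labels), all of the same length.
    """
    tokens = list(src_tokens) + list(tgt_tokens)
    # language embedding index for each token
    langs = [src_lang] * len(src_tokens) + [tgt_lang] * len(tgt_tokens)
    # positions restart at 0 for the second sentence
    positions = list(range(len(src_tokens))) + list(range(len(tgt_tokens)))
    labels = [None] * len(tokens)
    for i in range(len(tokens)):
        if rng.random() < mask_prob:
            labels[i] = tokens[i]  # token the model has to recover
            tokens[i] = "[MASK]"
    return tokens, langs, positions, labels


if __name__ == "__main__":
    example = build_tlm_example(
        ["the", "cat", "sleeps"], ["le", "chat", "dort"], "en", "fr", random.Random(0)
    )
    for row in zip(*example):
        print(row)
```

Because masks fall on both halves, a masked English word can be recovered from its French counterpart and vice versa.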

XLM-RoBERTa: Unsupervised Cross-lingual Representation Learning at Scale

XLM-RoBERTa combines RoBERTa techniques with XLM, excluding translation language modelling. Instead, it focuses on masked language modelling in sentences from a single language. Made by Meta, the model is trained on a vast array of languages (100) and possesses the ability to identify the input language without relying on language embeddings.

ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators

Stanford University and Google developed ELECTRA, a transformer model that is pre-trained with the help of a smaller masked language model. This smaller model takes input text in which some tokens have been randomly masked and fills in the masked positions, and ELECTRA’s task is to figure out which tokens are original and which are replacements.

As in GAN training, the smaller model is trained for a few steps, but with the original text as its objective rather than to deceive ELECTRA as in a traditional GAN. ELECTRA itself is then trained for a few steps.
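The labels ELECTRA learns from follow directly from comparing the original and the corrupted text. A minimal sketch, in which the `generator` callable stands in for the smaller masked language model and is purely hypothetical:

```python
def corrupt_with_generator(tokens, masked_positions, generator):
    """Mask the given positions and let the generator fill them in.

    generator is a hypothetical callable (masked_tokens, position) -> token.
    """
    masked = ["[MASK]" if i in masked_positions else t for i, t in enumerate(tokens)]
    return [
        generator(masked, i) if i in masked_positions else t
        for i, t in enumerate(tokens)
    ]


def replaced_token_labels(original, corrupted):
    """1 where the token was replaced, 0 where it matches the original."""
    if len(original) != len(corrupted):
        raise ValueError("sequences must have the same length")
    return [int(o != c) for o, c in zip(original, corrupted)]


if __name__ == "__main__":
    original = ["the", "chef", "cooked", "the", "meal"]
    # toy generator that always proposes "ate" for a masked slot
    corrupted = corrupt_with_generator(original, {2}, lambda masked, i: "ate")
    print(corrupted)
    print(replaced_token_labels(original, corrupted))  # [0, 0, 1, 0, 0]
```

If the generator happens to guess the original token, the comparison labels that position as original, so the discriminator is never penalised for a correct guess by the generator.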

Longformer: The Long-Document Transformer

Longformer, by the Allen Institute for AI, runs faster than traditional transformer models because it replaces dense attention matrices with sparse ones. Each token attends mainly to its nearby context, for example the two tokens to its left and right, which is often enough. Some preselected input tokens still receive global attention, but the overall attention matrix has far fewer entries to compute, making it more efficient. It is pre-trained similarly to RoBERTa.
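The attention pattern can be illustrated as a boolean mask over token pairs. This is a sketch of the idea only, with a hypothetical function name; it builds the full matrix for readability, whereas an efficient implementation would avoid materialising it.

```python
def longformer_mask(seq_len, window=2, global_positions=()):
    """Return mask[i][j] == True where token i may attend to token j.

    window is the number of neighbours on each side that a token can see;
    tokens listed in global_positions attend to, and are attended by,
    every other token.
    """
    global_set = set(global_positions)
    mask = [[False] * seq_len for _ in range(seq_len)]
    for i in range(seq_len):
        for j in range(seq_len):
            is_local = abs(i - j) <= window
            is_global = i in global_set or j in global_set
            mask[i][j] = is_local or is_global
    return mask


if __name__ == "__main__":
    # 8 tokens, two neighbours on each side, first token is global
    for row in longformer_mask(8, window=2, global_positions=(0,)):
        print("".join("x" if allowed else "." for allowed in row))
```

The number of allowed pairs grows roughly linearly with sequence length for a fixed window, instead of quadratically as in dense attention.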

Part 2 will follow soon, covering autoregressive and sequence-to-sequence models.
