Convolutional neural networks (CNNs) provide many tools for image-processing tasks, but most of their layers, such as convolutional and pooling layers, either downsample the height and width of the input or, with padding, keep them unchanged. Tasks such as semantic segmentation and object detection involve classification at the pixel level, so they need an output whose spatial size matches the input, which means the network has to upsample its feature maps at some point. Transposed convolution is a learnable way to do this. In this article, we will discuss transposed convolution in detail, why it is important, and how to use it. The major points we will cover are:

Table of Contents

- Problem with Simple CNN

- Different Upsampling Techniques

- Transposed Convolutions

- How Can We Use Transposed Convolutions?

Problem with Simple CNN

When we perform image mapping, object detection, segmentation, or resolution enhancement, we start from an input image in which every pixel carries important information, and the output has to be produced at the pixel level. In tasks like these, every pixel and every spatial dimension matters.

For example, suppose a three-layer CNN takes an image of size 128×128×3 (128 pixels high and wide, with 3 channels) as input and, after a convolutional layer, produces a 32×32 feature map. That means there are 1,024 neurons in the convolutional layer. The output layer then has to upsample this by a factor of 32 to emit an image with 32,768 pixels.

One way to keep the output the same size as the input is to use "same" padding in every layer. The problem is that the whole network then operates at the full resolution of the original input, which makes the model much more expensive to compute.

In the architecture of the fully connected CNN model shown above, we can see how the data is downsampled before it reaches the final layer. Instead of relying on padding, we can divide the network into two parts: the first part downsamples the data, and the second part upsamples it.

The image above shows the architecture of a CNN model that consists of a downsampling part and an upsampling part. There are several ways to perform the upsampling.

Different upsampling techniques:

- Nearest neighbor:

Each input pixel's value is copied into its neighboring output pixels, so every input pixel becomes a block of identical values in the larger output image.

- Bed of nails:

Each input pixel value is copied to one position (for example, the top-left corner) of its block in the output image, and all the remaining pixels are filled with zeros.

- Bilinear interpolation:

This method applies linear interpolation in two directions: each new pixel is assigned a weighted average of its 4 nearest neighbors in the input.

- Max-Unpooling:

In a CNN, the max-pooling layer downsamples the data by keeping the maximum value from each region covered by the filter. During upsampling, the max-unpooling layer places each value back at the position from which the corresponding maximum was picked, and fills the other positions with zeros.
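The fixed upsampling rules above can be sketched in a few lines of NumPy. This is a minimal illustration on single-channel 2-D arrays, assuming an upsampling factor of 2 and non-overlapping 2×2 pooling windows; the function names are hypothetical and not part of any library. Bilinear interpolation is left out to keep the sketch short.

```python
import numpy as np

def nearest_neighbor_upsample(x, factor=2):
    # Repeat every pixel `factor` times along both height and width.
    return np.repeat(np.repeat(x, factor, axis=0), factor, axis=1)

def bed_of_nails_upsample(x, factor=2):
    # Copy each input pixel to the top-left corner of its factor x factor block;
    # every other output position stays zero.
    h, w = x.shape
    out = np.zeros((h * factor, w * factor), dtype=x.dtype)
    out[::factor, ::factor] = x
    return out

def max_pool_with_indices(x, size=2):
    # Non-overlapping max pooling that also remembers where each maximum came from.
    h, w = x.shape
    pooled = np.zeros((h // size, w // size), dtype=x.dtype)
    positions = {}
    for i in range(h // size):
        for j in range(w // size):
            window = x[i * size:(i + 1) * size, j * size:(j + 1) * size]
            r, c = np.unravel_index(np.argmax(window), window.shape)
            pooled[i, j] = window[r, c]
            positions[(i, j)] = (i * size + r, j * size + c)
    return pooled, positions

def max_unpool(pooled, positions, out_shape):
    # Put each pooled value back where its maximum was found; zeros elsewhere.
    out = np.zeros(out_shape, dtype=pooled.dtype)
    for (i, j), (r, c) in positions.items():
        out[r, c] = pooled[i, j]
    return out

x = np.array([[1, 2],
              [3, 4]])
print(nearest_neighbor_upsample(x).tolist())
# [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
print(bed_of_nails_upsample(x).tolist())
# [[1, 0, 2, 0], [0, 0, 0, 0], [3, 0, 4, 0], [0, 0, 0, 0]]

a = np.array([[1, 5, 2, 0],
              [3, 2, 1, 7],
              [0, 1, 4, 2],
              [6, 2, 3, 3]])
pooled, positions = max_pool_with_indices(a)
print(pooled.tolist())  # [[5, 7], [6, 4]]
print(max_unpool(pooled, positions, a.shape).tolist())
# [[0, 5, 0, 0], [0, 0, 0, 7], [0, 0, 4, 0], [6, 0, 0, 0]]
```

None of these functions has a parameter that could be learned from data.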

However, these methods do not depend on the data: they have no learnable parameters and are just predefined calculations, so they cannot adapt to the task at hand. Transposed convolution offers a general solution by making the upsampling learnable.

Transposed convolutions

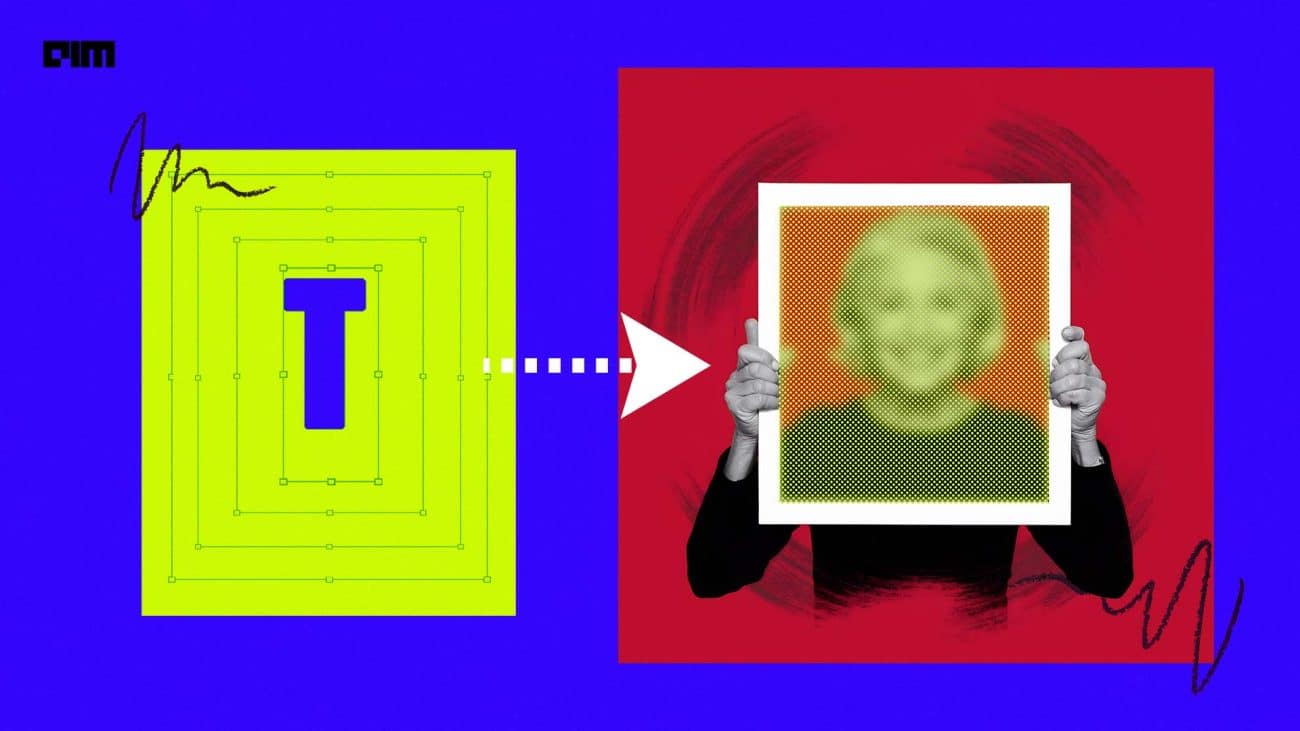

Transposed convolution is a method for upsampling with learnable parameters. It can be thought of as the reverse of an ordinary convolution, in terms of how input and output sizes relate. Suppose we apply a convolution to an image of size 7×7 using a 3×3 filter, a stride of 1×1, and no padding (valid padding), as shown in the image below.

In the image below, we can see that the output of this process is an image of size 5×5. In general, the output size of a convolution can be calculated as:

Size of output = 1 + (size of input - filter/kernel size + 2 * padding) / stride, where the division is rounded down when it is not exact

Size of output = 1 + (7 - 3 + 2 * 0) / 1

Size of output = 5
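As a quick check of this formula, the sketch below computes the output size and runs a plain NumPy "valid" convolution (strictly a cross-correlation, which is what deep-learning convolution layers compute) of a 3×3 filter over a 7×7 input. The input values, the averaging filter, and the helper names are made up for illustration, and square inputs and filters are assumed.

```python
import numpy as np

def conv_output_size(n, k, p=0, s=1):
    # 1 + (n - k + 2p) / s, rounded down when the stride does not divide evenly
    return (n - k + 2 * p) // s + 1

def conv2d_valid(x, kernel, stride=1):
    # Slide the kernel over the input without padding and sum the products.
    k = kernel.shape[0]
    out_n = conv_output_size(x.shape[0], k, p=0, s=stride)
    out = np.zeros((out_n, out_n))
    for i in range(out_n):
        for j in range(out_n):
            patch = x[i * stride:i * stride + k, j * stride:j * stride + k]
            out[i, j] = np.sum(patch * kernel)
    return out

x = np.arange(49, dtype=float).reshape(7, 7)
kernel = np.ones((3, 3)) / 9.0   # 3x3 averaging filter, chosen only for illustration
y = conv2d_valid(x, kernel)
print(y.shape)                        # (5, 5)
print(conv_output_size(7, 3))         # 5
print(conv_output_size(8, 3, s=2))    # 3, because 5 / 2 is rounded down
```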

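Now suppose we want to go the other way: starting from the 5×5 output, we want an output with the same size as the original 7×7 input, which means we want to upsample before the output layer. Rearranging the formula above gives the output size of a transposed convolution:

Output size = (input size - 1) * stride + filter size - 2 * padding + output padding

Output size = (5 - 1) * 1 + 3 - 2 * 0 + 0

Output size = 7. (Likewise, with a padding of 1, a 7×7 input keeps its size: (7 - 1) * 1 + 3 - 2 * 1 + 0 = 7.)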
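The output-size formula for a transposed convolution is easy to encode. The helper below is a hypothetical sketch; it rejects an output padding that is not smaller than the stride, mirroring the rule in Keras (PyTorch applies a similar check).

```python
def conv_transpose_output_size(n, k, s=1, p=0, output_padding=0):
    """Output size = (n - 1) * s + k - 2 * p + output_padding."""
    if output_padding >= s:
        # Output padding only picks between the input sizes that the stride
        # makes ambiguous, so it has to be smaller than the stride.
        raise ValueError("output_padding must be smaller than the stride")
    return (n - 1) * s + k - 2 * p + output_padding

# The 5x5 map from the 3x3, stride-1, valid convolution goes back to 7x7:
print(conv_transpose_output_size(5, 3))                              # 7
# A 7x7 input with padding 1 keeps its size:
print(conv_transpose_output_size(7, 3, s=1, p=1))                    # 7
# The two 4x4 examples later in this article:
print(conv_transpose_output_size(4, 3, s=4, p=0, output_padding=1))  # 16
print(conv_transpose_output_size(4, 4, s=4, p=0))                    # 16
```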

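So the transposed convolution gives us an output of the same size as the input. But how are the pixel values of this output computed, and how do they relate to the values of the input image? Note that a transposed convolution restores the spatial size, not the original pixel values; the output values depend on the filter, which is learned.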
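One way to see how the output values are produced is the scatter-add view: each input pixel multiplies the whole filter, and the resulting block is added into the output at a position that moves by the stride. The same result is obtained by writing an ordinary convolution as a matrix C and multiplying by its transpose, which is where the name comes from. The NumPy sketch below checks that the two agree for the 7×7 / 5×5 example; the function names, the random values, and the square shapes are assumptions made for illustration.

```python
import numpy as np

def conv_transpose2d(x, kernel, stride=1, padding=0, output_padding=0):
    # Scatter-add: every input pixel multiplies the whole kernel, and the
    # resulting k x k block is added into the output at (i*stride, j*stride).
    n, k = x.shape[0], kernel.shape[0]
    full = (n - 1) * stride + k + output_padding
    out = np.zeros((full, full))
    for i in range(n):
        for j in range(n):
            out[i * stride:i * stride + k, j * stride:j * stride + k] += x[i, j] * kernel
    # Padding p in a transposed convolution crops p pixels from every border.
    return out[padding:full - padding, padding:full - padding]

def conv_matrix(kernel, n_in, stride=1):
    # Matrix C such that C @ x.ravel() equals the valid cross-correlation
    # of an n_in x n_in input x with `kernel` (both flattened row by row).
    k = kernel.shape[0]
    m = (n_in - k) // stride + 1
    C = np.zeros((m * m, n_in * n_in))
    for i in range(m):
        for j in range(m):
            for u in range(k):
                for v in range(k):
                    C[i * m + j, (i * stride + u) * n_in + (j * stride + v)] = kernel[u, v]
    return C

rng = np.random.default_rng(0)
kernel = rng.standard_normal((3, 3))
y = rng.standard_normal((5, 5))        # stands in for the 5x5 convolution output
C = conv_matrix(kernel, n_in=7)        # shape (25, 49): maps 7x7 -> 5x5
up = (C.T @ y.ravel()).reshape(7, 7)   # C transposed maps 5x5 -> 7x7
print(up.shape)                                      # (7, 7)
print(np.allclose(up, conv_transpose2d(y, kernel)))  # True
```

Multiplying by C transposed restores the 7×7 shape, but it does not invert the convolution, so the original pixel values are not recovered; in a network, the filter weights are learned instead.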

Let's take an example with a 4×4 input image.

Suppose that, after passing through some convolutional layers, we have a 4×4 image, and it is now passed through a transposed convolution layer. In this image, black pixels have the value zero, so they will always stay black, and white pixels are a mixture of the RGB colors in similar quantities. Our transposed convolution layer has the following properties:

- Filter = 3×3

- Stride = 4×4

- Padding = 0

- Output padding = 1

So with these properties, the output image size will be:

Output = (4-1) * 4 + 3 – 2 * 0 + 1 = 16.

The output image will look like this

So what happened here? Each input pixel is multiplied by the 3×3 filter, so every pixel produces a 3×3 block in the output. Because the stride is 4, consecutive blocks start 4 pixels apart, leaving a one-pixel gap of zeros between them; these gaps are the black strips. The output padding of 1 adds one extra row and column, bringing the output to 16×16 so that we can compare it with the next output.
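This first example can be reproduced with the scatter-add idea. The sketch below assumes a single-channel 4×4 input with made-up values 1 to 16 and a filter of ones, rather than the RGB image in the figure; the helper name is hypothetical.

```python
import numpy as np

def conv_transpose2d(x, kernel, stride, output_padding=0):
    # Scatter-add: each input pixel stamps (pixel value * kernel) into the output.
    n, k = x.shape[0], kernel.shape[0]
    size = (n - 1) * stride + k + output_padding
    out = np.zeros((size, size))
    for i in range(n):
        for j in range(n):
            out[i * stride:i * stride + k, j * stride:j * stride + k] += x[i, j] * kernel
    return out

x = np.arange(1, 17, dtype=float).reshape(4, 4)   # made-up 4x4 input, all non-zero
out = conv_transpose2d(x, np.ones((3, 3)), stride=4, output_padding=1)
print(out.shape)  # (16, 16)
# Rows 3, 7 and 11 are the gaps between 3x3 blocks; row 15 is the output padding.
print(np.flatnonzero(out.sum(axis=1) == 0).tolist())  # [3, 7, 11, 15]
```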

Let’s change the properties of transpose convolution.

- Filter = 4×4

- Stride = 4×4

- Padding = 0

- Output padding = 0

With these properties the size of output is:

Output = (4-1) * 4 + 4 – 2 * 0 + 0 = 16.

The image in the output will look like this.

As discussed, the black pixels stay black: their value is zero, so multiplying them by the filter still gives zero (assuming the layer adds no bias). This time, however, we can see more colors in the result. In the upper-left corner, the first four pixels are red, i.e., they have the RGB value (255, 0, 0), and at the start of the next 4×4 block we see yellow, i.e., (255, 255, 0). Because the filter size equals the stride, the 4×4 blocks tile the output exactly, with no gaps and no overlap, and each block is its input pixel's value scaled by the filter weights. When the filter is larger than the stride, neighboring blocks overlap and their contributions are added together, which mixes the colors of adjacent pixels.
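The difference between tiling and overlapping can also be checked numerically. In this sketch (single channel, made-up input and filter, no bias, hypothetical helper name), a 4×4 filter with stride 4 stamps non-overlapping blocks, while a 5×5 filter with stride 4 makes neighboring blocks overlap and sum.

```python
import numpy as np

def conv_transpose2d(x, kernel, stride):
    # Scatter-add: each input pixel stamps (pixel value * kernel) into the output.
    n, k = x.shape[0], kernel.shape[0]
    size = (n - 1) * stride + k
    out = np.zeros((size, size))
    for i in range(n):
        for j in range(n):
            out[i * stride:i * stride + k, j * stride:j * stride + k] += x[i, j] * kernel
    return out

x = np.array([[1., 0., 2., 0.],
              [0., 3., 0., 1.],
              [2., 0., 1., 0.],
              [0., 1., 0., 4.]])   # zeros play the role of black pixels
kernel = np.arange(16, dtype=float).reshape(4, 4) / 15.0  # made-up 4x4 filter

tiled = conv_transpose2d(x, kernel, stride=4)   # filter size == stride
print(tiled.shape)  # (16, 16)
# Every 4x4 block is exactly x[i, j] * kernel: no gaps and no overlap.
print(np.allclose(tiled[4:8, 4:8], 3.0 * kernel))  # True
# A zero ("black") input pixel produces an all-zero block.
print(np.all(tiled[0:4, 4:8] == 0))  # True

overlap = conv_transpose2d(x, np.ones((5, 5)), stride=4)   # filter size > stride
# Position (4, 4) is covered by four neighbouring blocks, so their values add up.
print(overlap[4, 4] == x[0, 0] + x[0, 1] + x[1, 0] + x[1, 1])  # True
```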

How can we use them?

We can add these layers to a Keras CNN model using the tf.keras.layers.Conv2DTranspose layer. For example:

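```python
import tensorflow as tf
tf.keras.layers.Conv2DTranspose(filters, kernel_size, strides=(1, 1), padding='valid', output_padding=0)
```

The line above adds a transposed convolution layer, for example to a Keras Sequential model; filters is the number of output channels and kernel_size is the filter size. Note that Keras accepts padding only as 'valid' or 'same', rather than as the integer padding used in the output-size formula. If you are using PyTorch, you can use torch.nn.ConvTranspose2d() instead: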

```python
import torch
torch.nn.ConvTranspose2d(in_channels, out_channels, kernel_size, stride=1, padding=0, output_padding=0, groups=1, bias=True, dilation=1, padding_mode='zeros', device=None, dtype=None)
```

Final Words

We have seen how transposed convolution layers provide a learnable way to upsample images. Other upsampling techniques exist, but when we want a model that learns at every stage, including upsampling, transposed convolution is the natural choice. These layers are widely used in applications such as semantic segmentation and super-resolution.
