Back in school, we learned that a word's part of speech indicates its function in a sentence. English is commonly described as having nine parts of speech: noun, pronoun, verb, adverb, article, adjective, preposition, conjunction, and interjection. A word has to fill the proper part of speech for the sentence to make sense.

Part of speech is not just a topic in grammar; it is also a useful step in text preprocessing for NLP. NLP aims to let a machine communicate with a human or with another machine, so the machine needs to understand the part of speech of each word.

Classifying words by their part of speech and labelling them accordingly is called part-of-speech tagging, POS tagging, or POST. The set of labels (tags) is called a tagset. In the rest of the article, we will see how to implement the POS tagging step of an NLP task.
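To make the idea of "words plus labels" concrete, here is a minimal sketch of a lookup tagger. The lexicon, the function name `toy_tag`, and the fallback tag are all assumptions made up for illustration; real taggers learn from annotated data rather than a hand-written table.

```python
# Hypothetical mini-lexicon mapping words to Penn Treebank-style tags.
# The entries and the fallback tag are assumptions for illustration only.
TOY_LEXICON = {
    "the": "DT",
    "a": "DT",
    "world": "NN",
    "place": "NN",
    "is": "VBZ",
    "great": "JJ",
}


def toy_tag(tokens, default="NN"):
    """Return (word, tag) pairs, using `default` for words not in the lexicon."""
    return [(tok, TOY_LEXICON.get(tok.lower(), default)) for tok in tokens]


tagged = toy_tag("The world is a great place".split())
print(tagged)
```

The result is a list of `(word, tag)` tuples, which is the same shape of output that a POS tagger produces.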

Code Implementation: Parts Of Speech Tagging

Importing the libraries

Input:

import nltk

from nltk import word_tokenize

Downloading the packages.

Input:

nltk.download('punkt')

nltk.download('averaged_perceptron_tagger')

nltk.download('tagsets')

Viewing the POST tagsets.

Input:

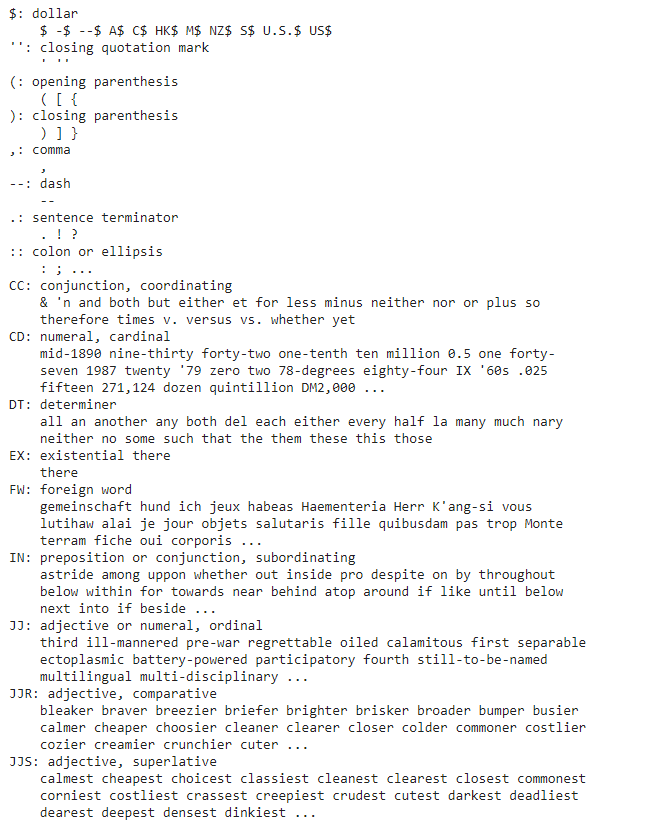

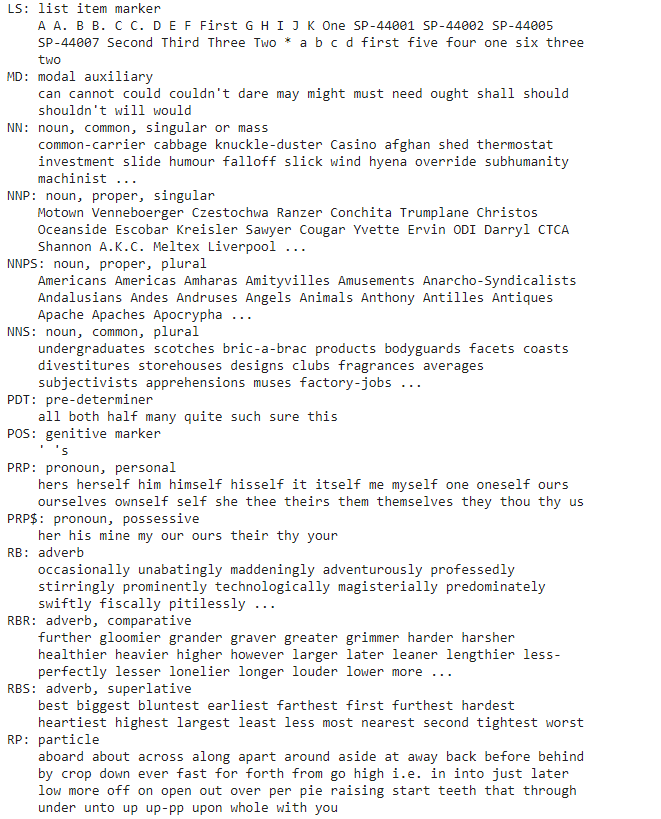

nltk.help.upenn_tagset()

Output:

Here we can see the set of tags that nltk provides; the tagger will label every word with one of them.
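The full listing is long, so it can help to look at one family of tags at a time. The sketch below keeps a small hand-copied subset of Penn Treebank tags in a dictionary and filters it with a regular expression. The dictionary `PENN_TAGS` and the helper `describe` are hypothetical names for this example, and the subset is far from complete.

```python
import re

# A small, hand-copied subset of Penn Treebank tags (not the full tagset).
PENN_TAGS = {
    "DT": "determiner",
    "IN": "preposition or subordinating conjunction",
    "JJ": "adjective",
    "NN": "noun, singular or mass",
    "NNS": "noun, plural",
    "NNP": "proper noun, singular",
    "RB": "adverb",
    "VB": "verb, base form",
    "VBD": "verb, past tense",
}


def describe(pattern):
    """Return the tags whose whole name matches the regular expression."""
    rx = re.compile(pattern)
    return {tag: desc for tag, desc in PENN_TAGS.items() if rx.fullmatch(tag)}


for tag, desc in describe(r"NN.*").items():
    print(f"{tag:4} {desc}")
```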

Let’s check for the tags for any sentence.

Input:

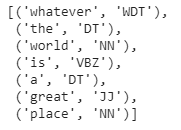

sentence = word_tokenize("whatever the world is a great place")

nltk.pos_tag(sentence)

Output:

Here we can see that every word has been given a tag. For example, the word “world” has the tag NN, a noun, and “great” has the tag JJ, the tag for an adjective.
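Once a sentence is tagged, a common next step is to pull out the words of one category. The sketch below works on a hand-written list of `(word, tag)` pairs in the same shape as a tagger's output. The tags here are typed in by hand for illustration, not captured from a run, and `words_with_tag` is a hypothetical helper.

```python
# Hand-written (word, tag) pairs; illustrative, not captured tagger output.
tagged = [
    ("the", "DT"),
    ("world", "NN"),
    ("is", "VBZ"),
    ("a", "DT"),
    ("great", "JJ"),
    ("place", "NN"),
]


def words_with_tag(tagged_tokens, prefix):
    """Collect words whose tag starts with `prefix` (e.g. 'NN' covers NN, NNS)."""
    return [word for word, tag in tagged_tokens if tag.startswith(prefix)]


nouns = words_with_tag(tagged, "NN")
adjectives = words_with_tag(tagged, "JJ")
print(nouns)
print(adjectives)
```

Matching on a prefix rather than the exact tag keeps related tags, such as singular and plural nouns, in one group.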

Let’s check some more examples; this time, we focus on words that appear in different grammatical roles within one sentence.

Input:

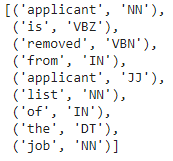

sentence = word_tokenize("applicant is removed from applicant list of the job ")

nltk.pos_tag(sentence)

In the input, the word “applicant” appears twice, in different roles. Let’s check the labels the tagger gives to each.

Output:

Here we can see that the first “applicant” is labelled NN, a noun, while the second is labelled JJ, an adjective.

Input:

sentence = word_tokenize("allow us to add lines in list of allow actions")

nltk.pos_tag(sentence)

Output:

Again, the tagger has assigned a tag to each occurrence of ‘allow’ according to its context.
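The reason the same word can receive different tags is that a tagger looks at the surrounding words, not only at the word itself. The toy rule below illustrates the principle with a single hand-written cue: a noun-or-verb word is tagged as a verb when it follows "to". The word list and the rule are assumptions for this sketch; a trained tagger learns many such cues from data instead.

```python
# Hypothetical set of words that can be either a noun or a verb.
NOUN_OR_VERB = {"list", "record", "book"}


def tag_by_context(tokens):
    """Tag ambiguous words as VB after 'to', otherwise NN; other words get None."""
    tagged = []
    for i, token in enumerate(tokens):
        tag = None
        if token.lower() in NOUN_OR_VERB:
            previous = tokens[i - 1].lower() if i > 0 else ""
            tag = "VB" if previous == "to" else "NN"
        tagged.append((token, tag))
    return tagged


print(tag_by_context("we want to list the files".split()))
print(tag_by_context("the list is long".split()))
```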

This is not all; there are more features we can use. To introduce them, let us import the Brown corpus.

Input:

nltk.download('brown')

from nltk.corpus import brown

brown.categories()

Output:

Here we can see that the corpus has 15 categories. We are going to use the news category.

Input:

text_news = nltk.Text(word.lower() for word in nltk.corpus.brown.words(categories='news'))

text_news

Output:

Here we have loaded the news category of the Brown corpus. One useful thing to do with a tagged corpus is to look for words that behave alike. For example, “man” is a noun with the tag NN, and the similar function of nltk.Text lists words that occur in the same contexts as a given word, which usually share its part of speech. Before looking at lexical categories, let’s get an overview of how often each part of speech occurs in the corpus.

Input:

nltk.download('universal_tagset')

brown_news_tagged = brown.tagged_words(categories='news', tagset='universal')

tag_fd = nltk.FreqDist(tag for (word, tag) in brown_news_tagged)

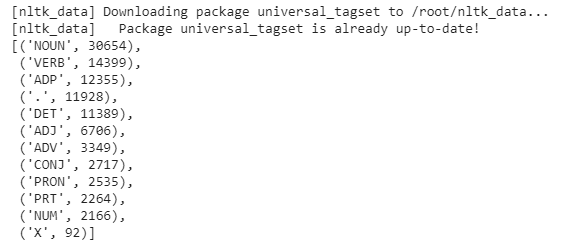

tag_fd.most_common()

Output:

Here we can see how many times each tag of the universal tagset occurs in the corpus.
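The counting step itself is simple. `nltk.FreqDist` builds on Python's `collections.Counter`, so the same tally can be sketched with the standard library alone. The tagged sentence below is a tiny hand-written sample using universal-style tags, not data read from the Brown corpus.

```python
from collections import Counter

# Tiny hand-written sample with universal-style tags (not Brown corpus data).
tagged = [
    ("the", "DET"),
    ("jury", "NOUN"),
    ("said", "VERB"),
    ("the", "DET"),
    ("election", "NOUN"),
    ("was", "VERB"),
    ("fair", "ADJ"),
    (".", "."),
]

# Count how often each tag occurs, ignoring the words themselves.
tag_counts = Counter(tag for _, tag in tagged)
total = sum(tag_counts.values())

# Print each tag with its count and its share of all tokens.
for tag, count in tag_counts.most_common():
    print(f"{tag:5} {count:3} {count / total:.0%}")
```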

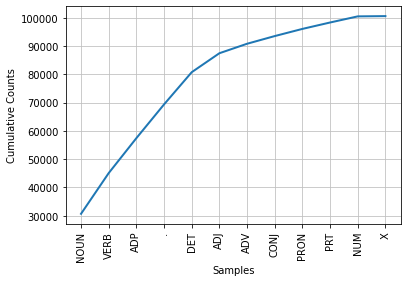

Input:

tag_fd.plot(cumulative=True)

Output:

Now we can find words that are used like a given word, which tend to have the same POS tag.

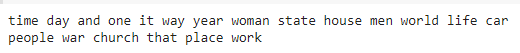

Input:

text_news.similar('man')

Output:

Here we can see that other nouns used like “man” come out of the corpus. Similarly, we can do this for other parts of speech as well.
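The idea behind this kind of lookup is that words sharing the same neighbours tend to play the same role. The sketch below is a simplified stand-in, not NLTK's actual implementation or scoring: it records the (left word, right word) contexts of every token and ranks other words by how many contexts they share with the target. The function name `similar_words` and the sample text are made up for illustration.

```python
from collections import Counter, defaultdict


def similar_words(tokens, target, top_n=5):
    """Rank words by the number of (left, right) contexts shared with `target`."""
    contexts = defaultdict(set)
    # Record the neighbouring words of every token that has both neighbours.
    for left, word, right in zip(tokens, tokens[1:], tokens[2:]):
        contexts[word].add((left, right))

    target_contexts = contexts.get(target, set())
    scores = Counter()
    for word, word_contexts in contexts.items():
        shared = len(word_contexts & target_contexts)
        if word != target and shared:
            scores[word] = shared
    return [word for word, _ in scores.most_common(top_n)]


tokens = (
    "the man said hello . the woman said goodbye . "
    "the man left . the boy left ."
).split()
print(similar_words(tokens, "man"))
```

In this sample, "woman" and "boy" each share one context with "man", so both are returned, and both happen to be nouns.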

Input:

text_news.similar('said')

Output:

Input:

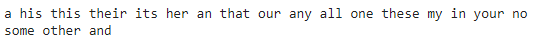

text_news.similar('the')

Output:

Compare each query word with the results: the words returned are used in similar contexts and mostly share its POS tag. NLTK provides various corpora that we can use for practice. Tagged corpora are also available in other languages such as Hindi, Portuguese, and Chinese.

We can also load a list of words together with their tags. In the next step, I load the Hindi portion of the Indian tagged corpus.

Input:

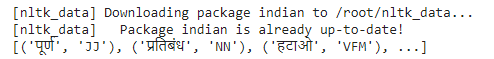

nltk.download('indian')

nltk.corpus.indian.tagged_words('hindi.pos')

Output:

In this article, we have seen how to tag words with their parts of speech, how to extract those tags from a sentence, and how to use nltk for POS tagging. In NLP, POS tagging is used widely. When the words in a sequence carry tags, algorithms can more easily distinguish how the same word is used in different situations.
