Ravi Kumar and Samiran Roy, data scientists at CRED, explained the essentials of graph neural networks and how CRED is using the emerging technology at the recently held Deep Learning DevCon 2021. The duo explained how graph neural networks should be modelled and the key factors that separate graph data from the traditional tabular data used in neural network models.

CRED is an online payments app linked to users' credit cards. The app was created by Kunal Shah, the founder of FreeCharge. It aims to automate credit card usage and rewards that usage with CRED coins, which can be redeemed later for cash or various offers.

In the opening minutes, Ravi Kumar explained what graph analytics and graph neural networks are and discussed the problems related to traditional neural networks currently being used in the market.

“Graphs are a general language for describing and analysing entities with relations and interactions. In a graph network, nodes are entities that define a user, merchant or other similar items. Edges describe the relationships between two nodes, and the properties define the information associated with nodes or edges,” he said.
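The vocabulary in the quote above (nodes, edges, properties) can be sketched as a small data structure. This is a minimal illustration only: the class names, the `PAID` relation and the sample property values are hypothetical and are not taken from the talk or from CRED's systems.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    node_id: str
    label: str  # entity type, e.g. "User" or "Merchant"
    properties: dict = field(default_factory=dict)


@dataclass
class Edge:
    source: str
    target: str
    relation: str  # relationship type between the two nodes
    properties: dict = field(default_factory=dict)


class Graph:
    """A tiny directed graph holding nodes, edges and their properties."""

    def __init__(self):
        self.nodes = {}
        self.edges = []

    def add_node(self, node):
        self.nodes[node.node_id] = node

    def add_edge(self, edge):
        # Reject edges that point at nodes the graph does not know about.
        if edge.source not in self.nodes or edge.target not in self.nodes:
            raise KeyError("both endpoints must be added before the edge")
        self.edges.append(edge)

    def neighbours(self, node_id):
        """Return the ids of nodes reachable through one outgoing edge."""
        return [e.target for e in self.edges if e.source == node_id]


# Hypothetical example data, for illustration only.
graph = Graph()
graph.add_node(Node("u1", "User", {"tier": "gold"}))
graph.add_node(Node("m1", "Merchant", {"category": "groceries"}))
graph.add_edge(Edge("u1", "m1", "PAID", {"amount": 450}))
```

Here the user and the merchant are entities, the payment is the relationship, and the dictionaries carry the properties attached to each.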

Image Source: DevCon 2021

“Real-world data is dynamic and ever-growing with time. A graph database brings deeper context to the data being processed and provides a high value to the relationship between the entities. Tabular data becomes sparse as the data grows,” Kumar said, explaining why graph databases are being used for dynamic data.

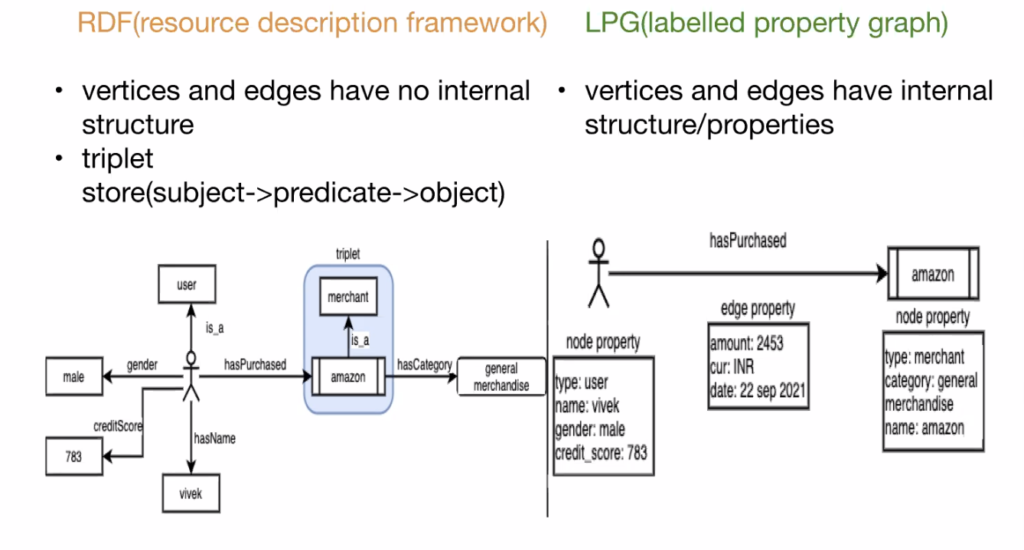

There are various types of graph networks, including wiki networks, flight networks, underground networks and social networks. Further, he demonstrated how graphs represent data and the difference between two of the most popular representation types, RDF (Resource Description Framework) and LPG (labelled property graph). In RDF, the graph's vertices and edges have no internal structure, whereas in LPG, the vertices and edges have internal structure and properties. RDF cannot hold more than one instance of the same relationship between the same pair of nodes, but LPG can.
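The last point, about repeated relationships, can be illustrated with plain Python containers standing in for real graph stores. This is a simplified sketch rather than how any particular RDF or LPG database is implemented, and the identifiers and amounts are hypothetical.

```python
# RDF-style: the graph is a *set* of (subject, predicate, object) triples,
# so stating the same triple twice leaves a single statement.
rdf_triples = set()
rdf_triples.add(("user:u1", "paid", "merchant:m1"))
rdf_triples.add(("user:u1", "paid", "merchant:m1"))  # collapses into the first

# LPG-style: every edge is its own record with its own property map,
# so two payments between the same pair of nodes stay distinct.
lpg_edges = []
lpg_edges.append(
    {"source": "u1", "target": "m1", "type": "PAID", "properties": {"amount": 450}}
)
lpg_edges.append(
    {"source": "u1", "target": "m1", "type": "PAID", "properties": {"amount": 120}}
)

print(len(rdf_triples), len(lpg_edges))
```

In the RDF-style set the second payment disappears, while the LPG-style list keeps both edges together with their individual amounts.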

Image Source: DevCon 2021

Ravi later elaborated on the fundamental problems with traditional neural networks, such as the interactions between data points, drawbacks in the logical separation of nodes, problems that arise when there are more than three nodes, and more.

“In general machine learning, we can assume data points are i.i.d. [independent and identically distributed], but in the case of graph neural networks, we cannot say the same,” added Ravi, continuing the discussion.

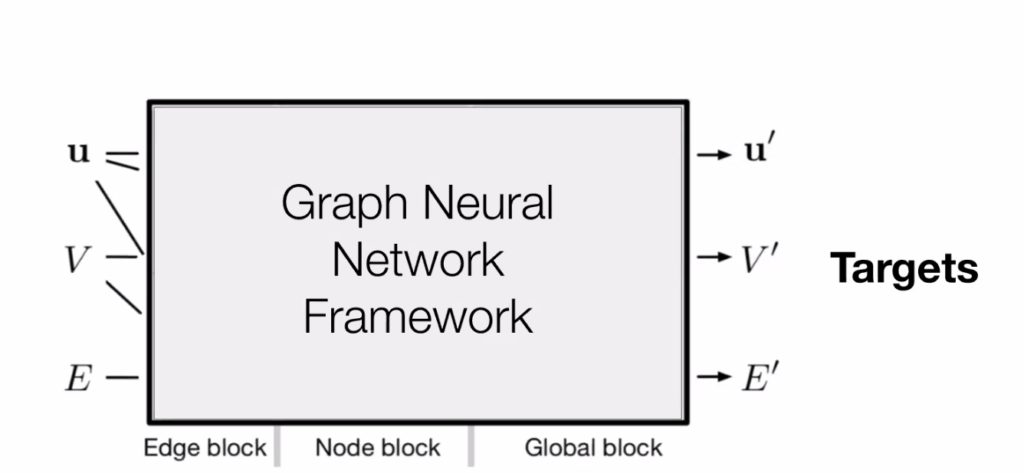

Samiran Roy later took over the presentation and spoke further on the subject, explaining how a graph neural network works and how it is structured. A graph neural network comprises three major blocks: the Edge Block, the Node Block, and the Global Block. These blocks work together with aggregator functions to produce the required target output.
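How the three blocks and the aggregators fit together can be sketched in a few lines. The article does not give the update equations, so the ordering below (edges first, then nodes, then the global attribute) follows the commonly cited graph network formulation and is an assumption here. The update functions are hand-written sums standing in for learned networks, and all names and values are hypothetical.

```python
def gn_block(nodes, edges, global_attr, edge_fn, node_fn, global_fn, aggregate=sum):
    """One pass through a graph network block with scalar features.

    nodes: dict mapping node id -> feature
    edges: list of (sender, receiver, feature) tuples
    global_attr: a single graph-level feature
    """
    # Edge block: update each edge from its own feature, its endpoints
    # and the global attribute.
    new_edges = [
        (s, r, edge_fn(e, nodes[s], nodes[r], global_attr))
        for s, r, e in edges
    ]

    # Node block: aggregate the updated incoming edges of each node,
    # then update the node.
    new_nodes = {}
    for node_id, v in nodes.items():
        incoming = [e for _, r, e in new_edges if r == node_id]
        new_nodes[node_id] = node_fn(aggregate(incoming), v, global_attr)

    # Global block: aggregate all updated edges and nodes,
    # then update the graph-level attribute.
    edge_summary = aggregate([e for _, _, e in new_edges])
    node_summary = aggregate(list(new_nodes.values()))
    new_global = global_fn(edge_summary, node_summary, global_attr)
    return new_nodes, new_edges, new_global


# Stand-in update functions; a real model would use trainable networks here.
def edge_fn(e, v_sender, v_receiver, u):
    return e + v_sender + v_receiver + u


def node_fn(incoming_sum, v, u):
    return v + incoming_sum + u


def global_fn(edge_summary, node_summary, u):
    return u + edge_summary + node_summary


nodes = {"a": 1.0, "b": 2.0, "c": 3.0}
edges = [("a", "b", 0.5), ("b", "c", 1.0), ("a", "c", 0.25)]
new_nodes, new_edges, new_global = gn_block(
    nodes, edges, 0.0, edge_fn, node_fn, global_fn
)
print(new_nodes)   # {'a': 1.0, 'b': 5.5, 'c': 13.25}
print(new_global)  # 33.5
```

Swapping `aggregate` for `max` or a mean changes how strongly a node with many neighbours is influenced by them. Leaving out one of the three blocks is one way to picture simpler variants of the full block.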

Samiran said, “From a data science perspective, we have the flexibility to embed features to any of the graph network components. We can have either edge level, node level or graph level features embedded.”

He also described how the points present in a graph network influence each other and what methods can be used to overcome problems in a graph network. During his presentation, he explained the interactions between network blocks and their aggregators in graph networks and their different variants.

Variants for graph neural networks include the following types:

- Full GN Block

- Independent recurrent block

- Message passing neural network

- Non-local neural network

- Relation network

- Deep set

Talking about the issues of graph neural networks in practice, Roy added, “The graphs that we come across in the real world are heterogeneous graphs; there are multiple kinds of nodes. The aggregation functions being used are to be paid the most importance when defining our target output. While working with large graphs, we do not need to calculate for all edges and nodes; instead, we can subsample nodes and use that to train our graph neural networks.”
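Roy did not say which sampling scheme he had in mind. One common option is neighbour sampling, where each training step keeps only a few randomly chosen neighbours per node and per hop. The sketch below assumes that scheme; the function name, the `fanout` parameter and the toy adjacency list are all hypothetical.

```python
import random


def sample_neighbourhood(adjacency, seed_nodes, fanout, num_hops, rng):
    """Collect seed nodes plus at most `fanout` sampled neighbours per node per hop."""
    sampled = set(seed_nodes)
    frontier = set(seed_nodes)
    for _ in range(num_hops):
        next_frontier = set()
        # Sorting keeps the random draws reproducible for a given rng seed.
        for node in sorted(frontier):
            neighbours = adjacency.get(node, [])
            k = min(fanout, len(neighbours))
            next_frontier.update(rng.sample(neighbours, k))
        # Only expand nodes that have not been visited yet.
        frontier = next_frontier - sampled
        sampled |= next_frontier
    return sampled


# Toy undirected graph stored as an adjacency list.
adjacency = {
    0: [1, 2, 3, 4],
    1: [0, 5],
    2: [0],
    3: [0, 6],
    4: [0],
    5: [1],
    6: [3],
}

subgraph_nodes = sample_neighbourhood(
    adjacency, seed_nodes=[0], fanout=2, num_hops=2, rng=random.Random(0)
)
print(sorted(subgraph_nodes))
```

With a fanout of 2 and two hops, a batch built around one seed node touches at most 1 + 2 + 4 nodes however large the full graph is, which keeps the cost of each training step bounded.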

Graph neural networks have several use cases, including social network user classification and molecular property prediction.

The current use cases of graph neural networks at CRED include:

- Product Targeting Model

- Community Detection

- Graph Completion

- Referral Propensity Ranking

Use cases being explored by CRED include embedding models for downstream tasks and affinity models for user-to-user or user-to-merchant relationships.

Graph neural networks are a nascent yet rapidly evolving field. Industry data calls for much more research on graph networks, as current research keeps being repeated on the same standard datasets. The flexibility of defining features and targets makes graph neural networks stand out from other typical neural network types.