In computer vision, we often deal with tasks such as image classification, segmentation, and object detection. When building a deep learning model for image classification on a very large collection of images, we often use transfer learning to save training time and improve the model's performance. Transfer learning is the process of reusing the weights of a pre-trained model, such as VGG16, ResNet50, or Inception, that was trained on the ImageNet dataset.

Also, when we train an image classification model, we want it to avoid overfitting. An overfitted model performs well on the training data but poorly on unseen testing data. To prevent this, we use regularization techniques such as dropout and batch normalization.

In this article, we will explore the use of dropout with a pre-trained ResNet model. We will build two models, one without dropout and one with dropout, and then compare them using graphs and performance metrics. For this experiment, we will use the CIFAR-10 dataset, which is available in Keras and can also be found on Kaggle.

What will we learn from this article?

- What is the ResNet50 architecture?

- How to use ResNet50 for transfer learning?

- How to build a model with and without dropout using ResNet50?

- Comparison of the two models

What is the ResNet50 Architecture?

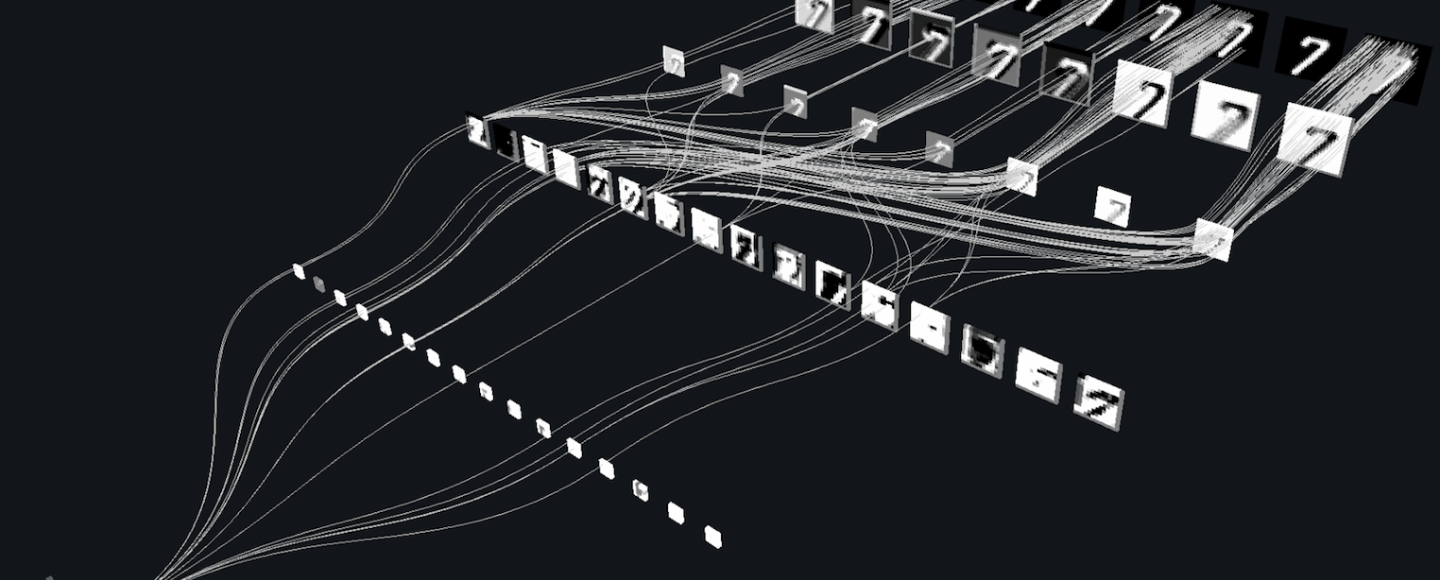

ResNet was built for the ImageNet (ILSVRC 2015) competition. It was one of the first very deep networks, with variants of more than 100 layers (up to 152 layers for ImageNet). It reduced the top-5 error rate to 3.57%, down from the 7.32% achieved by VGG. The main idea behind this network is the residual (shortcut) connection: instead of learning the desired mapping H(x) directly, a block learns the residual F(x) = H(x) - x and outputs F(x) + x. Read more about the ResNet architecture in the original paper, "Deep Residual Learning for Image Recognition" (He et al., 2015), and in the Keras documentation.
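The residual idea can be sketched in a few lines of NumPy. This is a simplified, hypothetical fully connected block (real ResNet50 blocks use convolutions and batch normalization): the block computes a correction F(x) and adds the input back through the shortcut, so when the weights of F are zero the block simply passes its (non-negative) input through unchanged. This is what makes very deep stacks easier to train: a block that is not needed can stay close to the identity.

```python
import numpy as np


def relu(z):
    """Element-wise rectified linear unit."""
    return np.maximum(z, 0.0)


def residual_block(x, w1, w2):
    """Simplified (hypothetical) residual block: relu(F(x) + x), with F(x) = w2 @ relu(w1 @ x).

    The shortcut adds x back unchanged, so the weights only have to learn the
    residual F(x) = H(x) - x instead of the full mapping H(x).
    """
    residual = w2 @ relu(w1 @ x)  # F(x): the learned correction
    return relu(residual + x)     # identity shortcut, then the final activation


rng = np.random.default_rng(0)
x = relu(rng.normal(size=4))  # non-negative, like the output of a previous ReLU
w1 = rng.normal(size=(4, 4))

# With a zero second weight matrix, F(x) = 0 and the block reduces to the identity.
print(np.allclose(residual_block(x, w1, np.zeros((4, 4))), x))  # True
```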

Dropout

Dropout is a regularization technique that reduces overfitting in neural networks by preventing complex co-adaptations on the training data. It is an efficient way of performing approximate model averaging with neural networks. The term "dilution" refers to the thinning of the weights, while the term "dropout" refers to randomly "dropping out", or omitting, units (both hidden and visible) during the training of a network.
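A minimal NumPy sketch of dropout, assuming the "inverted dropout" variant that the Keras `Dropout` layer uses: during training, each unit is zeroed with probability `rate` and the surviving units are scaled by 1 / (1 - rate) so the expected activation is unchanged; at inference time the layer passes its input through untouched. The function name and arguments are illustrative, not a Keras API.

```python
import numpy as np


def dropout(x, rate, training, rng):
    """Inverted dropout on an array of activations (illustrative helper).

    Training: zero each unit with probability `rate` and scale the kept units by
    1 / (1 - rate). Inference: return the input unchanged.
    """
    if not training or rate == 0.0:
        return x
    keep_mask = rng.random(x.shape) >= rate  # True where the unit is kept
    return np.where(keep_mask, x / (1.0 - rate), 0.0)


rng = np.random.default_rng(42)
activations = np.ones(1_000_000)

train_out = dropout(activations, rate=0.5, training=True, rng=rng)
test_out = dropout(activations, rate=0.5, training=False, rng=rng)

print(np.unique(train_out))                   # [0. 2.]: dropped units, or kept units scaled by 2
print(train_out.mean())                       # approximately 1.0: expected activation preserved
print(np.array_equal(test_out, activations))  # True: no dropout at inference
```

Because every training step samples a different mask, each step effectively trains a different "thinned" sub-network; using all units (with no scaling needed) at inference approximates averaging those sub-networks.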

Model Without Dropout

Now we will build the image classification model using ResNet50 without dropout. First, we import the required libraries and packages. Use the code below to do this.

```python
import tensorflow as tf
from tensorflow.keras.applications.resnet50 import ResNet50
from tensorflow.keras.layers import Dense, Dropout, Flatten
from tensorflow.keras.models import Sequential
from tensorflow.keras.utils import to_categorical
```

Now we will load the data. We load it directly from Keras, but you can also read the version downloaded from Kaggle. After loading, we one-hot encode the labels and then define the base model, ResNet50. Use the code below to do this.

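```python
# CIFAR-10: 50,000 training and 10,000 test images of size 32x32x3, 10 classes
(x_train, y_train), (x_test, y_test) = tf.keras.datasets.cifar10.load_data()

# One-hot encode the integer labels (0-9) for categorical_crossentropy
y_train = to_categorical(y_train)
y_test = to_categorical(y_test)

# ResNet50 with ImageNet weights and without its 1000-class top layer;
# 32x32 is the smallest input size Keras accepts for this model
base_model = ResNet50(include_top=False, weights='imagenet', input_shape=(32, 32, 3))
```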
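`to_categorical` turns each integer class label (0 to 9 for CIFAR-10) into a one-hot row. For intuition, here is a NumPy equivalent; `one_hot` is a hypothetical helper for illustration, not a Keras function.

```python
import numpy as np


def one_hot(labels, num_classes):
    """Hypothetical helper: return a (n, num_classes) float32 array with a single 1.0 per row.

    `labels` may have shape (n,) or (n, 1); CIFAR-10 labels from Keras have shape (n, 1).
    """
    flat = np.asarray(labels).reshape(-1)
    return np.eye(num_classes, dtype="float32")[flat]


labels = np.array([[3], [0], [9]])  # same (n, 1) layout as y_train from cifar10.load_data()
encoded = one_hot(labels, num_classes=10)
print(encoded.shape)           # (3, 10)
print(encoded.argmax(axis=1))  # [3 0 9]
```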

Now we will add a Flatten layer and fully connected layers on top of this base model, ending in a softmax layer with one output per class (10 for CIFAR-10). Use the code below to do this.

```python
# Stack the pre-trained base and a new classification head
model_1 = Sequential()
model_1.add(base_model)
model_1.add(Flatten())  # 1x1x2048 feature map -> 2048 features
model_1.add(Dense(512, activation='relu'))
model_1.add(Dense(256, activation='relu'))
model_1.add(Dense(512, activation='relu'))
model_1.add(Dense(128, activation='relu'))
model_1.add(Dense(10, activation='softmax'))  # one probability per CIFAR-10 class
```
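Why does Flatten produce 2048 features, the input size of the first Dense layer? A sketch, assuming the padding and stride layout of the Keras ResNet50 implementation (a 7x7 stride-2 stem convolution with 3 pixels of zero padding, 3x3 stride-2 max pooling with 1 pixel of padding, then a stride-2 1x1 convolution at the start of stages conv3, conv4, and conv5): the spatial size shrinks by a factor of 32, so a 32x32 CIFAR-10 image ends as a 1x1x2048 feature map. The helper names are hypothetical.

```python
def conv_output_size(size, kernel, stride, padding=0):
    """Spatial output size of a 'valid' convolution or pooling after explicit zero padding."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet50_feature_map_size(input_size):
    """Height/width of the final ResNet50 feature map (assumed Keras layer layout)."""
    size = conv_output_size(input_size, kernel=7, stride=2, padding=3)  # conv1
    size = conv_output_size(size, kernel=3, stride=2, padding=1)        # pool1
    # conv2 keeps the resolution; conv3, conv4 and conv5 each start with a stride-2 1x1 conv.
    for _ in range(3):
        size = conv_output_size(size, kernel=1, stride=2)
    return size


for side in (32, 224):
    s = resnet50_feature_map_size(side)
    print(side, "->", f"{s}x{s}x2048 =", s * s * 2048, "features after Flatten")
# 32 -> 1x1x2048 = 2048 features after Flatten
# 224 -> 7x7x2048 = 100352 features after Flatten
```

At the native ImageNet resolution of 224x224 the same head would receive 100,352 features, which is why models built for larger inputs usually use global average pooling instead of Flatten.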

Now we will print the model summary, then compile and train the model. Use the code below to do this. We train the model for 15 epochs with a batch size of 100, using the test set as validation data.


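```python
model_1.summary()

model_1.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Keep the History object so the training curves can be plotted later
history_1 = model_1.fit(x_train, y_train, validation_data=(x_test, y_test), epochs=15, batch_size=100)
```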
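When reading the output of `summary()`, it helps to know where the trainable parameters of the new head come from: a Dense layer with n inputs and m units has n * m weights plus m biases. The sketch below, assuming the 2048 -> 512 -> 256 -> 512 -> 128 -> 10 head defined above, reproduces that arithmetic. Dropout layers have no parameters, so adding them does not change these counts.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of a fully connected (Dense) layer."""
    return n_inputs * n_units + n_units


# Layer widths of the classification head: Flatten output followed by the Dense layers.
widths = [2048, 512, 256, 512, 128, 10]

per_layer = [dense_params(n_in, n_out) for n_in, n_out in zip(widths, widths[1:])]
print(per_layer)       # [1049088, 131328, 131584, 65664, 1290]
print(sum(per_layer))  # 1378954
```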

Now we will evaluate the model on both the training and the testing data. Use the code below to do this.

```python
print("\nTraining Loss and Accuracy: ", model_1.evaluate(x_train, y_train))
print("\nTesting Loss and Accuracy: ", model_1.evaluate(x_test, y_test))
```
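`evaluate()` returns the loss followed by the compiled metrics, here `[loss, accuracy]`. With one-hot labels and softmax outputs, these two numbers can be sketched in NumPy as the categorical cross-entropy averaged over samples and the fraction of samples whose highest-probability class matches the label. The helper names are hypothetical; the clipping with a small epsilon mirrors, as an assumption, how Keras avoids taking log(0).

```python
import numpy as np


def categorical_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean over samples of -sum(y_true * log(y_prob)), with probabilities clipped away from 0."""
    y_prob = np.clip(y_prob, eps, 1.0 - eps)
    return float(np.mean(-np.sum(y_true * np.log(y_prob), axis=1)))


def categorical_accuracy(y_true, y_prob):
    """Fraction of samples whose arg-max prediction equals the arg-max of the one-hot label."""
    return float(np.mean(np.argmax(y_true, axis=1) == np.argmax(y_prob, axis=1)))


y_true = np.eye(3)[[0, 1, 2, 1]]  # one-hot labels for classes 0, 1, 2, 1
y_prob = np.array([
    [0.7, 0.2, 0.1],  # correct
    [0.1, 0.8, 0.1],  # correct
    [0.5, 0.3, 0.2],  # wrong: predicts class 0, label is 2
    [0.2, 0.6, 0.2],  # correct
])
print(categorical_accuracy(y_true, y_prob))               # 0.75
print(round(categorical_crossentropy(y_true, y_prob), 4))  # about 0.675
```

Note that the loss penalizes the confident mistake in the third row heavily (-log 0.2 is about 1.61), which is how a model can have similar accuracy but a noticeably higher loss.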

Model With Dropout

Now we will build the image classification model using ResNet50 with dropout. We follow the same steps: we first define the base model and then add Flatten and fully connected layers to it, this time with Dropout layers between the fully connected layers. Use the code below to do this.

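```python
# Fresh base model: base_model is trainable, so model_1.fit() already changed its weights
base_model_2 = ResNet50(include_top=False, weights='imagenet', input_shape=(32, 32, 3))

model_2 = Sequential()
model_2.add(base_model_2)
model_2.add(Flatten())
# Dropout after each hidden layer; the 0.3 rate is an assumed, illustrative value
model_2.add(Dense(512, activation='relu'))
model_2.add(Dropout(0.3))
model_2.add(Dense(256, activation='relu'))
model_2.add(Dropout(0.3))
model_2.add(Dense(512, activation='relu'))
model_2.add(Dropout(0.3))
model_2.add(Dense(128, activation='relu'))
model_2.add(Dropout(0.3))
model_2.add(Dense(10, activation='softmax'))
```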

We now compile the model and train it on the training data with the same settings as before. Use the code below to do this.

```python
model_2.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

history_2 = model_2.fit(x_train, y_train, validation_data=(x_test, y_test), epochs=15, batch_size=100)
```

Now we will evaluate this model on both the training and the testing data. Use the code below to do this.

```python
print("\nTraining Loss and Accuracy: ", model_2.evaluate(x_train, y_train))
print("\nTesting Loss and Accuracy: ", model_2.evaluate(x_test, y_test))
```

Now we can compare the loss and accuracy of both models, either by plotting the per-epoch values recorded during training or by looking at their final metrics.
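A minimal plotting sketch, assuming matplotlib is installed and that the History object returned by each `fit` call has been kept (for example as `history_1` and `history_2`; these names are assumptions). With `metrics=['accuracy']` in TensorFlow 2.x, `history.history` holds per-epoch lists under `'loss'`, `'accuracy'`, `'val_loss'` and `'val_accuracy'`; older standalone Keras versions name the accuracy keys `'acc'` and `'val_acc'`, which can be passed through `metric_keys`. The function `plot_histories` is hypothetical.

```python
import matplotlib

matplotlib.use("Agg")  # render off-screen; remove this line to display plots interactively
import matplotlib.pyplot as plt


def plot_histories(histories, metric_keys=("accuracy", "loss")):
    """Plot training and validation curves for several Keras History.history dicts.

    `histories` maps a label (e.g. "Without dropout") to a history dict.
    Returns the matplotlib Figure so it can be shown or saved.
    """
    fig, axes = plt.subplots(1, len(metric_keys), figsize=(6 * len(metric_keys), 4), squeeze=False)
    for ax, key in zip(axes[0], metric_keys):
        for label, hist in histories.items():
            epochs = range(1, len(hist[key]) + 1)
            ax.plot(epochs, hist[key], label=f"{label} (train)")
            ax.plot(epochs, hist["val_" + key], linestyle="--", label=f"{label} (validation)")
        ax.set_title(f"Model {key}")
        ax.set_xlabel("Epoch")
        ax.set_ylabel(key.capitalize())
        ax.legend()
    fig.tight_layout()
    return fig


# Usage with the two trained models (history_1 / history_2 are assumed names):
# fig = plot_histories({"Without dropout": history_1.history,
#                       "With dropout": history_2.history})
# fig.savefig("dropout_comparison.png")
```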

Comparison of Training and Testing Accuracy and Loss

| Metric | Without Dropout | With Dropout |
|---|---|---|
| Training Accuracy | 94.5% | 97.54% |
| Testing Accuracy | 78.76% | 79.87% |
| Training Loss | 0.17 | 0.08 |
| Validation Loss | 0.86 | 1.01 |

Conclusion

In this article, we explored in practice how using dropout increases the accuracy of a model built on the ResNet architecture. In our 15-epoch runs, the model with dropout reached a testing accuracy of 79.87%, compared with 78.76% without dropout, although its validation loss was higher (1.01 versus 0.86). When trained for only 10 epochs, the model with dropout reached an accuracy of 74%, whereas without dropout it only reached 62%. We did not use any preprocessing techniques; techniques such as data augmentation could improve the model's performance further. Regularization techniques in general can also help the model generalize better to unseen data.