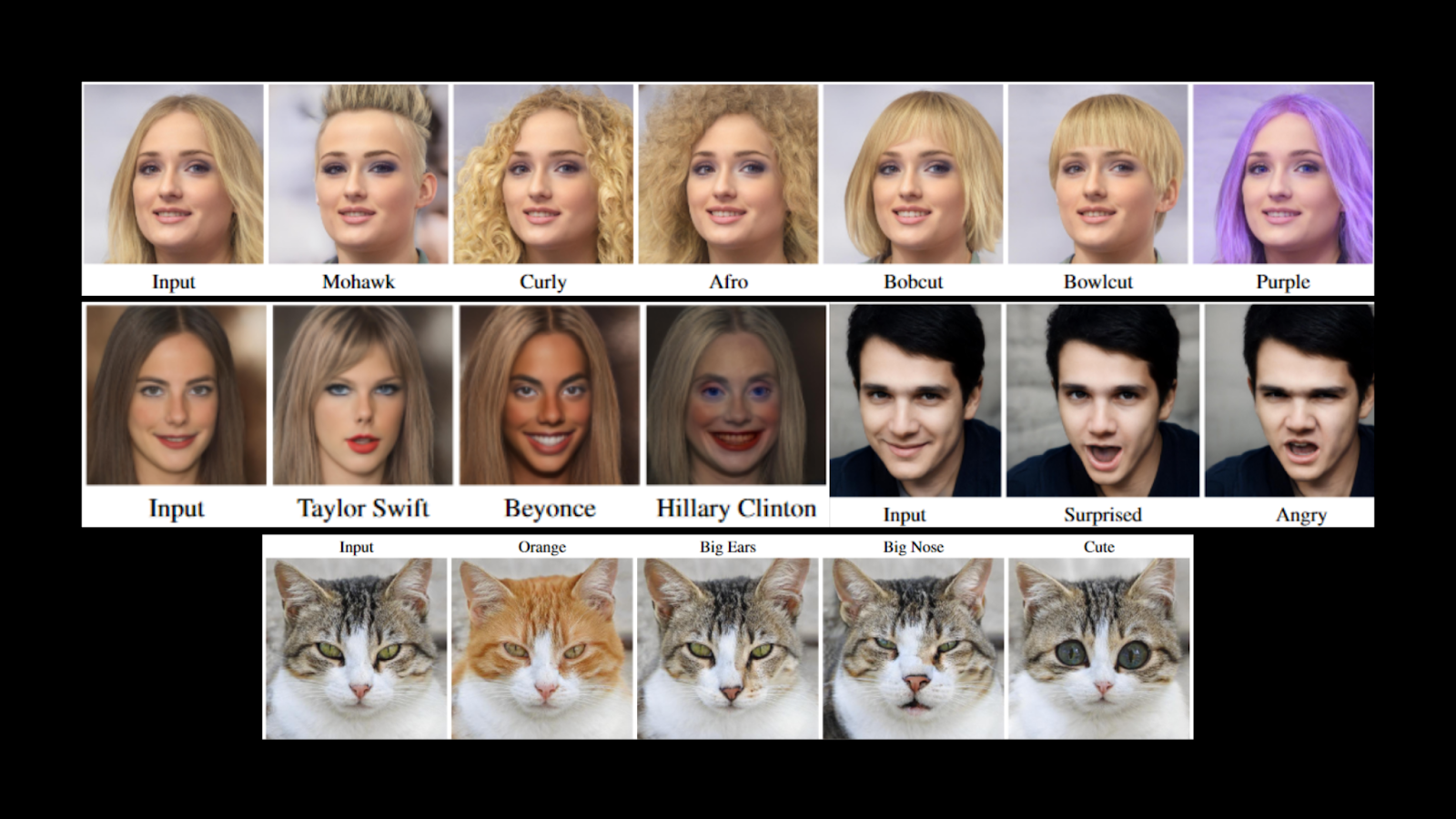

The generative power of StyleGAN and the W+ latent space has brought about a troupe of new GAN architectures for image synthesis and manipulation, such as Pixel2Style2Pixel and AnycostGAN. One of the major problems faced in these tasks is the encoding of the desired attribute into the generator’s latent space. Existing approaches require large amounts of annotated data, a pre-trained classifier, or manual examination and even then they struggle with complex attributes. CLIP models have this innately human ability to map visual concepts into natural language. StyleCLIP combines the generative power of StyleGAN with CLIP’s joint image-text embedding to enable intuitive text-based image manipulation.

Architecture & Approach

One common to all three StyleCLIP appraoches is that the input images are first inverted using e4e into StyleGAN’s W+ latent space or the more disentangled style space S.

Latent Optimization

The first approach for leveraging CLIP is a simple latent optimization technique. This method regresses the input latent code in the StyleGAN’s W+ space to the desired latent code by minimizing a loss computed in the CLIP space. For a given input latent code ws ∈ W+ and a text prompt t, the optimization objective is:

Here, G is the pre-trained StyleGAN generator, LID is the identity loss, and DCLIP is the cosine distance between the CLIP embeddings of the two arguments: source image and text prompt. λL2 and λID are used to vary the contribution of the different losses in the optimization objective according to the nature of the edit. The optimization problem is solved by back-propagating the loss function gradient through the StyleGAN generator and CLIP image encoder. This approach performs a dedicated optimization for each input pair, this makes it versatile, but it also slows it down as each manipulation requires several minutes.

Latent Mapper

The second approach uses an auxiliary mapping network to manipulate the image’s desired attributes as described by the text prompt. The mapper consists of three fully connected networks that correspond to three different levels of details: coarse, medium, and fine. These networks have the same architecture as the StyleGAN mapper but with fewer layers, 4 instead of 8. Let w = (wc, wm, wf ) denote the latent code of the input image the mapper is given by:

The mapper is first trained for a specific text prompt t, then used to carry out the manipulation step Mt(w), where w is the input image’s latent embedding. Depending on the type of manipulation and level of detail, one can choose to train a subset of the three mapping networks.

Although the mapper infers manipulation steps based on the input image for a given text prompt, these steps have high cosine similarities over vastly different input images. This means that the direction of manipulation steps in the latent space for a text prompt is generally the same irrespective of the input latent code.

Global Directions

The third approach described in the paper maps the text prompts into a global direction in StyleGAN’s style space S. This approach enables fine-grained disentangled manipulations because style space is more disentangled than other latent spaces. The image is first encoded into style code. Let the image be denoted by s ∈ S, and G(s) be the corresponding generated image. For a text prompt t, StyleCLIP needs to find a manipulation direction ∆s, such that G(s + ????∆s) generates an image where the desired attribute is enhanced or added without changing other aspects. To find this manipulation direction ∆s, a vector ∆t in CLIP’s joint language-image embedding is created using CLIP’s encoder. This vector ∆t is then mapped onto the style space to obtain the manipulation direction ∆s. The manipulation strength is controlled using the parameter ???? and ???? dictates the extend of disentanglement.

Requirements

- Tensorflow 1.x or 2.x

- PyTorch=1.7.1

- Torchvision

- ftfy

- regex

- gdown

- CLIP

Manipulating Images using StyleCLIP and Text Prompts

The following code has been taken from the official global directions notebook available here.

Notebooks for the other methods-

- Set Tensorflow to use version 1.x and install other requirements.

%tensorflow_version 1.x ! pip install torch==1.7.1+cu110 torchvision==0.8.2+cu110 torchaudio==0.7.2 -f https://download.pytorch.org/whl/torch_stable.html ! pip install ftfy regex tqdm !pip install git+https://github.com/openai/CLIP.git

- Clone the StyleCLIP GitHub repository and navigate into the global directions folder named

global.

! git clone https://github.com/orpatashnik/StyleCLIP ! cd /content/StyleCLIP/global/

- Import necessary libraries and classes; and load a pre-trained CLIP model.

import tensorflow as tf

import numpy as np

import torch

import clip

from PIL import Image

import pickle

import copy

import matplotlib.pyplot as plt

from MapTS import GetFs,GetBoundary,GetDt

from manipulate import Manipulator

model, preprocess = clip.load("ViT-B/32", device=device)

- Download and prepare the FFHQ dataset, and load the corresponding StyleCLIP manipulator model.

!python GetCode.py --dataset_name ffhq --code_type 'w'

!python GetCode.py --dataset_name ffhq --code_type 's'

!python GetCode.py --dataset_name ffhq --code_type 's_mean_std'

M=Manipulator(dataset_name='ffhq')

fs3=np.load('./npy/ffhq/fs3.npy')

- Select an input image and generate its latent code.

img_index = 21 img_indexs=[img_index] dlatent_tmp=[tmp[img_indexs] for tmp in M.dlatents] M.num_images=len(img_indexs) M.alpha=[0] M.manipulate_layers=[0] codes,out=M.EditOneC(0,dlatent_tmp) original=Image.fromarray(out[0,0]).resize((512,512)) M.manipulate_layers=None original

- Set the attributes, manipulation strength ???? and disentanglement threshold ????.

neutral='smiling face' target='angry face’ classnames=[target,neutral] dt=GetDt(classnames,model) beta = 0.15 alpha = 3

- Carry out the manipulation and display the modified image.

M.alpha=[alpha] boundary_tmp2,c=GetBoundary(fs3,dt,M,threshold=beta) codes=M.MSCode(dlatent_tmp,boundary_tmp2) out=M.GenerateImg(codes) generated=Image.fromarray(out[0,0]).resize((512,512)) generated

Last Epoch

This article discussed StyleCLIP’s three text-based image manipulation methods that use CLIP’s intuitive joint text-image embeddings to manipulate images through StyleGAN. These methods enable a plethora of unique image manipulations that existing annotation-based approaches have struggled with. The third (global direction) approach exhibits fine-grain control over manipulation strength and disentanglement. This enables StyleCLIP to perform complicated image manipulations without affecting the rest of the image. One interesting use case of GANs like MixNMAtch and StyleCLIP I see in the future is the generation of suspect images from verbal descriptions. But maybe we’ll have omnipresent cameras by then.