Linear regression is one of the first algorithms taught to beginners in machine learning. It shows how machine learning works at a basic level: it models the relationship between a dependent variable and an independent variable by fitting a straight line through the data points. In real-world data science, however, relationships between variables are rarely linear, so a straight line is often too restrictive to be a practical model.

Polynomial regression was introduced to overcome this by adding powers of the input as extra features. Its main drawback is that as the degree of the polynomial increases, the number of features grows, the model becomes harder to handle, and it eventually overfits the data. Spline regression addresses these drawbacks.

In this article, we will discuss spline regression and implement it in Python.

What is Spline Regression?

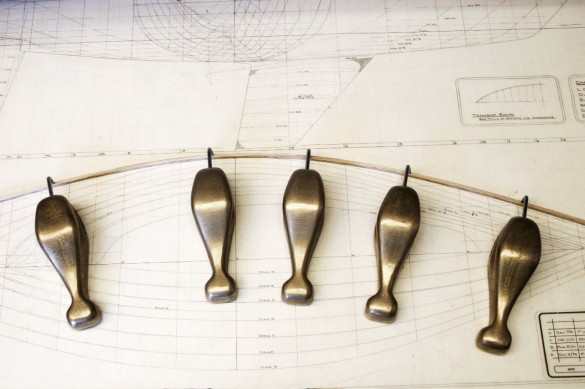

Spline regression is a way of modelling non-linear relationships that tries to overcome the difficulties of linear and polynomial regression. In linear and polynomial regression, a single function is fit to the entire dataset at once. In spline regression, the range of the input variable is instead divided into bins, and a separate model is fit to the data in each bin. The points where the data are divided are called knots. Because a different function is fit to each bin, the overall model is a piecewise function; the simplest example is a piecewise step function.
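To make the idea of bins and knots concrete, here is a minimal numpy sketch (not the method used later in this article) that splits a single input at a hypothetical knot and fits an independent straight line to each bin. The data and the helper names `fit_piecewise_linear` and `predict_piecewise_linear` are illustrative assumptions.

```python
import numpy as np

def fit_piecewise_linear(x, y, knots):
    """Fit an independent least-squares line in each bin defined by `knots`.

    Each bin needs at least two points. Nothing forces neighbouring lines
    to meet at the knots; that continuity is what splines add.
    """
    knots = np.sort(np.asarray(knots, dtype=float))
    # Bin index 0..len(knots): 0 is left of the first knot, len(knots) is right of the last
    bins = np.digitize(x, knots)
    return [np.polyfit(x[bins == b], y[bins == b], deg=1) for b in range(len(knots) + 1)]

def predict_piecewise_linear(x, knots, coefs):
    bins = np.digitize(x, np.sort(np.asarray(knots, dtype=float)))
    # Evaluate the line belonging to each point's bin
    return np.array([np.polyval(coefs[b], xi) for b, xi in zip(bins, x)])

# Hypothetical noise-free data with a change of slope at x = 5
x = np.linspace(0, 10, 200)
y = np.where(x < 5, 2 * x, 15 - x)
coefs = fit_piecewise_linear(x, y, knots=[5])
print(coefs)  # [slope, intercept] for each bin
```

Because each bin is fit on its own, the pieces only happen to meet at the knot here because the toy data are continuous; with noisy data they generally would not.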

What Are Piecewise Step Functions?

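A piecewise step function is constant within each interval of the input and jumps to a new value at each knot. Fitting a step function to each bin avoids using one model for the entire dataset. We choose cutpoints $c_1 < c_2 < \dots < c_K$ in the range of $x$ and create $K + 1$ new variables:

$$C_0(x) = I(x < c_1),\quad C_1(x) = I(c_1 \le x < c_2),\quad \dots,\quad C_{K-1}(x) = I(c_{K-1} \le x < c_K),\quad C_K(x) = I(c_K \le x)$$

Here $I(\cdot)$ is an indicator function that returns 1 if its condition is true and 0 otherwise, so exactly one $C_k(x)$ equals 1 for any value of $x$. We then fit a linear model on these variables:

$$y = a_0 + a_1 C_1(x) + a_2 C_2(x) + \dots + a_K C_K(x)$$

$C_0(x)$ is left out because the intercept $a_0$ already covers the first bin.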
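The step-function model can be sketched with numpy as an ordinary least-squares fit on indicator columns. The helper names and toy data below are hypothetical. With an intercept and one indicator per bin after the first, $a_0$ works out to the mean of the first bin and each $a_k$ to the difference between bin $k$'s mean and $a_0$.

```python
import numpy as np

def step_basis(x, cutpoints):
    """Indicator columns C_1..C_K, where C_k(x) = I(c_k <= x < c_{k+1}) and c_{K+1} = inf.

    C_0(x) = I(x < c_1) is omitted because the intercept covers the first bin.
    """
    c = np.sort(np.asarray(cutpoints, dtype=float))
    upper = np.append(c[1:], np.inf)
    return np.column_stack([(x >= lo) & (x < hi) for lo, hi in zip(c, upper)]).astype(float)

def fit_step_regression(x, y, cutpoints):
    # Intercept column followed by one indicator per bin after the first
    X = np.column_stack([np.ones(len(x)), step_basis(x, cutpoints)])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

# Hypothetical toy data: three roughly flat levels
x = np.arange(1.0, 10.0)  # 1, 2, ..., 9
y = np.array([1, 2, 3, 10, 11, 12, 20, 21, 22], dtype=float)
beta = fit_step_regression(x, y, cutpoints=[3.5, 6.5])
print(beta)  # a_0 = mean of first bin; a_k = bin mean minus a_0
```

The fitted curve is flat inside each bin, which is exactly the limitation discussed next.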

Although step functions can capture non-linearity, they are constant within each bin, so they miss any trend inside a bin and do not fully capture the relationship between input and output. To do better, we use basis functions, which are discussed below.

Basis functions and piecewise polynomials

Instead of treating the function in each bin as a constant or a straight line, it is more flexible to allow it to be non-linear. To do this, a general family of functions is applied to the input variable $x$. This family should be flexible enough to capture the pattern in the data, but not so flexible that it overfits.

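These functions are called basis functions. The basis functions $b_1(x), b_2(x), \dots, b_K(x)$ are chosen in advance, and the model is a linear combination of them: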

$$y = a_0 + a_1 b_1(x) + a_2 b_2(x) + \dots + a_K b_K(x)$$
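Because the basis functions are fixed in advance, the model is still linear in the coefficients $a_0, \dots, a_K$ and can be fit by ordinary least squares. A minimal numpy sketch, assuming a single input variable, with hypothetical helper names and noise-free toy data:

```python
import numpy as np

def basis_design(x, basis_funcs):
    """Design matrix with columns 1, b_1(x), ..., b_K(x)."""
    return np.column_stack([np.ones(len(x))] + [b(x) for b in basis_funcs])

def fit_basis_regression(x, y, basis_funcs):
    # The model is linear in a_0..a_K, so ordinary least squares applies
    coef, *_ = np.linalg.lstsq(basis_design(x, basis_funcs), y, rcond=None)
    return coef

# Choosing b_1(x) = x and b_2(x) = x**2 gives ordinary quadratic regression
poly_basis = [lambda x: x, lambda x: x ** 2]
x = np.linspace(-2, 2, 50)
y = 1.0 + 0.5 * x - 3.0 * x ** 2  # hypothetical noise-free data
coef = fit_basis_regression(x, y, poly_basis)
print(coef)  # estimates of a_0, a_1, a_2
```

Swapping in a different list of functions changes the model without changing the fitting code, which is the point of the basis-function view.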

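If the basis functions are powers of $x$, that is $b_j(x) = x^j$, this is ordinary polynomial regression. Fitting a separate low-degree polynomial in each bin, for example a quadratic

$$y = a_0 + a_1 x + a_2 x^2$$

gives a piecewise polynomial function. A spline is a piecewise polynomial that is also constrained to join smoothly at the knots: a cubic spline, for instance, uses a cubic polynomial in each bin and is continuous, with continuous first and second derivatives, at every knot.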
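One simple way to build a cubic spline from basis functions is the truncated power basis: $1, x, x^2, x^3$ plus one term $(x - k)_+^3$ per knot $k$, where $(u)_+ = \max(u, 0)$. Each truncated term is zero, with zero slope and curvature, at its knot, so it bends the curve to the right of the knot without breaking continuity of the function or its first two derivatives. This is a sketch with hypothetical names and data; it spans the same cubic splines as the B-spline basis that patsy's `bs()` uses in the implementation below, but B-splines are numerically better behaved, which is why libraries prefer them.

```python
import numpy as np

def truncated_power_basis(x, knots, degree=3):
    """Columns 1, x, ..., x^d, then (x - k)_+^d for each knot k."""
    x = np.asarray(x, dtype=float)
    cols = [x ** p for p in range(degree + 1)]
    # (x - k)_+^d is zero left of k, so it only reshapes the curve to the right of k
    cols += [np.maximum(x - k, 0.0) ** degree for k in knots]
    return np.column_stack(cols)

def fit_cubic_spline(x, y, knots):
    coef, *_ = np.linalg.lstsq(truncated_power_basis(x, knots), y, rcond=None)
    return coef

def predict_cubic_spline(x, knots, coef):
    return truncated_power_basis(x, knots) @ coef

# Hypothetical data generated from a known cubic spline with knots at 3 and 7
x = np.linspace(0, 10, 101)
knots = [3.0, 7.0]
true_coef = np.array([1.0, -0.5, 0.2, 0.01, 0.3, -0.4])
y = truncated_power_basis(x, knots) @ true_coef
coef = fit_cubic_spline(x, y, knots)
print(np.round(coef, 6))  # recovers true_coef

# Values just left and right of a knot agree: the fit is continuous there
print(predict_cubic_spline([2.999999, 3.000001], knots, coef))
```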

Now that we have understood the overall concept of spline regression, let us implement it.

Implementation

We will implement cubic spline regression on the Boston housing dataset. This dataset is most commonly used to demonstrate linear regression, but here we use it to fit splines. It contains the median house price (medv) for neighbourhoods in the Boston area, together with features that may affect the price; we will model medv as a function of a single feature, age. The code reads a copy of the dataset directly from a CSV file hosted on GitHub.

First, we import the required libraries and load the dataset.

```python
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import statsmodels.api as sm
from patsy import dmatrix

# Boston housing data; 'medv' is the median home value (in $1000s)
dataset = pd.read_csv('https://raw.githubusercontent.com/selva86/datasets/master/BostonHousing.csv')

# Preview the first rows
dataset.head()
```

Let us now plot house prices (the medv column) against age to see how they are related.

```python
plt.scatter(dataset['age'], dataset['medv'])
plt.xlabel('age')
plt.ylabel('medv')
plt.show()
```

The points do not follow a straight line, so instead of linear regression we will fit spline regression as follows:

Cubic and natural splines

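```python
# Cubic B-spline basis (degree 3 by default) with interior knots at ages 20, 30, 40 and 50
spline_cube = dmatrix('bs(x, knots=(20, 30, 40, 50))', {'x': dataset['age']})
# GLM with the default Gaussian family: least squares on the basis columns
spline_fit = sm.GLM(dataset['medv'], spline_cube).fit()

# Natural cubic regression spline basis with the same interior knots
natural_spline = dmatrix('cr(x, knots=(20, 30, 40, 50))', {'x': dataset['age']})
spline_natural = sm.GLM(dataset['medv'], natural_spline).fit()
```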

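Here, patsy's `dmatrix` turns the single age column into a design matrix of spline basis functions: `bs()` builds a B-spline basis for a cubic spline (degree 3 by default), and `cr()` builds a natural cubic regression spline basis, which is additionally constrained to have zero curvature at the boundary knots. The `knots` argument sets the interior knots, the points where the data are divided into bins and where neighbouring polynomial pieces join. Each design matrix is then fit with statsmodels' generalized linear model (GLM); with its default Gaussian family and identity link, this is ordinary least squares on the basis columns. The knots 20, 30, 40 and 50 are a modelling choice rather than the edges of the data: age (the percentage of owner-occupied units built before 1940) runs up to 100, so everything above 50 is covered by a single cubic piece.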
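To see what the natural spline adds, here is a numpy sketch of one standard natural cubic spline basis built from truncated cubics. It assumes the knot list includes the boundary knots; patsy's `cr()` adds those automatically at the minimum and maximum of the data and uses a different parameterization, so this illustrates the idea rather than reproducing `cr()`. The data and knots are hypothetical. The construction forces the fitted curve to be linear below the first knot and above the last, which tames the erratic behaviour cubic fits often show near the ends of the data.

```python
import numpy as np

def natural_cubic_basis(x, knots):
    """Natural cubic spline basis for sorted knots xi_1 < ... < xi_K (K columns).

    The first and last knots act as boundary knots; the fitted curve is
    linear below xi_1 and above xi_K.
    """
    x = np.asarray(x, dtype=float)
    xi = np.sort(np.asarray(knots, dtype=float))
    K = len(xi)

    def d(k):
        # d_k(x) = [(x - xi_k)_+^3 - (x - xi_K)_+^3] / (xi_K - xi_k)
        return (np.maximum(x - xi[k], 0.0) ** 3
                - np.maximum(x - xi[-1], 0.0) ** 3) / (xi[-1] - xi[k])

    # Columns 1, x, then d_k - d_{K-1} for k = 1..K-2 (0-based: k = 0..K-3)
    cols = [np.ones_like(x), x] + [d(k) - d(K - 2) for k in range(K - 2)]
    return np.column_stack(cols)

# Hypothetical noisy data and knots (boundary knots included)
rng = np.random.default_rng(0)
x = np.sort(rng.uniform(0, 10, 200))
y = np.sin(x) + rng.normal(0, 0.2, x.size)
knots = [0.5, 3.0, 5.0, 7.0, 9.5]
coef, *_ = np.linalg.lstsq(natural_cubic_basis(x, knots), y, rcond=None)

# Outside the boundary knots the prediction is a straight line,
# so second differences on an evenly spaced grid are (numerically) zero
right = natural_cubic_basis(np.linspace(9.5, 12.0, 26), knots) @ coef
left = natural_cubic_basis(np.linspace(-2.0, 0.5, 26), knots) @ coef
print(np.abs(np.diff(right, 2)).max(), np.abs(np.diff(left, 2)).max())
```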

Creating a prediction grid

Next, we create an evenly spaced grid of 50 age values between the minimum and maximum in the dataset and use both fitted models to predict medv on it. Passing each training matrix's `design_info` to `dmatrix` rebuilds the spline basis with exactly the same knots and boundaries that were used for fitting, so the predictions are consistent with the fitted models.

```python
# 50 evenly spaced age values covering the observed range
age_grid = np.linspace(dataset['age'].min(), dataset['age'].max(), 50)

# Rebuild each basis with the knots and bounds learned from the training data
cubic_line = spline_fit.predict(dmatrix(spline_cube.design_info, {'x': age_grid}))
line_natural = spline_natural.predict(dmatrix(natural_spline.design_info, {'x': age_grid}))
```

Plot the graph

Finally, with the predictions made, we plot both spline curves over the data to check how the model fits each bin.

```python
plt.scatter(dataset['age'], dataset['medv'], alpha=0.5)
plt.plot(age_grid, cubic_line, color='r', label='Cubic spline')
plt.plot(age_grid, line_natural, color='g', label='Natural spline')
plt.xlabel('age')
plt.ylabel('medv')
plt.legend()
plt.show()
```

As you can see, the curve changes more around the knots at 20 and 30, and the pieces near 40 and 50 fit differently again. This is because a different cubic polynomial is fit in each bin, with the pieces joined smoothly at the knots. Even so, the fitted curves follow the overall trend of most of the points.
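Because the fit in each bin depends on where the knots go, knot placement can be checked rather than judged by eye. The sketch below, on hypothetical synthetic data on the same 0 to 100 scale as age, compares two knot sets by 5-fold cross-validated mean squared error, using the truncated power basis with numpy in place of the patsy and statsmodels pipeline; the helper names are illustrative. Placing knots at quantiles of the input, so that each bin holds a similar number of points, is a common alternative to fixed values.

```python
import numpy as np

def spline_design(x, knots, degree=3):
    """Truncated power basis: 1, x, ..., x^d, (x - k)_+^d for each knot."""
    cols = [x ** p for p in range(degree + 1)]
    cols += [np.maximum(x - k, 0.0) ** degree for k in knots]
    return np.column_stack(cols)

def cv_mse(x, y, knots, n_folds=5, seed=0):
    """Average held-out mean squared error over n_folds random folds."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(x)), n_folds)
    errors = []
    for test_idx in folds:
        train = np.ones(len(x), dtype=bool)
        train[test_idx] = False
        coef, *_ = np.linalg.lstsq(spline_design(x[train], knots), y[train], rcond=None)
        pred = spline_design(x[test_idx], knots) @ coef
        errors.append(np.mean((y[test_idx] - pred) ** 2))
    return float(np.mean(errors))

# Hypothetical synthetic data on a 0-100 scale, standing in for age and medv
rng = np.random.default_rng(42)
x = np.sort(rng.uniform(0, 100, 300))
y = 30 - 0.1 * x + 5 * np.sin(x / 15) + rng.normal(0, 2, x.size)

candidates = {
    'fixed (20, 30, 40, 50)': [20, 30, 40, 50],
    'quintiles of x': list(np.quantile(x, [0.2, 0.4, 0.6, 0.8])),
}
scores = {name: cv_mse(x, y, knots) for name, knots in candidates.items()}
for name, score in scores.items():
    print(f'{name}: CV MSE = {score:.3f}')
```

The knot set with the lower held-out error is the better choice for that data; the same comparison could be run on the real age and medv columns.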

Conclusion

In this article, we saw how spline regression extends linear and polynomial regression to non-linear relationships by fitting piecewise polynomials that join smoothly at the knots. Because it stays flexible without the instability of a single high-degree polynomial, it is a practical tool for real-world problems where the relationship between variables is not linear.

The complete code of the above implementation is available as a notebook in AIM's GitHub repository.