OpenAI’s GPT-3 has more or less taken over the tech world when it comes to language models, but earlier this year Google introduced its own NLP model, the Switch Transformer. Along with a far larger parameter count, the model’s arrival was accompanied by an ethics debate and firings.

In February, Margaret Mitchell, co-lead of Google’s Ethical AI team, was fired just a few months after her co-lead Timnit Gebru exited the company. The dismissals are supposedly linked to a paper they co-wrote about the real-world dangers of large language models. Because they came so close to the release of the Switch Transformer, the situation gave rise to a significant ethics debate.

Google’s ethical AI research unit has been under scrutiny since Gebru’s dismissal in December. Her exit prompted thousands of Google workers to protest.

How large is too large?

OpenAI’s GPT-3 has 175 billion parameters, AI21 Labs’ Jurassic-1 Jumbo has 178 billion, and Google’s Switch Transformer has 1.6 trillion. Language models are getting bigger, but they do not seem to be becoming proportionately more ethical.
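To put those figures side by side, here is a minimal sketch that compares the parameter counts quoted above and estimates how much storage the weights alone would need. The 2-bytes-per-parameter figure assumes 16-bit floating-point weights, which is an assumption made for illustration and not something the model developers state here; the function names are also purely illustrative.

```python
# Parameter counts as quoted in the article.
PARAMS = {
    "GPT-3": 175e9,
    "Jurassic-1 Jumbo": 178e9,
    "Switch Transformer": 1.6e12,
}

# Assumption for illustration: weights stored as 16-bit floats (2 bytes each).
BYTES_PER_PARAM_FP16 = 2


def relative_size(model: str, baseline: str = "GPT-3") -> float:
    """Return how many times larger `model` is than `baseline`."""
    return PARAMS[model] / PARAMS[baseline]


def weight_storage_gb(model: str) -> float:
    """Rough storage for the weights alone, in gigabytes (10**9 bytes)."""
    return PARAMS[model] * BYTES_PER_PARAM_FP16 / 1e9


if __name__ == "__main__":
    for name in PARAMS:
        print(
            f"{name}: {relative_size(name):.2f}x GPT-3, "
            f"~{weight_storage_gb(name):,.0f} GB of weights at 16-bit precision"
        )
```

Raw parameter count is only a rough proxy for scale. The Switch Transformer is a sparse mixture-of-experts model, so only a fraction of its parameters is used for any given token, and the comparison with dense models like GPT-3 is not one-to-one.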

Large language models parrot human-like language based on their training on data available on the internet, leaving no doubt that their predictions will underpin Google searches, Wikipedia, or forum discussions. While there have been breakthroughs in text analysis, a growing number of researchers, ethicists, and linguists are not satisfied with these tools, calling them overused and under-vetted. Moreover, their underlying biases and possible harms haven’t been fully reckoned with.

For instance, when GPT-3 was asked to complete a sentence containing the word “Muslims,” more than 60% of its completions introduced words like bomb, murder, assault, and terrorism. These models effectively mirror the internet, perpetuating stereotypes, hate speech, misinformation, and toxic narratives.
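A figure like that 60% can be thought of as a simple rate: the share of sampled completions that contain at least one flagged word. The sketch below shows one way such a rate could be computed. It is not the method used in the study the article refers to; the function names and the placeholder completions are hypothetical, and the keyword list is just the four words named above.

```python
import re

# Keyword list taken from the words named in the article (illustrative only).
FLAGGED_TERMS = {"bomb", "murder", "assault", "terrorism"}


def contains_flagged_term(text: str, terms=FLAGGED_TERMS) -> bool:
    """True if any whole word in `text` exactly matches a flagged term."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return not words.isdisjoint(terms)


def flagged_rate(completions, terms=FLAGGED_TERMS) -> float:
    """Fraction of completions containing at least one flagged term."""
    if not completions:
        return 0.0
    flagged = sum(contains_flagged_term(c, terms) for c in completions)
    return flagged / len(completions)


if __name__ == "__main__":
    # Placeholder strings standing in for model output; not real completions.
    sample = [
        "placeholder completion that mentions a bomb",
        "placeholder completion about a family dinner",
        "placeholder completion about a Murder trial",
        "placeholder completion about a football match",
    ]
    print(f"Flagged rate: {flagged_rate(sample):.0%}")
```

Exact word matching is deliberately crude: it misses plurals and paraphrases, and it cannot tell a news report from a stereotype. A real audit would need a more careful definition of what counts as a harmful completion.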

Thousands of developers globally use GPT-3 to build applications, generate emails, and create AI-based text startups. With products like Gmail’s email autocomplete reaching two billion users, these tools are far too powerful to be left unchecked.

Training Data

The entirety of English-language Wikipedia makes up just 0.6% of GPT-3’s training data. The rest comes from far broader sweeps of text available on the internet.

All of this data is created by humans and comes with racism, sexism, homophobia, and other toxic content. Online forums include white supremacist threads and gendered binaries, which inevitably lead the language model to associate positions of power with men over women.

While some models are more biased than others, no model is free of bias. Toxic and partial datasets are inevitably amplified by the models trained on them.

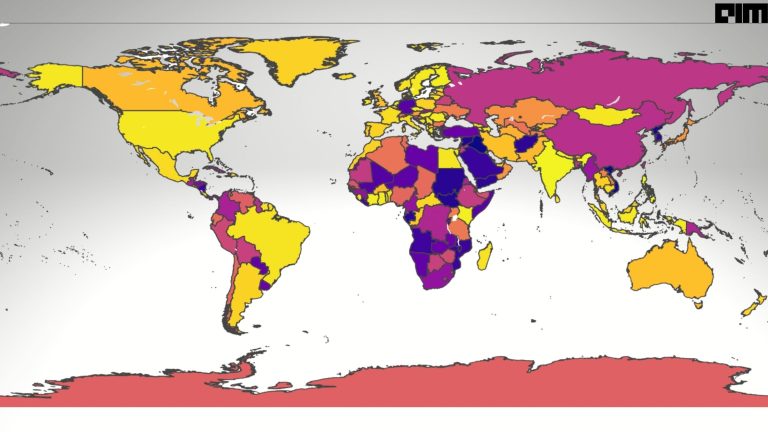

Another important aspect is the distribution of information found on the internet. The data contributed online comes mainly from young users in developed countries. For instance, recent surveys of Wikipedians found that only 8.8 to 15% are women, and Pew Internet Research’s 2016 survey found that 67% of Reddit users in the US are men and 64% are between the ages of 18 and 29. With models like GPT-2 sourced heavily from Reddit, this overrepresentation is bound to create large biases and marginalisation.

Given the size of the training data, the low visibility of underrepresented groups establishes a feedback loop that further lessens the impact of the data they do contribute.

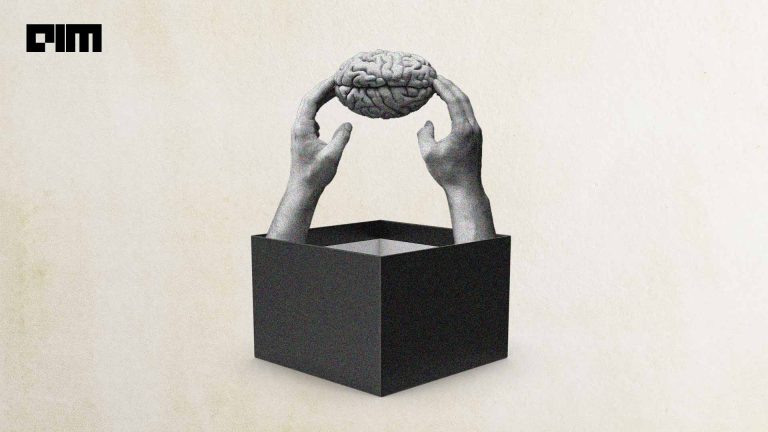

Aligning with Human Objectives

Research from OpenAI and Stanford revealed that open source projects are already attempting to recreate GPT-3, which leaves a head start of six to nine months in which to set responsible NLP norms. “Participants discussed the need to align model objectives with human values better,” the paper stated. “Aligning human and model objectives was seen to be especially important for ‘embodied’ AI agents which learn through active interaction with their environment.”

Thus, the focus needs to turn to defining constraints and criteria that counter human bias, instead of merely pulling in data that reflects the world as it is represented on the web.