Generally, a machine learning model needs a significant amount of training data to learn to recognise patterns. However, acquiring and processing swathes of data is no small task, for many reasons: data regulations around privacy and safety, or time and resource constraints.

Nevertheless, ML models, especially vision models, can learn effectively from small datasets.

Few-shot learning

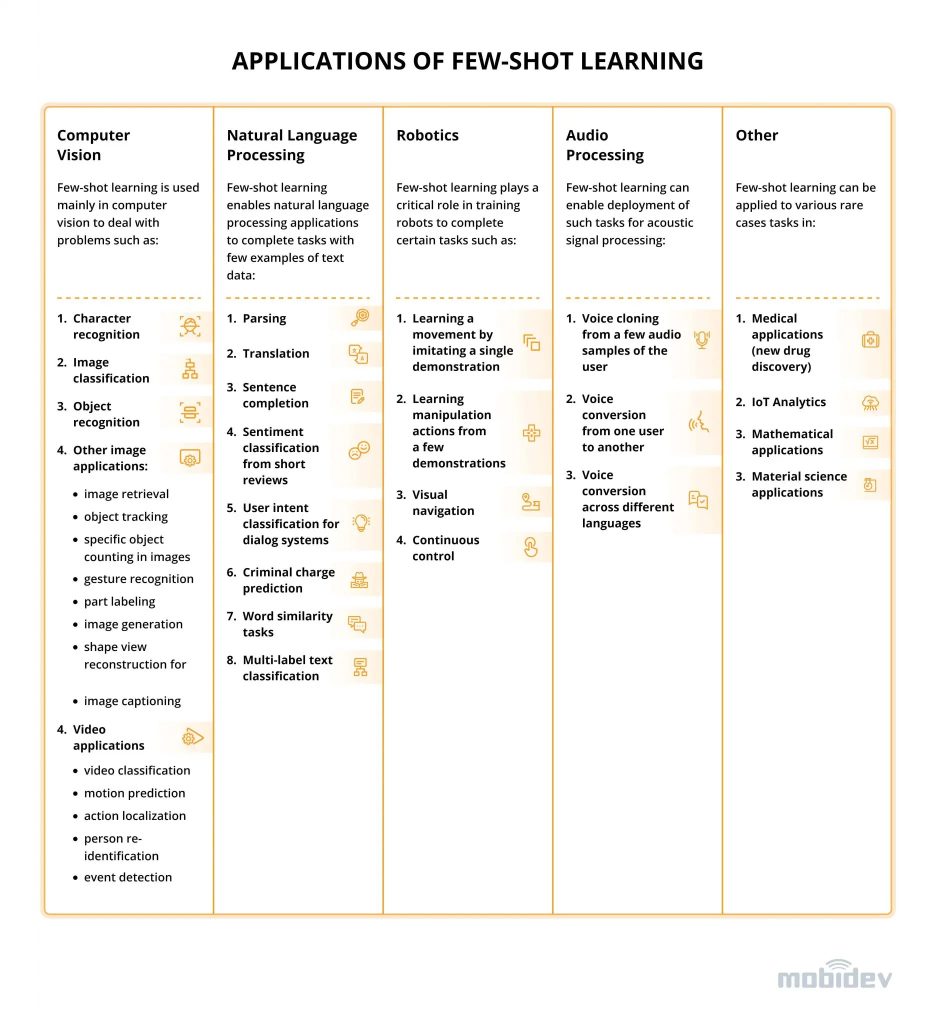

Few-shot learning (FSL) is a prime example: researchers have reported 70% accuracy on an image classification task using only four samples per class. Few-shot learning, and N-shot learning more broadly, can be applied in computer vision, NLP, healthcare, and IoT. As Maksym Tatariants, PhD, AI Solution Architect, puts it: “Through obtaining a big amount of data, the model becomes more accurate in predictions. However, in the case of few-shot learning (FSL), we require almost the same accuracy with fewer data. This approach eliminates high model training costs that are needed to collect and label data. Also, by the application of FSL, we obtain low dimensionality in the data and cut the computational costs.”
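To make the "few samples per class" idea concrete, here is a minimal sketch of one common few-shot technique, nearest-prototype classification (as used in prototypical networks). It is not the method behind the 70% result quoted above. It assumes each image has already been mapped to a feature vector by some pretrained encoder; the 8-dimensional toy "embeddings", the 3-way 4-shot episode, and the function names are all hypothetical.

```python
import numpy as np

def class_prototypes(support_x, support_y):
    """Average the support embeddings of each class into one prototype vector."""
    classes = np.unique(support_y)
    protos = np.stack([support_x[support_y == c].mean(axis=0) for c in classes])
    return classes, protos

def classify(query_x, classes, protos):
    """Assign each query to the class with the nearest prototype (squared Euclidean)."""
    # dists has shape (n_query, n_classes)
    dists = ((query_x[:, None, :] - protos[None, :, :]) ** 2).sum(axis=-1)
    return classes[dists.argmin(axis=1)]

# Toy 3-way, 4-shot episode with hypothetical 8-d embeddings around fixed centres
rng = np.random.default_rng(0)
centers = 10.0 * np.eye(3, 8)
support_y = np.repeat(np.arange(3), 4)                 # four labelled samples per class
support_x = centers[support_y] + rng.normal(scale=0.5, size=(12, 8))
query_y = np.array([0, 1, 2, 2, 1, 0])
query_x = centers[query_y] + rng.normal(scale=0.5, size=(6, 8))

classes, protos = class_prototypes(support_x, support_y)
pred = classify(query_x, classes, protos)
print(pred)
```

Nothing is trained at classification time: four examples per class are enough to place a prototype, which is why the quality of the underlying embedding matters far more than the number of labelled samples.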

EditGAN

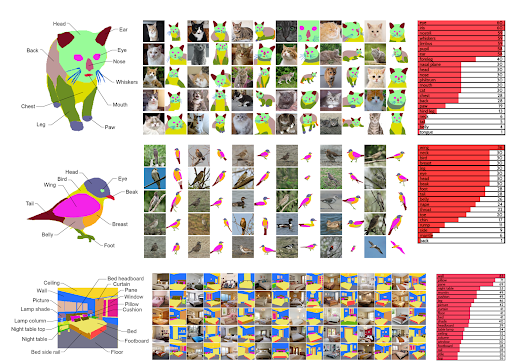

NVIDIA has introduced a generative adversarial network (GAN) model called EditGAN. The work, from researchers at NVIDIA, the University of Toronto, and MIT, builds on DatasetGAN, an AI vision model that can be trained with as few as 16 human-annotated images and performs as effectively as other methods that require more than 100 images. EditGAN harnesses the previous model and lets users edit or manipulate an image with simple commands.

The model assigns each pixel of the image to a category, such as a tire, windshield, or car frame. These pixels are then controlled within the model's latent space based on the user's input, and EditGAN manipulates only the pixels associated with the desired change. The model knows what each pixel represents from the other images used in training. EditGAN's editing capabilities can be used to create large image datasets with specific characteristics, which can in turn be useful for training downstream machine learning models on different computer vision tasks.
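The sketch below illustrates only why per-pixel category labels make an edit local: a change is applied to the pixels of one part and nothing else. It is a deliberate simplification, not EditGAN's method; EditGAN performs the edit in the GAN's latent space rather than blending pixels directly. The label ids, the tiny 4×6 image, and the `masked_edit` helper are hypothetical.

```python
import numpy as np

# Hypothetical label ids for the car parts mentioned above
LABELS = {"background": 0, "tire": 1, "windshield": 2, "frame": 3}

def masked_edit(image, label_map, part, edit_fn):
    """Apply edit_fn only to pixels labelled `part`; leave all other pixels untouched."""
    mask = (label_map == LABELS[part])[..., None]   # (H, W, 1) boolean mask
    return np.where(mask, edit_fn(image), image)    # broadcasts over colour channels

rng = np.random.default_rng(0)
image = rng.random((4, 6, 3))                       # toy RGB image in [0, 1)
label_map = np.zeros((4, 6), dtype=int)             # toy per-pixel segmentation
label_map[2:, :2] = LABELS["tire"]
label_map[:2, 2:5] = LABELS["windshield"]

# Darken only the tire pixels
darker_tires = masked_edit(image, label_map, "tire", lambda im: im * 0.5)
```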

NVIDIA believes the EditGAN framework may impact the development of future generations of GANs. The current version of EditGAN focuses on image editing, but similar methods can potentially be used to edit 3D shapes and objects.

ViT

Vision Transformers (ViTs) are emerging as an alternative to convolutional neural networks (CNNs) for visual recognition. ViTs have been reported to achieve results competitive with CNNs, but because they lack the typical convolutional inductive bias, they are more data-hungry than common CNNs. As a result, ViTs are usually pretrained on JFT-300M or at least ImageNet. Yun-Hao Cao, Hao Yu and Jianxin Wu from the National Key Laboratory for Novel Software Technology at Nanjing University, China, achieved state-of-the-art results when training from scratch on 7 small datasets with various ViT backbones. Their method, Instance Discrimination with Multi-crop and CutMix (IDMM), is based on parametric instance discrimination and achieved 96.7% accuracy when trained from scratch on the flowers dataset of just 2,040 images, which shows that training ViTs with small data is surprisingly viable.
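CutMix, one of the two augmentations in the IDMM name, is easy to show in isolation. The following is a minimal sketch of the standard CutMix recipe, not the authors' code: a random box from one image is pasted into another, and the one-hot labels are mixed in proportion to the pasted area. The `alpha=1.0` default and the NumPy-only implementation are assumptions.

```python
import numpy as np

def cutmix(img_a, img_b, label_a, label_b, alpha=1.0, rng=None):
    """Paste a random box from img_b into img_a and mix the labels by area."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = img_a.shape[:2]
    lam = rng.beta(alpha, alpha)                 # target fraction of img_a to keep
    cut_h = int(h * np.sqrt(1.0 - lam))
    cut_w = int(w * np.sqrt(1.0 - lam))
    # Random box centre; the box is clipped at the image border
    cy, cx = rng.integers(h), rng.integers(w)
    y0, y1 = np.clip(cy - cut_h // 2, 0, h), np.clip(cy + cut_h // 2, 0, h)
    x0, x1 = np.clip(cx - cut_w // 2, 0, w), np.clip(cx + cut_w // 2, 0, w)
    mixed = img_a.copy()
    mixed[y0:y1, x0:x1] = img_b[y0:y1, x0:x1]
    # Recompute the mixing ratio from the actual (clipped) box area
    lam_adj = 1.0 - ((y1 - y0) * (x1 - x0)) / (h * w)
    label = lam_adj * label_a + (1.0 - lam_adj) * label_b
    return mixed, label

# Usage: an all-black and an all-white 32x32 image from two classes
img_a, img_b = np.zeros((32, 32, 3)), np.ones((32, 32, 3))
mixed, label = cutmix(img_a, img_b, np.array([1.0, 0.0]), np.array([0.0, 1.0]),
                      rng=np.random.default_rng(1))
```

On tiny datasets, this kind of augmentation manufactures many distinct training inputs from the same few images, which helps offset the ViT's missing convolutional priors.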

Omnivore

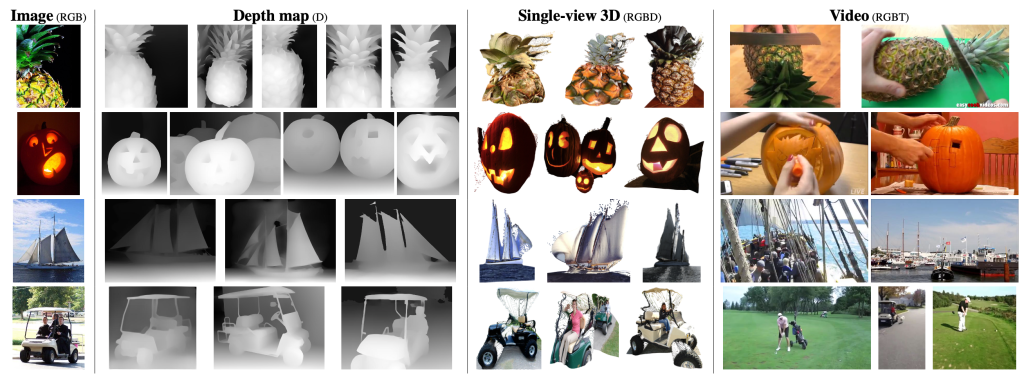

Even when a computer vision model is trained on a large dataset, it is often limited to the purpose it was trained for, or to a single modality. Researchers at Meta AI believe that a model, once trained, should be applicable to multiple visual modalities. To bring computer vision closer to human vision, the Meta AI team set out to create a modality-agnostic vision model that builds on recent improvements in vision architectures. Computer vision research encompasses a wide range of visual modalities, such as images, videos, and depth information, and conventional CV models treat each of these modalities separately. While such models outperform people on specialized tasks, they lack the flexibility of a human-like visual system.

According to Meta AI, instead of having an over-optimised model for each modality, it is important to design models that work fluidly across modalities. The team built Omnivore, a model in which the visual modalities do not each get their own architecture; instead, a single set of shared parameters recognises all three. It works by turning each input modality into embeddings of spatio-temporal patches, which are then processed by the same transformer to produce an output representation. “A single OMNIVORE model obtains 86.0% on ImageNet, 84.1% on Kinetics, and 67.1% on SUN RGB-D. After fine-tuning, our models outperform prior work on a variety of vision tasks and generalize across modalities,” said the researchers.
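A minimal NumPy sketch of the shared-input idea described above: an image, a video and an RGB-D frame are all reshaped into the same kind of spatio-temporal patch tokens and passed through one shared linear embedding, so a single transformer (not shown) could consume any of them. This is not Meta's code. Treating an image as a one-frame clip, padding RGB inputs with an all-zero depth channel, repeating frames to fill a temporal patch, and the 2×16×16 patch size are all assumptions made for illustration.

```python
import numpy as np

def to_clip(x):
    """Hypothetical normaliser: give every modality the layout (T, H, W, 4)."""
    if x.ndim == 3:                      # single image or RGB-D frame: (H, W, C)
        x = x[None]                      # treat it as a one-frame clip
    t, h, w, c = x.shape
    if c == 3:                           # RGB without depth: add an all-zero depth channel
        x = np.concatenate([x, np.zeros((t, h, w, 1), x.dtype)], axis=-1)
    return x

def patchify(clip, pt=2, ph=16, pw=16):
    """Split a (T, H, W, C) clip into flattened spatio-temporal patches."""
    t, h, w, c = clip.shape
    if t % pt:                           # pad short clips (e.g. images) by repeating the last frame
        reps = pt - t % pt
        clip = np.concatenate([clip, np.repeat(clip[-1:], reps, axis=0)], axis=0)
        t = clip.shape[0]
    x = clip.reshape(t // pt, pt, h // ph, ph, w // pw, pw, c)
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)  # patch indices first, patch contents last
    return x.reshape(-1, pt * ph * pw * c)

rng = np.random.default_rng(0)
embed_dim = 64
proj = rng.normal(scale=0.02, size=(2 * 16 * 16 * 4, embed_dim))  # one shared embedding

inputs = {
    "image": rng.random((224, 224, 3)),
    "video": rng.random((8, 224, 224, 3)),
    "rgbd": rng.random((224, 224, 4)),
}
tokens = {name: patchify(to_clip(x)) @ proj for name, x in inputs.items()}
for name, tok in tokens.items():
    print(name, tok.shape)
```

Every modality ends up as a sequence of 64-dimensional tokens; only the sequence length differs (a video simply yields more tokens), which is what allows the downstream transformer parameters to be shared.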

PhygitalPlus

PhygitalPlus combines small datasets with no-code tools. Its makers created a photorealistic DeepFake in high 4K resolution by running several neural networks in their app, which is built on a 3DML engine, using a small dataset of just 13 images.

The photorealistic DeepFake in high 4K resolution was generated with several neural networks in our app on 3DML engine basis. The fascinating result was achievable even with a small dataset of 13 old photos https://t.co/rCefQiTXt5 #CreatedWithPhygitalPlus #DeepFake #PTI #3DML pic.twitter.com/CIwwRPKB6v

— PHYGITALISM (@phygitalism) January 24, 2022