“India is a great place to help humanity using AI”

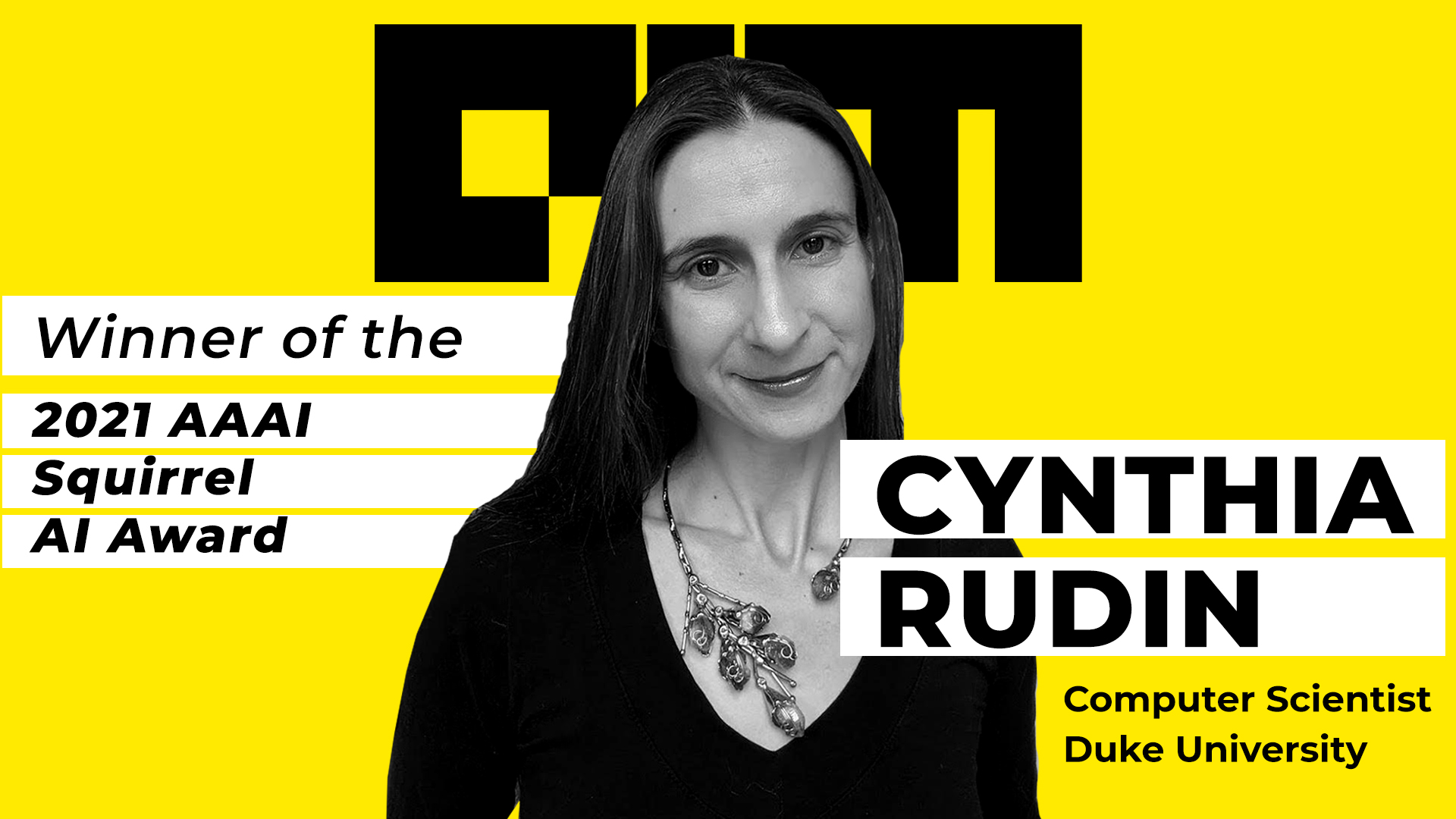

Cynthia Rudin

Many researchers and scholars have entered the field of artificial intelligence and machine learning. But while most focus on improving the algorithms themselves, Duke University computer scientist and engineer Cynthia Rudin chose a less-travelled path: using the power of AI to help society and serve humanity.

Analytics India Magazine caught up with Cynthia Rudin, Professor of Computer Science at Duke University. Her research focuses on ML tools that help humans make better decisions, mainly interpretable machine learning and its applications. The Association for the Advancement of Artificial Intelligence (AAAI) recently awarded Rudin the $1 million Squirrel AI Award for Artificial Intelligence for the Benefit of Humanity for her contributions to the area. Founded in 1979, AAAI is a major international scientific society that supports AI researchers, practitioners, and educators.

AIM: Can you take us through your journey from entering the ML field to winning the Squirrel AI Award for Artificial Intelligence for the Benefit of Humanity 2021?

Cynthia: As a PhD student, I was studying the theoretical properties of algorithms. It was definitely fun, but after I graduated, I wanted to see how ML worked in the real world, so I took a job trying to help maintain the NYC power grid with AI. That project was very difficult, and it led me to understand that more “powerful” ML techniques weren’t helping with most of the real-world problems I was working on. On the other hand, if I wanted to troubleshoot, trust, and use ML models in practice, they needed to be interpretable – I needed to explain my models to the power engineers to get their help troubleshooting. So I changed my research agenda to focus on interpretable models.

At the time, there were less than a handful of AI researchers in the world focusing on this, and the ML community as a whole saw no value in interpretability. In fact, they felt it was ruining the “mystique” of ML as a type of technology that could find subtle patterns that humans couldn’t understand. So, it was extremely unpopular. However, working on interpretable models let me have more of an impact on the world (even if it was hard to publish the papers!): I started working on many different healthcare problems, applying interpretable AI to problems in neurology, psychiatry, sleep medicine, urology, and so on.

On winning the award, A ‘New Nobel’

Some of our models are used in high-stakes decisions in hospitals. I also started working on problems in policing and criminal justice. Our code for crime series detection was posted on my website and picked up by the New York Police Department (NYPD), which turned it into the powerful Patternizr algorithm. Patternizr determines whether a new crime (perhaps a break-in) is part of a series of crimes committed by the same people. I worked on criminal recidivism prediction, showing that interpretable models were just as accurate as black-box models, which makes it unclear why we subject people to black-box predictive models in criminal justice.

I also worked on computer vision, showing that even for deep neural networks, we could create interpretable neural networks that were just as accurate. When the machine learning world started trying to “explain” black-box models, I pushed back, telling them how critical it is to use interpretable models rather than “explained” black-box models for high-stakes decisions and highlighting the fact that interpretable models are just as accurate if you train them carefully. My unusual work path also opened a lot of avenues for me to serve on professional and government committees, where I was often the only AI expert, and I’ve also done a lot of volunteer work for professional societies. So when this award came along, it was essentially designed for what I had already been doing but never expected to be rewarded for in a million years.

AIM: You direct the Interpretable ML lab at Duke University; what are some of the most important applications interpretable machine learning can produce for humanity?

Cynthia: Let’s think more broadly about the most serious problems affecting our society. To me, two things stand out: safety and inequity.

- In terms of inequity, healthcare stands out as an area where we need to improve. Healthcare is not distributed equitably around the world. We need to improve the availability of high-quality healthcare so that more people get access to better care. Interpretable machine learning can help with that. If we can provide interpretable ML tools that help clinicians make difficult decisions and use our tools to help train physicians (and patients), we can make a difference.

- In terms of safety, we need to reduce crimes and stem the violence. For instance, AI can find subtle but interpretable patterns in criminal activity that could be used to determine which crimes might be related to each other (think of patterns of break-ins). Criminals tend to use the same tactics because they have worked in the past. This consistency in their behaviour could allow interpretable machine learning to find the patterns and explain them to crime analysts or police officers.

AIM: What are the three most pressing societal issues you would like to solve with the help of machine learning? Is there any for which you would like to make a special appeal?

Cynthia: I admit that I often wish that AI could do more to solve the world’s problems. It would be amazing if AI could help deal with refugee crises and extreme poverty, and reverse climate change in a major way. It could happen, I suppose. Who knows, perhaps AI will be used to uncover massive criminal bank accounts with billions of dollars in them, and this money would then be used for building homes for refugees or something. Don’t underestimate the power of AI!

AIM: As a professor, what would you suggest to Indian students who are working in or aspiring to enter the ML field?

Cynthia: India is a great place to help humanity using AI because there is so much need. The challenge is setting up the problem and figuring out how to get data to support it and translate it into practice. I found that it was less difficult to get models launched in practice if the domain experts could understand them, which is why I went into interpretable machine learning.

Another important tip is that it is quite easy to make mistakes in differentiating between correlation and causation, so be careful when applying ML techniques; they are typically designed for prediction only, so it is important not to interpret the model’s parameters as causal effects. There are special techniques for causal inference instead. (I happen to like almost-exact-matching techniques for this because they are interpretable.)
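
The point about prediction versus causation can be illustrated with a small simulation. The following is only a sketch under stated assumptions, not Rudin's method and not a matching technique: the variables `z`, `x`, and `y` and the helper `ols_slope` are hypothetical, and the data are synthetic. A hidden factor `z` drives both `x` and `y`, while `x` has no causal effect on `y` at all. A predictive regression of `y` on `x` still produces a clearly nonzero slope.

```python
import random


def ols_slope(xs, ys):
    """Slope b of the least-squares line y = a + b*x (hypothetical helper)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


rng = random.Random(0)  # fixed seed so the run is repeatable
n = 20000

# Assumption: z is a confounder that drives both x and y.
# By construction, x has NO causal effect on y.
z = [rng.gauss(0, 1) for _ in range(n)]
x = [zi + rng.gauss(0, 1) for zi in z]
y = [2 * zi + rng.gauss(0, 1) for zi in z]

# Predictive slope of y on x: useful for prediction, but not a causal effect.
# In theory it is cov(x, y) / var(x) = 2 / 2 = 1, so it should land near 1.
naive = ols_slope(x, y)

# Adjust for the confounder: remove the part of x and y explained by z,
# then regress the leftovers on each other. This should land near 0.
b_xz = ols_slope(z, x)
b_yz = ols_slope(z, y)
x_resid = [xi - b_xz * zi for xi, zi in zip(x, z)]
y_resid = [yi - b_yz * zi for yi, zi in zip(y, z)]
adjusted = ols_slope(x_resid, y_resid)

print(f"naive slope of y on x:   {naive:.3f}")
print(f"slope after adjusting z: {adjusted:.3f}")
```

The adjustment works here only because the confounder is observed and the relationships are linear by construction. Real data rarely offer that, which is why dedicated causal inference techniques exist.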

AIM: Which books and resources would you suggest for ML aspirants?

Cynthia: There are lots of online courses now. For instance, I post all my advanced graduate lectures on YouTube and the lecture notes on my website. These materials are freely open to anyone. My lectures are somewhat advanced and require knowledge of probability and linear algebra, but there are many popular courses online you could take at a more introductory level.

AIM: Can you share any of your research work so far that is especially close to your heart?

Cynthia: It’s hard to pin down a favourite! The paper from my lab that is most well-known is this one: Stop Explaining Black Box Machine Learning Models for High Stakes Decisions and Use Interpretable Models Instead.

This paper explains why there is a chasm between explaining a black box and using an inherently interpretable model. It also gives many examples of interpretable machine learning models.

AIM: Much research suggests a dearth of women in the data science field, even though enrollment in colleges is good. Given your long academic background, can you mention some of the reasons for this?

Cynthia: I’m actually not qualified to answer this one because I have never studied it! To be honest, I think it’s getting better now that it’s acceptable to do a much broader type of work in data science than before. My lab has always had lots of women in it. I definitely remember times in the past when I would go to conferences and feel that I didn’t quite belong, either because I was female or because my ideas – and what I thought was important – were different from the mainstream, but I think maybe those days are coming to an end. Or at least I’m hopeful about that.