
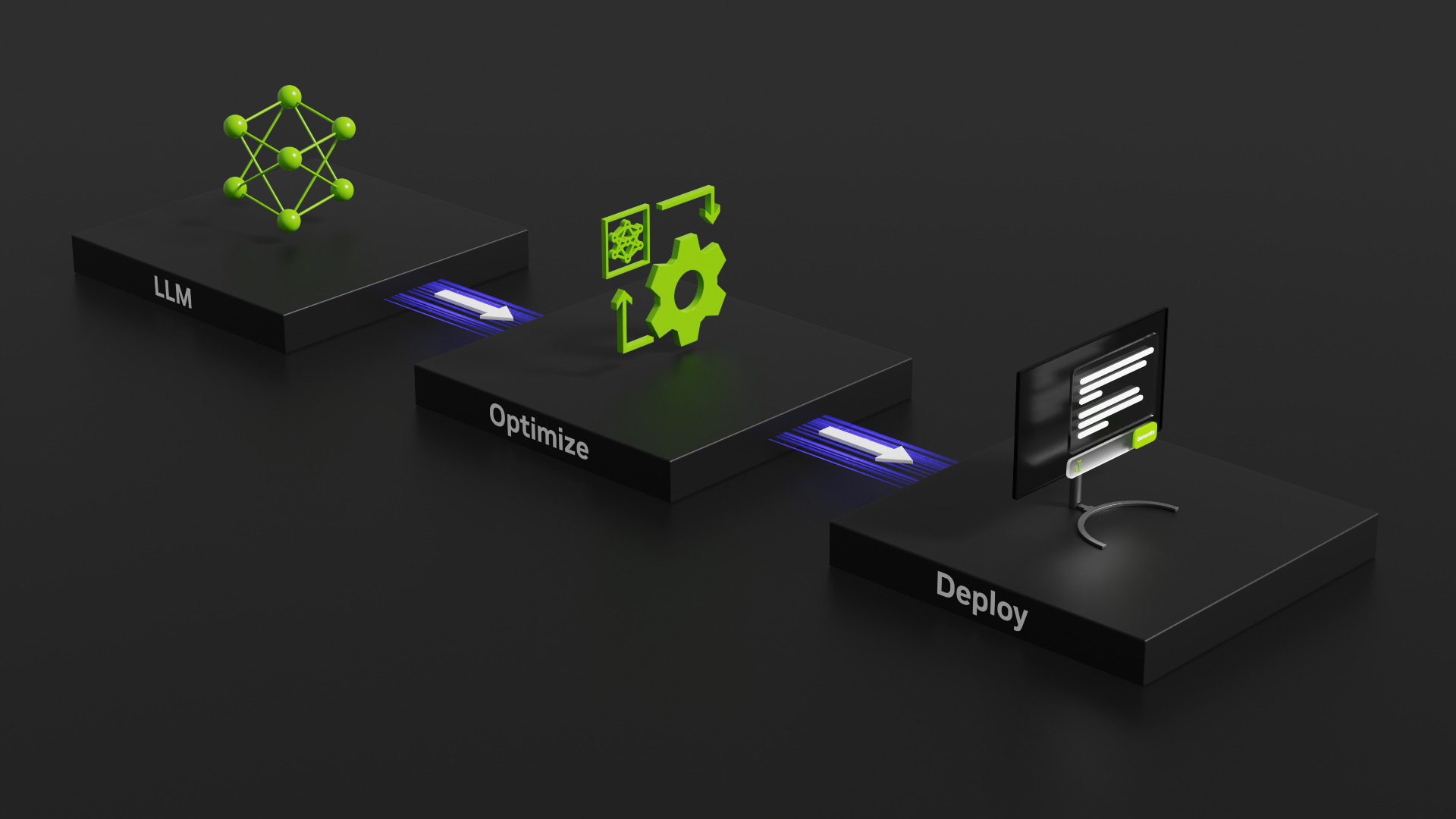

NVIDIA TensorRT-LLM has introduced optimisations for peak throughput and memory efficiency, significantly improving LLM inference performance. On NVIDIA H200 GPUs, the latest TensorRT-LLM improvements deliver a 6.7x speedup for Llama 2 70B compared with NVIDIA A100.

These enhancements also make it possible to run very large models such as Falcon-180B on a single H200 GPU, a task that previously required at least eight NVIDIA A100 Tensor Core GPUs.

The Llama 2 70B speedup comes largely from optimised Grouped Query Attention (GQA). GQA is a variant of multi-head attention in which several query heads share a single key/value head, which shrinks the key/value cache, and it is a central part of the Llama 2 70B architecture.
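To see why sharing key/value heads matters, the sketch below estimates the key/value cache stored per generated token with and without GQA. It is a back-of-the-envelope calculation, not TensorRT-LLM code: the helper name is hypothetical, FP16 storage is assumed, and the configuration (80 layers, 64 query heads, 8 key/value heads, head dimension 128) is assumed from the publicly released Llama 2 70B model configuration.

```python
# Hypothetical helper: estimates KV-cache bytes per token for a decoder-only
# transformer. Config values are assumed from the public Llama 2 70B release.

def kv_cache_bytes_per_token(num_layers: int, num_kv_heads: int,
                             head_dim: int, bytes_per_elem: int = 2) -> int:
    """Bytes of K and V cached per token across all layers (FP16 by default)."""
    # Factor of 2: one cached tensor for keys, one for values.
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem


LAYERS, Q_HEADS, KV_HEADS, HEAD_DIM = 80, 64, 8, 128

# Standard MHA: every query head keeps its own K/V head.
mha = kv_cache_bytes_per_token(LAYERS, Q_HEADS, HEAD_DIM)
# GQA: groups of 8 query heads share one K/V head.
gqa = kv_cache_bytes_per_token(LAYERS, KV_HEADS, HEAD_DIM)

print(f"MHA: {mha / 2**20:.2f} MiB/token")  # MHA: 2.50 MiB/token
print(f"GQA: {gqa / 2**20:.2f} MiB/token")  # GQA: 0.31 MiB/token
print(f"reduction: {mha // gqa}x")          # reduction: 8x
```

A smaller cache per token means more sequences fit in GPU memory at once and less data has to be read per generated token, which is where optimised GQA kernels can pay off.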

Benchmarks of Llama 2 70B across a range of input and output sequence lengths show high throughput on H200. As the output sequence length grows, raw throughput falls, but the speedup over A100 grows significantly.

Software improvements in TensorRT-LLM alone account for a 2.4x gain over the previous TensorRT-LLM release running on the same H200 hardware.

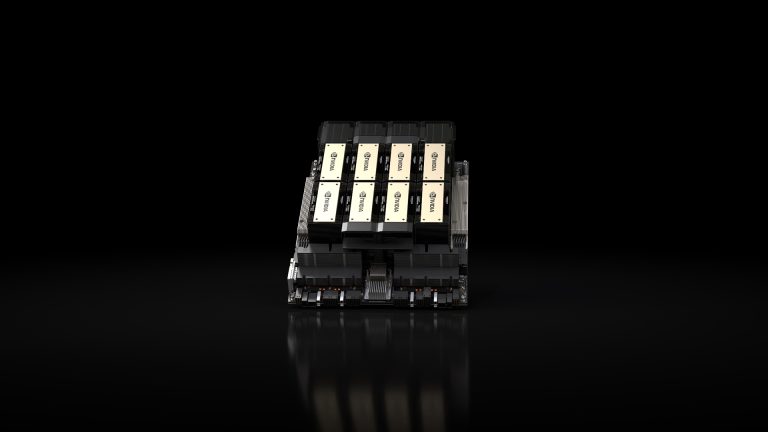

Falcon-180B, known for its size and accuracy, previously required eight NVIDIA A100 Tensor Core GPUs. The latest TensorRT-LLM advancements, including a custom INT4 implementation of AWQ (Activation-aware Weight Quantization), allow the model to run on a single H200 Tensor Core GPU. The H200 has 141 GB of HBM3e memory with nearly 5 TB/s of memory bandwidth.
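The arithmetic behind fitting Falcon-180B on one GPU can be sketched from weight size alone. This is a rough estimate under stated assumptions: a nominal 180 billion parameters, decimal gigabytes, and only the weights counted (KV cache, activations, and quantization scales are ignored, so real requirements are higher). The `weight_gb` helper is hypothetical.

```python
# Back-of-the-envelope weight footprint for a ~180B-parameter model.
# Assumptions: weights only; KV cache, activations and quantization
# scales/zero points are ignored, so real memory needs are higher.

PARAMS = 180e9   # nominal Falcon-180B parameter count
H200_GB = 141    # H200 HBM3e capacity, as stated in the article


def weight_gb(params: float, bits_per_weight: int) -> float:
    """Weight storage in decimal gigabytes."""
    return params * bits_per_weight / 8 / 1e9


for name, bits in [("FP16", 16), ("FP8", 8), ("INT4", 4)]:
    gb = weight_gb(PARAMS, bits)
    fits = "fits" if gb < H200_GB else "does not fit"
    print(f"{name}: {gb:.0f} GB -> {fits} on one H200 ({H200_GB} GB)")
# FP16: 360 GB -> does not fit on one H200 (141 GB)
# FP8: 180 GB -> does not fit on one H200 (141 GB)
# INT4: 90 GB -> fits on one H200 (141 GB)
```

Only 4-bit weights leave headroom on a 141 GB device, which is consistent with INT4 AWQ being the enabler for single-GPU Falcon-180B.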

The custom AWQ kernels in the latest TensorRT-LLM release perform their computations in FP8 precision on NVIDIA Hopper GPUs, using Hopper's Tensor Cores. This allows the entire Falcon-180B model to run on a single H200 GPU at an inference throughput of up to 800 tokens/second.
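The idea behind AWQ can be illustrated in a few dozen lines. The sketch below is a simplified, pure-Python take on the technique, not TensorRT-LLM's implementation: input channels that see large activations are scaled up in the weights (and the activations scaled down by the same factor, so the product is unchanged before quantization), the weights are rounded to 4-bit integers per group, and a small grid of scaling exponents is searched for the lowest output error. All function names, the group size, and the alpha grid are hypothetical, and the FP8 compute and Tensor Core parts of the real kernels are not modelled; everything here uses ordinary Python floats.

```python
import random


def quantize_int4_groups(row, group_size):
    """Round-trip one weight row through asymmetric INT4 (16 levels),
    with one scale and zero point per group of consecutive weights."""
    out = []
    for start in range(0, len(row), group_size):
        group = row[start:start + group_size]
        # Extend the range to include 0 so the zero point fits in 0..15.
        lo, hi = min(min(group), 0.0), max(max(group), 0.0)
        scale = (hi - lo) / 15 if hi > lo else 1.0
        zero = round(-lo / scale)
        for w in group:
            q = max(0, min(15, round(w / scale) + zero))  # 4-bit integer code
            out.append((q - zero) * scale)                 # dequantized value
    return out


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def awq_style_search(W, X, alphas=(0.0, 0.25, 0.5, 0.75, 1.0), group_size=4):
    """Simplified AWQ-style search. Input channel j of W is multiplied by
    s[j] = mean|x_j| ** alpha and activations are divided by s[j], so W @ x is
    unchanged before quantization. Returns (error, alpha, Wq, s) for the alpha
    with the lowest squared output error on calibration inputs X."""
    n_in = len(W[0])
    act_mag = [sum(abs(x[j]) for x in X) / len(X) for j in range(n_in)]
    reference = [matvec(W, x) for x in X]
    best = None
    for alpha in alphas:
        s = [max(m, 1e-8) ** alpha for m in act_mag]
        Wq = [quantize_int4_groups([w * s[j] for j, w in enumerate(row)], group_size)
              for row in W]
        err = 0.0
        for x, y_ref in zip(X, reference):
            y = matvec(Wq, [x[j] / s[j] for j in range(n_in)])
            err += sum((a - b) ** 2 for a, b in zip(y, y_ref))
        if best is None or err < best[0]:
            best = (err, alpha, Wq, s)
    return best


# Demo with random weights and two hypothetical outlier activation channels.
random.seed(0)
n_out, n_in = 8, 16
salient = {3, 10}
W = [[random.gauss(0, 1) for _ in range(n_in)] for _ in range(n_out)]
X = [[random.gauss(0, 1) * (20.0 if j in salient else 1.0) for j in range(n_in)]
     for _ in range(32)]
rtn_err = awq_style_search(W, X, alphas=(0.0,))[0]  # alpha = 0: plain rounding
err, alpha, _, _ = awq_style_search(W, X)
print(f"plain INT4 rounding error: {rtn_err:.3f}")
print(f"AWQ-style (alpha={alpha}) error: {err:.3f}")
```

Because alpha = 0 (no scaling) is part of the grid, the search can never do worse than plain rounding on the calibration data; in a real deployment the division by `s` would be folded into the preceding operation rather than applied at run time.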


TensorRT-LLM's custom Multi-Head Attention (MHA) implementation supports GQA, Multi-Query Attention (MQA) and standard MHA, and uses NVIDIA Tensor Cores in both the context (prefill) and generation phases to deliver high performance on NVIDIA GPUs.
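One routine can cover all three attention variants because they differ only in how many key/value heads exist: MHA has one per query head, MQA has a single shared one, and GQA sits in between. The pure-Python sketch below shows a single generation-phase (decode) step under that assumption; `attention_decode` is a hypothetical name, and the sketch says nothing about how TensorRT-LLM's actual kernels are organised or how they use Tensor Cores.

```python
import math


def attention_decode(q_heads, k_cache, v_cache):
    """One decode step of attention where KV heads may be fewer than query heads.

    q_heads: [n_q][d]      query vector for the new token, per query head
    k_cache: [n_kv][t][d]  cached keys, per KV head
    v_cache: [n_kv][t][d]  cached values, per KV head
    n_kv == n_q -> MHA, n_kv == 1 -> MQA, 1 < n_kv < n_q -> GQA.
    """
    n_q, n_kv = len(q_heads), len(k_cache)
    assert n_q % n_kv == 0, "query heads must split evenly into KV groups"
    group = n_q // n_kv
    d = len(q_heads[0])
    out = []
    for h, q in enumerate(q_heads):
        kv = h // group  # consecutive query heads share one KV head
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in k_cache[kv]]
        m = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * v[i] for w, v in zip(weights, v_cache[kv])) / total
                    for i in range(d)])
    return out


# Example: 8 query heads, head dim 4, 3 cached tokens, for MHA, GQA and MQA.
n_q, d, t = 8, 4, 3
q_heads = [[math.sin(h + i) for i in range(d)] for h in range(n_q)]
for n_kv in (8, 2, 1):
    k_cache = [[[math.cos(g + j + i) for i in range(d)] for j in range(t)] for g in range(n_kv)]
    v_cache = [[[float(g + j)] * d for j in range(t)] for g in range(n_kv)]
    out = attention_decode(q_heads, k_cache, v_cache)
    print(n_kv, "KV heads ->", len(out), "outputs of dim", len(out[0]))
```

GQA gives exactly the same result as MHA with each KV head repeated `group` times; sharing heads simply avoids storing and reading those repeated copies.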

Despite the smaller memory footprint, TensorRT-LLM AWQ maintains accuracy above 95%, making more efficient use of GPU compute resources and reducing operational costs.

These advancements are set to be incorporated into upcoming releases (v0.7 and v0.8) of TensorRT-LLM.