“All the impressive achievements of deep learning amount to just curve fitting.”

Judea Pearl

Machine learning in its most reduced form is sometimes referred to as glorified curve fitting. In a way, it is true. Machine learning models are typically founded on the principles of convergence; fitting data to the model. Whether this approach will lead to AGI is still a debatable subject. However, for now, deep neural networks are the best possible solution, and they use optimisation methods to arrive at the target.

Fundamental optimisation methods are typically categorised into first-order, high-order and derivative-free optimisation methods. One usually comes across methods that fall into the category of the first-order optimisation such as the gradient descent and its variants.

According to an extensive survey done on optimisation methods by Shiliang et al., here are a few of the top methods that one can frequently encounter in their ML journey:

Gradient Descent

The gradient descent method is the most popular optimisation method. The idea of this method is to update the variables iteratively in the (opposite) direction of the gradients of the objective function. With every update, this method guides the model to find the target and gradually converge to the optimal value of the objective function.

Stochastic Gradient Descent

Stochastic gradient descent (SGD) was proposed to address the computational complexity involved in each iteration for large scale data. The equation is given as:

Taking the values and adjusting them iteratively based on different parameters in order to reduce the loss function is called back-propagation.

In this method, one sample randomly used to update the gradient(theta) per iteration instead of directly calculating the exact value of the gradient. The stochastic gradient is an unbiased estimate of the real gradient. This optimisation method reduces the update time for dealing with large numbers of samples and removes a certain amount of computational redundancy. Read more here.

Adaptive Learning Rate Method

Learning rate is one of the key hyperparameters that undergo optimisation. Learning rate decides whether the model will skip certain portions of the data. If the learning rate is high, then the model might miss on subtler aspects of the data. If it is low, then it is desirable for real-world applications. Learning rate has a great influence on SGD. Setting the right value of the learning rate can be challenging. Adaptive methods were proposed to this tuning automatically.

The adaptive variants of SGD have been widely used in DNNs. Methods like AdaDelta, RMSProp, Adam use the exponential averaging to provide effective updates and simplify the calculation.

- Adagrad: weights with a high gradient will have low learning rate and vice versa

- RMSprop: adjusts the Adagrad method such that it reduces its monotonically decreasing learning rate.

- Adam is almost similar to RMSProp but with momentum

- Alternating Direction Method of Multipliers (ADMM) is another alternative to Stochastic Gradient Descent (SGD)

The difference between gradient descent and AdaGrad methods is that the learning rate is no longer fixed. It is computed using all the historical gradients accumulated up to the latest iteration. Read more here.

Conjugate Gradient Method

The conjugate gradient (CG) approach is used for solving large scale linear systems of equations and nonlinear optimisation problems. The first-order methods have a slow convergence speed. Whereas, the second-order methods are resource-heavy. Conjugate gradient optimisation is an intermediate algorithm, which combines the advantages of first-order information while ensuring the convergence speeds of high-order methods.

Know more about gradient methods here.

Derivative-Free Optimisation

For some optimisation problems, it can always be approached through a gradient because the derivative of the objective function may not exist or is not easy to calculate. This is where derivative-free optimisation comes into the picture. It uses a heuristic algorithm that chooses methods that have already worked well, rather than derives solutions systematically. Classical simulated annealing arithmetic, genetic algorithms and particle swarm optimisation are few such examples.

Zeroth Order Optimisation

Zeroth Order optimisation was introduced recently to address the shortcomings of derivative-free optimisation. Derivative-free optimisation methods find it difficult to scale to large-size problems and suffer from lack of convergence rate analysis.

Zeroth Order advantages include:

- Ease of implementation with only a small modification of commonly-used gradient-based algorithms

- Computationally efficient approximations to derivatives when they are difficult to compute

- Comparable convergence rates to first-order algorithms.

For Meta Learning

Meta-optimiser is popular within the meta learning regime. The purpose of meta learning is to achieve fast learning, which, in turn, makes gradient descent more accurate in the optimisation. The optimisation process itself can be regarded as a learning problem to learn the prediction gradient rather than a determined gradient descent algorithm. Due to the similarity between the gradient update in backpropagation and the cell state update, LSTM is often used as the meta-optimiser.

Whereas, model-agnostic meta learning algorithm (MAML) is another method, which learns the parameters of models subjected to gradient descent methods that include classification, regression and reinforcement learning. The basic idea of the model-agnostic algorithm is to begin multiple tasks at the same time, and then get the synthetic gradient direction of different tasks, so as to learn a common base model.

These are few of the frequently used optimisation methods. Apart from these, there are other methods, which can be found in this study.

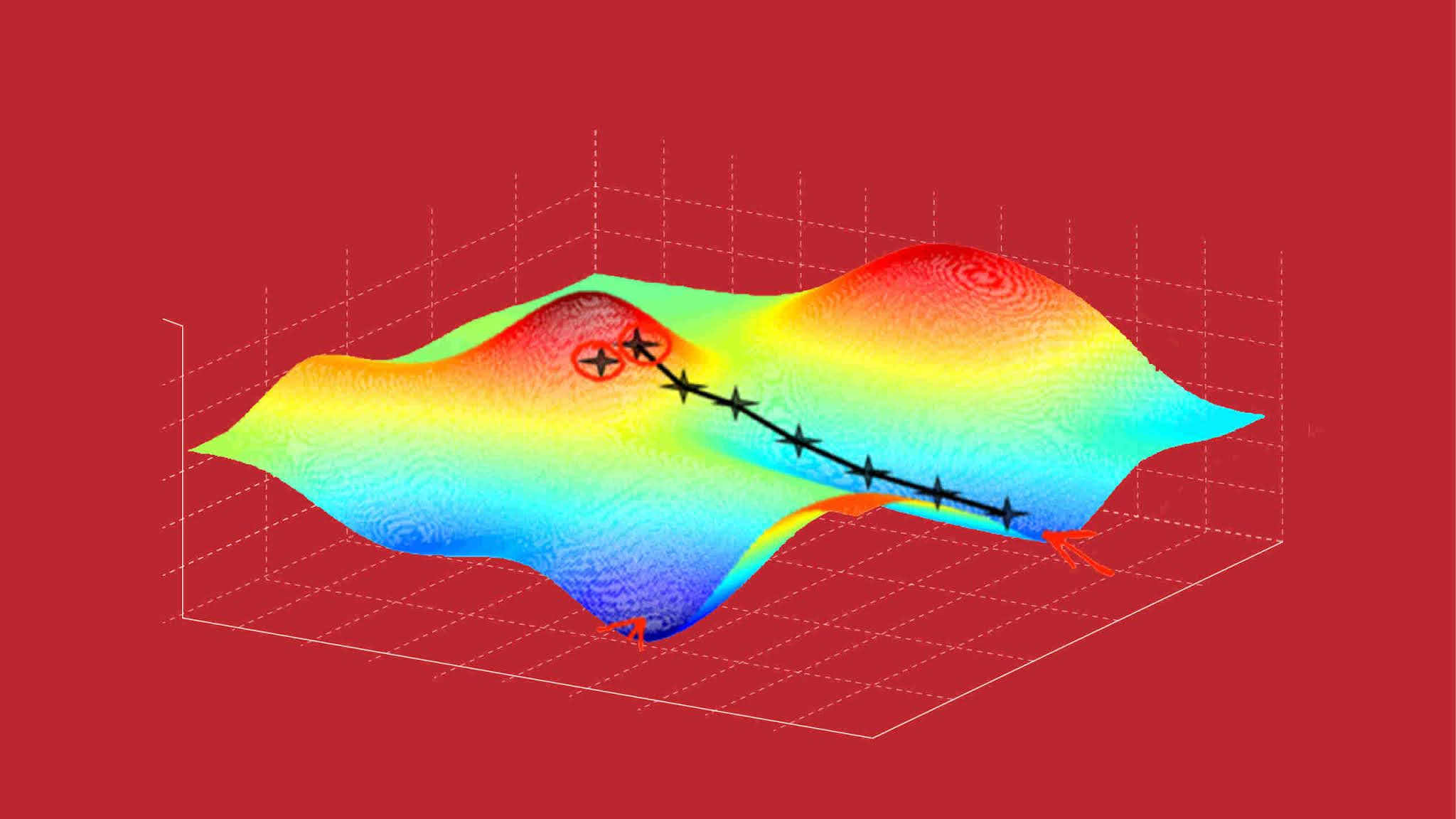

That said, the problem of optimisation for deep learning doesn’t end here because not all problems come under convex optimisation. Non-convex optimisation is one of the difficulties in the optimisation problem.

One approach is to transform the non-convex optimisation into a convex optimisation problem, and then use the convex optimisation method. The other is to use some special optimisation method such as projection gradient descent, alternating minimisation, expectation maximisation algorithm and stochastic optimisation and its variants.