Recurrent Neural Networks (RNNs) are among the most common neural networks used in Natural Language Processing because of their promising results. RNN language models are applied in two main ways. We can either have the model predict the next words of a sentence and correct its errors as it predicts, or we can train the model on a particular genre so that it produces similar text, which is fascinating.

What is a Recurrent Neural Network?

The idea behind an RNN is to take the order of the input into account. To predict the next word in a sentence, we need to remember which word appeared at the previous time step. These networks are called recurrent because the same step is carried out for every element of the input. Because the network considers the previous word while predicting, it acts like a memory that stores it for a short period of time.

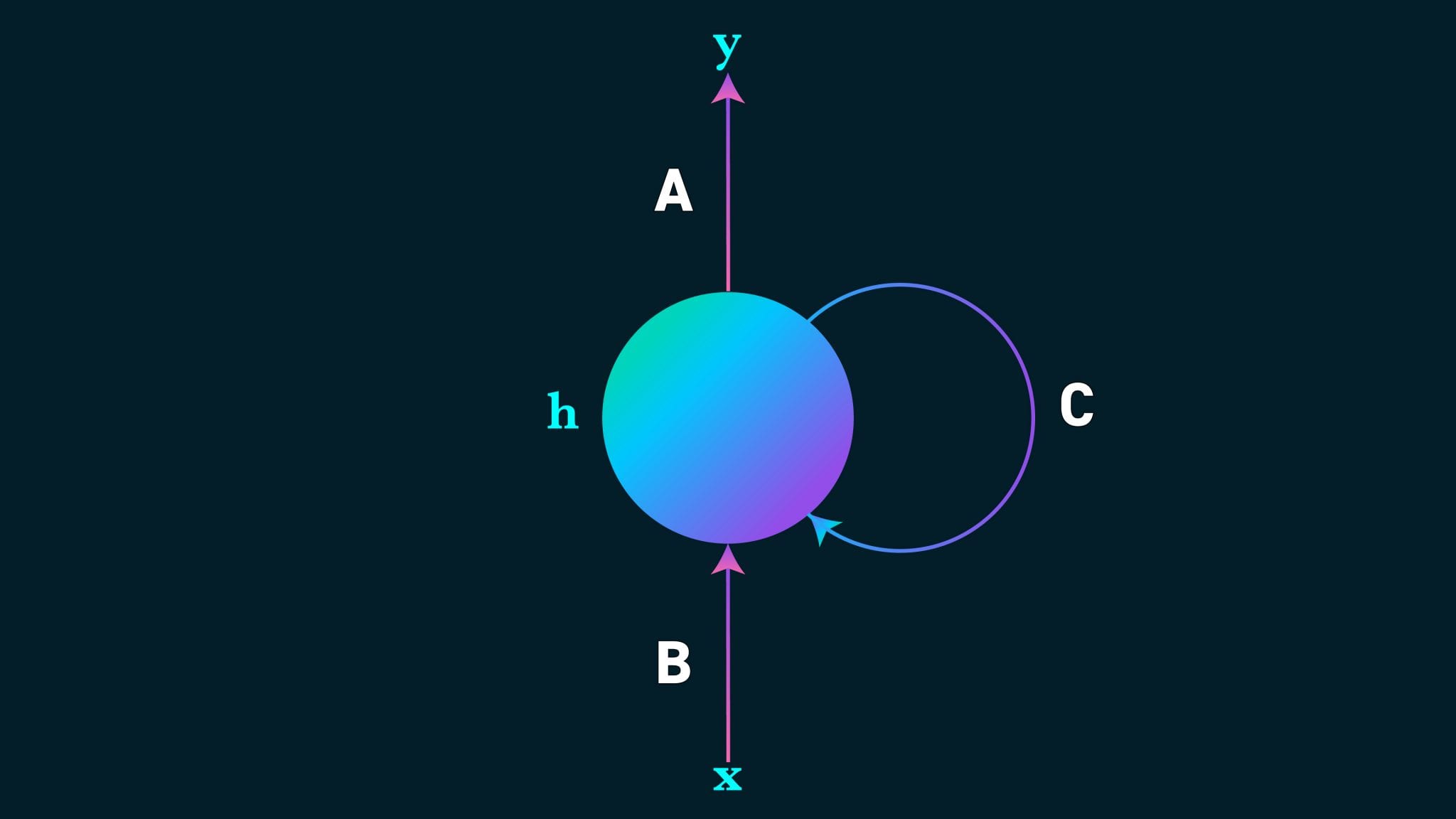

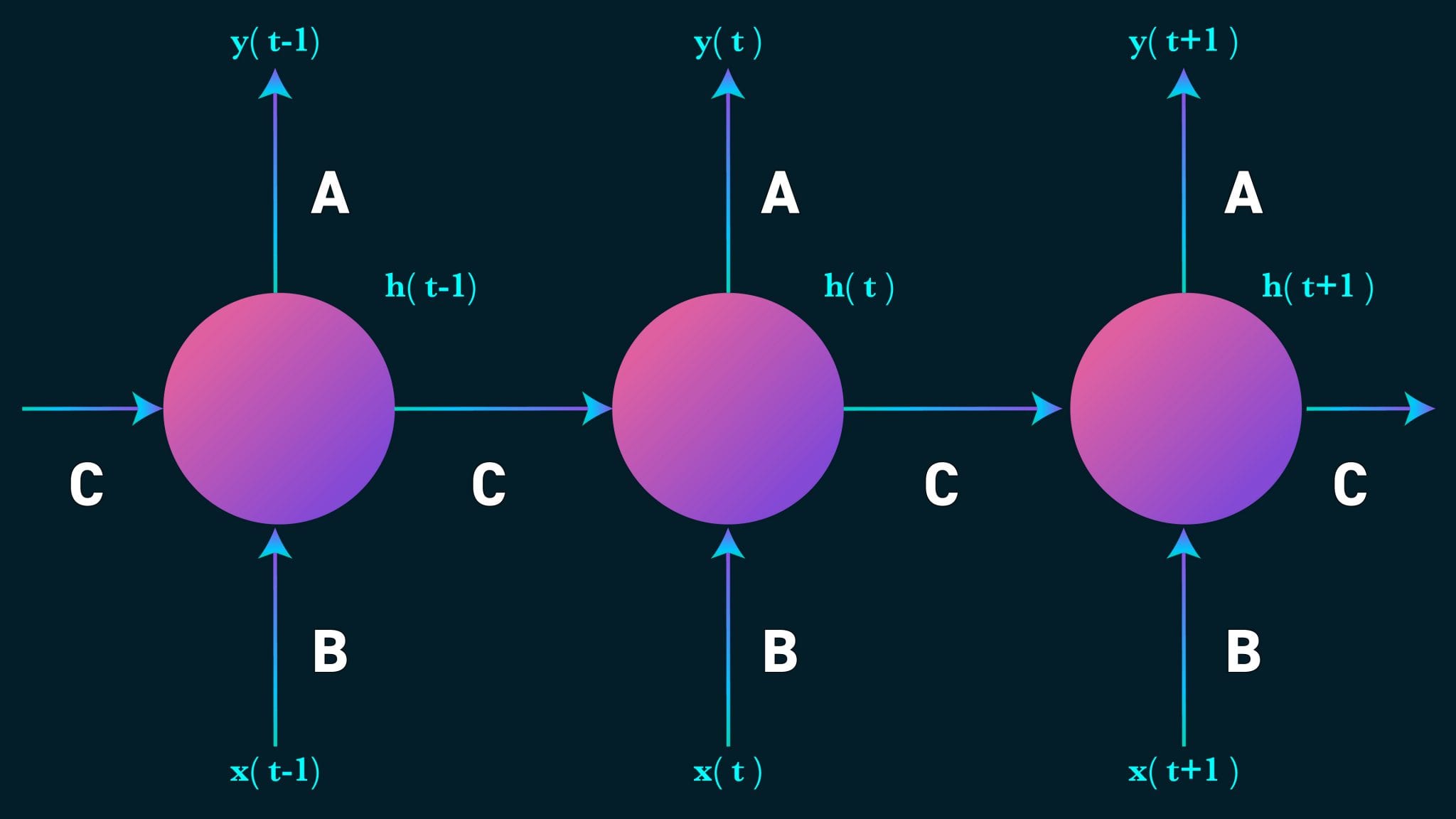

The diagram above shows a three-layer recurrent neural network, unrolled to show what happens at each iteration. Let's look at each part:

- xt is the input at time step t. xt-1 is the previous word in the sentence or sequence.

- ht is the hidden state at time step t. It is computed from the current input xt and the previous hidden state ht-1 through a non-linear activation function such as tanh or ReLU. At the first time step there is no previous state, so ht-1 is usually initialized to zero.

- yt is the output at time step t. It is the word with the highest probability, where the probabilities come from an activation function such as softmax applied to the hidden state, e.g. yt = softmax(A ht).

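The RNN in the figure above performs the same computation at each step using the same weights A, B and C; only the inputs differ from one time step to the next. Sharing the weights keeps the number of parameters small, which makes the model faster and less complex. The hidden state mostly reflects the most recent words, and information about earlier words fades quickly, so it acts like a short-term memory.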
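The unrolled computation described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a trained model: the names `W_in`, `W_rec` and `W_out` are assumptions standing in for the three shared weight matrices (the figure's A, B and C, in whichever order the figure assigns them), and the toy numbers are made up.

```python
import math

def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def softmax(z):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(z)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in z]
    total = sum(exps)
    return [e / total for e in exps]

def rnn_forward(inputs, W_in, W_rec, W_out):
    """Run a plain RNN over a sequence, reusing the same weights at every step."""
    h = [0.0] * len(W_rec)  # the initial hidden state is all zeros
    outputs = []
    for x in inputs:  # one iteration per time step
        pre = [a + b for a, b in zip(matvec(W_in, x), matvec(W_rec, h))]
        h = [math.tanh(p) for p in pre]            # ht depends on xt and ht-1
        outputs.append(softmax(matvec(W_out, h)))  # probabilities over the vocabulary
    return outputs, h

# Toy setup (hypothetical values): 3-word vocabulary, one-hot inputs, hidden size 2.
W_in = [[0.5, -0.3, 0.1], [0.2, 0.4, -0.6]]
W_rec = [[0.1, 0.0], [0.0, 0.1]]
W_out = [[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]]
sentence = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

probs, last_h = rnn_forward(sentence, W_in, W_rec, W_out)
predicted = [p.index(max(p)) for p in probs]  # index of the most likely word at each step
```

Note that the loop body never changes: only `x` and `h` differ between iterations, which is exactly the weight sharing described above.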

Language Modelling and Prediction:

In this method, the model estimates the likelihood of each word in a sentence. The probabilities output at one time step are used to sample the word for the next iteration. In language modelling, the input is usually a sequence of words from the data, and the output is the sequence of words predicted by the model. During training, the target for the output at time step t is the actual next word xt+1; when generating text, the word sampled at one time step becomes the input of the next.
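Both halves of this idea, shifting the sequence by one word to get training targets and feeding each sampled word back in as the next input, can be sketched as follows. The function names and the lookup table `toy_table` are hypothetical; the table is only a stand-in for one step of a trained RNN, and unlike a real RNN it looks at the last word alone.

```python
import random

def make_training_pairs(tokens):
    """Pair each input word with the word that follows it (its target)."""
    return list(zip(tokens[:-1], tokens[1:]))

def sample_word(probs, vocab, rng):
    """Draw one word according to the model's output probabilities."""
    return rng.choices(vocab, weights=probs, k=1)[0]

def generate(next_word_probs, start, vocab, length, seed=0):
    """Generate text by feeding each sampled word back in as the next input."""
    rng = random.Random(seed)
    words = [start]
    for _ in range(length):
        probs = next_word_probs(words[-1])  # stand-in for one RNN step
        words.append(sample_word(probs, vocab, rng))
    return words

vocab = ["the", "cat", "sat"]
# Hypothetical next-word probabilities, one row per current word.
toy_table = {
    "the": [0.0, 1.0, 0.0],
    "cat": [0.0, 0.0, 1.0],
    "sat": [1.0, 0.0, 0.0],
}

pairs = make_training_pairs(["the", "cat", "sat"])
text = generate(lambda w: toy_table[w], "the", vocab, 3)
```

With real model outputs the probabilities are spread over many words, so repeated runs with different seeds produce different text.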

Speech Recognition:

A sequence of acoustic signals from an audio clip is used as input. The network processes these signals and outputs a sequence of phonetic segments, each with its likelihood.

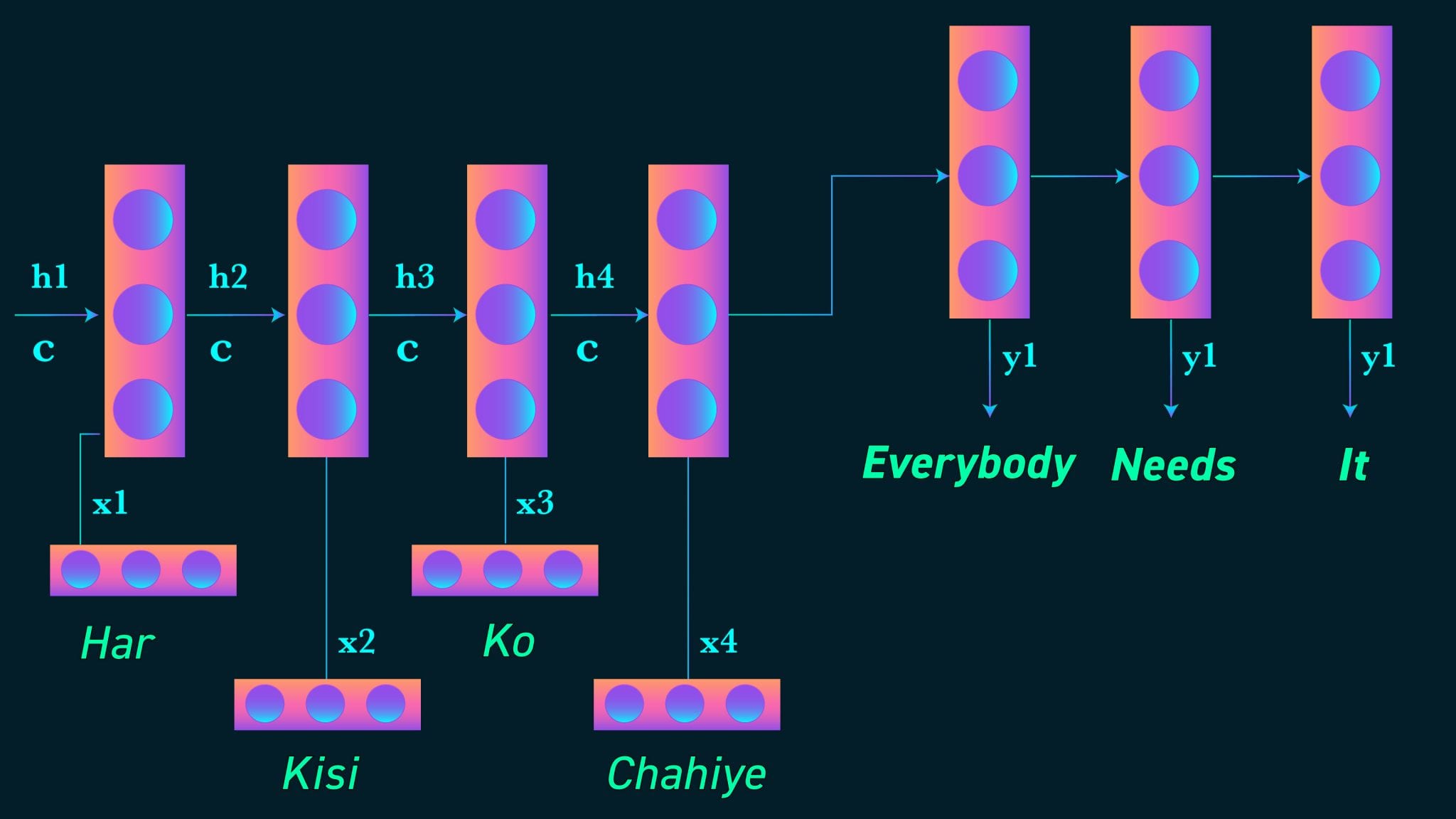

Machine Translation:

In Machine Translation, the input is a sequence in the source language (e.g. Hindi) and the output is a sequence in the target language (e.g. English). The main difference between Machine Translation and Language Modelling is that the output starts only after the complete input has been fed into the network.
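The "read everything first, then write" control flow can be sketched like this. Everything here is an illustrative assumption: `translate`, `encode_step` and `decode_step` are hypothetical names, and the dictionary-based steps are toy stand-ins for trained recurrent networks, used only to show the order in which input and output are processed.

```python
def translate(source_tokens, encode_step, decode_step, start_state, end_token, max_len=20):
    """Encoder-decoder control flow: consume the whole input, then emit the output."""
    state = start_state
    for token in source_tokens:  # phase 1: read the complete source sentence
        state = encode_step(state, token)

    output, prev_word = [], None
    for _ in range(max_len):  # phase 2: only now start producing words
        state, word = decode_step(state, prev_word)
        if word == end_token:
            break
        output.append(word)
        prev_word = word
    return output

# Toy stand-ins (hypothetical): the "state" is just the tuple of source words seen so far.
toy_dict = {"namaste": "hello", "duniya": "world"}

def encode_step(state, token):
    return state + (token,)

def decode_step(state, prev_word):
    if not state:
        return state, "<eos>"
    return state[1:], toy_dict[state[0]]

result = translate(["namaste", "duniya"], encode_step, decode_step, (), "<eos>")
```

In a language model, by contrast, an output is produced inside the same loop that reads the input.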

Image recognition and characterization:

A Recurrent Neural Network and a ConvNet work together to recognize an image and generate a description for it when it has none. This combination of networks works beautifully and produces fascinating results. Here is a visual description of how it does this; the combined model even aligns the generated words with features found in the image.

What are LSTM (Long Short-Term Memory) Neural Networks?

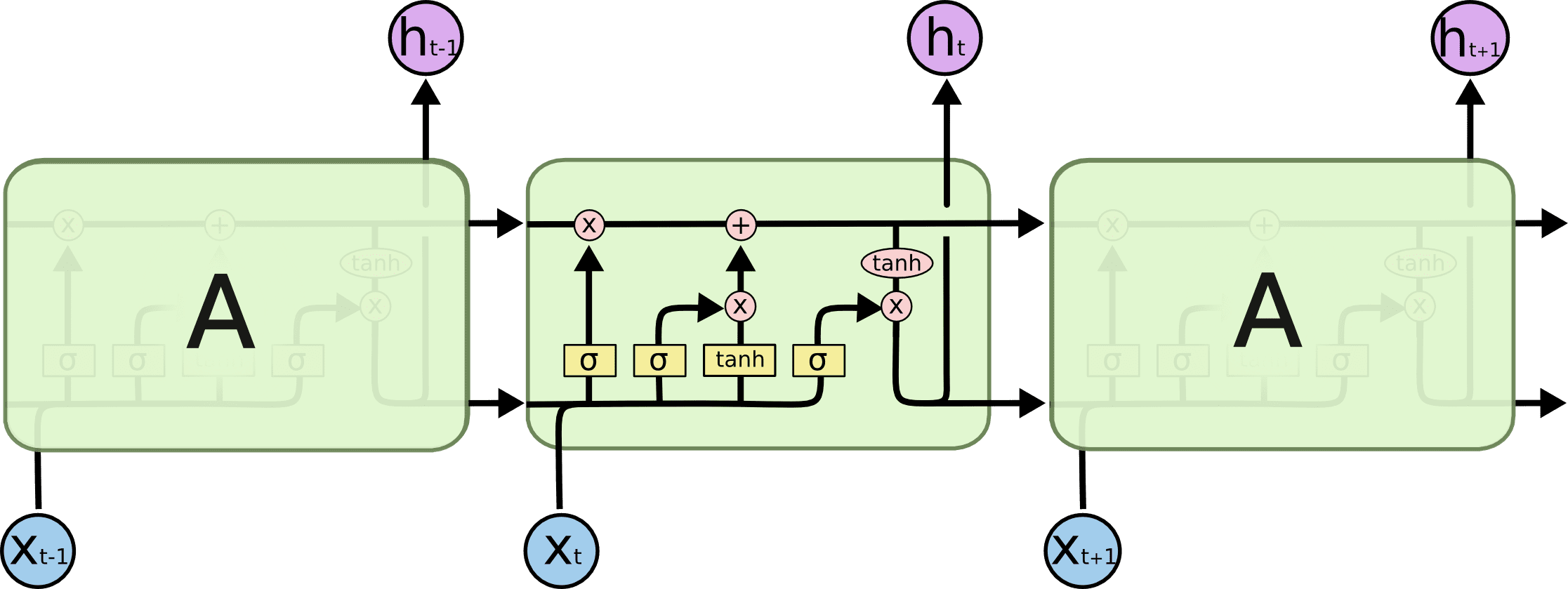

LSTM networks are popular nowadays. The units of an LSTM network are called cells, and each cell takes as input the previous state ht-1 and the current input xt. The main job of a cell is to decide what to keep in memory and what to drop from it. The previous state, the current memory and the present input work together to predict the next output.

Architecture of LSTM network:

LSTM networks have the same chain-like structure as a standard RNN, but the repeating module is different. Instead of a single neural network layer, each module has several small parts connected to each other that handle storing and removing memory.

Working of LSTM networks:

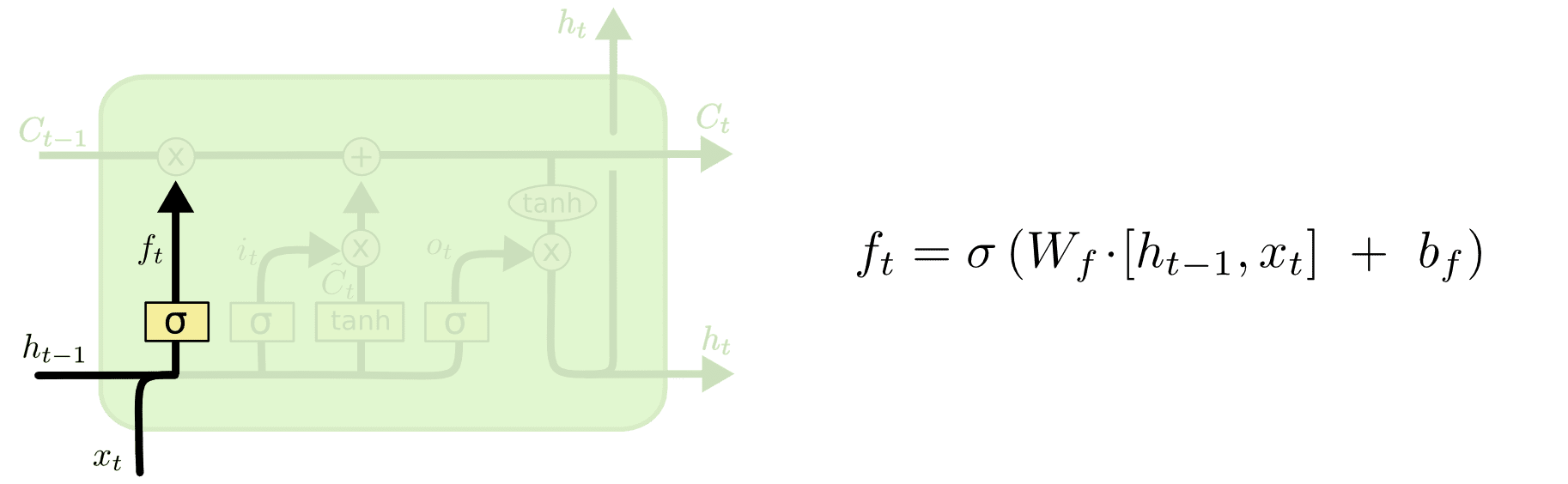

The first step in the LSTM is to decide which information to drop from the cell at that time step. This is decided by a sigmoid function, which looks at the previous state ht-1 and the current input xt and outputs a value between 0 and 1 for each entry of the cell state: 0 means drop it completely and 1 means keep it completely.
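As a rough sketch of this step, the gate below computes one sigmoid value per cell-state entry from ht-1 and xt and then scales the old cell state by it. The names `forget_gate`, `W_f` and `b_f` and all the numbers are assumptions chosen so that the effect is easy to see.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def forget_gate(h_prev, x_t, W_f, b_f):
    """Compute sigmoid(W_f . [h_prev, x_t] + b_f), one value per cell-state entry."""
    concat = h_prev + x_t  # concatenate the previous state and the current input
    return [
        sigmoid(sum(w * v for w, v in zip(row, concat)) + b)
        for row, b in zip(W_f, b_f)
    ]

# Hypothetical values: the weights push the first gate towards 1 and the second towards 0.
h_prev = [0.5, -0.5]
x_t = [1.0]
W_f = [[0.0, 0.0, 10.0], [0.0, 0.0, -10.0]]
b_f = [0.0, 0.0]

f_t = forget_gate(h_prev, x_t, W_f, b_f)
c_prev = [2.0, 3.0]
kept = [f * c for f, c in zip(f_t, c_prev)]  # first entry kept, second almost erased
```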

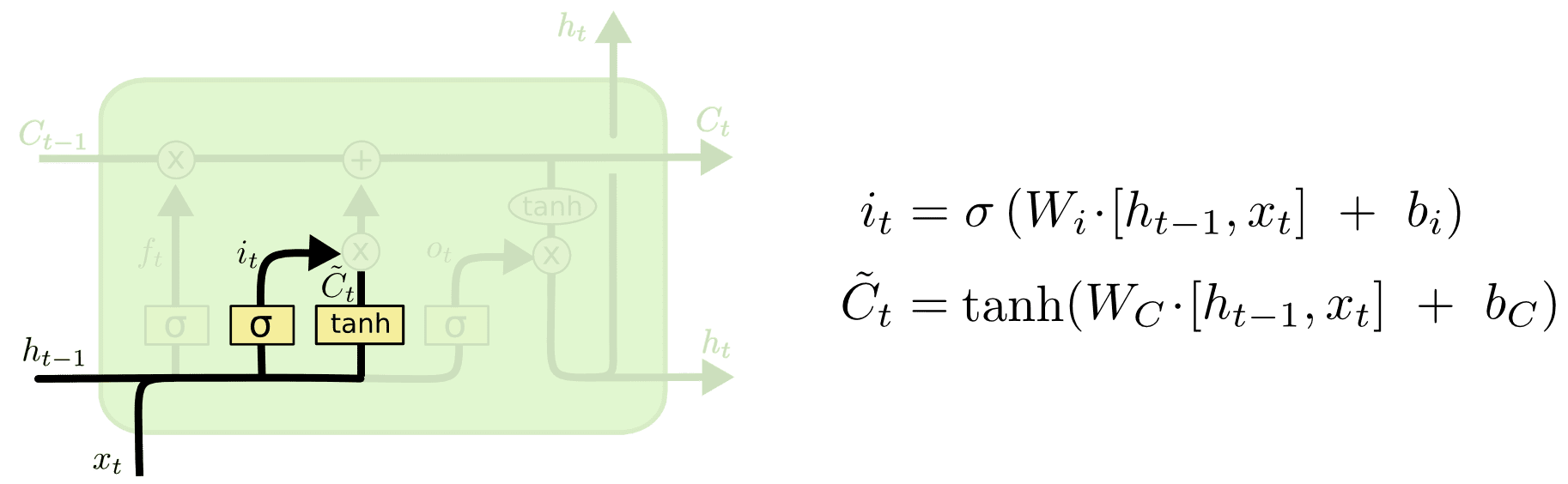

Then we have another layer, which consists of two parts: a sigmoid function and a tanh function. The sigmoid decides which values to let through (between 0 and 1). The tanh gives a weight to the values that are passed, indicating their level of importance (-1 to 1). The two results are multiplied together and added to the cell state.
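The update can be illustrated entry by entry. In this sketch, `update_cell_state` is a hypothetical helper that takes the already-computed forget values `f_t` plus the raw (pre-activation) scores `i_pre` and `g_pre` for the sigmoid and tanh parts; the numbers are made up.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def update_cell_state(c_prev, f_t, i_pre, g_pre):
    """New cell state: f_t * c_prev + i_t * g_t, applied entry by entry."""
    i_t = [sigmoid(z) for z in i_pre]    # how much of each new value to let through (0 to 1)
    g_t = [math.tanh(z) for z in g_pre]  # the new candidate values (-1 to 1)
    return [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, g_t)]

# Entry 0: keep the old value and block the new one.
# Entry 1: forget the old value and write the new one.
c_t = update_cell_state(
    c_prev=[1.0, 1.0],
    f_t=[1.0, 0.0],
    i_pre=[-20.0, 20.0],
    g_pre=[3.0, -3.0],
)
```

The first entry stays close to its old value of 1.0, while the second is replaced by roughly tanh(-3).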

Finally, we need to decide what we’re going to output. This output will be based on our cell state, but will be a filtered version. First, we run a sigmoid layer which decides what parts of the cell state we’re going to output. Then, we put the cell state through tanh to push the values to be between -1 and 1 and multiply it by the output of the sigmoid gate, so that we only output the parts we decided to.
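Putting the three steps together, one full LSTM time step might look like the sketch below. This is a minimal version under stated assumptions: the `params` dictionary and its gate names are invented for illustration, and the all-zero weights are chosen only so the result is easy to check by hand.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W . v + b for a matrix W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]

def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step; params maps a gate name to its (W, b) pair."""
    z = h_prev + x_t  # concatenate the previous state and the current input
    f = [sigmoid(a) for a in affine(*params["forget"], z)]       # what to drop
    i = [sigmoid(a) for a in affine(*params["input"], z)]        # what to let in
    g = [math.tanh(a) for a in affine(*params["candidate"], z)]  # new candidate values
    o = [sigmoid(a) for a in affine(*params["output"], z)]       # what to expose
    c_t = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h_t = [ok * math.tanh(ck) for ok, ck in zip(o, c_t)]  # filtered view of the cell state
    return h_t, c_t

# Hidden size 1, input size 1, all weights zero: every sigmoid gate is 0.5
# and the candidate is 0, so the cell state is simply halved.
zero = ([[0.0, 0.0]], [0.0])
params = {"forget": zero, "input": zero, "candidate": zero, "output": zero}
h_t, c_t = lstm_step(x_t=[1.0], h_prev=[0.0], c_prev=[2.0], params=params)
```

Calling `lstm_step` in a loop over a sequence, passing `h_t` and `c_t` back in each time, gives the chain of cells shown in the diagrams.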

Image Sources: Understanding of LSTM