Recently, a team of researchers from MIT CSAIL recommended that researchers focus on three key areas to deliver computing speed-ups: new algorithms, higher-performance software and more specialised hardware. They argued for moving away from relying only on ever-smaller hardware.

The researchers stated that semiconductor miniaturisation is running out of steam as a viable way to grow computer performance, and that industries will soon face challenges to their productivity. However, opportunities for growth in computing performance will remain if researchers focus more on software, algorithms and hardware architecture.

Just out in Science: MIT researchers say that, with the end of Moore's Law, we have to forget about silicon and start looking elsewhere.

— MIT CSAIL (@MIT_CSAIL) June 4, 2020

Paper: https://t.co/xF4v0xKBg9

Executive summary: https://t.co/920rXe3i8K @ScienceMagazine @NewsfromScience

Shrinking transistors have brought a plethora of advances in computer performance over the past few decades. These improvements come from the steady miniaturisation of computer components, which took computers from room-sized machines to devices as small as a cellphone. As a result, programmers have long been able to prioritise writing code quickly over writing code that runs quickly, because smaller, faster computer chips could always pick up the slack.

In 1975, Intel co-founder Gordon Moore predicted the regularity of this miniaturisation trend, now called Moore’s law: the number of transistors on computer chips would double every 24 months.
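A doubling every two years compounds quickly. The minimal Python sketch below assumes an idealised, perfectly regular two-year doubling (real chips never tracked the curve this exactly). `moore_growth_factor` and `projected_transistors` are hypothetical helpers written for illustration.

```python
def moore_growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Multiplicative growth after `years` if a count doubles every
    `doubling_period_years` (idealised Moore's-law assumption)."""
    if doubling_period_years <= 0:
        raise ValueError("doubling period must be positive")
    return 2.0 ** (years / doubling_period_years)


def projected_transistors(initial_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Idealised transistor count after `years`, starting from a
    hypothetical `initial_count`."""
    return initial_count * moore_growth_factor(years, doubling_period_years)


if __name__ == "__main__":
    for years in (2, 10, 20, 50):
        print(f"{years:>2} years -> x{moore_growth_factor(years):,.0f}")
```

Under this assumption, ten years of doubling is a 32-fold increase (2^5), and 50 years is 2^25, roughly a 33-million-fold increase.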

The Solution

The researchers grouped their recommendations into three categories: software, algorithms and hardware architecture, as described below.

Higher-Performance Software

According to the researchers, software can be made more efficient through performance engineering, that is, restructuring software to make it run faster. Performance engineering can remove inefficiencies in programs, known as software bloat. Bloat arises from traditional software-development strategies that aim to minimise an application’s development time rather than the time it takes to run. Performance engineering can also tailor software to the hardware on which it runs, for example, to take advantage of parallel processors and vector units.
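As a rough illustration of restructuring software to make it run faster, here is a minimal Python sketch that is not taken from the paper. Two matrix-multiplication functions compute the same result. The second reorders the loops and hoists repeated lookups out of the inner loop. The function names are hypothetical.

```python
import random
import time


def matmul_naive(a, b):
    """Textbook i-j-k triple loop over lists of lists.

    Every inner-loop step re-indexes c[i][j], a[i][k] and b[k][j],
    and the inner loop walks down a column of b.
    """
    n, m, p = len(a), len(b), len(b[0])
    c = [[0.0] * p for _ in range(n)]
    for i in range(n):
        for j in range(p):
            for k in range(m):
                c[i][j] += a[i][k] * b[k][j]
    return c


def matmul_restructured(a, b):
    """Same arithmetic, restructured for speed.

    Loops are reordered to i-k-j and row lookups are hoisted out of the
    inner loop, so the inner loop scans one row of b and one row of c in
    order. Each c[i][j] still accumulates its products in the same k
    order, so the result matches matmul_naive exactly.
    """
    n, p = len(a), len(b[0])
    c = [[0.0] * p for _ in range(n)]
    for i in range(n):
        a_row, c_row = a[i], c[i]
        for k, a_ik in enumerate(a_row):
            b_row = b[k]
            for j in range(p):
                c_row[j] += a_ik * b_row[j]
    return c


if __name__ == "__main__":
    n = 100
    a = [[random.random() for _ in range(n)] for _ in range(n)]
    b = [[random.random() for _ in range(n)] for _ in range(n)]
    for fn in (matmul_naive, matmul_restructured):
        start = time.perf_counter()
        fn(a, b)
        print(f"{fn.__name__}: {time.perf_counter() - start:.3f} s")
```

In CPython the restructured version usually runs faster because it does less indexing work per inner-loop step. The exact gain depends on the interpreter and machine. In compiled languages, the same i-k-j reordering also tends to improve cache locality, because the inner loop reads memory contiguously.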

Algorithms

Algorithms offer more efficient ways to solve problems. The researchers stated that the biggest benefits from algorithms are likely to come from new problem domains, such as machine learning, and from new theoretical machine models that better reflect emerging hardware.
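Algorithmic improvements can outweigh any amount of constant-factor tuning. The example below is a generic textbook illustration, not one of the researchers' examples. It solves the maximum-subarray problem in two ways: with an O(n²) scan over every start index, and with Kadane's O(n) algorithm. Both return the same answer. The function names are hypothetical.

```python
def max_subarray_quadratic(xs):
    """O(n^2): try every start index and extend the running sum rightwards."""
    if not xs:
        raise ValueError("need at least one element")
    best = xs[0]
    for i in range(len(xs)):
        total = 0
        for j in range(i, len(xs)):
            total += xs[j]
            best = max(best, total)
    return best


def max_subarray_kadane(xs):
    """O(n): Kadane's algorithm tracks the best sum of a subarray ending here."""
    if not xs:
        raise ValueError("need at least one element")
    best = current = xs[0]
    for x in xs[1:]:
        # Either extend the previous subarray or start a new one at x.
        current = max(x, current + x)
        best = max(best, current)
    return best


if __name__ == "__main__":
    data = [-2, 1, -3, 4, -1, 2, 1, -5, 4]
    print(max_subarray_quadratic(data), max_subarray_kadane(data))
```

Consider an input of 10,000 elements. The quadratic version performs about 50 million inner-loop steps (n(n+1)/2), while Kadane's algorithm performs fewer than 10,000.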

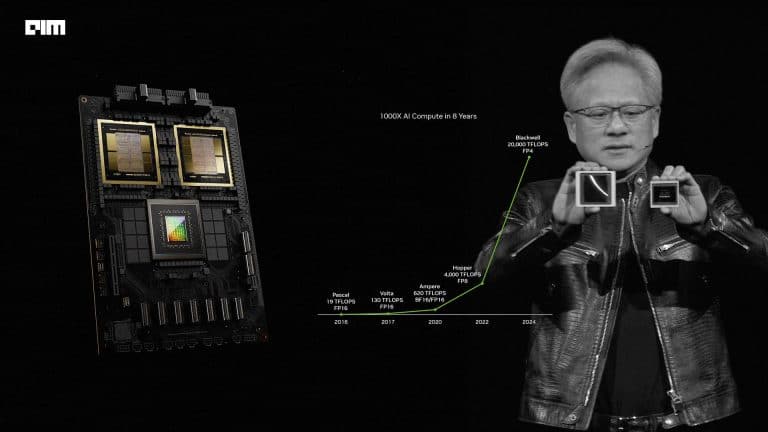

Specialised Hardware

According to the researchers, hardware architectures can be streamlined through processor simplification, in which a complex processing core is replaced with a simpler core that requires fewer transistors. The freed-up transistor budget can then be redeployed in other ways, for example by increasing the number of processor cores running in parallel. This can lead to large efficiency gains for problems that can exploit parallelism.
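The qualifier "problems that can exploit parallelism" matters because any serial portion of a program caps the gain from extra cores. Amdahl's law, a standard model that this summary of the paper does not cite, makes the point precise. If a fraction p of the run time parallelises perfectly across n cores, the speedup is 1 / ((1 - p) + p / n). The minimal Python sketch below assumes the parallel part scales perfectly with no coordination overhead. `amdahl_speedup` is a hypothetical helper.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup on `cores` processors when `parallel_fraction` of the
    original run time parallelises perfectly and the rest stays serial
    (Amdahl's law; ignores communication and synchronisation costs)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    for p in (0.5, 0.9, 0.99):
        speedups = ", ".join(
            f"{n} cores: {amdahl_speedup(p, n):.1f}x" for n in (4, 16, 64)
        )
        print(f"parallel fraction {p}: {speedups}")
```

When half the work is serial, even 64 cores yield less than a 2x speedup, because the limit is 1 / (1 - p) = 2. A workload that is 99% parallel, by contrast, keeps benefiting as cores are added.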

Another form of streamlining is domain specialisation, in which hardware is customised for a particular application domain. This type of specialisation discards processor functionality that the domain does not need. It also lets the hardware be tailored more closely to the domain’s specific characteristics, for example by using lower floating-point precision for artificial intelligence and machine-learning applications.
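Lower precision saves storage and memory bandwidth at the cost of rounding error. The minimal sketch below uses Python's standard `struct` module, whose `e` format code packs IEEE 754 half-precision (16-bit) floats. The weight and input values are made up for illustration. The arithmetic itself still runs in 64-bit floats, so the example shows only the effect of storing values at reduced precision, not how a real low-precision accelerator computes.

```python
import struct


def to_half(x: float) -> float:
    """Round a 64-bit Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def bytes_per_value(fmt: str) -> int:
    """Storage size of one value in a struct format code ('e', 'f' or 'd')."""
    return struct.calcsize("<" + fmt)


def dot(xs, ys):
    """Plain dot product, computed in 64-bit floats."""
    return sum(x * y for x, y in zip(xs, ys))


# Hypothetical model weights and inputs, for illustration only.
weights = [0.12, 0.53, 0.97, 0.31]
inputs = [1.5, 0.25, 0.75, 2.0]

exact = dot(weights, inputs)
# Quantise the stored values to 16 bits, then compute as before.
reduced = dot([to_half(w) for w in weights], [to_half(v) for v in inputs])

if __name__ == "__main__":
    print(f"bytes per value: half={bytes_per_value('e')}, "
          f"single={bytes_per_value('f')}, double={bytes_per_value('d')}")
    print(f"exact={exact:.6f} reduced={reduced:.6f} "
          f"relative error={abs(reduced - exact) / abs(exact):.2e}")
```

Half precision stores each value in 2 bytes instead of 8, a fourfold reduction, while the relative error of this small dot product stays well below 1%. Whether that trade-off is acceptable depends on the application. Many machine-learning workloads tolerate it, which is what makes this kind of specialisation worthwhile.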

Wrapping Up

For decades, the computing industry has relied on Moore’s law, the doubling of the number of transistors on a chip roughly every two years, to deliver steady gains in processing power. Software development in the Moore era has generally focused on minimising the time it takes to develop an application, rather than the time it takes to run that application once it is deployed.

The researchers stated that as miniaturisation wanes, silicon-fabrication improvements at the Bottom of the computing stack will no longer provide the predictable, broad-based gains in computer performance that society has enjoyed for more than 50 years.

In the post-Moore era, the researchers argue, performance engineering, algorithm development and hardware streamlining will be most effective within big system components. Such components include reusable software with typically more than a million lines of code, hardware of comparable complexity, or a similarly large software-hardware hybrid. From engineering-management and economic points of view, changes made within such components are also easier to implement.