
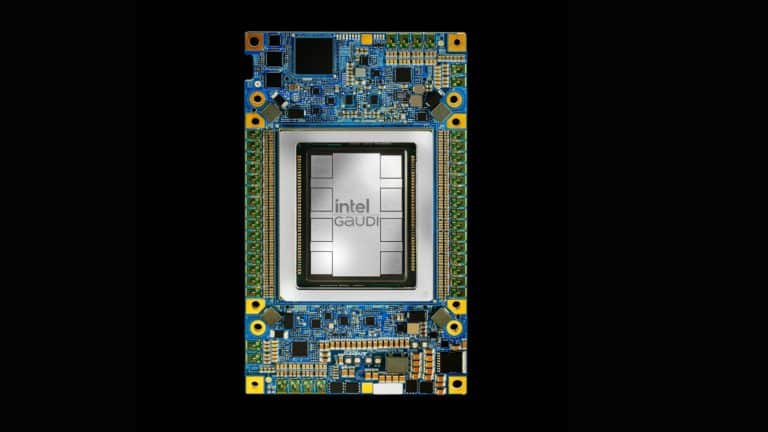

In a recent blog post titled “Behind the Compute,” Stability AI unveiled surprising findings on how Intel Gaudi 2 accelerators perform against NVIDIA’s H100 in training and inference for its upcoming image generation model, Stable Diffusion 3.

Stability AI’s text-to-image model demonstrated promising results in the performance analysis. Utilising the 2B parameter multimodal diffusion transformer (MMDiT) version of the model, Stability AI compared the training speed of Intel Gaudi 2 accelerators with NVIDIA’s A100 and H100.

In a 2-node configuration, the Intel Gaudi 2 system processed 927 training images per second, 1.5 times faster than NVIDIA H100-80GB. Increasing the batch size to 32 per accelerator on Gaudi 2 raised the training rate to 1,254 images/sec.
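The figures above can be cross-checked with a little arithmetic. The sketch below is only a rough illustration: it assumes "1.5 times faster" means a plain ratio of throughputs, and because that ratio is rounded, the H100 number it produces is an estimate rather than a figure reported by Stability AI. The function and variable names are hypothetical.

```python
# Figures quoted in the article (training images per second, 2-node setup).
GAUDI2_IMAGES_PER_SEC = 927
GAUDI2_IMAGES_PER_SEC_BATCH32 = 1254
# Assumption: "1.5 times faster" is read as a plain throughput ratio.
REPORTED_SPEEDUP_OVER_H100 = 1.5


def implied_baseline(throughput: float, speedup: float) -> float:
    """Return the baseline throughput implied by a reported speedup ratio."""
    return throughput / speedup


def relative_gain(new: float, old: float) -> float:
    """Return the fractional gain of `new` over `old` (0.25 means 25% more)."""
    return new / old - 1.0


h100_images_per_sec = implied_baseline(GAUDI2_IMAGES_PER_SEC, REPORTED_SPEEDUP_OVER_H100)
batch_gain = relative_gain(GAUDI2_IMAGES_PER_SEC_BATCH32, GAUDI2_IMAGES_PER_SEC)

print(f"Implied H100 throughput: {h100_images_per_sec:.0f} images/sec")
print(f"Gain from batch size 32: {batch_gain:.1%}")
```

Under these assumptions, the implied H100 rate is roughly 618 images/sec, and the larger batch size lifts Gaudi 2 throughput by about 35%.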

In a 32-node configuration, the Gaudi 2 cluster processed over 3x more images per second than NVIDIA A100-80GB GPUs, despite the A100s having a highly optimised software stack.

In inference tests with the 8B parameter Stable Diffusion 3 model, Gaudi 2 chips offered inference speed similar to that of NVIDIA A100 chips using base PyTorch.

However, Stability AI admitted that with TensorRT optimisation, A100 chips produced images 40% faster than Gaudi 2, though the company anticipates that Gaudi 2 will outperform A100s with further optimisation. Any such lead could be contested further by the upcoming GH200 processors that might be announced at GTC 2024 this month.

A few months ago, AMD also claimed that it had surpassed NVIDIA H100 on various performance metrics, but NVIDIA later rebutted the claim, saying that AMD had not included TensorRT optimisation in its test.

Intel has also launched its Gaudi 3 AI accelerator, which should make this competition even more interesting in the future.

Moreover, Stable Beluga 2.5 70B, Stability AI’s fine-tuned version of LLaMA 2 70B, showcased impressive performance on Intel Gaudi 2 accelerators. Running the PyTorch code out of the box on 256 Gaudi 2 accelerators, Stability AI measured an average throughput of 116,777 tokens/second.

In inference tests with the 70B language model, Gaudi 2 was 28% faster than NVIDIA A100, generating 673 tokens/second per accelerator.
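For readers who want to put the language model numbers on a per-accelerator footing, here is a minimal sketch. It assumes the 116,777 tokens/second figure is an aggregate across all 256 accelerators and that "28% faster" means 1.28 times the A100 rate; the derived A100 value is an estimate, not a number reported by Stability AI, and the variable names are hypothetical.

```python
# Figures quoted in the article for Stable Beluga 2.5 70B on Gaudi 2.
TOTAL_TOKENS_PER_SEC = 116_777           # aggregate throughput on the cluster
NUM_ACCELERATORS = 256
GAUDI2_INFERENCE_TOKENS_PER_SEC = 673    # per accelerator, inference
# Assumption: "28% faster" means Gaudi 2 rate = 1.28 x A100 rate.
REPORTED_INFERENCE_ADVANTAGE = 0.28

# Average share of the aggregate throughput handled by each accelerator.
per_accelerator_tokens_per_sec = TOTAL_TOKENS_PER_SEC / NUM_ACCELERATORS

# A100 inference rate implied by the reported advantage.
implied_a100_tokens_per_sec = GAUDI2_INFERENCE_TOKENS_PER_SEC / (
    1.0 + REPORTED_INFERENCE_ADVANTAGE
)

print(f"Per-accelerator share of aggregate: {per_accelerator_tokens_per_sec:.1f} tokens/sec")
print(f"Implied A100 inference rate: {implied_a100_tokens_per_sec:.1f} tokens/sec")
```

Under these assumptions, each accelerator handles roughly 456 tokens/second of the aggregate figure, and the implied A100 inference rate is roughly 526 tokens/second per accelerator.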