Depth estimation has several use cases in the real world, whether it be creating virtual avatars for your social media profiles or using the new portrait mode. However, it requires massive computational resources and sophisticated hardware which can accurately track the movement of the subject’s iris to estimate the metric distance from the camera. Such extensive computational resources make it a challenging task for deploying the technology for mobile devices, limiting its implementation. Also, with other obstacles like no fixed lighting source, locks of hair on the subject’s face or having their eyes squinched can make it even more difficult.

Thus, to enhance the depth estimation process, a team of researchers from Google AI, has announced a new machine learning model for accurate depth estimation by iris tracking — MediaPipe Iris. Led by Andrey Vakunov and Dmitry Lagun, research engineers at Google Research, this ML model has been designed to accurately track the iris using a single RGB camera, in real-time without any advanced hardware.

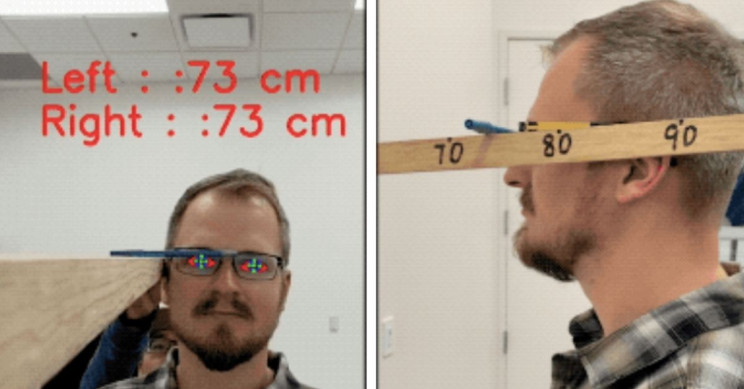

(A prototype) Use Case for far-sighted individuals. The font size that is seen remains independent of the distance of the device from the user. PC: Google AI Blog

According to Google’s recent blog, this ML model — MediaPipe Iris claims to evaluate the distance between the subject and the camera with 10% relatively less error without the use of a depth sensor. This means, now the current tracking method of iris will not rely on the point of location the subject is looking, neither will it provide any form of identity recognition.

Also Read: Google Open-Sources New Real-Time Hand Gesture-Tracking ML Pipeline

Behind MediaPipe Iris — ML Pipeline For Depth Estimation

To build this ML model, Google researchers first utilised their previous MediaPipe Face Mesh framework, which is a face geometry solution using AR to identify 3D face landmarks on mobile devices. From this mesh framework, the researchers pick up the eye region, which is then later used in the iris tracking machine learning procedure.

Iris is marked in blue, and the eyelid has been marked in red.

With that information in hand, the researchers divided the pipeline into three different models, which will first detect the face, then its landmarks and finally the iris. And therefore, the researchers designed a multitask model which was equipped with a unified encoder for each task, helping in using task-specific training data.

The ML Pipeline has been implemented as a MediaPipe graph, which leverages facial landmark subgraph, iris landmark subgraph, and an iris and depth, to detect the points on the subjects.

Eye landmarks are marked in red, and iris landmarks are marked in green.

To train the iris landmark ML Model, the researchers take a patch of the image where the eye region is prominent and evaluate the eyelid and iris of the subject. The researchers manually evaluated approximately 50,000 images with various obstacles and head poses. With the solution, the researchers were able to track the iris and estimate the metric distance accurately.

Cropped eye regions from the input to the model, which predicts landmarks via separate components.

Explaining further, researchers stated in their blog — a lot of this could be attributed to the fact that the diameter of an iris remains roughly constant at 11.7±0.5 mm across the majority of humans. Case in point — a pinhole camera model (refer the image below) used for projecting onto a square pixel sensor, where it can figure out the distance of the subject by using the focal length of the camera. With that information, it can easily be established that the distance between the two is directly proportional to the physical size of the eye.

Distance ‘d’ computed from focal length ‘f’ and the size of the iris.

Also Read: How Data Scientists Create High-Quality Training DataSets For Computer Vision

Evaluation & Release

To measure the accuracy of the ML model, the researchers compared it to the already established depth sensor on a smartphone — iPhone 11, which utilised front-facing synchronised images of over 200 respondents. To which, the researchers highlighted that the depth sensor of iPhone 11 shows an error of < 2% for distances up to two meters.

On the other hand, the proposed model for depth estimation using iris size has a mean relative error of 4.3% and a standard deviation of 2.4%. These results were tested on the respondents with and without eyeglasses and noted that glasses on subjects could increase the mean relative error slightly to 4.8%.

Left: histogram of estimation errors; Right: Comparison between the actual and estimated distance by MediaPipe Iris.

According to the researchers, as MediaPipe Iris requires no advanced hardware, the results highlight that it is a convenient method to estimate the metric distance with a wide range of cost-points. With the help of open-source, cross-platform framework for developers — MediaPipe — the solution can now be deployed on smartphones, desktops as well as on the websites.

Furthermore, the researchers are continuously working on not making the ML model as a piece of surveillance equipment and pushing it for the broader research and development community.

The MediaPipe Iris project page is on GitHub.