Tutel is an open-source library from Microsoft for building mixture of experts (MoE) models, a class of large-scale AI models. It has been integrated into fairseq, one of Facebook’s PyTorch toolkits, to make it available to developers across AI disciplines.

Microsoft’s Ownership of MoE

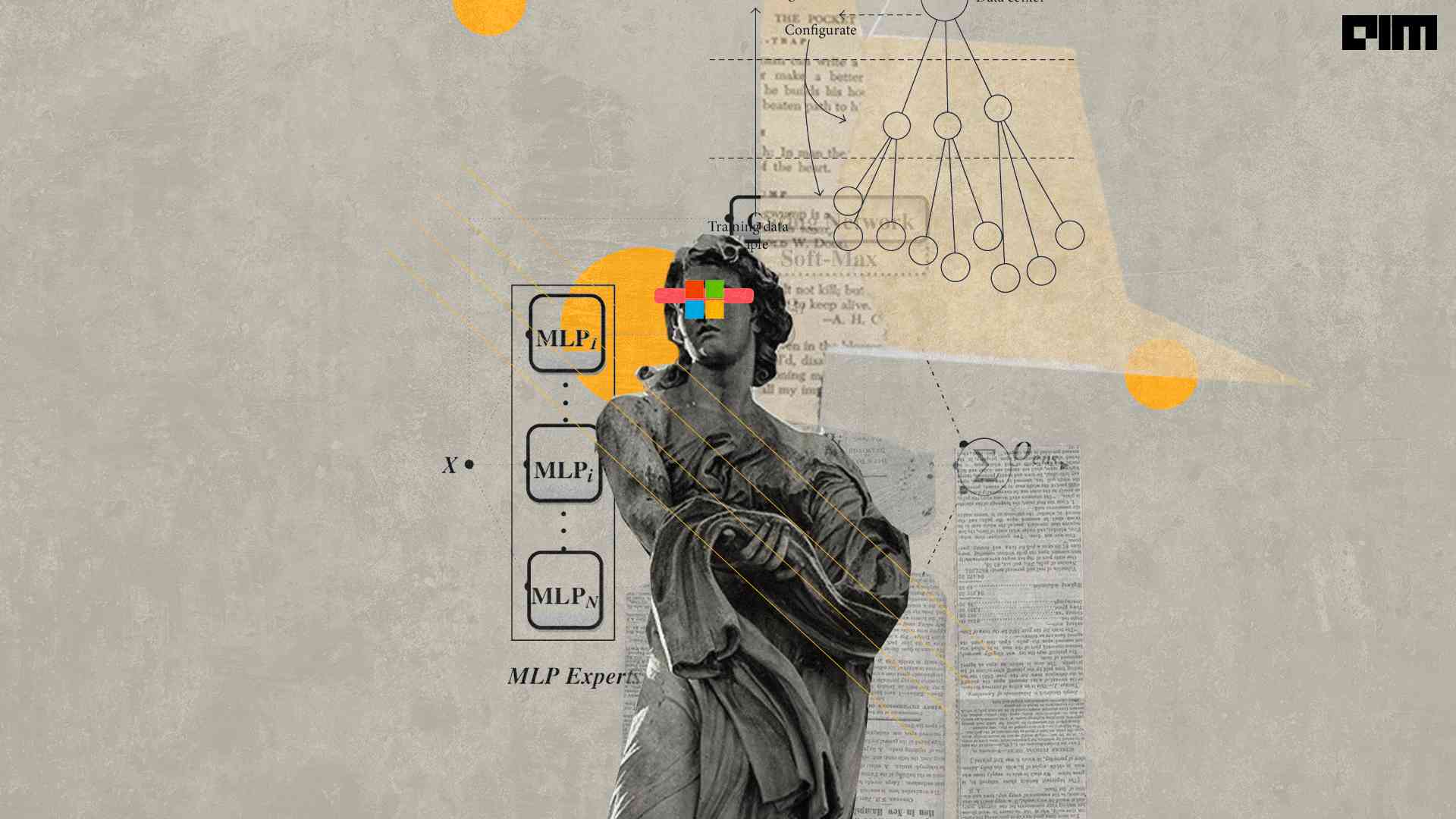

An MoE model is composed of small clusters of “neurons”, called experts, that are activated only under specific conditions. Lower “layers” of the MoE model extract features, which the experts then evaluate. For instance, MoEs can be used to build a translation system in which each expert cluster learns to handle a distinct part of speech or grammatical rule. MoE is a deep learning architecture whose computational cost grows sublinearly with the number of parameters, which makes it easier to scale.
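The conditional activation described above can be sketched in a few lines of plain Python. This is a minimal illustration of top-k gating, not Tutel's implementation: the helper names (`top_k_gate`, `make_expert`, `moe_forward`), the toy experts that merely scale their input, and the hand-picked gate weights are all assumptions made for the example.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_gate(scores, k):
    """Pick the k highest-scoring experts and renormalise their weights."""
    probs = softmax(scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]

def make_expert(scale, calls, index):
    """Hypothetical toy expert: scales its input and records that it ran."""
    def expert(x):
        calls[index] += 1
        return [scale * v for v in x]
    return expert

def moe_forward(x, gate_weights, experts, k=2):
    """Route one token through only its top-k experts."""
    # One gate score per expert: dot product of the token with that expert's gate vector.
    scores = [sum(a * b for a, b in zip(x, w)) for w in gate_weights]
    out = [0.0] * len(x)
    for index, weight in top_k_gate(scores, k):
        y = experts[index](x)  # only the selected experts are evaluated
        out = [o + weight * v for o, v in zip(out, y)]
    return out

calls = [0, 0, 0, 0]
experts = [make_expert(i + 1, calls, i) for i in range(4)]
gate_weights = [[0.0, 0.0], [2.0, 0.0], [1.0, 0.0], [-1.0, 0.0]]
output = moe_forward([1.0, 0.0], gate_weights, experts, k=2)
print(output, calls)
```

With four experts and `k=2`, only two experts run for the token, so adding more experts increases the parameter count without increasing the per-token compute in this sketch.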

MoEs have distinct advantages over other model architectures. They can specialise in response to different inputs, allowing the model to exhibit a broader range of behaviours. Indeed, MoE is one of the few approaches shown to scale to over a trillion parameters, paving the way for models that power computer vision, speech recognition, natural language processing, and machine translation systems. Parameters are the parts of a machine learning model that are learned from historical training data. The correlation between the number of parameters and sophistication has generally held up well, particularly in the language domain.

Tutel Features

Tutel is primarily concerned with optimising MoE-specific computation. The library is optimised, in particular, for Microsoft’s new Azure NDm A100 v4 series instances, which offer a sliding scale of NVIDIA A100 GPUs. In addition, Tutel features a “simple” interface designed to facilitate integration with other MoE systems, according to Microsoft. Alternatively, developers can use the Tutel interface to incorporate standalone MoE layers directly into their DNN models.

Tutel’s comprehensive and flexible MoE algorithmic support enables developers working in various AI disciplines to run MoE models more quickly and efficiently. Its high compatibility and extensive feature set are intended to deliver strong performance on Azure NDm A100 v4 clusters. Tutel is a free and open-source project that has been integrated into fairseq.

Tutel’s MoE Optimisations

Tutel complements existing high-level MoE solutions such as fairseq and FastMoE. It focuses on optimising MoE-specific computation and all-to-all communication, and on providing diverse and flexible algorithmic support for MoE. Tutel’s interface is straightforward, making it simple to integrate with other MoE systems. Alternatively, developers can use the Tutel interface to embed standalone MoE layers directly into their own DNN models, gaining immediate access to highly optimised, state-of-the-art MoE capabilities.
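To make the all-to-all communication mentioned above concrete, here is a small single-process simulation. It is only a sketch under stated assumptions: there is one expert per rank, tokens are plain strings, and the helpers `group_by_expert` and `all_to_all` are hypothetical names for this example rather than Tutel or PyTorch functions. In a real system the exchange runs across GPUs over the network.

```python
def all_to_all(send_buffers):
    """Simulate all-to-all: rank `dst` receives chunk `dst` from every rank."""
    world_size = len(send_buffers)
    return [[send_buffers[src][dst] for src in range(world_size)]
            for dst in range(world_size)]

def group_by_expert(tokens, assignment, world_size):
    """Build one rank's send buffer: chunk d holds the tokens routed to expert d."""
    chunks = [[] for _ in range(world_size)]
    for token, expert in zip(tokens, assignment):
        chunks[expert].append(token)
    return chunks

# Two ranks, one expert per rank (an assumption made for brevity).
rank0 = group_by_expert(["a0", "a1", "a2"], [0, 1, 1], world_size=2)
rank1 = group_by_expert(["b0", "b1"], [0, 0], world_size=2)

# Dispatch: every rank sends each expert's tokens to the rank hosting that expert.
received = all_to_all([rank0, rank1])

# Combine: a second all-to-all returns the chunks to the ranks they came from.
returned = all_to_all(received)
print(received)
print(returned == [rank0, rank1])
```

Because every MoE layer needs one exchange to dispatch tokens and another to bring the results back, the cost of this step grows with the number of participating devices, which is why it is a natural target for optimisation.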

Computations for the MoE

Because efficient implementations have been lacking, MoE-based DNN models typically compose the MoE computation from a naive combination of many off-the-shelf DNN operators provided by deep learning frameworks such as PyTorch and TensorFlow. This approach incurs significant performance overhead because of redundant computation. Tutel designs and implements several highly optimised GPU kernels that provide operators for MoE-specific computation. In addition, Tutel will actively integrate emerging machine learning algorithms from the open-source community.
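One way such redundant computation can arise is when token dispatch is expressed as a dense multiplication by a mostly-zero mask instead of a direct copy. The sketch below contrasts the two formulations in plain Python. It is an illustration only and does not reproduce Tutel's GPU kernels or any framework's operators; the function names and the operation counters are assumptions made for the example.

```python
def dense_dispatch(tokens, assignment, num_experts):
    """Naive formulation: scale every token by a 0/1 mask for every expert."""
    multiplies = 0
    buckets = []
    for expert in range(num_experts):
        rows = []
        for token, target in zip(tokens, assignment):
            mask = 1.0 if target == expert else 0.0
            rows.append([mask * v for v in token])  # mostly multiplications by zero
            multiplies += len(token)
        buckets.append(rows)
    return buckets, multiplies

def sparse_dispatch(tokens, assignment, num_experts):
    """Index-based formulation: copy each token once, to its own expert only."""
    buckets = [[] for _ in range(num_experts)]
    copies = 0
    for position, (token, target) in enumerate(zip(tokens, assignment)):
        buckets[target].append((position, list(token)))
        copies += len(token)
    return buckets, copies

tokens = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
assignment = [2, 0, 2, 1]  # hypothetical routing decisions, one expert per token
dense, dense_work = dense_dispatch(tokens, assignment, num_experts=4)
sparse, sparse_work = sparse_dispatch(tokens, assignment, num_experts=4)
print(dense_work, sparse_work)
```

Both versions deliver the same tokens to the same experts, but the dense one performs `num_experts` times as many element operations, most of them on zeros. The sparse version keeps each token's original position so the expert outputs can be put back in order afterwards.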

Conclusion

Microsoft is particularly interested in MoE because it makes efficient use of hardware. Computing power is spent only on the experts with the specialised knowledge required to handle a given input. The rest of the model stays idle until it is needed, which increases efficiency. Microsoft demonstrates its commitment by launching Tutel, an open-source library for building mixture of experts models. According to Microsoft, Tutel helps developers speed up MoE models and maximise hardware utilisation.

MoE enables holistic training that draws on techniques from various disciplines. Researchers have shown that Tutel offers a considerable performance advantage over the fairseq implementation. Tutel has also been integrated into the DeepSpeed framework, which benefits Azure services.
