Over the last seven days, online media giants Facebook, YouTube and Twitter have been in the news for clamping down on content on their platforms.

Facebook is removing campaign ads of Donald Trump, and YouTube has reportedly halved the number of conspiracy theory videos it recommends. Twitter, meanwhile, has resolved to tighten the screws on hate speech, or dehumanising speech, as it calls it.

YouTube Says Enough Conspiracy Theories

In January 2019, YouTube said it would limit the spread of videos “that could misinform users in harmful ways.”

YouTube’s recommendation algorithm uses a technique called Multi-gate Mixture-of-Experts (MMoE). Ranking videos against multiple objectives is hard because the objectives can conflict, so the team at YouTube used MMoE to mitigate that conflict.

This technique enables YouTube to improve the experience for billions of its users by recommending the most relevant video. Since the algorithm takes into account the type of content as an important factor, classification of a video based on its title and the context of the video for its conspiratorial nature becomes easier.
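To make the idea concrete, here is a minimal sketch of a multi-gate mixture-of-experts forward pass. It is not YouTube's implementation: the weights are random and untrained, the two objectives ("engagement" and "satisfaction") and the four input features are assumptions chosen for illustration, and the class name `ToyMMoE` is hypothetical. What it shows is the structure: a set of shared experts, plus one softmax gate per objective that decides how much each expert contributes to that objective's score.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def linear(weights, x):
    """Multiply a weight matrix (a list of rows) by the vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def random_matrix(rows, cols, rng):
    """Random, untrained weights; a real system would learn these."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


class ToyMMoE:
    """Shared experts plus one softmax gate and one output tower per task."""

    def __init__(self, n_features, n_experts, expert_dim, n_tasks, seed=0):
        rng = random.Random(seed)
        self.experts = [random_matrix(expert_dim, n_features, rng)
                        for _ in range(n_experts)]
        self.gates = [random_matrix(n_experts, n_features, rng)
                      for _ in range(n_tasks)]
        self.towers = [random_matrix(1, expert_dim, rng)[0]
                       for _ in range(n_tasks)]

    def gate_weights(self, x):
        """For each task, how much weight it places on each expert."""
        return [softmax(linear(gate, x)) for gate in self.gates]

    def forward(self, x):
        # Every expert sees the same input (linear layer followed by ReLU).
        expert_out = [[max(0.0, v) for v in linear(expert, x)]
                      for expert in self.experts]
        scores = []
        for weights, tower in zip(self.gate_weights(x), self.towers):
            # Each task blends the expert outputs with its own gate weights.
            mixed = [sum(w * out[d] for w, out in zip(weights, expert_out))
                     for d in range(len(tower))]
            logit = sum(t * m for t, m in zip(tower, mixed))
            scores.append(1.0 / (1.0 + math.exp(-logit)))  # sigmoid
        return scores


# Hypothetical video features and two assumed objectives.
model = ToyMMoE(n_features=4, n_experts=3, expert_dim=2, n_tasks=2)
video_features = [0.2, -0.5, 1.0, 0.3]
engagement_score, satisfaction_score = model.forward(video_features)
print(engagement_score, satisfaction_score)
```

Because each objective has its own gate, two objectives that pull in different directions can lean on different experts instead of fighting over a single shared representation.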

After YouTube announced that it would recommend less conspiracy content, the number of such recommendations dropped by about 70% at its lowest point in May 2019. The decline has since eased: these recommendations are now only 40% less common than they were at the time of the announcement.

Twitter Has A Speech Cleansing Policy

If your tweet dehumanises people on the basis of any category covered by Twitter's Hateful Conduct policy, you might risk losing your account forever. Last week, Twitter officially announced that it had updated its 2019 policies.

We continuously examine our rules to help make Twitter safer. Last year we updated our Hateful Conduct policy to address dehumanizing speech, starting with one protected category: religious groups. Now, we’re expanding to three more: age, disease and disability.

— Twitter Safety (@TwitterSafety) March 5, 2020


The year 2019 was turbulent for Twitter. The firm’s management faced a lot of flak for banning a few celebrities, such as Alex Jones, for their tweets. Many complained that Twitter had shown double standards by banning individuals based on reports from a rival faction.

Vegans reported meat lovers. The left reported the right and so on and so forth. No matter what the reason was, at the end of the day, the argument boils down to the state of free speech in the digital era.

Twitter, however, has been vocal about its initiatives in a blog post that it published last year and updated yesterday. Here is how the company claims its review system is promoting healthy conversations:

- 16% fewer abuse reports after an interaction from an account the reporter doesn’t follow.

- 100,000 accounts suspended for creating new accounts after a suspension during January-March 2019, a 45% increase from the same time last year.

- 60% faster response to appeals requests with their new in-app appeal process.

- 3 times more abusive accounts suspended within 24 hours after a report compared to the same time last year.

- 2.5 times more private information removed with a new, easier reporting process.

Skimming over a million tweets in a second can be exhausting, so Twitter flags abusive tweets with the same algorithms that detect spam.

“The same technology we use to track spam, platform manipulation and other rule violations is helping us flag abusive tweets to our team for review.”

With a focus on reviewing this type of content, Twitter has expanded teams in key areas and geographies for staying ahead and working quickly to keep people safe.

Twitter now offers an option to mute words of your choice, which hides tweets containing those words from your feed.

uh, here’s a tutorial 😂 pic.twitter.com/wBsg9X4T1p

— whitney 👩🏾💻🌲 (@nonprofWHIT) February 19, 2020
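As a rough illustration of what a mute-words feature does, the sketch below drops any tweet that contains a muted word or phrase. This is not Twitter's code: the function names `build_mute_pattern` and `filter_feed` are hypothetical, and matching whole words case-insensitively is an assumption made for the example.

```python
import re


def build_mute_pattern(muted_words):
    """Compile one case-insensitive pattern matching any muted word or phrase."""
    escaped = [re.escape(word.strip()) for word in muted_words if word.strip()]
    if not escaped:
        return None
    # The lookarounds keep "election" from matching inside "Selection".
    return re.compile(r"(?<!\w)(?:" + "|".join(escaped) + r")(?!\w)",
                      re.IGNORECASE)


def filter_feed(tweets, muted_words):
    """Return only the tweets that contain none of the muted words."""
    pattern = build_mute_pattern(muted_words)
    if pattern is None:
        return list(tweets)
    return [tweet for tweet in tweets if not pattern.search(tweet)]


feed = [
    "Election results are coming in tonight",
    "My cat learned a new trick",
    "Spoilers: the finale was wild",
    "Selection of new recipes for the weekend",
]
visible = filter_feed(feed, ["election", "spoilers"])
print(visible)
```

With "election" and "spoilers" muted, only the cat and recipe tweets remain in the feed.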

Twitter has a gigantic task ahead as it has to find a way between relentless reporting of the easily offended and the inexplicable angst of the radicals.

Facebook Polices The President

From Cambridge Analytica to its involvement in the Myanmar genocide to Zuckerberg’s awkward Senate hearings, Facebook has been the most scandal-ridden of all social media platforms in the past couple of years.

However, amid all this turbulence, Facebook’s AI team kept delivering innovations. The company has employed plenty of machine learning models to detect deepfakes, fake news, and fake profiles. Classifying at scale brings its own problems: adversaries can reverse engineer features, and the amount of ground truth data that can be obtained is limited. So, Facebook uses deep entity classification (DEC), a machine learning framework designed to detect abusive accounts.

The DEC system is responsible for the removal of hundreds of millions of fake accounts.

Instead of relying on content alone or handcrafting features for abuse detection in posts, Facebook uses an algorithm called temporal interaction embeddings (TIEs), a supervised deep learning model that captures static features around each interaction source and target, as well as temporal features of the interaction sequence.

Handcrafted features, by contrast, are labour-intensive to produce, require deep domain expertise, and may not capture all the important information about the entity being classified.

Last week, Facebook was accused of displaying inaccurate campaign ads from US President Donald Trump’s team. Facebook then started taking down the ads, which were categorised as spreading misinformation.

When it comes to the digital space, championing free speech is easier said than done. An allegation or a report is not always credible, and making sure that an algorithm doesn’t take down a harmless post is tricky.

Free Speech In The Age Of Algorithms

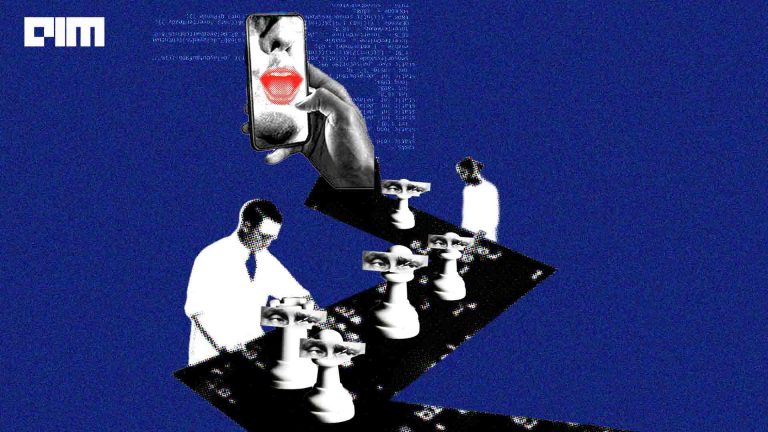

Curbing free speech is curbing the freedom to think. Thought policing has been practised for ages through different means. Kings and dictators detained those they accused of spreading misinformation, regardless of its veracity. However, spotting the perpetrator was not an easy task in the pre-Internet era. Things took a weird turn when the Internet became a household name. People now carry this great invention, packed meticulously into a slim, palm-sized metal gadget.

Information now flows at lightning speed. GPS coordinates, likes, dislikes and various other signals are continuously gathered and fed into massive machine learning engines that work tirelessly to churn out profits through customer satisfaction. The flip side is that these platforms have now become the megaphone of the common man.

Anyone can talk to anyone about anything. These online platforms can be leveraged for unprecedented reach. People are no longer afraid of being banned from public rallies or of other sanctions, as they can fire up their smartphones and start a Periscope session. So, a suspension from these platforms can feel like being pushed into permanent obscurity. Opinions, activism, even fame: everything gets erased. This raises the age-old existential question of identity, only this time the crisis is brought on by an algorithm.

A non-human entity (an algorithm) classifying a human’s act as dehumanising

Does this make things worse or better? Or should we bask in the fact that we all would be served an equal, unbiased algorithmic judgement?

Machine learning models are not perfect. The results are only as good as the data, and the data can only be as truthful as the people who generate it. Monitoring billions of messages in the span of a few seconds is a great test of the social, ethical and, most importantly, computational abilities of these organisations. There is no doubt that companies like Google, Facebook and Twitter have a responsibility that has never been bestowed upon any other company in the past.

“We also realise we don’t have all the answers, which is why we have developed a global working group of outside experts to help us think.”

Twitter’s statement

The responsibilities are critical, the problems are ambiguous, and the solutions walk a delicate tightrope. The explosion of innovation and the policies that govern it will have to converge at some point in the future. This will need a combined effort of man and machine as the future stares at us with melancholic indifference.