Suppose there is a set of assets whose value needs to be assessed against a number of risk factors. These risk factors are usually correlated. The primary goal of a risk analyst is to ensure profitability at a tolerable level of risk. To quantify how the risk factors move together, the analyst might use a covariance matrix. Switching to maths might sound abrupt, but that is how things are done in the real world: a college-level maths concept is used to tackle a million-dollar problem.

Assuming a basic knowledge of linear algebra and matrices, this article introduces the reader to the significance of eigendecomposition in the field of machine learning.

Eigen (pronounced eye-gan) is a German word that means “own” or “innate”, as in belonging to the parent matrix.

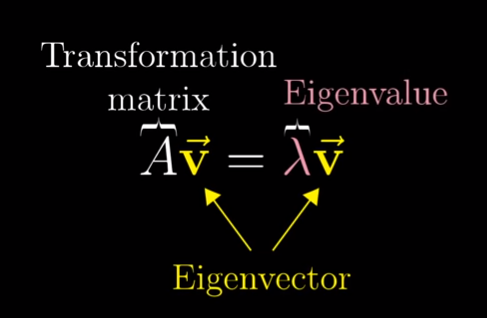

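Eigenvectors are usually reported as unit vectors, which means that their length or magnitude is equal to 1. This is a normalisation convention, since any non-zero multiple of an eigenvector is also an eigenvector. They are often referred to as right eigenvectors, which simply means they are column vectors that multiply the matrix from the right. Eigenvalues, in turn, are the coefficients by which the matrix scales its eigenvectors, so they determine the length or magnitude of the resulting vectors.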
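In symbols, the relationship between a matrix, an eigenvector and its eigenvalue can be written as below. The names A (a square matrix), v (a non-zero column vector), λ (a scalar) and c (any non-zero scalar) are notation chosen here for illustration.

```latex
A\,\mathbf{v} = \lambda\,\mathbf{v}, \qquad \mathbf{v} \neq \mathbf{0}

A\,(c\,\mathbf{v}) = c\,(A\,\mathbf{v}) = \lambda\,(c\,\mathbf{v}), \qquad c \neq 0
```

The second line shows why the length of an eigenvector is a matter of choice: rescaling v gives another eigenvector with the same eigenvalue, so a version of length 1 can always be picked.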

Eigendecomposition breaks a square matrix down into its eigenvectors and eigenvalues, which are then used in machine learning methods such as Principal Component Analysis (PCA). Decomposing a matrix in terms of its eigenvalues and eigenvectors gives valuable insight into the properties of the matrix.
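As a rough illustration of what "decomposing" means, the sketch below splits a small matrix into eigenvalues and eigenvectors and then multiplies the pieces back together. The 2x2 matrix and the variable names are made up for this example. It assumes the matrix has a full set of independent eigenvectors, so that the eigenvector matrix can be inverted.

```python
import numpy as np

# Illustrative symmetric matrix (an assumption for this sketch)
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# values holds the eigenvalues; each column of Q is the matching eigenvector
values, Q = np.linalg.eig(A)

# Rebuild A from its parts: A = Q @ diag(values) @ inverse(Q)
reconstructed = Q @ np.diag(values) @ np.linalg.inv(Q)

# True when the rebuilt matrix matches the original up to rounding
print(np.allclose(A, reconstructed))
```

If the final check prints `True`, the eigenvalues and eigenvectors together carry all the information in the original matrix.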

Along an eigenvector, the effect of the whole matrix reduces to multiplying the vector by a scalar. In fact, PCA uses this idea to represent many columns with a smaller number of vectors: it finds linear combinations of the columns by computing the eigenvalues and eigenvectors of their covariance matrix.

To check whether a vector is an eigenvector of a matrix, the matrix is multiplied by the candidate eigenvector and the result is compared with the candidate eigenvector multiplied by the eigenvalue.

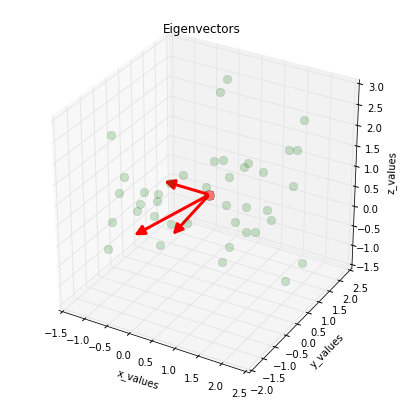

First, a matrix is defined, then the eigenvalues and eigenvectors are calculated. We will then test whether the first vector and value are in fact an eigenvector and eigenvalue of the matrix. The eigenvectors are returned as a matrix with the same dimensions as the parent matrix, where each column is an eigenvector.
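The check described above might be sketched as follows. It reuses the 3x3 matrix from the sample code later in this article. The names `first_value`, `first_vector`, `left`, `right` and `is_eigenpair` are chosen here for illustration.

```python
import numpy as np

# Same 3x3 matrix as in the sample code at the end of the article
A = np.array([[1, 2, 3],
              [4, 5, 6],
              [7, 8, 9]])

values, vectors = np.linalg.eig(A)

# First eigenpair: the first eigenvalue and the first COLUMN of vectors
first_value = values[0]
first_vector = vectors[:, 0]

left = A @ first_vector             # matrix times eigenvector
right = first_value * first_vector  # eigenvalue times eigenvector

# The two sides should agree up to floating-point rounding
is_eigenpair = np.allclose(left, right)
print(is_eigenpair)

# NumPy returns eigenvectors normalised to length 1
print(np.linalg.norm(first_vector))
```

Note that the eigenvector is taken as a column (`vectors[:, 0]`), not a row. Indexing with `vectors[0]` would pick the first component of every eigenvector instead.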

Eigenvalues In Principal Component Analysis

Machine learning involves lots of data. If we can reduce the size of the data without losing what those values actually represent, then it's a piece of cake for programmers.

Principal Component Analysis or PCA is performed for dimensionality reduction. With larger datasets, finding significant features gets difficult. So we check the correlation between variables to see whether some of them can be dropped to make the machine learning model more robust.

Speaking of PCA, let's have a brief look at how it is performed:

- Firstly, the data needs to be centred, meaning the average or mean of each attribute is subtracted from every value of that attribute.

- Now computing the covariance matrix gets easier.

- The covariance between two variables measures how strongly they vary together.

- Two variables, say X and Y, are said to be positively correlated if Y increases with an increase in X and decreases with a decrease in X.
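The steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not a full PCA implementation. The toy data is made up, and `np.linalg.eigh` is used in place of `eig` because a covariance matrix is symmetric, which is a choice made for this sketch.

```python
import numpy as np

# Toy data (made up for illustration): 6 samples, 2 correlated features
X = np.array([[2.5, 2.4],
              [0.5, 0.7],
              [2.2, 2.9],
              [1.9, 2.2],
              [3.1, 3.0],
              [2.3, 2.7]])

# Step 1: centre each feature (column) by subtracting its mean
X_centred = X - X.mean(axis=0)

# Step 2: covariance matrix of the features (columns are the variables)
cov = np.cov(X_centred, rowvar=False)

# Step 3: eigendecomposition; eigh returns eigenvalues in ascending order
values, vectors = np.linalg.eigh(cov)

# Sort descending so the first component carries the most variance
order = np.argsort(values)[::-1]
values, vectors = values[order], vectors[:, order]

# Keep only the first principal component: 2 features become 1
X_reduced = X_centred @ vectors[:, :1]

# Share of the total variance captured by each component
explained = values / values.sum()
print(explained)
print(X_reduced.shape)
```

The off-diagonal entry of `cov` is positive here because the two features rise and fall together, which is the positive correlation described in the last bullet.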

If this covariance matrix is multiplied with almost any vector in the feature space, the result is a new vector. On repeated multiplication, the direction of that vector gradually converges towards the direction of maximum variance. So repeatedly multiplying a random vector by the covariance matrix points it towards the dimension of greatest variance in the data.

It is therefore crucial to identify these directions of convergence in the data. One way to do this is to observe which vectors do not turn when they are multiplied by the covariance matrix.

Such a vector is stretched or shrunk without rotating. The factor by which it is scaled is a scalar, and the vector keeps its direction under the transformation. This is exactly the idea behind eigenvectors and eigenvalues.
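This convergence can be tried out numerically. The sketch below repeatedly multiplies a random vector by a small covariance matrix, a procedure commonly called power iteration, and compares the result with the eigenvector of the largest eigenvalue. The matrix, the random seed and the iteration count are arbitrary choices for illustration.

```python
import numpy as np

# Illustrative covariance matrix (made up for this sketch)
cov = np.array([[2.0, 1.2],
                [1.2, 1.0]])

rng = np.random.default_rng(0)
v = rng.normal(size=2)  # random starting vector

for _ in range(50):
    v = cov @ v                # multiply by the covariance matrix
    v = v / np.linalg.norm(v)  # rescale to length 1 so values stay manageable

# Eigenvector of the largest eigenvalue, for comparison
values, vectors = np.linalg.eigh(cov)
dominant = vectors[:, -1]  # eigh sorts eigenvalues in ascending order

# Directions can differ by sign, so compare the absolute dot product with 1
alignment = abs(v @ dominant)
print(np.isclose(alignment, 1.0))
```

The rescaling inside the loop only changes the length of the vector, not its direction, so it does not affect where the iteration ends up pointing.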

Sample Code to calculate eigenvalues in Python

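    # Compute the eigenvalues and eigenvectors of a 3x3 matrix
    from numpy import array
    from numpy.linalg import eig

    # Define the matrix
    A = array([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
    print(A)

    # eig returns the eigenvalues and a matrix whose columns are the eigenvectors
    values, vectors = eig(A)
    print(values)
    print(vectors)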

Conclusion

Knowing how to manipulate matrices, for example by transposing or rotating them, is essential. Though these operations can now be called with a single line of code in Python, an intuition for how a transformation changes a matrix in coordinate space comes in handy when interpreting the outcomes.