Generative models have gained much popularity in recent years. These models can handle missing data as well as variable-length sequences. Technically speaking, a generative model describes a probability distribution over data points in some potentially high-dimensional space, which also makes it possible to sample new data points from that distribution.

In this article, we discuss seven types of generative models, which are listed below:

Autoregressive Models

An autoregressive (AR) model regresses a value from a time series on previous values from that same time series. The order of an autoregression is the number of immediately preceding values in the series that are used to calculate the value at the present time. In simple words, autoregressive models predict future values based on past values. These models are flexible enough to handle a wide range of time-series patterns.
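To make the idea concrete, here is a minimal sketch in Python of an autoregression of order 1, AR(1), in which each value depends only on the value immediately before it: x_t = φ·x_{t-1} + ε_t. It assumes a zero-mean series with no intercept term and Gaussian noise. The functions `simulate_ar1`, `fit_ar1` and `forecast` are hypothetical helpers written for this illustration, not part of any library. The coefficient is estimated by ordinary least squares, regressing each value on its predecessor.

```python
import random

def simulate_ar1(phi, n, noise_std=1.0, seed=0):
    """Generate n values from x_t = phi * x_{t-1} + eps_t (zero mean, no intercept)."""
    rng = random.Random(seed)
    x = [0.0]
    for _ in range(n - 1):
        x.append(phi * x[-1] + rng.gauss(0.0, noise_std))
    return x

def fit_ar1(x):
    """Least-squares estimate of phi, regressing each x_t on x_{t-1}."""
    num = sum(x[t] * x[t - 1] for t in range(1, len(x)))
    den = sum(x[t - 1] ** 2 for t in range(1, len(x)))
    return num / den

def forecast(x, phi, steps):
    """Iterate the fitted recurrence (noise set to its mean, zero) from the last observation."""
    preds, last = [], x[-1]
    for _ in range(steps):
        last = phi * last
        preds.append(last)
    return preds

series = simulate_ar1(phi=0.8, n=2000)
phi_hat = fit_ar1(series)
print(round(phi_hat, 2))            # should be close to the true value 0.8
print(forecast(series, phi_hat, 3))  # next three predicted values
```

Because the same recurrence driven by fresh noise (as in `simulate_ar1`) produces new series, an AR model can generate data as well as forecast it. Higher orders simply add more lagged values to the regression.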

Bayesian Network

A Bayesian network (or Bayes network) is a generative probabilistic graphical model that compactly represents the joint probability distribution over a set of random variables. A Bayesian network has two main parts: a structure and a set of parameters. The structure is a directed acyclic graph (DAG), and the parameters are the conditional probability distributions associated with each node, so the joint distribution factorises into the product of each variable's conditional distribution given its parents in the graph. Bayesian networks are used in applications such as time-series prediction, anomaly detection and reasoning under uncertainty.
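The sketch below assumes a hypothetical three-node network (Rain → Sprinkler, and Rain and Sprinkler → GrassWet) with made-up conditional probability tables. It shows both parts in plain Python: the DAG determines how the joint probability factorises, and the tables supply the parameters. The sketch answers a query by exact enumeration and generates samples by ancestral sampling. Real applications would normally use a dedicated library and more scalable inference.

```python
import random

# Hypothetical CPTs for the DAG: Rain -> Sprinkler, (Rain, Sprinkler) -> GrassWet.
P_RAIN = 0.2
P_SPRINKLER_GIVEN_RAIN = {True: 0.01, False: 0.4}
P_WET_GIVEN = {  # keyed by (sprinkler, rain)
    (False, False): 0.0, (False, True): 0.8,
    (True, False): 0.9, (True, True): 0.99,
}

def bern(p, value):
    """Probability that a Bernoulli(p) variable takes the given boolean value."""
    return p if value else 1.0 - p

def joint(rain, sprinkler, wet):
    """Factorisation implied by the DAG: P(R) * P(S | R) * P(W | S, R)."""
    return (bern(P_RAIN, rain)
            * bern(P_SPRINKLER_GIVEN_RAIN[rain], sprinkler)
            * bern(P_WET_GIVEN[(sprinkler, rain)], wet))

def p_rain_given_wet():
    """Exact inference: sum out the unobserved Sprinkler variable, then normalise."""
    num = sum(joint(True, s, True) for s in (True, False))
    den = sum(joint(r, s, True) for r in (True, False) for s in (True, False))
    return num / den

def sample(rng):
    """Ancestral sampling: draw each node after its parents, following the DAG order."""
    rain = rng.random() < P_RAIN
    sprinkler = rng.random() < P_SPRINKLER_GIVEN_RAIN[rain]
    wet = rng.random() < P_WET_GIVEN[(sprinkler, rain)]
    return rain, sprinkler, wet

print(round(p_rain_given_wet(), 4))  # 0.3577
rng = random.Random(0)
print([sample(rng) for _ in range(3)])  # three generated (rain, sprinkler, wet) tuples
```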

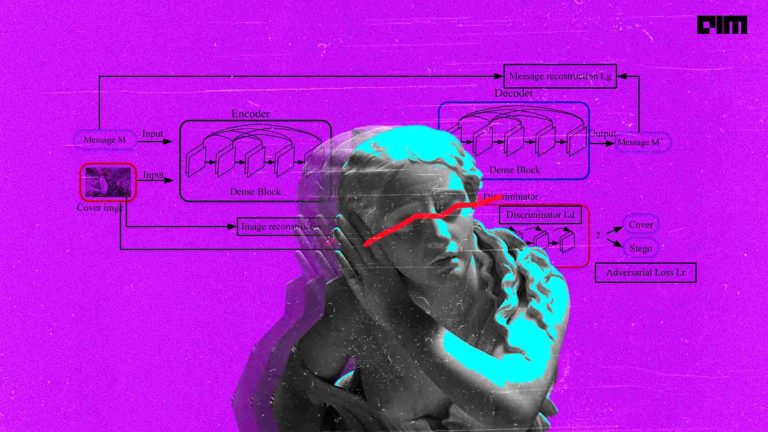

Generative Adversarial Networks

Generative Adversarial Networks (GANs) are popular generative models made up of two parts: a generator and a discriminator. A GAN estimates a generative model through an adversarial process. The generator learns to capture the data distribution, while the discriminator estimates the probability that a sample came from the training data rather than from the generator, and the two are trained against each other. GANs are among the most widely used generative models and have been used, for example, to create realistic images of people who do not exist.
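Training a real GAN requires neural networks and alternating gradient updates, which is beyond a short example. The minimal sketch below instead illustrates the adversarial objective on a hypothetical discrete distribution over three outcomes. It uses the minimax value function from the original GAN formulation, V(D, G) = E_data[log D(x)] + E_gen[log(1 - D(x))], together with the known result that, for a fixed generator, the best discriminator is D*(x) = p_data(x) / (p_data(x) + p_gen(x)). The helper names are illustrative only.

```python
import math

def optimal_discriminator(p_data, p_gen):
    """For a fixed generator, D*(x) = p_data(x) / (p_data(x) + p_gen(x))."""
    return [pd / (pd + pg) for pd, pg in zip(p_data, p_gen)]

def gan_value(p_data, p_gen, d):
    """V(D, G) = E_{x~p_data}[log D(x)] + E_{x~p_gen}[log(1 - D(x))] on a discrete space."""
    real = sum(pd * math.log(dx) for pd, dx in zip(p_data, d))
    fake = sum(pg * math.log(1.0 - dx) for pg, dx in zip(p_gen, d))
    return real + fake

def kl_divergence(p, q):
    """KL(p || q) for discrete distributions with full support."""
    return sum(a * math.log(a / b) for a, b in zip(p, q))

def js_divergence(p, q):
    """Jensen-Shannon divergence: average KL of p and q to their midpoint mixture."""
    m = [(a + b) / 2 for a, b in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)

p_data = [0.1, 0.4, 0.5]  # hypothetical "real" distribution over three outcomes
for p_gen in ([0.1, 0.4, 0.5], [0.6, 0.3, 0.1]):  # a perfect and an imperfect generator
    d_star = optimal_discriminator(p_data, p_gen)
    v = gan_value(p_data, p_gen, d_star)
    # The two printed numbers agree: V(D*, G) = -log 4 + 2 * JSD(p_data || p_gen).
    print(round(v, 4), round(-math.log(4) + 2 * js_divergence(p_data, p_gen), 4))
```

When the generator matches the data distribution, the optimal discriminator outputs 1/2 everywhere and the value drops to its minimum of -log 4; any mismatch raises it by twice the Jensen-Shannon divergence. This is why, in theory, the generator's best response to an optimal discriminator is to reproduce the data distribution.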

Gaussian Mixture Model

A Gaussian Mixture Model (GMM) is a generative probabilistic model that assumes all the data points are generated from a mixture of a finite number of Gaussian distributions with unknown parameters. GMMs are commonly used as a parametric model of the probability distribution of features in a biometric system, such as vocal-tract-related spectral features in a speaker recognition system. GMM parameters are estimated from training data using the iterative Expectation-Maximisation (EM) algorithm, or by Maximum A Posteriori (MAP) estimation adapted from a well-trained prior model.
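The EM procedure can be sketched for the one-dimensional case in plain Python. This is a minimal illustration under simplifying assumptions, not a production implementation: a fixed number of components, a crude range-based initialisation, a fixed iteration count and a small variance floor. The synthetic data, drawn from two Gaussians centred at -2 and 3, is hypothetical. The E-step computes each point's responsibility under every component, and the M-step re-estimates the weights, means and variances from those responsibilities.

```python
import math
import random

def normal_pdf(x, mu, var):
    """Density of N(mu, var) at x."""
    return math.exp(-(x - mu) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def em_gmm_1d(xs, k=2, iters=100):
    """Fit a 1-D Gaussian mixture with k components by Expectation-Maximisation."""
    # Crude initialisation: means spread over the data range, unit variances, equal weights.
    lo, hi = min(xs), max(xs)
    mus = [lo + (hi - lo) * (j + 0.5) / k for j in range(k)]
    variances = [1.0] * k
    weights = [1.0 / k] * k
    for _ in range(iters):
        # E-step: responsibility of component j for point x, i.e. P(component j | x).
        resp = []
        for x in xs:
            p = [w * normal_pdf(x, m, v) for w, m, v in zip(weights, mus, variances)]
            s = sum(p)
            resp.append([pj / s for pj in p])
        # M-step: responsibility-weighted re-estimates of each component's parameters.
        for j in range(k):
            nj = sum(r[j] for r in resp)
            weights[j] = nj / len(xs)
            mus[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            # Small floor keeps a variance from collapsing to zero.
            variances[j] = sum(r[j] * (x - mus[j]) ** 2 for r, x in zip(resp, xs)) / nj + 1e-6
    return weights, mus, variances

rng = random.Random(42)
xs = [rng.gauss(-2.0, 1.0) for _ in range(300)] + [rng.gauss(3.0, 0.5) for _ in range(200)]
weights, mus, variances = em_gmm_1d(xs)
print(weights, mus, variances)
```

Libraries such as scikit-learn provide a full implementation (`sklearn.mixture.GaussianMixture`) with multivariate covariances and convergence checks.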

Hidden Markov Model

A Hidden Markov Model (HMM) is a statistical model that can be used to describe the evolution of observable events that depend on internal factors, which are not directly observable. HMMs are known for their effectiveness in modelling the correlations between adjacent symbols, domains or events, and they have been used extensively in various fields, especially speech recognition and digital communication. An HMM consists of two stochastic processes: an invisible process of hidden states and a visible process of observable symbols.
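The following minimal sketch shows how the two processes combine. It assumes a hypothetical two-state weather model: hidden states Rainy and Sunny, observed activities walk, shop and clean, and made-up probabilities. The model is evaluated with the forward algorithm, which computes the probability of an observation sequence by summing over all hidden-state paths in O(T·N²) time. A brute-force enumeration of every path is included only to check the result.

```python
import itertools

STATES = ("Rainy", "Sunny")  # hypothetical hidden states
START = {"Rainy": 0.6, "Sunny": 0.4}
TRANS = {"Rainy": {"Rainy": 0.7, "Sunny": 0.3},
         "Sunny": {"Rainy": 0.4, "Sunny": 0.6}}
EMIT = {"Rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
        "Sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1}}

def forward(obs):
    """P(obs): alpha[s] holds P(obs so far, current hidden state = s)."""
    alpha = {s: START[s] * EMIT[s][obs[0]] for s in STATES}
    for o in obs[1:]:
        alpha = {s: sum(alpha[prev] * TRANS[prev][s] for prev in STATES) * EMIT[s][o]
                 for s in STATES}
    return sum(alpha.values())

def brute_force(obs):
    """Same quantity by enumerating all N^T hidden-state paths (exponential cost)."""
    total = 0.0
    for path in itertools.product(STATES, repeat=len(obs)):
        p = START[path[0]] * EMIT[path[0]][obs[0]]
        for t in range(1, len(obs)):
            p *= TRANS[path[t - 1]][path[t]] * EMIT[path[t]][obs[t]]
        total += p
    return total

obs = ["walk", "shop", "clean"]
print(round(forward(obs), 6))  # 0.033612
```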

Latent Dirichlet Allocation (LDA)

Latent Dirichlet Allocation (LDA) is a generative probabilistic model for collections of discrete data such as text corpora. LDA is a three-level hierarchical Bayesian model, in which each item of a collection is modelled as a finite mixture over an underlying set of topics. The model has applications to various problems, including collaborative filtering and content-based image retrieval.
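LDA is easiest to understand through the generative process it assumes, sketched below in plain Python. The three levels map onto the code as follows. Corpus-level parameters (a Dirichlet prior α and topic-word distributions) are shared by all documents. Each document draws its own topic proportions θ from Dirichlet(α). Each word draws a topic z from θ and then a word from that topic. The two-topic vocabulary and probabilities are hypothetical. The sketch covers only generation: fitting LDA to real text requires an inference method such as variational inference or Gibbs sampling.

```python
import random

def sample_dirichlet(alpha, rng):
    """Draw from Dirichlet(alpha) by normalising independent Gamma(alpha_i, 1) draws."""
    g = [rng.gammavariate(a, 1.0) for a in alpha]
    s = sum(g)
    return [x / s for x in g]

VOCAB = ["goal", "match", "team", "vote", "party", "election"]
# Hypothetical corpus-level topic-word distributions: topic 0 ~ sport, topic 1 ~ politics.
TOPICS = [
    [0.30, 0.30, 0.30, 0.04, 0.03, 0.03],
    [0.03, 0.03, 0.04, 0.30, 0.30, 0.30],
]

def generate_corpus(n_docs, doc_len, alpha=(0.5, 0.5), seed=0):
    """Generate (theta, words) pairs following LDA's generative process."""
    rng = random.Random(seed)
    corpus = []
    for _ in range(n_docs):
        theta = sample_dirichlet(alpha, rng)  # document level: topic proportions
        words = []
        for _ in range(doc_len):
            z = rng.choices(range(len(TOPICS)), weights=theta)[0]   # word level: topic
            words.append(rng.choices(VOCAB, weights=TOPICS[z])[0])  # word from topic z
        corpus.append((theta, words))
    return corpus

for theta, words in generate_corpus(n_docs=3, doc_len=8):
    print([round(t, 2) for t in theta], words)
```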

Variational Autoencoders (VAEs)

Variational Autoencoders (VAEs) have become one of the most popular approaches to unsupervised learning of complicated distributions. They are built on top of standard function approximators (neural networks) and can be trained with stochastic gradient descent. VAEs have been applied to generating many kinds of complicated data, including handwritten digits, faces and CIFAR images, as well as to predicting the future from static images.
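Two ingredients that let a VAE be trained with stochastic gradient descent can be shown without any neural network code. The first is the reparameterisation trick, which writes a latent sample as z = μ + σ·ε with ε ~ N(0, 1), so that gradients can flow through μ and σ. The second is the closed-form KL divergence between the encoder's diagonal Gaussian and a standard normal prior, which is the regularisation term of the VAE loss. In the minimal sketch below, the encoder output (μ, log σ²) for one data point is hypothetical, and the encoder, decoder and reconstruction term are omitted. A Monte Carlo estimate built from reparameterised samples is compared with the closed form as a sanity check.

```python
import math
import random

def reparameterize(mu, logvar, rng):
    """z = mu + sigma * eps with eps ~ N(0, 1); randomness is isolated in eps."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]

def kl_to_standard_normal(mu, logvar):
    """Closed-form KL( N(mu, diag(exp(logvar))) || N(0, I) ), the VAE regulariser."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))

def log_normal(z, mu, logvar):
    """Log-density of a diagonal Gaussian evaluated at z."""
    return sum(-0.5 * (math.log(2 * math.pi) + lv + (x - m) ** 2 / math.exp(lv))
               for x, m, lv in zip(z, mu, logvar))

def monte_carlo_kl(mu, logvar, n=20000, seed=0):
    """Estimate the same KL by averaging log q(z) - log p(z) over reparameterised samples."""
    rng = random.Random(seed)
    zeros = [0.0] * len(mu)  # prior N(0, I): zero mean, zero log-variance
    total = 0.0
    for _ in range(n):
        z = reparameterize(mu, logvar, rng)
        total += log_normal(z, mu, logvar) - log_normal(z, zeros, zeros)
    return total / n

mu, logvar = [1.0], [math.log(0.25)]  # hypothetical encoder output: mean 1, std 0.5
print(kl_to_standard_normal(mu, logvar))  # closed form
print(monte_carlo_kl(mu, logvar))         # sampling estimate, close to the closed form
```

In a full VAE, this KL term is added to a reconstruction loss computed by the decoder from z, and both networks are updated together by gradient descent.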
