To be scared of something you’ve built is probably the worst of all fears. It comes with guilt and resentment over what you have done. This is the case for several pioneering AI researchers, such as Geoffrey Hinton and Yoshua Bengio, who now speak of turning away from their life’s work to warn about the dangers of AI.

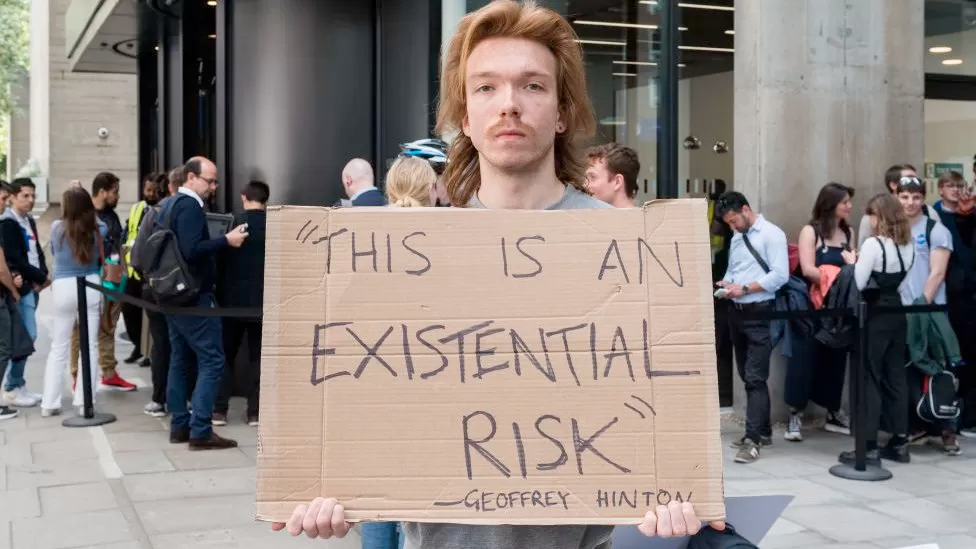

Bengio told the BBC that he feels “lost” over his life’s work. He is the second “godfather” of AI to voice such fears and call for AI regulation. Hinton was the first: he left Google so he could warn the world about the breakneck pace at which AI technology is being developed. Hinton has described AI as “an existential risk”.

Overselling fear

Last week, hundreds of AI scientists and researchers joined in warning that the future of humanity is at risk. Besides Hinton and Bengio, the signatories of the Statement on AI Risk included OpenAI’s Sam Altman, Google DeepMind’s Demis Hassabis and Stability AI’s Emad Mostaque, among others.

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war,” reads the statement. Essentially, most of those worried about the technology are concerned about what happens if it falls into the hands of “bad actors”, such as militaries and terrorist organisations.

When the experts themselves point out the risks of a technology, it is tempting, and all too easy, to buy into those fears. On the flip side, people who are thriving in the AI revolution are now pushing for faster AI development. That is probably also because open-source models have made it much easier to build models like ChatGPT. Take, for example, the recent debate over the need for uncensored LLMs, some of which are outperforming bigger models.

This may also be one reason why AI experts are now turning into AI doomsayers: they are increasingly afraid of the technology ending up in the hands of people who are not well versed in the possibility of it going rogue.

By contrast, the third godfather of AI, Yann LeCun, has met researchers’ doomsday prophecies with a facepalm. LeCun, the Meta AI chief, has been vocal in criticising those who overestimate the capabilities of current generative AI models such as ChatGPT and Bard.

A statistician's view of AGI doomer drama. https://t.co/nx1pGaNVt9

— Yann LeCun (@ylecun) June 4, 2023

Another AI expert calling out colleagues who have turned into AI doomers is Kyunghyun Cho, regarded as one of the pioneers of neural machine translation, the line of work that led to Google’s development of the Transformer. In a media interview, Cho said that glorifying “hero scientists” amounts to taking their words as gospel truth without reasoning through what they actually mean.

He expressed frustration with the direction of the AI discourse, calling the US Senate hearing disappointing because it discussed only the problems of AI and not its benefits. “I’m disappointed by a lot of this discussion about existential risk; now they even call it literal ‘extinction’. It’s sucking the air out of the room,” he said.

Losing credibility

The point is, it is very easy for someone who has never deployed a single ML model to become an AI doomer; such people are often influenced by the hundreds of films about machines taking over the world. But when so-called AI experts adopt the same narrative, with the same explanations, it starts to look like mere fearmongering rather than a reasoned concern.

Reminder: most AI researchers think the notion of AI ending human civilization is baloney.

— Pedro Domingos (@pmddomingos) May 3, 2023

Take Elon Musk, the longest-running AI doomer, who shows no sign of stopping. In a recent interview with the Wall Street Journal, Musk said, “I don’t think AI is going to try to destroy all humanity, but it might put us under strict controls.” He still thinks there is a “non-zero chance” of AI going full Terminator on humanity.

Earlier, the petition calling for a pause on giant AI experiments, so that no models more powerful than GPT-4 would be trained, was backed by many AI researchers, along with Musk and Steve Wozniak. The new statement is very similar to that one, except that this time it includes Altman. That points to many possible motives other than a genuine fear of technology taking over humanity.

Altman has asked the government to regulate AI development, yet he also holds that OpenAI should be evaluated differently. This raises questions about the company’s motives for pushing government regulation of AI, since such regulation presents a clear conflict of interest for Altman.

We all know that fear sells. “Unfortunately, the sensational stories read more. The idea is that either AI is going to kill us all or AI is going to cure everything — both of those are incorrect,” explained Cho.