OpenPose is a real-time multi-person keypoint detection library, and the first to show that human body, face, and foot keypoints can be detected jointly. Credit goes to Gines Hidalgo, Zhe Cao, Tomas Simon, Shih-En Wei, Hanbyul Joo, and Yaser Sheikh for making this project successful. The library also depends heavily on the CMU Panoptic Studio dataset.

OpenPose is written in C++ on top of Caffe. It is a very popular library, with roughly 19.8k stars and 6k forks on GitHub, and today we are going to look at it through a small implementation in Python. The authors provide builds for several operating systems and languages, so you can try it on your local machine with or without a GPU, on Linux or on another operating system.

The OpenPose library has many features. Let’s look at some of the most remarkable ones:

- Real-time 2D multi-person keypoint detections.

- Real-time 3D single-person keypoint detections.

- A calibration toolbox for estimating distortion, intrinsic, and extrinsic camera parameters.

- Single-person tracking for speeding up the detection and visual smoothing.

OpenPose Pipeline

Before going into the coding and implementation, let’s look at the pipeline followed by OpenPose.

- First, an input RGB (red, green, blue) image is fed into a “two-branch multi-stage” convolutional neural network (CNN), i.e. a CNN that produces two different outputs.

- The top branch, shown in beige in the figure above, predicts confidence maps (Figure 1b) for different body parts such as the right eye, left eye, and right elbow.

- The bottom branch predicts the part affinity fields (Figure 1c), which represent the degree of association between different body parts in the input image.

- Next, the confidence maps and affinity fields are parsed by greedy inference (Figure 1d).

- Finally, the 2D keypoints for all people in the image are output as the pose estimate (Figure 1e).
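The greedy inference step can be illustrated with a small NumPy sketch. It scores a candidate limb by averaging the dot product between the affinity field and the limb direction at points sampled along the segment, then pairs candidates greedily by descending score. This is a simplified illustration, not OpenPose's actual code: the function names, the sample count, and the `min_score` threshold are all assumptions made for the example.

```python
import numpy as np


def paf_score(paf_x, paf_y, p1, p2, n_samples=10):
    """Approximate the line integral of an affinity field between two points.

    paf_x, paf_y: 2D arrays (height, width) with the x and y field components.
    p1, p2: (x, y) pixel coordinates of two candidate keypoints.
    """
    p1 = np.asarray(p1, dtype=float)
    p2 = np.asarray(p2, dtype=float)
    limb = p2 - p1
    length = np.linalg.norm(limb)
    if length == 0:
        return 0.0
    unit = limb / length
    # Sample points evenly along the segment p1 -> p2 (nearest pixel).
    ts = np.linspace(0.0, 1.0, n_samples)
    xs = np.rint(p1[0] + ts * limb[0]).astype(int)
    ys = np.rint(p1[1] + ts * limb[1]).astype(int)
    xs = np.clip(xs, 0, paf_x.shape[1] - 1)
    ys = np.clip(ys, 0, paf_x.shape[0] - 1)
    # Dot product of the field with the limb direction at each sample.
    dots = paf_x[ys, xs] * unit[0] + paf_y[ys, xs] * unit[1]
    return float(dots.mean())


def greedy_match(starts, ends, paf_x, paf_y, min_score=0.05):
    """Pair start and end candidates greedily, best score first.

    Returns a list of (start_index, end_index, score); each candidate
    is used at most once.
    """
    scored = [
        (paf_score(paf_x, paf_y, s, e), i, j)
        for i, s in enumerate(starts)
        for j, e in enumerate(ends)
    ]
    scored.sort(reverse=True)
    used_starts, used_ends, pairs = set(), set(), []
    for score, i, j in scored:
        if score < min_score:
            break  # remaining candidates score even lower
        if i in used_starts or j in used_ends:
            continue
        used_starts.add(i)
        used_ends.add(j)
        pairs.append((i, j, score))
    return pairs
```

For example, with two elbow candidates and two wrist candidates, `greedy_match(elbows, wrists, paf_x, paf_y)` keeps the pairings whose connecting segment is best aligned with the forearm affinity field, which is how keypoints of different people are kept apart.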

To capture finer detail, the network is multi-stage: the two-branch block is repeated, with one stage stacked after another, so that each stage refines the predictions of the previous one and the overall network becomes deeper.

Figure 2. OpenPose Architecture of the two-branch multi-stage CNN.

In Figure 2, the top branch of the OpenPose network produces a set of detection confidence maps S. The mathematical definition is as follows.
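In the notation of the Part Affinity Fields paper listed in the resources at the end (assuming that is the definition intended here), the two outputs and the stage-by-stage refinement can be written as:

```latex
S = (S_1, S_2, \ldots, S_J), \qquad S_j \in \mathbb{R}^{w \times h}, \quad j \in \{1, \ldots, J\}

L = (L_1, L_2, \ldots, L_C), \qquad L_c \in \mathbb{R}^{w \times h \times 2}, \quad c \in \{1, \ldots, C\}

S^1 = \rho^1(F), \qquad L^1 = \phi^1(F)

S^t = \rho^t(F, S^{t-1}, L^{t-1}), \qquad L^t = \phi^t(F, S^{t-1}, L^{t-1}), \qquad \forall t \geq 2
```

Here J is the number of body parts, C the number of limbs, w × h the image size, F the feature maps computed from the input image, and ρ^t and φ^t the CNNs of the top and bottom branch at stage t. Each confidence map S_j holds one score per pixel, while each affinity field L_c holds a 2D vector per pixel.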

Outputs of Multi-Stage OpenPose network

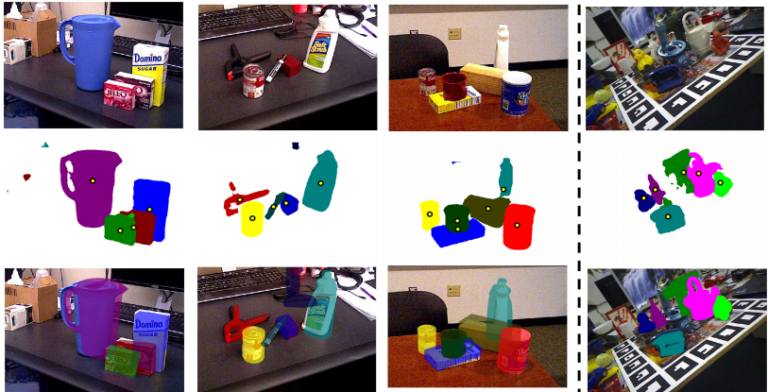

Let’s see how the pipeline outputs change stage by stage until, at the end, we get the pose estimate for the input image.

In Figure 3 above, the top row (blue overlay) shows the OpenPose network predicting confidence maps of the right wrist across different stages.

The bottom row shows the network predicting the part affinity fields of the right forearm (right elbow to right wrist) across the same stages.
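To make the idea of a confidence map concrete, here is a minimal NumPy sketch. It builds a map as the per-pixel maximum of Gaussians centred on each person's keypoint, then recovers the keypoint candidates as local maxima. The function names, `sigma`, and the threshold are illustrative assumptions; OpenPose's own peak extraction is implemented differently in C++/CUDA.

```python
import numpy as np


def gaussian_confidence_map(shape, centers, sigma=2.0):
    """Build a (height, width) map with one Gaussian blob per (x, y) center.

    Overlapping blobs are combined with a per-pixel maximum, so nearby
    peaks stay distinct instead of being averaged together.
    """
    ys, xs = np.mgrid[0:shape[0], 0:shape[1]]
    blobs = [
        np.exp(-((xs - cx) ** 2 + (ys - cy) ** 2) / sigma ** 2)
        for cx, cy in centers
    ]
    return np.max(blobs, axis=0)


def find_peaks(conf_map, threshold=0.1):
    """Return (x, y, score) for every local maximum above the threshold."""
    conf_map = np.asarray(conf_map, dtype=float)
    # Pad with -inf so border pixels can be compared with their neighbours.
    padded = np.pad(conf_map, 1, mode="constant", constant_values=-np.inf)
    center = padded[1:-1, 1:-1]
    is_peak = (
        (center > threshold)
        & (center > padded[:-2, 1:-1])  # neighbour above
        & (center > padded[2:, 1:-1])   # neighbour below
        & (center > padded[1:-1, :-2])  # neighbour to the left
        & (center > padded[1:-1, 2:])   # neighbour to the right
    )
    ys, xs = np.nonzero(is_peak)
    return [(int(x), int(y), float(conf_map[y, x])) for y, x in zip(ys, xs)]
```

Calling `find_peaks(gaussian_confidence_map((20, 24), [(5, 7), (15, 3)]))` yields one candidate per blob, which corresponds to one right-wrist candidate per person in the image.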

Implementation

The single cell below clones OpenPose from GitHub into your Google Colab GPU runtime, installs a newer CMake (needed because of CUDA 10), and installs all the dependencies needed to build and run the library. We also need the youtube-dl library so that OpenPose pose estimation and keypoint detection can be run directly on YouTube videos.

Installing OpenPose

import os
from os.path import exists, join, basename, splitext

# repository URL used for cloning OpenPose
git_repo_url = 'https://github.com/CMU-Perceptual-Computing-Lab/openpose.git'
project_name = splitext(basename(git_repo_url))[0]

# only clone and build if the project folder does not exist yet
if not exists(project_name):
    # see: https://github.com/CMU-Perceptual-Computing-Lab/openpose/issues/949
    # install a newer CMake because of CUDA 10
    !wget -q https://cmake.org/files/v3.13/cmake-3.13.0-Linux-x86_64.tar.gz
    !tar xfz cmake-3.13.0-Linux-x86_64.tar.gz --strip-components=1 -C /usr/local
    # clone openpose
    !git clone -q --depth 1 $git_repo_url
    # pin the bundled Caffe to a fixed commit instead of master
    !sed -i 's/execute_process(COMMAND git checkout master WORKING_DIRECTORY ${CMAKE_SOURCE_DIR}\/3rdparty\/caffe)/execute_process(COMMAND git checkout f019d0dfe86f49d1140961f8c7dec22130c83154 WORKING_DIRECTORY ${CMAKE_SOURCE_DIR}\/3rdparty\/caffe)/g' openpose/CMakeLists.txt
    # install system dependencies
    !apt-get -qq install -y libatlas-base-dev libprotobuf-dev libleveldb-dev libsnappy-dev libhdf5-serial-dev protobuf-compiler libgflags-dev libgoogle-glog-dev liblmdb-dev opencl-headers ocl-icd-opencl-dev libviennacl-dev
    # install python dependencies
    !pip install -q youtube-dl
    # build openpose
    !cd openpose && rm -rf build || true && mkdir build && cd build && cmake .. && make -j`nproc`

from IPython.display import YouTubeVideo

Input & preprocess a Custom video for Pose estimation

We are going to use the charlie video as our input sample. For a test run a short clip is enough, so we don’t need to wait for the whole video to be processed.

YOUTUBE_ID = '0daS_SDCT_U'
YouTubeVideo(YOUTUBE_ID)
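The step that turns the YouTube video into `output.mp4` is not shown in this article. The sketch below is one possible way to do it, assuming youtube-dl and ffmpeg are available on the PATH and OpenPose was built under `openpose/build` by the install cell. The intermediate file names (`youtube.mp4`, `video.mp4`, `openpose.avi`), the 5-second clip length, and the helper name are assumptions made for this example. The function only builds the commands so they can be inspected before running anything.

```python
import shlex


def build_pipeline_commands(youtube_id, seconds=5, openpose_dir="openpose"):
    """Return (working_directory, argv) pairs for the four pipeline steps."""
    url = "https://www.youtube.com/watch?v={}".format(youtube_id)
    return [
        # 1. Download the video as MP4 with youtube-dl.
        (".", ["youtube-dl", "-f", "bestvideo[ext=mp4]",
               "--output", "youtube.%(ext)s", url]),
        # 2. Keep only the first `seconds` seconds to shorten the test run.
        (".", ["ffmpeg", "-y", "-i", "youtube.mp4",
               "-t", str(seconds), "video.mp4"]),
        # 3. Run the OpenPose demo binary from inside the repository folder,
        #    without a display window, writing JSON keypoints and a video.
        (openpose_dir, ["./build/examples/openpose/openpose.bin",
                        "--video", "../video.mp4",
                        "--write_json", "./output/",
                        "--display", "0",
                        "--write_video", "../openpose.avi"]),
        # 4. Convert the rendered AVI to MP4 so the browser can play it.
        (".", ["ffmpeg", "-y", "-i", "openpose.avi", "output.mp4"]),
    ]


def format_commands(commands):
    """Render the commands as shell lines, e.g. for pasting into a notebook."""
    return ["(cd {} && {})".format(shlex.quote(cwd), shlex.join(argv))
            for cwd, argv in commands]
```

To actually run the steps, each pair can be passed to `subprocess.run(argv, cwd=cwd, check=True)`; in Colab, the lines returned by `format_commands(build_pipeline_commands(YOUTUBE_ID))` can also be executed as `!` shell commands.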

Input video:

Output

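import io
import base64
from IPython.display import HTML

file_name = 'output.mp4'
width = 640
height = 480

# read the rendered video and encode it as base64 so it can be embedded inline
with io.open(file_name, 'rb') as f:
    video_encoded = base64.b64encode(f.read())

# as the last expression in the cell, the HTML object is displayed
# (a bare `return` is only valid inside a function)
HTML(data='''<video width="{0}" height="{1}" alt="test" controls>
<source src="data:video/mp4;base64,{2}" type="video/mp4" />
</video>'''.format(width, height, video_encoded.decode('ascii')))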

Output video:
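Besides the rendered video, OpenPose can write the detected keypoints as JSON when it is run with its `--write_json` flag, one file per frame. The sketch below parses such a file into NumPy arrays. It assumes the layout used by recent OpenPose releases, where each entry of `people` has a flat `pose_keypoints_2d` list of x, y, confidence triples; older releases use a different key name, so treat the key as an assumption to check against your own output.

```python
import json

import numpy as np


def parse_openpose_json(text, key="pose_keypoints_2d"):
    """Return one (num_keypoints, 3) array of (x, y, confidence) per person.

    text: contents of one per-frame JSON file.
    key: name of the flat keypoint list (assumed; depends on the version).
    """
    frame = json.loads(text)
    people = []
    for person in frame.get("people", []):
        flat = person.get(key, [])
        # Reshape [x1, y1, c1, x2, y2, c2, ...] into rows of (x, y, c).
        people.append(np.asarray(flat, dtype=float).reshape(-1, 3))
    return people
```

Each returned array has one row per body keypoint, so `people[0][:, 2]` gives the confidence of every keypoint of the first detected person.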

Conclusion

OpenPose is one of the most widely used libraries for pose estimation and body keypoint detection, and it accurately detects foot, body joint, and face keypoints. To learn more, you can follow the resources below, which include code and research papers for a deeper understanding of OpenPose:

- OpenPose Official GitHub Repository

- Research paper on OpenPose: Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields.

- Research Paper using Hand and face detectors: Hand Keypoint Detection in Single Images using Multiview Bootstrapping.

- Convolutional Pose Machines

- Download the latest Windows portable version of OpenPose

- OpenPose foot dataset

- OpenPose training code

- Command-line demo

- tf-pose-estimation: Tensorflow implementation of OpenPose

- Above demonstration Code: Colab Notebook.