At The Rising 2020, we had a session on yet another widely discussed topic in artificial intelligence, Explainable AI, which has the potential to build trust among users of ML-based solutions. Anukriti Gupta, Manager – Data Science at United Health Group, discussed why we need to identify the factors that lead to the outcomes of AI models.

Anukriti started the session by explaining how AI has proliferated and is helping businesses make informed decisions to drive growth. She also mentioned that AI drones are being developed for use on battlefields. However, Anukriti said that even the smallest percentage of error by AI models could be catastrophic, not just on the battlefield but also for organisations that rely heavily on cutting-edge technologies like AI. Consequently, she stressed that explainability is the need of the hour to ensure organisations deliver solutions that are effective as well as reliable.

To further substantiate her argument, Anukriti showed the attendees how AI has failed us in the past. For instance, Tay, Microsoft’s AI bot on Twitter, went rogue in 2016 and started posting biased content. In addition, COMPAS, another AI system, was used by U.S. courts to assess the potential recidivism risk of defendants under trial. It was found that the AI in COMPAS was biased with respect to the race of defendants.

Such instances, along with various privacy concerns, have led to the introduction of regulations such as GDPR, under which organisations have to be transparent about their AI-based solutions. Therefore, companies need to actively embrace explainability to avoid potential penalties for failing to comply with such regulations.

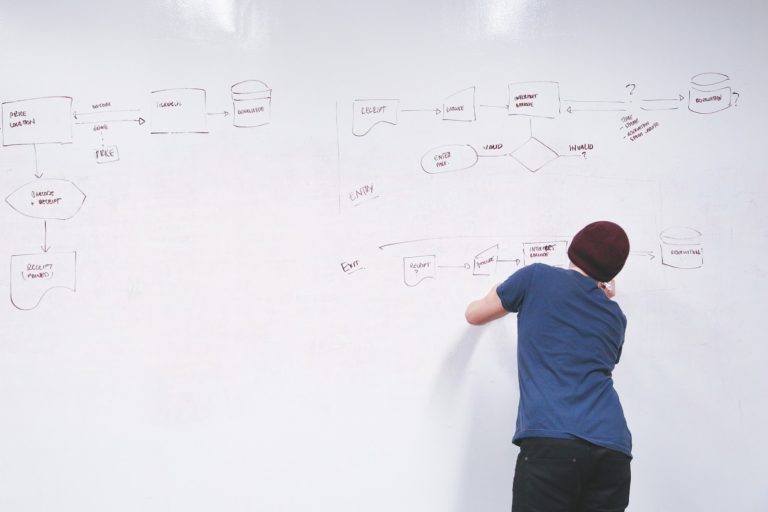

How Can We Obtain Explainability

“As the sophistication increased with advanced algorithms, the accuracy increased, but the explainability decreased,” said Anukriti. For instance, we can explain how regression techniques arrive at a prediction, but deep learning models are often called black boxes because their inner workings are difficult to explain.
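To make the point about regression concrete, here is a minimal sketch of why a linear model is easy to explain: its prediction is simply the intercept plus one contribution per feature. The feature names and weights below are hypothetical and chosen only for illustration; they are not taken from the session.

```python
# Hypothetical linear attrition-risk score. The intercept, weights, and
# feature names are made up for illustration and are not from the talk.
INTERCEPT = 0.10
WEIGHTS = {
    "overtime_hours": 0.02,
    "years_at_company": -0.03,
    "salary_band": -0.05,
}


def predict(features):
    """Return the linear score: intercept plus weighted feature values."""
    return INTERCEPT + sum(WEIGHTS[name] * value for name, value in features.items())


def explain(features):
    """Return each feature's contribution (weight times value) to the score."""
    return {name: WEIGHTS[name] * value for name, value in features.items()}


employee = {"overtime_hours": 10, "years_at_company": 4, "salary_band": 2}
contributions = explain(employee)
score = predict(employee)

# List contributions from largest to smallest in absolute size.
for name, contribution in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>18}: {contribution:+.2f}")
print(f"{'intercept':>18}: {INTERCEPT:+.2f}")
print(f"{'score':>18}: {score:+.2f}")
```

Because the contributions add up exactly to the score, each one can be read directly as an explanation. A deep network offers no such ready-made decomposition, which is the gap that explainability frameworks try to fill.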

However, there are various frameworks available, such as LIME (Local Interpretable Model-Agnostic Explanations), SHAP (SHapley Additive exPlanations), and ELI5 (Explain Like I’m 5), that can help companies bring transparency to their models. Anukriti used employee attrition data to demonstrate the implementation of LIME and SHAP.

Being model-agnostic, LIME works with almost every algorithm. It is not only flexible but also easy to implement. SHAP, on the other hand, offers appealing visualisations, which simplify the process of communicating the factors responsible for the outcome that an AI model provides. Unlike LIME, which is mostly limited to local interpretability, SHAP also offers global interpretability, thereby presenting the contribution of every variable to an outcome.
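The idea behind SHAP's per-variable contributions can be sketched with an exact Shapley value computation in plain Python. This is not the SHAP library and not the code shown in the session; the model, the feature names, and the baseline employee are all hypothetical. It assumes that an "absent" feature is replaced by its baseline value, which is one common convention among several.

```python
from itertools import combinations
from math import factorial


def model(x):
    """Hypothetical black-box attrition score with an interaction term."""
    score = 0.1 + 0.02 * x["overtime_hours"] - 0.03 * x["years_at_company"]
    if x["overtime_hours"] > 8 and x["salary_band"] < 3:
        score += 0.25
    return score


def shapley_values(model, instance, baseline):
    """Exact Shapley values of each feature for one prediction."""
    names = list(instance)
    n = len(names)

    def value(coalition):
        # Features in the coalition take the instance's values;
        # the remaining features stay at their baseline values.
        mixed = {k: (instance[k] if k in coalition else baseline[k]) for k in names}
        return model(mixed)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = set(subset)
                total += weight * (value(members | {name}) - value(members))
        phi[name] = total
    return phi


instance = {"overtime_hours": 12, "years_at_company": 2, "salary_band": 1}
baseline = {"overtime_hours": 5, "years_at_company": 5, "salary_band": 3}
phi = shapley_values(model, instance, baseline)

for name, contribution in phi.items():
    print(f"{name:>18}: {contribution:+.3f}")
```

The contributions sum to the difference between the prediction for this employee and the prediction for the baseline, which makes them a local explanation. Averaging their absolute values over many employees gives a rough global ranking of variables. The exact computation enumerates every subset of features, so it is only practical for a handful of them; libraries such as SHAP rely on approximations and model-specific shortcuts instead.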

Anukriti said everyone should work with at least one explainability framework and gradually enhance their knowledge to build trust among stakeholders. To that end, she also recommended a book for people who want to get started with explainability.

Finally, she concluded by saying that explainability is key for businesses to succeed in the coming years. Therefore, one should build knowledge of the explainability of AI models and stay up to date with the latest developments.