When we share or receive an image on WhatsApp or other social media platforms, its quality is often noticeably reduced, and sometimes we can barely extract any information from it. This degradation is mainly caused by the compression algorithms these platforms apply. Several methods for restoring such images have already been proposed; in this post we’ll take a look at the Real-ESRGAN model, which restores images more accurately than most previous methods. The points to be discussed in this post are listed below.

Table of contents

- What is blind image restoration?

- How does Real-ESRGAN restore the image?

- Implementing Real-ESRGAN

Let’s first discuss the basic working mechanism of these restoration models.

What is blind image restoration?

Image restoration attempts to recreate the original (ideal) scene from a degraded observation, and many image processing applications rely on such a recovery step. Ideally, image restoration reverses the degradation that occurs during image acquisition and processing. If the degradation is extreme, it may be impossible to reconstruct the original scene entirely, but partial recovery may still be achievable.

Blurring and noise are two common types of degradation introduced during image capture. Blurring can be caused, for example, by sensor motion or an out-of-focus camera. Classical restoration assumes that the blurring function (also known as the point-spread function, PSF) is known before restoration begins. When this blurring function is unknown, the problem is referred to as blind image restoration.

Blind image restoration is the task of estimating both the original image and the point-spread function simultaneously, using only partial information about the imaging process and, possibly, about the original image. The various methods proposed for it depend on the specific degradation and image models they assume.
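To make these terms concrete, here is a minimal sketch of the classical degradation model y = (x * k)↓s + n, where * denotes convolution with the PSF k, ↓s is downsampling by a factor s, and n is additive noise. We assume a Gaussian PSF and Gaussian noise purely for illustration, and the helper names are hypothetical. Non-blind restoration knows k; in the blind setting only y is observed, and x and k must both be estimated from it.

```python
import numpy as np


def gaussian_psf(size: int, sigma: float) -> np.ndarray:
    """Isotropic Gaussian point-spread function (odd size), normalized to sum to 1."""
    ax = np.arange(size) - (size - 1) / 2.0
    xx, yy = np.meshgrid(ax, ax)
    k = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return k / k.sum()


def convolve2d(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Same'-size 2-D convolution with reflect padding; expects an odd-sized kernel."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, ph), (pw, pw)), mode="reflect")
    flipped = kernel[::-1, ::-1]  # flip so the sliding sum is a true convolution
    out = np.zeros(img.shape, dtype=np.float64)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def degrade(x, psf, scale, noise_sigma, rng):
    """Classical model: blur x with the PSF, downsample by `scale`, add Gaussian noise."""
    blurred = convolve2d(x, psf)
    down = blurred[::scale, ::scale]  # plain decimation
    noisy = down + rng.normal(0.0, noise_sigma, down.shape)
    return np.clip(noisy, 0.0, 1.0)


rng = np.random.default_rng(0)
x = rng.random((64, 64))  # stand-in for a clean grayscale image in [0, 1]
k = gaussian_psf(size=7, sigma=1.5)
y = degrade(x, k, scale=2, noise_sigma=0.02, rng=rng)
print(y.shape)  # (32, 32)
```

A blind method would receive only `y` and have to recover both `x` and `k`, which is why it usually needs extra assumptions about the image or the blur.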

Direct measurement and indirect estimation are the two main approaches to blind image restoration. Direct measurement methods first measure the unknown system properties, such as the blur impulse response and the noise level, from the image to be restored, and then use these quantities in the restoration. Indirect estimation approaches instead use procedures that either produce the restoration directly or determine key elements of the restoration algorithm, without measuring the degradation explicitly beforehand.
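As an illustration of direct measurement, the sketch below estimates the noise level of an image before any restoration takes place. It applies the robust median-absolute-deviation estimator to the finest-scale Haar wavelet diagonal coefficients, a classical technique from wavelet denoising that we chose for this example; the function name is hypothetical, and it assumes additive white Gaussian noise on a grayscale image.

```python
import numpy as np


def estimate_noise_sigma(img: np.ndarray) -> float:
    """Estimate the std of additive white Gaussian noise in a grayscale image.

    Uses median(|HH|) / 0.6745 on the finest Haar diagonal (HH) coefficients,
    which are dominated by noise in most natural images.
    """
    h, w = img.shape
    img = img[: h - h % 2, : w - w % 2].astype(np.float64)  # crop to even size
    a = img[0::2, 0::2]
    b = img[0::2, 1::2]
    c = img[1::2, 0::2]
    d = img[1::2, 1::2]
    hh = (a - b - c + d) / 2.0  # orthonormal Haar diagonal detail
    return float(np.median(np.abs(hh)) / 0.6745)


rng = np.random.default_rng(1)
yy, xx = np.mgrid[0:256, 0:256]
clean = (xx + yy) / 512.0  # smooth ramp: its Haar diagonal details are exactly zero
noisy = clean + rng.normal(0.0, 0.05, clean.shape)
print(estimate_noise_sigma(noisy))  # should be close to 0.05
```

The estimated sigma could then be handed to a non-blind denoiser, which is exactly the "measure first, restore second" pattern described above.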

How does Real-ESRGAN restore the image?

Real-ESRGAN is an extension of the powerful ESRGAN that is trained on image pairs synthesized with a more practical degradation process, so that it can restore general real-world low-resolution images. Real-ESRGAN is able to repair most real-world photos and achieves better visual quality than prior works, which makes it more useful in practical applications.

Real-world degradations are usually complex, frequently resulting from intricate combinations of several degradation processes, such as the camera imaging system, image editing, and Internet transmission.

To handle such degradations, the authors propose a “high-order” degradation model: real-world degradations are represented as several repeated degradation processes, each of which is the classical degradation model (blur, downsampling, noise, and JPEG compression). In particular, Real-ESRGAN uses a second-order degradation process, which strikes a reasonable balance between simplicity and effectiveness.

Furthermore, it makes several essential modifications to ESRGAN’s discriminator (e.g., a U-Net discriminator with spectral normalization) to increase the discriminator’s capability and stabilize the training dynamics.
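Spectral normalization divides each weight matrix by its largest singular value, which bounds how sharply the discriminator can react to small input changes. Below is a minimal NumPy sketch that estimates that singular value with power iteration; the function name and the example weight shape are hypothetical, and this is an illustration of the technique rather than Real-ESRGAN’s own code.

```python
import numpy as np


def spectral_normalize(w, n_iters=50, rng=None):
    """Return (w / sigma, sigma), where sigma estimates the largest singular value.

    Conv weights shaped (out, in, kh, kw) are flattened to (out, in*kh*kw) first.
    """
    rng = np.random.default_rng(0) if rng is None else rng
    w2d = w.reshape(w.shape[0], -1)
    u = rng.normal(size=w2d.shape[0])
    u /= np.linalg.norm(u)
    for _ in range(n_iters):  # power iteration on w2d^T w2d
        v = w2d.T @ u
        v /= np.linalg.norm(v)
        u = w2d @ v
        u /= np.linalg.norm(u)
    sigma = float(u @ w2d @ v)
    return w / sigma, sigma


rng = np.random.default_rng(42)
conv_w = rng.normal(size=(16, 8, 3, 3))  # hypothetical discriminator conv weight
w_sn, sigma = spectral_normalize(conv_w, n_iters=200)
print(sigma, np.linalg.norm(w_sn.reshape(16, -1), 2))  # second value should be ~1.0
```

In PyTorch, the same idea is available as `torch.nn.utils.spectral_norm`, which keeps a persistent estimate of `u` and runs one power-iteration step per forward pass during training instead of many iterations from scratch.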

To summarise, Real-ESRGAN is trained using only synthetic data. To better replicate complex real-world degradations, it uses a high-order degradation modelling approach, and the synthesis process also models the common ringing and overshoot artifacts. Finally, to improve the discriminator’s capability and stabilize training dynamics, it uses a U-Net discriminator with spectral normalization.
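Real-ESRGAN synthesizes ringing and overshoot artifacts with sinc filters. The sketch below shows where these artifacts come from in one dimension: filtering a sharp step edge with a truncated ideal low-pass (sinc) kernel makes the output overshoot above 1 and undershoot below 0 next to the edge. It is a 1-D illustration with hypothetical helper names and parameters, not the 2-D sinc kernels used in the paper.

```python
import numpy as np


def sinc_kernel(cutoff, radius):
    """1-D windowless sinc low-pass kernel; `cutoff` in cycles/sample, in (0, 0.5]."""
    t = np.arange(-radius, radius + 1)
    k = 2 * cutoff * np.sinc(2 * cutoff * t)  # np.sinc(x) = sin(pi x) / (pi x)
    return k / k.sum()


def filter_1d(signal, kernel):
    """'Same'-length filtering with edge padding (kernel is symmetric)."""
    r = len(kernel) // 2
    padded = np.pad(signal, r, mode="edge")
    return np.convolve(padded, kernel, mode="valid")


step = np.concatenate([np.zeros(64), np.ones(64)])  # an ideal sharp edge
out = filter_1d(step, sinc_kernel(cutoff=0.15, radius=20))
print(out.max() > 1.0, out.min() < 0.0)  # True True: overshoot and undershoot at the edge
```

The negative side lobes of the sinc kernel are what produce the ripples; a Gaussian blur, whose kernel is non-negative, cannot create them, which is why a separate filter is needed to synthesize these artifacts.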

Implementing Real-ESRGAN

In this section, we are going to run Real-ESRGAN on our own image. Its implementation is more straightforward than that of its predecessor, ESRGAN (implementation can be found here), and it takes comparatively few lines of code to generate state-of-the-art (SOTA) results.

Let’s quickly set up the environment by installing and importing the dependencies.

```
# Clone Real-ESRGAN and enter the repository folder
!git clone https://github.com/xinntao/Real-ESRGAN.git
%cd Real-ESRGAN

# Set up the environment
!pip install basicsr
!pip install facexlib
!pip install gfpgan
!pip install -r requirements.txt
!python setup.py develop

# Download the pre-trained model
!wget https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth -P experiments/pretrained_models
```

```python
# Imports (os was previously imported twice)
import glob
import os
import shutil

import cv2
import matplotlib.pyplot as plt
from google.colab import files
```

Now that the heavy lifting is done, only a couple of steps remain. First, we’ll upload the image that is to be restored.

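```python
# Create the folders for the uploaded images and the results
# (exist_ok=True lets the cell be re-run without raising FileExistsError)
upload_folder = 'upload'
result_folder = 'results'
os.makedirs(upload_folder, exist_ok=True)
os.makedirs(result_folder, exist_ok=True)

# Upload images through the Colab file picker and move them into upload_folder
uploaded = files.upload()
for filename in uploaded.keys():
    dst_path = os.path.join(upload_folder, filename)
    print(f'move {filename} to {dst_path}')
    shutil.move(filename, dst_path)
```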

Running the cell above opens an upload prompt from which you can select the image you want to restore.
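`files.upload()` only works inside Google Colab. If you run the notebook elsewhere, a minimal alternative is to copy images from a local folder into the upload folder. The helper `stage_local_images`, the extension list, and the folder name `my_photos` below are hypothetical choices for this sketch.

```python
import glob
import os
import shutil

IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".bmp", ".webp"}  # assumed set of image types


def stage_local_images(src_dir, upload_folder="upload"):
    """Copy image files from src_dir into upload_folder and return the new paths."""
    os.makedirs(upload_folder, exist_ok=True)
    staged = []
    for path in sorted(glob.glob(os.path.join(src_dir, "*"))):
        if os.path.splitext(path)[1].lower() in IMAGE_EXTS:  # skip non-image files
            dst = os.path.join(upload_folder, os.path.basename(path))
            shutil.copy(path, dst)
            staged.append(dst)
    return staged
```

For example, `stage_local_images("my_photos")` would copy every image in `my_photos` into `upload`, after which the inference command can be run unchanged.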

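After uploading the image, we run inference with the command below. It uses the pre-trained RealESRGAN_x4plus model (`-n`) to restore every image in the `upload` folder (`-i`): `--outscale 3.5` sets the final output to 3.5 times the input resolution, `--half` runs inference in half precision, and `--face_enhance` additionally restores faces with GFPGAN. The restored images are written to the `results` folder by default. Newer versions of the repository use half precision by default and replace `--half` with an `--fp32` flag, so if the script rejects `--half`, simply drop it.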

```
!python inference_realesrgan.py -n RealESRGAN_x4plus -i upload --outscale 3.5 --half --face_enhance
```
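Since RealESRGAN_x4plus is a x4 model, `--outscale 3.5` implies two size changes: the network first produces a 4x image, which is then resized to 3.5x the input. The small helper below, `expected_output_size` (a hypothetical name), just does that arithmetic under our assumption about this behaviour; it is handy for anticipating memory use on large inputs and for sanity-checking the files in `results`.

```python
def expected_output_size(width, height, outscale=3.5, net_scale=4):
    """Return ((w, h) after the network, (w, h) of the saved result).

    Assumes the network upscales by `net_scale` and the result is then
    resized to `outscale` times the input size.
    """
    net_size = (width * net_scale, height * net_scale)  # intermediate x4 output
    final_size = (int(width * outscale), int(height * outscale))  # saved image
    return net_size, final_size


print(expected_output_size(640, 480))  # ((2560, 1920), (2240, 1680))
```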

To compare the restored result with the original image, we define two small utility functions based on Matplotlib and OpenCV that display the images side by side.

def display(img1, img2):

fig = plt.figure(figsize=(25, 10))

ax1 = fig.add_subplot(1, 2, 1)

plt.title('Input image', fontsize=16)

ax1.axis('off')

ax2 = fig.add_subplot(1, 2, 2)

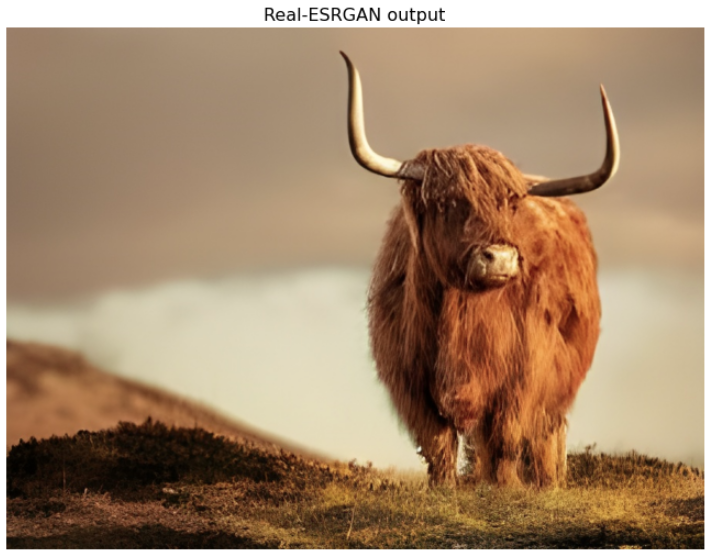

plt.title('Real-ESRGAN output', fontsize=16)

ax2.axis('off')

ax1.imshow(img1)

ax2.imshow(img2)

def imread(img_path):

img = cv2.imread(img_path)

img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

return img

Now let’s retrieve the images from the directory.

input_list = sorted(glob.glob(os.path.join(upload_folder, '*')))

output_list = sorted(glob.glob(os.path.join(result_folder, '*')))

for input_path, output_path in zip(input_list, output_list):

img_input = imread(input_path)

img_output = imread(output_path)

display(img_input, img_output)

Here is the output,
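Zipping two independently sorted lists works when every input produces exactly one output, but it silently misaligns pairs if an image fails or the results folder contains extra files. A slightly more robust sketch matches files by name instead, assuming the script’s default output naming `<name>_out.<ext>` (the `suffix` parameter mirrors its `--suffix` option); the helper name `pair_results` is hypothetical.

```python
import glob
import os


def pair_results(upload_folder="upload", result_folder="results", suffix="out"):
    """Match each input to its '<stem>_<suffix>.*' output; skip inputs without one."""
    outputs = {}
    for path in glob.glob(os.path.join(result_folder, "*")):
        stem = os.path.splitext(os.path.basename(path))[0]
        outputs[stem] = path
    pairs = []
    for in_path in sorted(glob.glob(os.path.join(upload_folder, "*"))):
        stem = os.path.splitext(os.path.basename(in_path))[0]
        out_path = outputs.get(f"{stem}_{suffix}")
        if out_path is not None:
            pairs.append((in_path, out_path))
    return pairs
```

In the notebook, the display loop can then iterate over `pair_results(upload_folder, result_folder)` and call `display(imread(input_path), imread(output_path))` for each pair.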

Final words

In this article, we discussed image restoration. Such methods are needed mainly because images go through many compression and degradation processes as they travel across the web, and recovering meaningful information from them requires dedicated restoration techniques. We also walked through a low-code implementation of Real-ESRGAN, the successor to ESRGAN.