Sall Grover launched Giggle, a women-only networking platform, in 2020. The app allows women to form communities and search for potential business partners, housemates and friends. With categories ranging from gaming to periods to mental health, the platform aims to offer a safe space for women to interact freely. However, Giggle’s sign-up process has recently sparked a storm on Twitter.

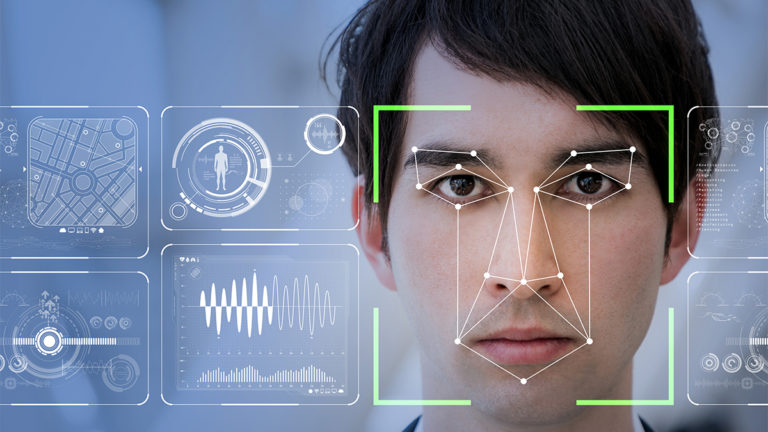

To sign up on Giggle, users have to take a selfie, which the app then analyses with facial recognition software to verify their gender. “Giggle is for girls. Due to the gender-verification software that giggle uses, trans-girls will experience trouble with being verified,” the app’s homepage read.

Although Giggle immediately took down the message, the app still doesn’t specify how trans women can enrol. Grover later said she had wanted to be transparent about the limitations of the gender-verification technology, and claimed to have consulted trans women while working on the app. However, the debate reignited after she shared a Daily Mail article about a trans swimmer with male genitals who, according to the article, had caused discomfort among cisgender women in a women’s locker room.

In-built biases

Facial recognition technology spans several tasks: face detection, verification, identification and classification. It is used to infer a person’s age, gender and emotions, relying on biometric data to produce its results.

Genderify, launched in 2020, used AI to determine a user’s gender by analysing their name, username and email address. The platform debuted on the product showcase site Product Hunt, with built-in tools to categorise users as either male or female. However, Genderify’s results reproduced gender stereotypes. For example, words such as ‘doctor’ and ‘scientist’ skewed towards male, while the word ‘stupid’ had a 61.7% probability of being classified as female.

Genderify’s creator, Arevik Gasparyan, originally conceived the app as a marketing tool that would draw on customer data, analytics and demographic data to extract business insights. However, the backlash on Twitter prompted the founders to take the app offline a week after its launch.

Binary technology

Gender experts and digital rights activists have sounded the alarm on the risks of image recognition systems that determine gender. Automatic Gender Recognition (AGR) technology can detect only two genders, male and female, by assessing facial bone structure. This effectively erases trans and non-binary people and puts people of colour at a disadvantage.
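To see why a two-label design leaves no room for anyone outside the binary, here is a minimal sketch, assuming a logistic-regression-style classifier. The names `LABELS` and `classify`, the weights and the facial measurements are all hypothetical and are not taken from any real AGR product.

```python
import math

# Hypothetical label set: fixed at design time, so every face is forced
# into exactly one of these two outputs.
LABELS = ("male", "female")


def sigmoid(z: float) -> float:
    """Numerically stable logistic function, mapping any score to (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def classify(features: list[float], weights: list[float], bias: float = 0.0) -> tuple[str, float]:
    """Return one of exactly two labels plus the model's confidence.

    `features` stands in for hypothetical measurements of facial geometry.
    """
    z = sum(w * x for w, x in zip(weights, features)) + bias
    p_female = sigmoid(z)
    label = LABELS[1] if p_female >= 0.5 else LABELS[0]
    confidence = max(p_female, 1.0 - p_female)
    return label, confidence


# Even a maximally uncertain score (exactly 0.5) still yields a binary label.
label, confidence = classify([0.0, 0.0], weights=[1.2, -0.7])
```

Even when the input sits exactly on the decision boundary, the function still returns one of the two labels. There is no ‘unknown’ or ‘non-binary’ output unless the designers deliberately add one.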

There are two major underlying issues at play here. First, a person’s gender is inferred solely from their physical characteristics; second, AGR treats gender and sex as the same thing. Humans generate, collect and label the datasets, and also choose the algorithm that analyses the data. As a result, the datasets are likely to carry inherent biases, and the biases of the people building the model may also creep into the algorithm.
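The sketch below illustrates how labelling bias carries straight through to a model’s output. It assumes a naive frequency-based model and a tiny set of annotations invented for illustration, not real data from Genderify or any other product. The helper name `learn_probabilities` is hypothetical.

```python
from collections import Counter, defaultdict

# Invented, deliberately skewed annotations: (word, label chosen by a human annotator).
training_data = [
    ("doctor", "male"), ("doctor", "male"), ("doctor", "male"), ("doctor", "female"),
    ("nurse", "female"), ("nurse", "female"), ("nurse", "female"), ("nurse", "male"),
]


def learn_probabilities(examples):
    """Estimate P(label | word) by counting how often annotators chose each label."""
    counts = defaultdict(Counter)
    for word, label in examples:
        counts[word][label] += 1
    return {
        word: {label: n / sum(c.values()) for label, n in c.items()}
        for word, c in counts.items()
    }


model = learn_probabilities(training_data)
print(model["doctor"])  # {'male': 0.75, 'female': 0.25}
```

The model contains no notion of gender beyond the annotators’ choices, so the skew in the labels becomes the skew in the predictions.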

According to a paper published by the Stanford Social Innovation Review in 2021, women make up only 22% of professionals in data science and AI. In addition, men in low- and middle-income households are 20% more likely than women to own phones, and around 300 million fewer women than men use the internet on their phones.

Complex solutions

But how do we approach the issue of gender discrimination in AI? Grover, herself a woman, set out to create an inclusive platform for women and ended up with a trans-exclusionary app. Deploying this technology more widely could have serious consequences: imagine a surveillance system designed to alert security whenever a person of the ‘wrong gender’ entered a bathroom.

Os Keyes, who is openly transgender, studies the intersection of gender and technology at the University of Washington. “Frankly, all the trans people I know, myself included, have enough problems with people misgendering us, without robots getting in on the fun,” Keyes said.

Tech giants like Amazon and Microsoft, both of which offer facial-analysis services, have stayed quiet on how to solve these problems. In its Rekognition developer guide, Amazon notes that the gender predictions made by its facial-analysis software should not be used to determine a person’s gender identity. Clearly, the technology is not a silver bullet.

Gender data can be useful, but only if handled with care. Stakeholders should therefore work together to weigh the ethical, social and moral concerns around gender data before deploying such AI models.