Entanglement, as Albert Einstein referred to as ‘spooky action at a distance’, refers to a phenomenon by which a particle can ‘know’ something about another particle even if a huge distance separates them. In quantum entanglement, these two particles are said to be so intertwined that one can infer not only the property of the partner particle but also influence it. When studied by Einstein, he found the phenomenon so baffling it was originally taken as evidence that quantum mechanic models were incomplete.

Register for Data Engineering Summit 2022 >>

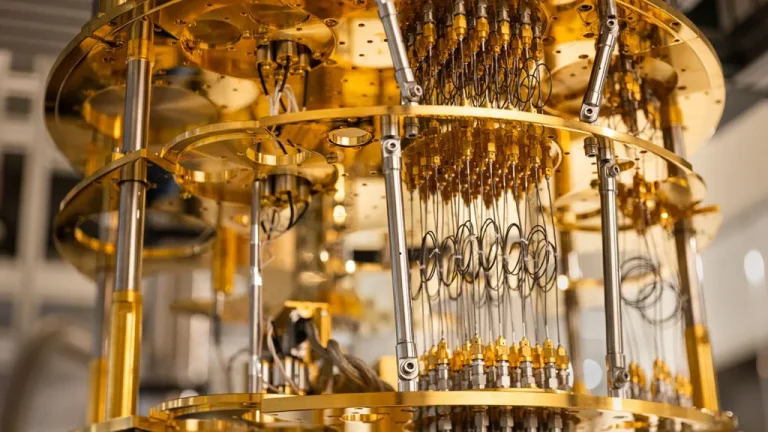

Quantum computers have components called the qubits, which are linked through entanglement, helping grow their computational power exponentially. This is particularly useful in modern encryption that is used in banking, and other sectors where securing data is of fundamental importance. Recent studies have shown that quantum computing might also help in boosting machine learning. Another application is simulating quantum systems.

In machine learning, the no-free lunch theorem suggests that all optimisation algorithms perform equally well when their performance is averaged over many problems and training data sets. With the rise of quantum machine learning, it becomes imperative to ask whether there is a quantum analogue of this theorem that would restrict a quantum computer’s capability to learn a unitary process with quantum training data.

This was recently studied by a group of researchers who documented their learnings in a paper titled “Reformulation of the No-Free-Lunch Theorem for Entangled Datasets”. In their paper, the authors showed that entangled datasets violate the classical no free lunches theorem.

No-free lunches in quantum learning

The no free lunch theorem entails that a machine learning algorithm’s average performance is dependent on the amount of data it has.

“Industry-built quantum computers of modest size are now publicly accessible over the cloud. This raises the intriguing possibility of quantum-assisted machine learning, a paradigm that researchers suspect could be more powerful than traditional machine learning. Various architectures for quantum neural networks (QNNs) have been proposed and implemented. Some important results for quantum learning theory have already been obtained, particularly regarding the trainability and expressibility of QNNs for variational quantum algorithms. However, the scalability of QNNs (to scales that are classically inaccessible) remains an interesting open question,” the authors write.

This also suggests a possibility that in order to model a quantum system, the amount of training data might also need to grow exponentially. This threatens to eliminate the edge quantum computing has over edge computing.

The authors have discovered a method to eliminate the potential overhead via a newfound quantum version of the no free lunch theorem. Their study showed that adding more entanglement to quantum computing would lead to exponential scale-up, which was verified using Rigetti’s Aspen-4 quantum computer. The researchers suggested an extra set of ‘ancilla’ qubits with the quantum system that can help the quantum machine learning circuit interact with many quantum states in the training data simultaneously. Study co-author Andrew Sornborger said, “Trading entanglement for training states could give huge advantages for training certain types of quantum systems.”

The authors believe that one of the applications of this work is in black box uploading, where we learn a model of quantum experiment and then study it on a quantum computer without requiring to do repeated experiments.

Challenges with the study

The major issue is the complexity of obtaining the entangled training data; this usually depends on the mode of access to the data. When the user has physical access to the target unitary, it is advantageous to input a state with the reference system so that the user can generate training data with entanglement.

As compared to input with no entanglement, this procedure decreases the average risk more efficiently. Secondly, while the study assumes perfect training, the writers caution that it was possible that exponential scaling may have training difficulty. In the past, several strategies have been proposed to avoid barren plateau (gradients that vanish exponentially in the number of qubits) in quantum neural networks; however, this remains an active area of research.

Read the full paper here.