Convolutional Neural Networks (CNNs) are at the heart of many machine vision applications. From tagging photos online to self-driving cars, CNNs have proven to be of great help.

As the number of applications involving CNNs increases, so does the need to improve them.

To balance the trade-off between accuracy and efficiency, researchers at Google have introduced a more principled approach to scaling CNNs and making them more accurate.

In the paper titled “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks”, the authors propose a family of models, called EfficientNets, which they report surpass state-of-the-art accuracy with up to 10x better efficiency (smaller and faster).

Compound Scaling For 10x Better CNNs

CNNs are commonly developed at a fixed resource cost, and then scaled up in order to achieve better accuracy when more resources are made available.

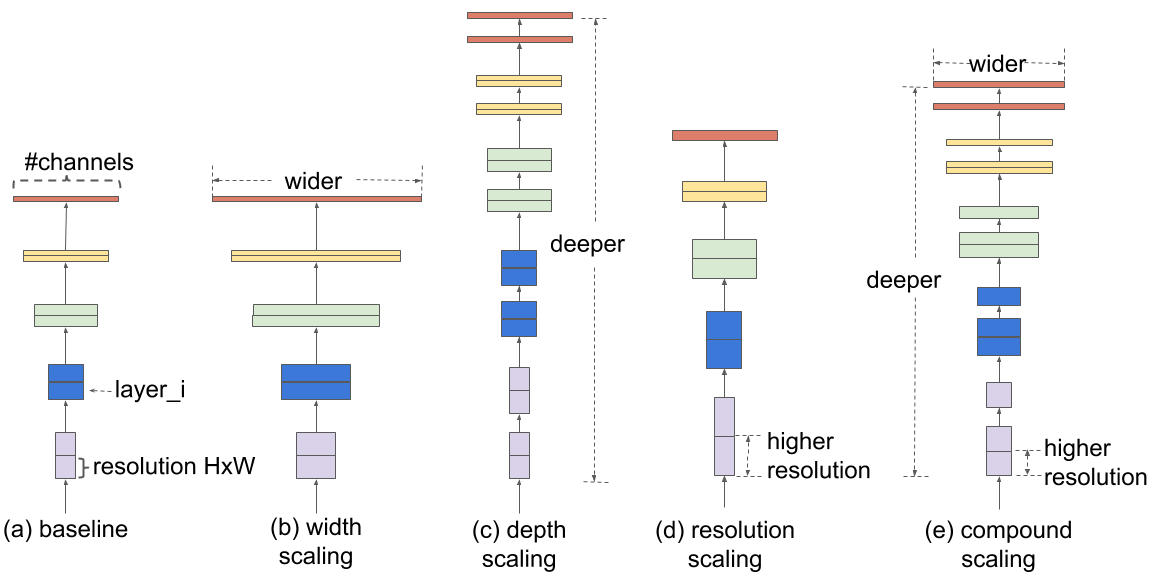

Typically, models are scaled by arbitrarily increasing the CNN depth or width, or by using a larger input image resolution for training and evaluation. While these methods do improve accuracy, they usually lead to suboptimal performance.

Conventionally, the dimensions of a neural net are manipulated to make it more accurate. For instance, deeper ConvNets can capture richer and more complex features but are also more difficult to train due to the vanishing gradient problem.

Wider networks, on the other hand, tend to capture more fine-grained features and are easier to train, but networks that are wide yet shallow tend to have difficulty capturing higher-level features.

In this work, the authors propose a compound scaling method that gives more control over model performance by placing constraints on the scaling coefficients. In other words, it determines when to increase or decrease the depth, width and resolution of a given network.
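For reference, the constraint can be written out the way the EfficientNet paper formulates it. The symbols are not used elsewhere in this article and are taken from the paper: φ is a user-chosen compound coefficient, and α, β and γ are constants for depth, width and resolution respectively.

```latex
\begin{aligned}
\text{depth:} \quad & d = \alpha^{\phi} \\
\text{width:} \quad & w = \beta^{\phi} \\
\text{resolution:} \quad & r = \gamma^{\phi} \\
\text{subject to} \quad & \alpha \cdot \beta^{2} \cdot \gamma^{2} \approx 2,
  \qquad \alpha \ge 1,\ \beta \ge 1,\ \gamma \ge 1
\end{aligned}
```

The FLOPS of a regular convolution grow roughly linearly with depth and quadratically with width and resolution, so under this constraint the total FLOPS increase by about 2^φ.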

The first step in the compound scaling method is to perform a grid search to find the relationship between the different scaling dimensions of the baseline network under a fixed resource constraint (e.g., 2x more FLOPS). This determines the appropriate scaling coefficient for each of the dimensions mentioned above. Those coefficients are then applied to scale up the baseline network to the desired target model size or computational budget.
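The two steps can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are made up for this article, the grid bounds are arbitrary, and the real grid search trains a model for every candidate and keeps the most accurate one, which is left out here. The default coefficients (1.2, 1.1, 1.15) are the ones the paper reports for its baseline network.

```python
from itertools import product


def candidate_coefficients(step=0.05, upper=1.4, target=2.0, tol=0.1):
    """List (alpha, beta, gamma) triples with alpha * beta^2 * gamma^2 near target.

    Hypothetical helper: it only enumerates triples that satisfy the
    FLOPS constraint. Choosing among them requires training each one.
    """
    n = int(round((upper - 1.0) / step)) + 1
    grid = [round(1.0 + i * step, 2) for i in range(n)]
    return [
        (a, b, g)
        for a, b, g in product(grid, repeat=3)
        if abs(a * b**2 * g**2 - target) <= tol
    ]


def compound_scale(phi, alpha=1.2, beta=1.1, gamma=1.15):
    """Return depth, width and resolution multipliers plus the FLOPS ratio."""
    depth = alpha ** phi
    width = beta ** phi
    resolution = gamma ** phi
    # FLOPS scale linearly with depth, quadratically with width and resolution.
    flops_ratio = depth * width**2 * resolution**2
    return depth, width, resolution, flops_ratio


if __name__ == "__main__":
    print(len(candidate_coefficients()), "candidate triples satisfy the constraint")
    for phi in range(1, 4):
        d, w, r, f = compound_scale(phi)
        print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
              f"resolution x{r:.2f}, FLOPS x{f:.2f}")
```

Raising φ by one roughly doubles the FLOPS budget while keeping the three dimensions in the same proportion to one another.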

The compound scaling method makes sense because if the input image is bigger, then the network needs more layers to increase the receptive field and more channels to capture more fine-grained patterns on the bigger image.
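A quick back-of-the-envelope calculation illustrates the receptive field argument. The sketch below assumes a plain stack of stride-1 convolutions with no pooling or dilation, which is a simplification (real CNNs downsample along the way), and the function names are made up for this example.

```python
def receptive_field(num_layers, kernel_size=3):
    """Receptive field in pixels of a stack of stride-1 convolutions."""
    return 1 + num_layers * (kernel_size - 1)


def coverage(num_layers, image_size, kernel_size=3):
    """Fraction of the image side seen by one output unit, capped at 1.0."""
    return min(1.0, receptive_field(num_layers, kernel_size) / image_size)


if __name__ == "__main__":
    for size in (224, 448):
        print(size, receptive_field(50), round(coverage(50, size), 3))
```

With the same number of layers, one output unit sees a smaller fraction of the larger image, so more layers are needed to cover the same share of the picture.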

Future Direction

A deep neural network changes constantly as it is trained. The distribution of each layer’s inputs changes along with the parameters of the previous layers. This shift slows down learning, and the model gets harder to train as it incorporates nonlinearities.

Since 2012, the capabilities of computer vision systems have improved greatly due to (a) deeper models with high complexity, (b) increased computational power and (c) availability of large-scale labeled data.

The memory required to update the weights during backpropagation can be reduced with GPipe, which automatically recomputes the forward activations during backpropagation instead of storing them all. This enables users to employ more accelerators for training larger models and to scale performance without tuning hyperparameters.
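The idea of recomputing activations can be shown with a toy example. This is not GPipe's API or implementation: it is a scalar chain of tanh layers written for this article, with hypothetical function names, and it tracks the gradient with respect to the input only to keep the code short. The point is that both routes give the same gradient while the second one holds fewer activations at a time.

```python
import math


def forward_layer(w, x):
    return math.tanh(w * x)


def backward_layer(w, x, grad_out):
    # d/dx tanh(w * x) = w * (1 - tanh(w * x)^2)
    y = math.tanh(w * x)
    return grad_out * w * (1.0 - y * y)


def grad_store_all(weights, x):
    """Baseline: keep every layer input, then backpropagate."""
    inputs = []
    for w in weights:
        inputs.append(x)
        x = forward_layer(w, x)
    grad = 1.0
    for w, inp in zip(reversed(weights), reversed(inputs)):
        grad = backward_layer(w, inp, grad)
    return grad, len(inputs)


def grad_recompute(weights, x, segment=4):
    """Keep only segment-boundary inputs and recompute the rest on demand."""
    boundaries = []
    for start in range(0, len(weights), segment):
        boundaries.append(x)
        for w in weights[start:start + segment]:
            x = forward_layer(w, x)
    grad = 1.0
    peak = len(boundaries)
    for idx in reversed(range(len(boundaries))):
        seg = weights[idx * segment:(idx + 1) * segment]
        # Re-run the forward pass for this segment only.
        inputs, h = [], boundaries[idx]
        for w in seg:
            inputs.append(h)
            h = forward_layer(w, h)
        # Upper bound on values held at once: boundaries plus this segment.
        peak = max(peak, len(boundaries) + len(inputs))
        for w, inp in zip(reversed(seg), reversed(inputs)):
            grad = backward_layer(w, inp, grad)
    return grad, peak


if __name__ == "__main__":
    ws = [0.9 + 0.01 * i for i in range(16)]
    print(grad_store_all(ws, 0.5))
    print(grad_recompute(ws, 0.5))
```

The price is one extra forward pass per segment, which is the usual trade of compute for memory.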

MorphNet, on the other hand, learns the number of neurons per layer with every pass. When a layer ends up with zero neurons, that part of the network is cut off. This changes the topology of the network, as the affected residual blocks are removed.
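The shrinking step can be pictured with a small sketch. It is not MorphNet's actual code: the per-neuron scores, the threshold and the layer names below are all made up for illustration, whereas in MorphNet the sparsity is driven by a regularizer applied during training.

```python
def shrink(layer_scores, threshold=1e-2):
    """Count surviving neurons per layer and drop layers left with none.

    layer_scores maps a (hypothetical) layer name to per-neuron
    importance scores; a neuron survives if its score exceeds threshold.
    """
    kept = {}
    for name, scores in layer_scores.items():
        alive = sum(1 for s in scores if abs(s) > threshold)
        if alive > 0:
            kept[name] = alive
    return kept


if __name__ == "__main__":
    scores = {
        "block1/conv": [0.8, 0.0, 0.3, 0.001],
        "block2/conv": [0.0, 0.004, 0.0, 0.0],  # every neuron falls below threshold
        "block3/conv": [0.5, 0.6, 0.0, 0.2],
    }
    print(shrink(scores))  # {'block1/conv': 2, 'block3/conv': 3}
```

Here the second block vanishes from the result entirely, which corresponds to cutting that branch out of the network.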

The training can be carried out in a single run, and MorphNet can be scaled for application to larger networks.

This technique also equips the network with portability, i.e., there is no need to keep track of the checkpoints that arise during training. This iterative approach of expanding and shrinking the neural network gives better control over the use of computational power and time.

There is still room to refine neural networks, as they sometimes fumble and end up using brute force for lightweight tasks.

Google has pioneered research into making neural networks faster and lighter. Its previous works, such as GPipe, 3LC and MorphNet, demonstrate this.

Read more about EfficientNet here