The year 2018 has been eventful for artificial intelligence. With advances such as GANs, machine learning has ushered in a new era of applied AI. Earlier this year, researchers enabled AI to see through walls: a system pairing a camera with a radio sensor detected motion on the hidden side of a room and rendered it as stick-figure-like imagery.

While the world scrambles to keep pace with these innovations, researchers at MIT have published a new study demonstrating a deep learning technique, here called deep dark learning, that identifies objects in pitch-black conditions. Using it, the researchers successfully reconstructed transparent objects from dark images of those objects.

Deep Dark Technique

Deep neural networks (DNNs) were trained on dark, grainy images of transparent objects so that they learned to associate each dark input image with a specific output.

The training set consisted of 10,000 transparent, glass-like etchings. The grainy images were captured in low-light settings (one photon per pixel), which is less light than a camera would register in a sealed room.
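To give a feel for what one photon per pixel means, the following is a minimal sketch that simulates photon-counting (Poisson) noise on a bar pattern. It is not the researchers' pipeline: the function name `simulate_low_photon_image`, the 64x64 test pattern and the choice of a pure Poisson noise model are all assumptions made for illustration.

```python
import numpy as np

def simulate_low_photon_image(intensity, mean_photons_per_pixel=1.0, seed=0):
    """Return an integer photon-count image with Poisson shot noise.

    `intensity` is a non-negative 2-D array. It is rescaled so that the
    average expected count per pixel equals `mean_photons_per_pixel`.
    (Hypothetical helper; a pure Poisson model is an assumption.)
    """
    rng = np.random.default_rng(seed)
    intensity = np.asarray(intensity, dtype=float)
    expected = intensity * (mean_photons_per_pixel / intensity.mean())
    return rng.poisson(expected)

# Hypothetical stand-in for the light reaching the sensor:
# bright horizontal and vertical bars on a dim background.
pattern = np.full((64, 64), 0.2)
pattern[::8, :] = 1.0
pattern[:, ::8] = 1.0

counts = simulate_low_photon_image(pattern, mean_photons_per_pixel=1.0)
print("mean photons per pixel:", counts.mean())
print("fraction of pixels with zero photons:", (counts == 0).mean())
```

At this light level many pixels record no photons at all, which is why the captured images look so grainy.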

To build this low-light training set, the researchers drew on a database of 10,000 integrated circuits, each etched with a pattern of horizontal and vertical bars.

The setup consisted of an aluminium frame, shielded from light, holding a phase spatial light modulator (SLM). A camera was pointed at the frame, and the modulator was used to cycle through the transparent patterns while the camera captured each one.

One image was captured for each patterned circuit, yielding a collection of 10,000 grainy black-and-white images.

Working of an SLM. Courtesy of Bernard Lamprecht

Spatial light modulators are used in overhead projectors to spatially modulate a light beam for transparency control; such projectors are common in business presentations and in-house movie screenings. SLMs could also change the way we store images, as they have been found useful for holographic data storage, where two laser beams intersect to store patterns throughout the volume of a photosensitive medium, unlike magnetic data storage, which is usually a surface phenomenon.

Transparent objects are hard to see when the camera is in focus, so the researchers deliberately defocused the camera by a small margin to detect the presence of a transparent object in such dim imagery. If there are ripples in the captured image, they can be used to verify that a transparent object is present.

The model was tested under both focused and defocused settings to make it more robust. The results show that offsetting the focus, and accounting for light propagation in the reconstruction, produced cleaner images.
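The effect of defocus on a transparent object can be illustrated with a short numerical sketch. A pure phase object changes only the phase of the light, so its in-focus intensity is flat; propagating the field a short distance turns the phase edges into intensity ripples. The code below uses the standard angular spectrum method. The wavelength, pixel size, defocus distance and the square phase step are assumed values for illustration, not parameters from the study.

```python
import numpy as np

def propagate(field, wavelength, pixel_size, distance):
    """Propagate a complex field by `distance` (angular spectrum method)."""
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=pixel_size)
    fy = np.fft.fftfreq(ny, d=pixel_size)
    fxx, fyy = np.meshgrid(fx, fy)
    # Squared longitudinal spatial frequency; non-positive values are evanescent.
    kz_sq = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2 * np.pi * np.sqrt(np.maximum(kz_sq, 0.0))
    transfer = np.where(kz_sq > 0, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

# Hypothetical transparent object: a square that delays the light by pi/2.
phase = np.zeros((64, 64))
phase[24:40, 24:40] = np.pi / 2
field = np.exp(1j * phase)  # unit amplitude everywhere, so invisible in focus

# Assumed optics: 633 nm light, 10 micron pixels, 1 mm of defocus.
in_focus = np.abs(field) ** 2
defocused = np.abs(propagate(field, 633e-9, 10e-6, 1e-3)) ** 2

print("in-focus intensity variation: ", in_focus.std())
print("defocused intensity variation:", defocused.std())
```

The in-focus image carries no contrast at all, while the defocused one does, which is the information a reconstruction method can work from.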

The experiment was repeated for a variety of objects, including people, places and animals. After each round of training, the network consistently produced an output that resembled the original object.

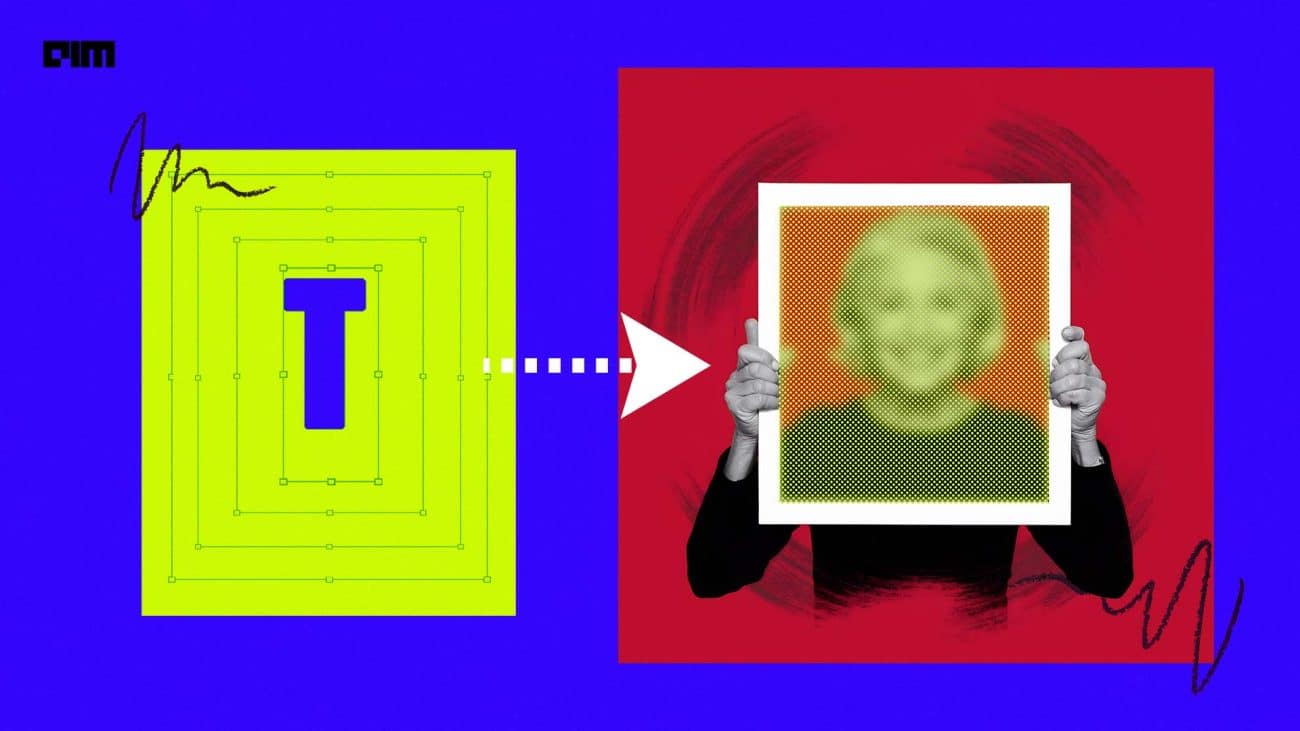

Courtesy of the researchers

In the figure above, starting from an original transparent etching (far right), engineers took a photograph in the dark (top left) and then attempted to reconstruct the object, first with a physics-based algorithm (top right), then with a trained neural network (bottom left), and finally by combining the neural network with the physics-based algorithm to produce the clearest, most accurate reproduction (bottom right) of the original object.

Direct Results

- Demonstrate that deep neural networks can recover objects under weak light and perform better than the classical Gerchberg-Saxton phase retrieval algorithm at an equivalent signal-to-noise ratio.

- Show that the phase reconstruction improves when the neural network is trained with an initial estimate of the object, as opposed to the raw intensity measurement.
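For readers unfamiliar with the Gerchberg-Saxton baseline mentioned above, here is a minimal sketch of its textbook two-plane form. It alternates between the object plane and the Fourier plane, enforcing the known amplitude in each and keeping only the phase. Using a plain Fourier transform as the propagator, noise-free data and a bar-shaped test phase are assumptions for illustration; the exact variant and setup used in the study are not described here.

```python
import numpy as np

def gerchberg_saxton(source_amp, target_amp, n_iter=200, seed=0):
    """Estimate the object-plane phase from two amplitude constraints.

    source_amp: amplitude in the object plane (all ones for a phase object).
    target_amp: amplitude in the Fourier plane (sqrt of measured intensity).
    Returns the phase estimate and the Fourier-plane residual per iteration.
    """
    rng = np.random.default_rng(seed)
    phase = rng.uniform(-np.pi, np.pi, size=source_amp.shape)
    errors = []
    for _ in range(n_iter):
        # Object plane -> Fourier plane with the current phase estimate.
        far = np.fft.fft2(source_amp * np.exp(1j * phase), norm="ortho")
        errors.append(np.linalg.norm(np.abs(far) - target_amp))
        # Enforce the measured Fourier amplitude, keep the computed phase.
        far = target_amp * np.exp(1j * np.angle(far))
        # Back to the object plane; keep only the phase for the next pass.
        phase = np.angle(np.fft.ifft2(far, norm="ortho"))
    return phase, errors

# Hypothetical phase object with horizontal and vertical bars.
true_phase = np.zeros((32, 32))
true_phase[::8, :] = np.pi / 2
true_phase[:, ::8] = np.pi / 2

source_amp = np.ones_like(true_phase)
target_amp = np.abs(np.fft.fft2(np.exp(1j * true_phase), norm="ortho"))

est_phase, errors = gerchberg_saxton(source_amp, target_amp)
print("residual, first vs last iteration:", errors[0], errors[-1])
```

The residual does not increase from one iteration to the next, but the algorithm can stall in a poor estimate, and amplitude data alone leaves ambiguities such as a constant phase offset. With noisy, low-photon measurements the amplitude constraint itself becomes unreliable, which is the regime in which the researchers report that the neural network does better.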

Usage

Low-light imaging is of great significance in non-surgical diagnosis. Usually, the patient is exposed to ionizing radiation, be it plain X-rays or CT scans. Any procedure that involves high-frequency radiation puts the patient at considerable risk.

“In the lab, if you blast biological cells with light, you burn them, and there is nothing left to image,” said George Barbastathis, professor of mechanical engineering at MIT, who conducted the study, titled Low Photon Count Phase Retrieval Using Deep Learning, along with Alexandre Goy, Kwabena Arthur and Shuai Li.

With the deep dark learning technique, the researchers claim it is possible to avoid this harmful radiation exposure and also to detect issues in intricate, deeper sections of tissue that were previously deemed invisible at low exposure.