Bayesian inference is a method of statistical inference in which Bayes’ theorem is used to update the probability of a hypothesis as more evidence or information becomes available. Bayesian statistics has found important applications in sociology, artificial intelligence and many other fields. Arguments for and against Bayesian statistics have gone on for centuries, which has made it a controversial subject. Still, Bayes’ theorem, and Bayesianism in general, has given us some remarkable results.

On 4 July 2012, CERN (the European Organization for Nuclear Research) surprised the general public and the scientific community by announcing the discovery of the Higgs boson, a particle predicted by the Standard Model of particle physics. The researchers at CERN relied on a line of statistical reasoning that doesn’t quite agree with Bayesianism: frequentism. Assuming that the Higgs boson does not exist, the experimental results deviated from the expected value by more than five standard deviations. Under that assumption, a deviation at least this large would occur by chance only about once in 3.5 million experiments. Hence the researchers concluded that the Higgs boson had been discovered. After the announcement, a lively debate broke out between statisticians in the two camps, and livid letters heavily attacking the reasoning were fired off to CERN.
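To see where figures like this come from, here is a minimal Python sketch that converts a deviation measured in standard deviations ("sigma") into a tail probability of the standard normal distribution, using only `math.erfc` from the standard library. It assumes the test statistic is normally distributed under the no-Higgs hypothesis, which is a simplification of CERN's actual analysis.

```python
import math


def one_sided_p(z: float) -> float:
    """Upper-tail probability P(Z >= z) for a standard normal Z."""
    return 0.5 * math.erfc(z / math.sqrt(2))


def two_sided_p(z: float) -> float:
    """Two-tailed probability P(|Z| >= z) for a standard normal Z."""
    return math.erfc(z / math.sqrt(2))


for sigma in (3, 5):
    p1, p2 = one_sided_p(sigma), two_sided_p(sigma)
    print(f"{sigma} sigma: one-sided p = {p1:.2e} (about 1 in {1 / p1:,.0f}), "
          f"two-sided p = {p2:.2e} (about 1 in {1 / p2:,.0f})")
```

For five sigma, the one-sided tail (the convention in particle physics) is about 2.9 × 10⁻⁷, roughly 1 in 3.5 million; counting both tails doubles it to roughly 1 in 1.7 million.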

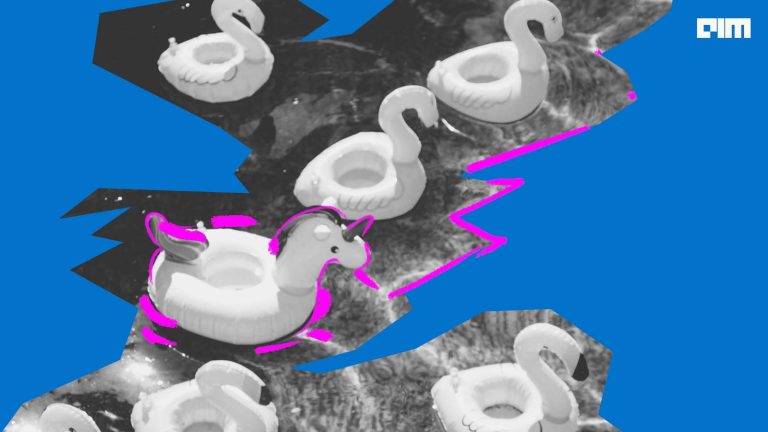

These two schools of thought have come close to colliding. Whichever one prevails, the clash is certain to shape the direction in which AI evolves.

Learning and Innate Knowledge In Humans

Drawing a parallel between how humans learn and how computers learn is difficult. Still, it is widely believed that our brains use some form of Bayesian modelling to learn from the environment. Such a model has the advantage of combining the innate knowledge we inherit with the sensory data the brain receives.

To understand this, we need to dig into how we think about statistics in particular. Statistics matters because it provides the tools to analyse, interpret and learn from data. Richard Royall, a prominent statistician, distinguishes three main questions in statistical analysis:

- What should we believe?

- What should we do?

- When does data count as evidence for a hypothesis?

These questions are closely related but distinct. Bayesians focus mainly on the first question, about rational belief, since they treat events and occurrences as matters of uncertainty. From there, they answer the second and third questions within the framework of the probability calculus. Bayesians also hold that some knowledge is ingrained within us before we see any data. To be combined with data, that knowledge must first be cast in a formal language, and for Bayesians that language is probability.
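As a minimal sketch of what "casting knowledge in the language of probability" can look like, the Python below encodes a prior belief about a coin's probability of heads as a probability distribution over a grid of candidate values, then combines it with data through Bayes' theorem. The coin, the triangular prior peaking at 0.5, and the data (7 heads in 10 flips) are all hypothetical choices for illustration.

```python
from math import comb

# Hypotheses: candidate values of a coin's probability of heads (hypothetical example).
grid = [i / 100 for i in range(101)]

# Prior knowledge cast as probabilities. Illustrative assumption: we believe the coin
# is probably close to fair, so weights rise linearly to a peak at 0.5.
weights = [min(t, 1 - t) for t in grid]
total_weight = sum(weights)
prior = [w / total_weight for w in weights]


def likelihood(theta: float, heads: int, flips: int) -> float:
    """Binomial probability of `heads` in `flips` if P(heads) = theta."""
    return comb(flips, heads) * theta**heads * (1 - theta) ** (flips - heads)


def update(prior: list[float], heads: int, flips: int) -> list[float]:
    """Bayes' theorem on a grid: posterior is proportional to prior x likelihood."""
    unnormalized = [p * likelihood(t, heads, flips) for p, t in zip(prior, grid)]
    evidence = sum(unnormalized)  # P(data), the normalizing constant
    return [u / evidence for u in unnormalized]


def mean(dist: list[float]) -> float:
    """Expected value of the coin's bias under a distribution over the grid."""
    return sum(t * p for t, p in zip(grid, dist))


posterior = update(prior, heads=7, flips=10)  # hypothetical data: 7 heads in 10 flips
print(f"prior mean {mean(prior):.3f} -> posterior mean {mean(posterior):.3f}")
```

The posterior mean lands between the prior's 0.5 and the data's 0.7: the prior knowledge and the evidence are weighed against each other rather than the prior being ignored.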

On the other side is frequentism, a school that interprets probability through counts and the long-run frequencies of events. Within frequentism itself, researchers are divided between the second and third questions. Jerzy Neyman and Egon Pearson built their framework on reliable decision procedures, and so focused on the second question. A movement led by Ronald Fisher, by contrast, vehemently stressed the importance of (post-experimental) evidential assessment, which addresses the third.
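A minimal Python sketch of the difference between the two frequentist camps, assuming a hypothetical experiment of 20 coin flips with 15 heads and the null hypothesis H0 that the coin is fair. Fisher's approach reports the p-value as a graded measure of evidence; the Neyman-Pearson approach fixes a significance level alpha (here an assumed 0.05) before the experiment and turns it into a decision rule with a known long-run error rate.

```python
from math import comb


def upper_tail(k: int, n: int, p: float = 0.5) -> float:
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k, n + 1))


n, heads = 20, 15  # hypothetical data: 15 heads in 20 flips; H0: the coin is fair

# Fisher-style: report the p-value itself as evidence against H0.
p_value = upper_tail(heads, n)
print(f"Fisher: one-sided p-value = {p_value:.4f}")

# Neyman-Pearson-style: fix alpha before seeing data, derive the smallest rejection
# threshold whose Type I error rate does not exceed alpha, then apply the rule.
alpha = 0.05
critical = next(k for k in range(n + 1) if upper_tail(k, n) <= alpha)
decision = "reject H0" if heads >= critical else "do not reject H0"
print(f"Neyman-Pearson: reject when heads >= {critical} "
      f"(Type I error rate {upper_tail(critical, n):.4f}); decision: {decision}")
```

Both camps use the same binomial tail probabilities; they differ in what they do with them. Fisher reads the p-value (about 0.021 here) as a strength of evidence, while Neyman and Pearson only ask whether the data fall in a rejection region chosen in advance.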

Arguments For Bayesianism:

The basic arguments for Bayesianism are:

- It is not a clever strategy to ignore what we know

- It is obvious and natural to cast what we know in the language of probabilities

- If our subjective probabilities are erroneous, their impact will be washed out in due time, as the number of observations increases
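The "washing out" argument can be sketched with the conjugate Beta-Binomial model. In this minimal Python example, two hypothetical researchers start from very different Beta priors on a success rate (an optimist with Beta(20, 2) and a skeptic with Beta(2, 20)) and see the same data, assumed to show a 30% success rate at every sample size.

```python
# Two hypothetical researchers with very different Beta priors on a success rate.
priors = {"optimist": (20, 2), "skeptic": (2, 20)}


def posterior_mean(a: float, b: float, successes: int, trials: int) -> float:
    """Beta(a, b) prior + binomial data -> Beta(a + s, b + f) posterior; return its mean."""
    return (a + successes) / (a + b + trials)


for trials in (10, 100, 1_000, 10_000):
    successes = round(0.3 * trials)  # assume the data show a 30% success rate
    means = {name: posterior_mean(a, b, successes, trials)
             for name, (a, b) in priors.items()}
    gap = means["optimist"] - means["skeptic"]
    print(f"n={trials:>6}: optimist {means['optimist']:.3f}, "
          f"skeptic {means['skeptic']:.3f}, gap {gap:.4f}")
```

Because both priors carry the same total weight (a + b = 22), the gap between the two posterior means is exactly 18 / (22 + n): over 0.5 after 10 observations, under 0.002 after 10,000.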

In the Bayesian world, the probability of a hypothesis is needed to make a decision. When a doctor tells us that a screening test came back positive, a Bayesian wants to know the probability of the hypothesis “the patient has the disease”, given the positive result. The arguments above suggest that Bayes’ theorem brings the rigour of deductive logic to inference, because the calculation includes a prior that encodes our present-day knowledge. By trying different priors, Bayesians can show how sensitive the results are to the choice of prior. Results framed as probabilities of hypotheses are also easy to communicate. Finally, Bayesians argue that even if the prior is subjective, one can state the assumptions explicitly and test alternative priors.
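A minimal Python sketch of the screening-test calculation, combined with the prior-sensitivity check described above. The test's sensitivity (0.90) and specificity (0.95) and the range of prevalences used as priors are hypothetical numbers chosen only for illustration.

```python
def p_disease_given_positive(prior: float, sensitivity: float, specificity: float) -> float:
    """Bayes' theorem: P(disease | positive test)."""
    true_pos = sensitivity * prior               # P(positive and disease)
    false_pos = (1 - specificity) * (1 - prior)  # P(positive and no disease)
    return true_pos / (true_pos + false_pos)


# Hypothetical test characteristics, for illustration only.
SENSITIVITY = 0.90  # P(positive | disease)
SPECIFICITY = 0.95  # P(negative | no disease)

# Prior sensitivity analysis: how much does the answer depend on the assumed prevalence?
for prior in (0.001, 0.01, 0.1, 0.5):
    post = p_disease_given_positive(prior, SENSITIVITY, SPECIFICITY)
    print(f"prior {prior:>5}: P(disease | positive) = {post:.3f}")
```

With a 1% prior, a positive result raises the probability of disease only to about 15%, because false positives from the large healthy population outnumber the true positives. Varying the prior makes that dependence explicit instead of hiding it.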

Arguments Against Bayesianism:

The main arguments against Bayesian inference centre on the prior, which critics say is subjective. Different researchers would select different prior distributions based on their biases and speculation. There is no single method in Bayesian analysis that tells researchers how to calculate priors. Because researchers choose priors somewhat arbitrarily, they can arrive at different posteriors and conclusions.

There are also philosophical objections to assigning probabilities to hypotheses: a hypothesis is not the outcome of a repeatable experiment, so its long-term frequency cannot be measured. For a frequentist, a degree of belief in a hypothesis is not something one can reason about; a hypothesis is either true or false. Critics also note that, compared with classical inference, Bayesian approaches move to computation very quickly, while other paradigms focus more on extracting information from data. Anti-Bayesians worry that a whole generation of statisticians will grow up ignorant of experimental design and analysis of variance.
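The frequentist view of probability as a long-run frequency can be illustrated with confidence intervals. In this minimal Python sketch, under assumed settings (normal data with known standard deviation 2, true mean 10, samples of 25), each repeated experiment builds a 95% interval for the mean. Any single interval either contains the true mean or it does not; the 95% describes how often the procedure succeeds over many repetitions.

```python
import random
import statistics


def interval_covers(rng: random.Random, mu: float, sigma: float, n: int,
                    z: float = 1.96) -> bool:
    """Draw one sample, build a 95% z-interval for the mean, report whether it contains mu."""
    sample = [rng.gauss(mu, sigma) for _ in range(n)]
    xbar = statistics.fmean(sample)
    half_width = z * sigma / n ** 0.5  # sigma assumed known, for simplicity
    return xbar - half_width <= mu <= xbar + half_width


rng = random.Random(42)
TRUE_MU, SIGMA, N, REPEATS = 10.0, 2.0, 25, 10_000  # hypothetical settings

hits = sum(interval_covers(rng, TRUE_MU, SIGMA, N) for _ in range(REPEATS))
print(f"coverage over {REPEATS} repeated experiments: {hits / REPEATS:.3f}")
```

The printed coverage comes out close to 0.95, which is the only sense in which a frequentist attaches a probability here: to the repeatable procedure, not to the hypothesis about the true mean.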

Handling Uncertainty in Scientific Research

In many artificial intelligence problems, uncertainty is a factor that cannot be ignored, and probabilistic models can also improve the efficiency of standard AI techniques. Bayesian models are among the most commonly used methods for dealing with uncertainty. It is also worth knowing how Bayesian models can be used to reason about the causal structure of a problem, not only its statistics.

Statistical analysis, such as regression and other estimation techniques, tries to infer the parameters of a distribution from samples of a population. Causal analysis goes a step further: it aims to infer knowledge about the process that generated the data. With such knowledge, one can deduce not only the likelihoods of events under static conditions but also the dynamics of events under changing conditions. This matters, for example, when assessing responsibility and attribution, such as whether event x was necessary (or sufficient) for the occurrence of event y.

Statistics deals with behaviour under uncertain but static conditions, while causal analysis deals with changing conditions. For example, the joint distribution of symptoms and diseases does not tell us whether curing the symptoms would cure the diseases. Nor does a distribution tell us how it would itself change if external conditions were to change. In principle, this implies that statistical knowledge alone cannot be converted into causal knowledge.
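The symptom-and-disease point can be made concrete with a small simulation. The Python sketch below assumes a hypothetical toy causal model in which disease causes the symptom; the 10% base rate and the symptom rates are made-up numbers. Observing that the symptom is absent makes disease look rare, yet forcing the symptom away by intervention (a "do" operation) leaves the disease rate at its base value, because the intervention does not touch the mechanism that produces disease.

```python
import random

rng = random.Random(0)
N = 100_000
BASE_RATE = 0.10  # assumed P(disease) in the toy model


def sample(force_symptom=None):
    """Draw (disease, symptom) pairs from a toy causal model: disease -> symptom.

    With force_symptom set, the symptom is fixed by intervention (a do-operation):
    its dependence on disease is cut, while the disease mechanism is left unchanged.
    """
    rows = []
    for _ in range(N):
        disease = rng.random() < BASE_RATE
        if force_symptom is None:
            symptom = rng.random() < (0.90 if disease else 0.05)  # assumed symptom rates
        else:
            symptom = force_symptom
        rows.append((disease, symptom))
    return rows


def rate(values) -> float:
    """Fraction of True values in an iterable of booleans."""
    values = list(values)
    return sum(values) / len(values)


observed = sample()
p_present = rate(d for d, s in observed if s)      # conditioning on the symptom
p_absent = rate(d for d, s in observed if not s)

treated = sample(force_symptom=False)              # "cure" the symptom in everyone
p_do = rate(d for d, _ in treated)

print(f"P(disease | symptom present)      ~ {p_present:.3f}")
print(f"P(disease | symptom absent)       ~ {p_absent:.3f}")
print(f"P(disease | do(symptom = absent)) ~ {p_do:.3f}")
```

Conditioning filters which part of the population we look at; intervening changes a mechanism. The observational joint distribution of two binary variables is equally compatible with a model in which the symptom causes the disease, so the causal direction has to be supplied as an assumption rather than read off the data.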

The debate between Bayesians and frequentists still rages in the scientific community. Meanwhile, some argue for enriching the Bayesian language of probabilities with a causal vocabulary, so that our prior knowledge can inform scientific decisions in a new and more powerful way.