Google AI recently introduced their new Natural Language Processing (NLP) model, known as Fine-tuned LAnguage Net (FLAN), which explores a simple technique called instruction fine-tuning, or instruction tuning for short.

In general, fine-tuning requires a large number of training examples, along with stored model weights for each downstream task, which is not always practical, particularly for large models. FLAN’s instruction tuning instead fine-tunes a model not to solve one specific task, but to make it more amenable to solving NLP tasks in general.

FLAN is fine-tuned on a large set of varied instructions that use a simple and intuitive description of the task, such as “Classify this movie review as positive or negative,” or “Translate this sentence to Danish.” Creating a dataset of instructions from scratch to fine-tune the model would take a considerable amount of resources. Instead, FLAN uses templates to transform existing datasets into an instructional format.
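
The template idea can be illustrated with a minimal Python sketch. Everything here is an assumption made for illustration: the template wording, the `InstructionExample` class, the `to_instruction_examples` helper, and the two toy records are hypothetical and are not FLAN's actual templates or code. The sketch only shows how one labeled record from an existing dataset can be rendered into several instruction-style prompts.

```python
from dataclasses import dataclass

# Hypothetical templates for a sentiment dataset (illustrative wording only,
# not the templates used to train FLAN).
SENTIMENT_TEMPLATES = [
    "Classify this movie review as positive or negative.\n\nReview: {text}\nAnswer:",
    "Review: {text}\n\nIs the sentiment of this review positive or negative?",
]


@dataclass
class InstructionExample:
    prompt: str  # natural-language instruction plus the input text
    target: str  # the answer the model should produce


def to_instruction_examples(records, templates, label_names):
    """Render every record through every template.

    `records` is a list of dicts with "text" and an integer "label";
    `label_names` maps each integer label to the word used as the target.
    """
    examples = []
    for record in records:
        for template in templates:
            examples.append(
                InstructionExample(
                    prompt=template.format(text=record["text"]),
                    target=label_names[record["label"]],
                )
            )
    return examples


# Two toy records standing in for an existing labeled dataset.
records = [
    {"text": "A warm, funny film.", "label": 1},
    {"text": "Two hours I will never get back.", "label": 0},
]

examples = to_instruction_examples(records, SENTIMENT_TEMPLATES, ["negative", "positive"])
print(examples[0].prompt)
print(examples[0].target)
```

Rendering each record through several differently worded templates is one plausible way to get varied instructions out of a single dataset without writing any new examples by hand.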

FLAN demonstrates that a model trained on a set of instructions not only becomes good at solving the kinds of instructions it has seen during training, but also becomes good at following instructions in general.

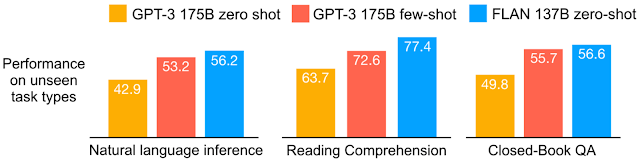

Google AI used established benchmark datasets to compare the performance of FLAN with existing models, evaluating FLAN on each dataset without it having seen any examples from that dataset during training. Across 25 tasks, FLAN improves over zero-shot prompting on all but four. Its results are better than zero-shot GPT-3 on 20 of the 25 tasks, and better than even few-shot GPT-3 on some tasks.

It was also found that at smaller scales, the FLAN technique actually degrades performance, and only at larger scales does the model become able to generalize from instructions in the training data to unseen tasks. This might be because models that are too small do not have enough parameters to perform a large number of tasks.

Google AI hopes that the method presented will help inspire more research into models that can perform unseen tasks and learn from very little data.