Google's AI research team recently introduced two families of neural networks for image recognition: EfficientNetV2 and CoAtNet. EfficientNetV2 consists of convolutional neural networks (CNNs) designed for fast training on smaller-scale datasets such as ImageNet1K (1.28 million images). CoAtNet combines convolution and self-attention to achieve higher accuracy on large-scale datasets such as ImageNet21K (13 million images) and JFT (3 billion images).

According to Google, EfficientNetV2 and CoAtNet are up to four to ten times faster than previous models while achieving a new state-of-the-art (SOTA) top-1 accuracy of 90.88 per cent on the well-established ImageNet dataset. The team has also released the source code and pretrained models in the Google AutoML GitHub repository.

Training efficiency has become a critical focus in deep learning as neural network models and training datasets grow in size. For instance, GPT-3 shows remarkable capabilities in few-shot learning, but it requires weeks of training on thousands of GPUs, making it difficult to retrain or improve. To tackle this issue, Google has proposed two families of image recognition models that train faster and achieve SOTA performance.

EfficientNetV2

Building on the EfficientNet architecture, EfficientNetV2 was developed to deliver smaller models and faster training. The researchers said that they systematically studied the training speed bottlenecks on modern GPUs/TPUs and found that:

- Training with large image sizes results in increased memory usage and, thus, is often slower on GPUs/TPUs.

- Depthwise convolutions are inefficient on GPUs/TPUs, as they exhibit low hardware utilisation.

- The uniform compound scaling approach, which scales up every stage of convolutional networks equally, is sub-optimal.

To address these issues, the researchers proposed a training-aware neural architecture search (NAS), which includes training speed in the optimisation goal, together with a scaling method that scales different stages of the network in a non-uniform manner.
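To make the contrast between uniform and non-uniform scaling concrete, here is a minimal Python sketch. `ALPHA = 1.2` is the depth coefficient from the original EfficientNet compound-scaling rule; the base depths and the per-stage weights passed to `nonuniform_scaling` are hypothetical, chosen only to show later stages growing faster. This is not the rule EfficientNetV2 actually uses, which the article does not spell out.

```python
import math

# Depth coefficient from the original EfficientNet compound-scaling rule:
# every stage's layer count is multiplied by ALPHA ** phi.
ALPHA = 1.2


def uniform_compound_scaling(stage_depths, phi):
    """Scale every stage's layer count by the same factor, ALPHA ** phi."""
    factor = ALPHA ** phi
    return [math.ceil(d * factor) for d in stage_depths]


def nonuniform_scaling(stage_depths, phi, stage_weights):
    """Hypothetical non-uniform rule: each stage has its own weight on phi,
    so stages with larger weights receive proportionally more layers."""
    return [
        math.ceil(d * ALPHA ** (phi * w))
        for d, w in zip(stage_depths, stage_weights)
    ]


# Illustrative base depths (layers per stage) for a seven-stage network.
BASE_DEPTHS = [1, 2, 2, 3, 3, 4, 1]

uniform = uniform_compound_scaling(BASE_DEPTHS, phi=2)
# Hypothetical weights that favour the later stages.
later_heavy = nonuniform_scaling(
    BASE_DEPTHS, phi=2, stage_weights=[0.5, 0.5, 0.5, 1.0, 1.5, 2.0, 2.0]
)
print("uniform:    ", uniform)
print("later-heavy:", later_heavy)
```

Both variants grow the network, but the uniform rule multiplies every stage by the same factor, while the hypothetical non-uniform rule spends most of the extra depth in the later stages.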

The training-aware NAS builds on the earlier platform-aware NAS. Whereas that method focused on inference speed, in this study the researchers jointly optimised training speed, model accuracy and model size.
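A training-aware search needs a single score that trades off all three objectives. The sketch below assumes the weighted-product reward A·S^w·P^v described in the EfficientNetV2 paper, as we read it: A is accuracy, S is normalised training step time, P is normalised parameter size, with w = -0.07 and v = -0.05. The function name and the candidate numbers are hypothetical.

```python
def training_aware_reward(accuracy, step_time, param_size, w=-0.07, v=-0.05):
    """Weighted-product search reward A * S**w * P**v.

    accuracy:   validation accuracy of a candidate model (0-1)
    step_time:  training step time, normalised to a reference model
    param_size: parameter count, normalised to a reference model
    The negative exponents penalise slower and larger candidates.
    """
    return accuracy * (step_time ** w) * (param_size ** v)


# Two hypothetical candidates: the second is slightly more accurate,
# but twice as slow to train and 1.5x larger.
baseline = training_aware_reward(accuracy=0.800, step_time=1.0, param_size=1.0)
slower = training_aware_reward(accuracy=0.805, step_time=2.0, param_size=1.5)
print(f"baseline={baseline:.4f}, slower={slower:.4f}")
```

With these exponents, doubling the step time alone scales the reward by 2^-0.07, roughly 0.95, so a slower candidate has to be noticeably more accurate before the search prefers it.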

They also extended the original search space to include more accelerator-friendly operations such as Fused-MBConv, and simplified it by removing unnecessary operations such as average pooling and max pooling. The resulting EfficientNetV2 models achieved better accuracy than all previous models while being much faster and up to 6.8x smaller.
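Fused-MBConv replaces the 1x1 expansion convolution and the depthwise 3x3 convolution of an MBConv block with a single regular 3x3 convolution. The sketch below counts weights for both block types to show the trade-off. It is a simplification that ignores squeeze-and-excitation, batch normalisation and biases, and the channel sizes in the example are arbitrary.

```python
def mbconv_params(c_in, c_out, expand, k=3):
    """Weights in an MBConv block (ignoring squeeze-excitation, BN and biases)."""
    c_mid = c_in * expand
    expand_1x1 = c_in * c_mid       # 1x1 pointwise expansion
    depthwise = k * k * c_mid       # kxk depthwise: one filter per channel
    project_1x1 = c_mid * c_out     # 1x1 pointwise projection
    return expand_1x1 + depthwise + project_1x1


def fused_mbconv_params(c_in, c_out, expand, k=3):
    """Weights in a Fused-MBConv block: a single dense kxk conv does both
    the channel expansion and the spatial mixing."""
    c_mid = c_in * expand
    fused_kxk = k * k * c_in * c_mid
    project_1x1 = c_mid * c_out
    return fused_kxk + project_1x1


for name, fn in [("MBConv", mbconv_params), ("Fused-MBConv", fused_mbconv_params)]:
    print(f"{name}: {fn(64, 64, expand=4):,} weights")
```

Fused-MBConv carries several times more weights here, but it runs as dense convolutions that accelerators execute efficiently, unlike the low-utilisation depthwise step. As we understand the paper, this is why EfficientNetV2 uses Fused-MBConv only in its early stages, where channel counts are small.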

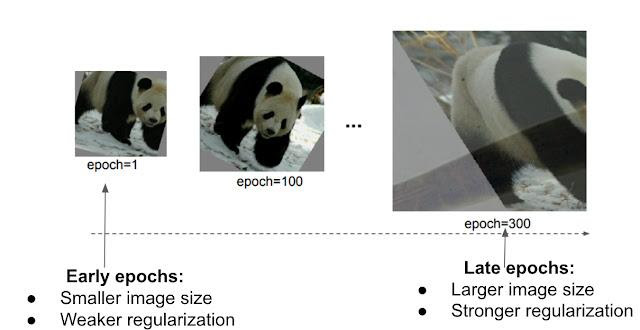

To speed up training further, the researchers proposed an improved form of progressive learning, which gradually changes the image size and regularisation magnitude during training. Progressive training has previously been used in image classification, GANs and language models.

Unlike previous approaches, which often trade accuracy for improved training speed, this new approach focuses on image classification and can slightly improve accuracy while significantly reducing training time. The researchers said the key idea is to adaptively adjust the regularisation strength (such as the dropout ratio or data augmentation magnitude) according to the image size.

In other words, a small image size leads to lower network capacity and therefore requires weak regularisation; conversely, a large image size requires stronger regularisation to combat overfitting.
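The schedule described above can be sketched as a linear interpolation over training stages. This is a minimal illustration, not Google's implementation: `progressive_schedule` is a hypothetical helper, and the stage count and the start/end values for image size, dropout and RandAugment magnitude (standing in for "data augmentation magnitude") are assumptions chosen for readability.

```python
from dataclasses import dataclass


@dataclass
class StageConfig:
    image_size: int
    dropout: float
    randaug_magnitude: float


def _lerp(start, end, t):
    """Linear interpolation between start (t=0) and end (t=1)."""
    return start + (end - start) * t


def progressive_schedule(num_stages, size_range, dropout_range, randaug_range):
    """Return one StageConfig per training stage: the first stage uses the
    smallest images with the weakest regularisation, the last stage the
    largest images with the strongest regularisation."""
    if num_stages < 2:
        raise ValueError("progressive learning needs at least two stages")
    stages = []
    for i in range(num_stages):
        t = i / (num_stages - 1)  # 0.0 at the first stage, 1.0 at the last
        stages.append(
            StageConfig(
                image_size=round(_lerp(*size_range, t)),
                dropout=_lerp(*dropout_range, t),
                randaug_magnitude=_lerp(*randaug_range, t),
            )
        )
    return stages


# Illustrative values only: 4 stages, images growing from 128 to 300 pixels.
schedule = progressive_schedule(
    4, size_range=(128, 300), dropout_range=(0.1, 0.3), randaug_range=(5, 15)
)
for stage in schedule:
    print(stage)
```

In a real training loop, each stage would rebuild the input pipeline at `image_size` and set the dropout and augmentation strength before running that stage's share of the epochs; image size and regularisation always move together, which is the point of the adaptive scheme.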

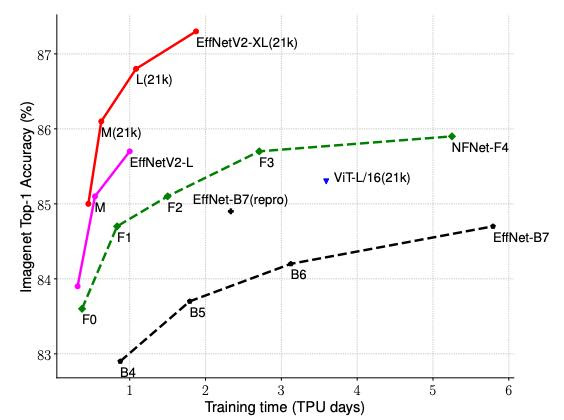

The researchers tested the EfficientNetV2 models on ImageNet and several transfer learning datasets, such as CIFAR-10/100, Flowers and Cars. The graph below shows that EfficientNetV2 achieved much better training efficiency than prior models for ImageNet classification: 5 to 11x faster training and up to 6.8x smaller model size, without any drop in accuracy.

CoAtNet

Inspired by Vision Transformer (ViT), the Google researchers expanded their study beyond convolutional neural networks (EfficientNetV2) to find faster and more accurate vision models.

In a research paper, ‘CoAtNet: Marrying Convolution and Attention for All Data Sizes’, the team systematically studied how convolution and self-attention can be combined to develop fast and accurate neural networks for large-scale image recognition.

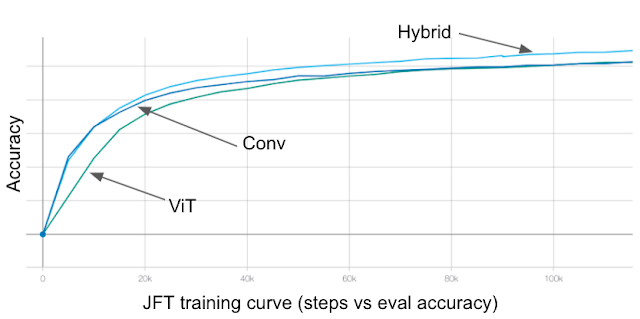

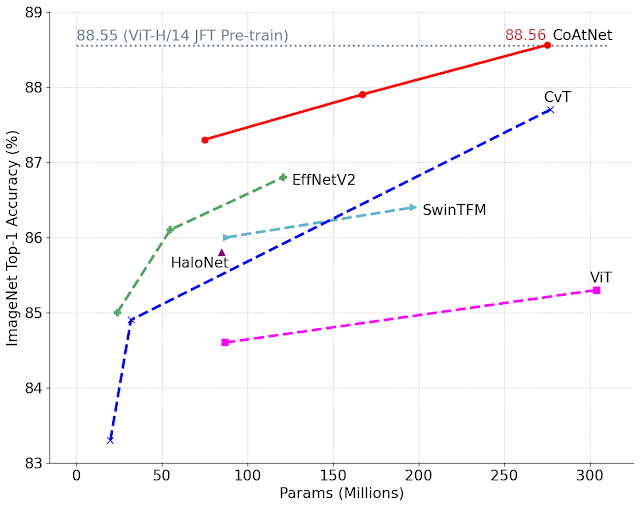

The researchers said that convolution often generalises better due to its inductive bias, while self-attention tends to have greater capacity thanks to its global receptive field. By combining convolution and self-attention, they said, their hybrid models could achieve both better generalisation and greater capacity, as shown in the graph below.

While conducting the study, the researchers noted two key insights:

- Depthwise convolution and self-attention can be naturally unified using simple relative attention.

- Vertically stacking convolution layers and attention layers in a way that accounts for the capacity and computation required at each stage is surprisingly effective in improving generalisation, capacity and efficiency.
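To see why the first insight holds, note that a depthwise convolution mixes neighbouring positions with a static weight w_{i-j} that depends only on their relative offset, whereas self-attention mixes all positions with input-dependent weights from a softmax over x_i · x_j. Adding the static offset bias inside the softmax gives a relative attention that has both properties. The NumPy sketch below assumes a single head, a 1-D sequence and no query/key/value projections; it is a simplification for illustration, not the CoAtNet layer itself.

```python
import numpy as np


def relative_attention_1d(x, w_rel):
    """Relative attention over a 1-D sequence (bias added before the softmax).

    x:     (n, d) array of input tokens
    w_rel: (2n - 1,) learned scalar bias per relative offset i - j,
           stored so that w_rel[(i - j) + n - 1] is the bias for offset i - j
    Computes y_i = sum_j softmax_j(x_i . x_j + w_{i-j}) * x_j.
    """
    n = x.shape[0]
    logits = x @ x.T                                         # content term x_i . x_j
    offsets = np.arange(n)[:, None] - np.arange(n)[None, :]  # i - j for every pair
    logits = logits + w_rel[offsets + n - 1]                 # static, convolution-like bias
    logits = logits - logits.max(axis=1, keepdims=True)      # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=1, keepdims=True)            # softmax over j
    return weights @ x


rng = np.random.default_rng(0)
tokens = rng.normal(size=(6, 8))
n = tokens.shape[0]
# Hypothetical bias favouring each token's immediate neighbours (offsets -1, 0, +1).
bias = np.zeros(2 * n - 1)
bias[n - 2 : n + 1] = 2.0
out = relative_attention_1d(tokens, bias)
print(out.shape)
```

With a zero bias this reduces to plain softmax self-attention; with a very large bias on a few offsets it behaves like a fixed local kernel, which is the sense in which the two operations are unified.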

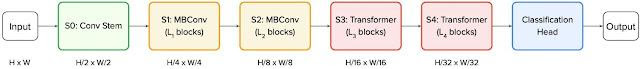

Based on these insights, the researchers developed a family of hybrid models combining convolution and attention, called CoAtNets. The image below shows the overall architecture of CoAtNet.
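One way to see why the stage at which attention is introduced matters is to count tokens per stage. The sketch assumes a layout of a convolutional stem followed by four stages that each halve the spatial resolution, with two convolution stages followed by two Transformer stages ("C-C-T-T"); this is our reading of the CoAtNet design, so treat the layout and the 224-pixel input as assumptions.

```python
# Assumed CoAtNet-style layout: a convolutional stem (S0) followed by four
# stages S1-S4, each halving the spatial resolution. "C" marks a
# convolution (MBConv) stage and "T" a Transformer (relative-attention) stage.
LAYOUT = ("C", "C", "T", "T")


def stage_costs(image_size=224, layout=LAYOUT):
    """Return (stage, block type, feature-map side, tokens, attention pairs)."""
    side = image_size // 2  # the stem downsamples by 2
    rows = []
    for idx, kind in enumerate(layout, start=1):
        side //= 2          # each stage downsamples by 2 again
        tokens = side * side
        # Global attention compares every token with every other token.
        attention_pairs = tokens * tokens if kind == "T" else None
        rows.append((f"S{idx}", kind, side, tokens, attention_pairs))
    return rows


for stage, kind, side, tokens, pairs in stage_costs():
    print(f"{stage} [{kind}] {side}x{side} -> {tokens} tokens, attention pairs: {pairs}")

# What global attention would cost if it were placed in S1 instead.
s1_tokens = stage_costs()[0][3]
print(f"attention pairs at S1: {s1_tokens ** 2:,}")
```

Under these assumptions, global attention at S1 would involve 256 times more token pairs than at S3, which is why placing convolution early (where it is cheap and generalises well) and attention late (where feature maps are small) balances capacity against computation.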

According to Google, CoAtNet models outperformed ViT models and their variants across several datasets, including ImageNet1K, ImageNet21K, and JFT. Furthermore, compared to convolutional networks, CoAtNet showed comparable performance on a small-scale dataset (ImageNet1K) and achieved substantial gains as the data size increased (for example, on ImageNet21K and JFT).

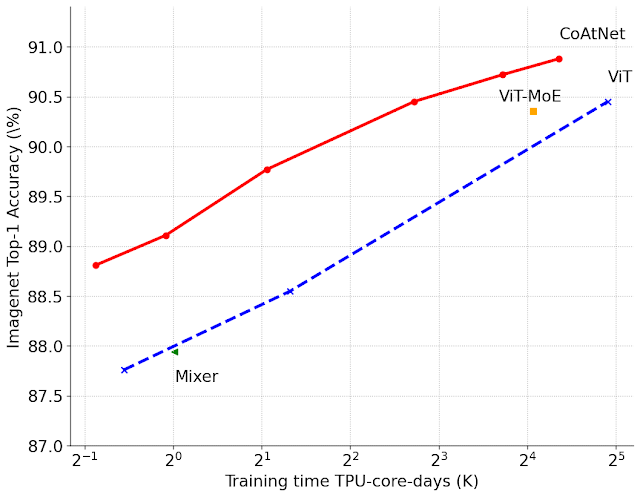

The researchers also evaluated CoAtNets on the large-scale JFT dataset. CoAtNet trained about 4x faster than previous ViT models and, more importantly, achieved a new SOTA top-1 accuracy of 90.88 per cent on ImageNet.

Wrapping up

Currently, all EfficientNetV2 models are open-sourced, and the pretrained models are also available on TF Hub. CoAtNet models will be open-sourced shortly. “In the future, we plan to optimise these models further and apply them to new tasks, such as zero-shot learning and self-supervised learning, which often require fast models with high capacity,” said the Google AI team.