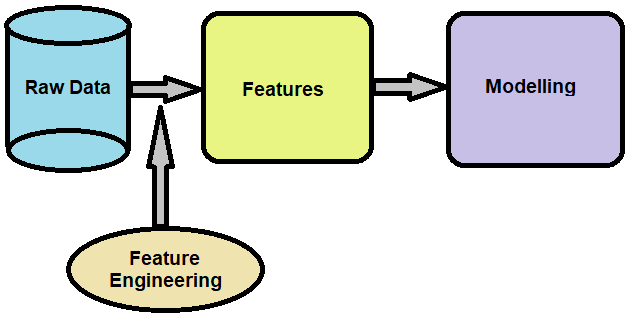

A feature can be said as the numeric representation of both structured and unstructured data. Feature engineering is one of the crucial steps in the process of predictive modelling. This method basically involves the transformation of given feature space, typically using mathematical functions, with the objective of reducing the modeling error for a given target.

Feature engineering creates features from the existing raw data in order to increment the predictive power of the machine learning algorithms. Generally, the feature engineering process is applied to generate additional features from the raw data. The new features are expected to provide additional information that is not clearly captured or easily apparent in the original or existing feature set.

Some of the feature engineering techniques are as mentioned below:

Binning

Binning or grouping data (sometimes called quantisation) is an important tool in preparing numerical data for machine learning. This tool is useful in replacing a column of numbers with categorical values that represent specific ranges, a column of continuous numbers has too many unique values to model effectively, etc.

Feature Hashing

Feature hashing, also known as hashing trick is the process of vectorising features. It can be said as one of the key techniques used in scaling-up machine learning algorithms. In text mining techniques such as document classification, sentiment analysis, etc. feature hashing has been broadly used as a method of converting tokens into integers. This process is basically done by applying a hash function to the features and using their hash values as indices directly. Feature hashing uses a random sparse projection matrix in order to reduce the dimension of the data while approximately preserving the Euclidean norm.

Log Transforms

Skewness can be said as a measure of the asymmetry of the probability distribution of a real-valued random variable about its mean. Log transform is one of the powerful tools for the analysis of data in order to make the highly skewed distributions less skewed. Then, these less skewed distributions can be valuable for making patterns in the data more interpretable along with a way to meet the assumptions of inferential statistics.

n-grams

n-grams are the effect of generalising the set-of-words approach by using word sequences. This method is used for checking ‘n’ continuous data (words or sounds) from a given sequence of text or speech. This model helps to predict the next item in a sequence. In sentiment analysis, the n-gram model helps to analyze the sentiment of the text or document.

Binarisation

Binarisation is the process of transforming data features of any entity into vectors of binary numbers to make classifier algorithms more efficient. Binarising data or threshold data can be said when all values above the threshold are marked 1 and all equal to or below are marked as 0. It can be useful when you have probabilities that you want to make crisp values.

Bag-of-words

Bag-of-Words (BoW) is an algorithm for feature engineering which counts how many times a word appears in a specific document. Those word counts enable us to compare documents and estimate their similarities for applications like search, document classification, and topic modelling. It is basically a method of interpreting text data when modelling text with machine learning algorithms. Bag-of-words approach can be widely used in natural language processing, document classifications, etc.