We often know very little about the technology we have at hand. Smartphones, tablets, televisions and the other devices we use every day are extraordinarily powerful tools. What lies behind each of these technologies? Today I’m going to talk about the Microsoft Kinect sensor.

What is the Kinect sensor?

The Kinect sensor was designed to change the way people play with game consoles at home. It was the first device that let us operate a console without touching a controller: we control the device’s functions through its vision system alone.

It was first presented at the Electronic Entertainment Expo (E3) in 2009 under the name “Project Natal”, and it went on sale in 2010. It can be described as a controller-free game and entertainment system, and its creator was Alex Kipman. Microsoft developed it for the Xbox 360 game console.

A version for PCs running Windows 7 and Windows 8 followed, with Microsoft releasing a first beta of its Kinect SDK for Windows in 2011. What sets Kinect apart is its ability to recognize gestures, voice commands and objects in images.

Behind this innovative technology lies a combination of cameras, microphones and software, all housed within the Kinect itself.

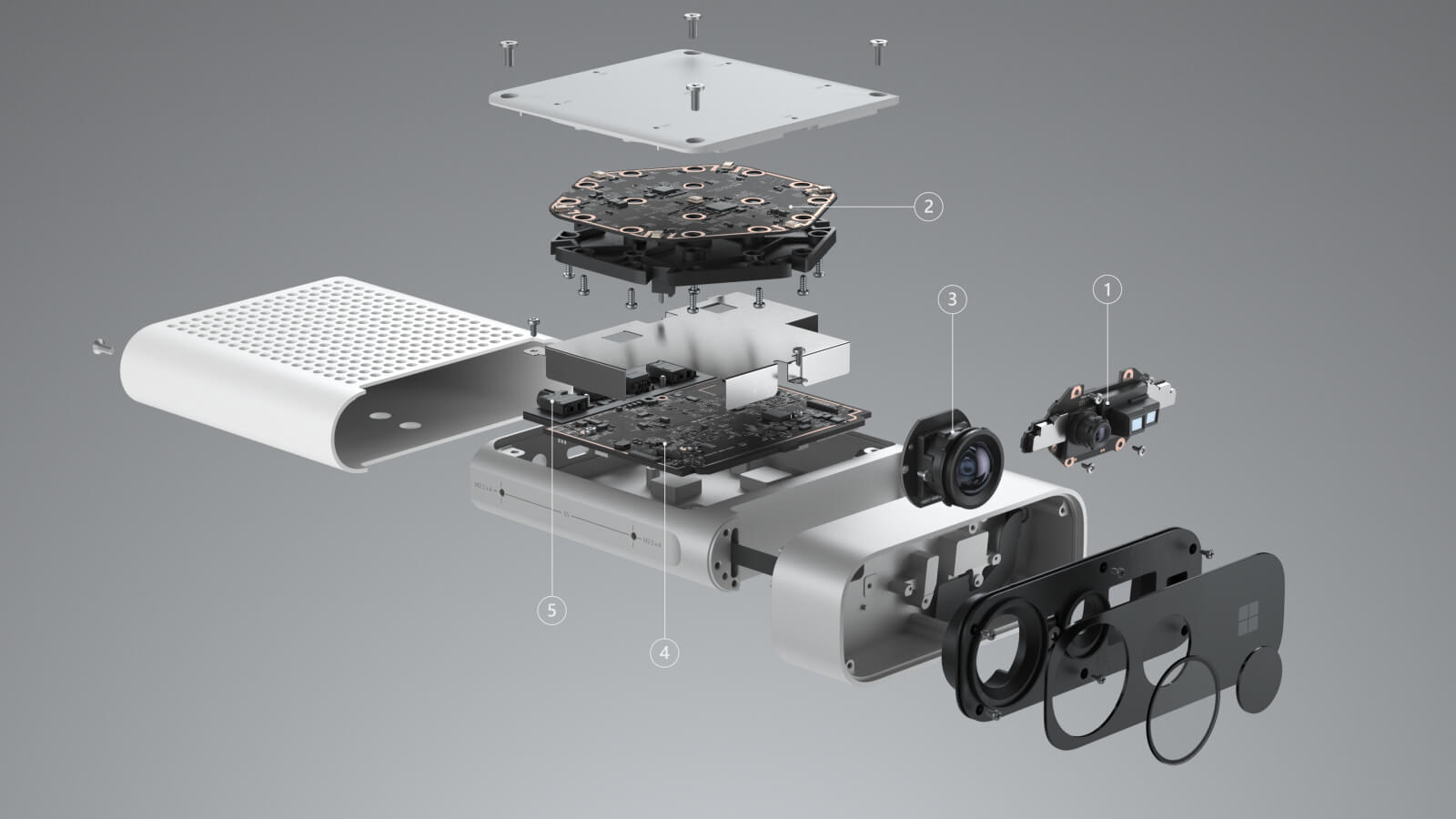

Basic parts of the Kinect sensor

-RGB color video camera. It works like a webcam, capturing video. The Kinect sensor uses these images to obtain details about the objects and people in the room.

-IR emitter. The infrared emitter projects a pattern of infrared light into the room. When this light strikes a surface, the pattern is distorted. The distortion is read by another component, the depth camera.

-Depth camera. It analyzes the infrared pattern projected by the emitter and builds a 3D map of the room and of all the objects and people in it.

-Microphone array. The Kinect sensor has four built-in precision microphones that can determine where sounds and voices come from. It can also filter out background noise.

-Tilt motor. A motor in the base can tilt the Kinect sensor up and down. It detects the size of the person in front of it and adjusts the angle accordingly.

Everything comes neatly assembled in a horizontal bar about 28 cm long, which rests on a rounded square base.
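The microphone array’s ability to tell where a sound comes from can be illustrated with a time-difference-of-arrival calculation. The sketch below is a simplified stand-in, not the processing Kinect itself performs: it assumes just two microphones a hypothetical 0.2 m apart and a 16 kHz sample rate, finds the delay between them by brute-force cross-correlation, and converts that delay into an angle.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # speed of sound in air at roughly 20 °C


def estimate_delay(sig_a, sig_b, max_lag):
    """Return the lag (in samples) at which sig_b best matches sig_a.

    A positive lag means the sound reached microphone A first and
    microphone B `lag` samples later.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Cross-correlation at this lag: sum of a[i] * b[i + lag].
        score = sum(
            a * sig_b[i + lag]
            for i, a in enumerate(sig_a)
            if 0 <= i + lag < len(sig_b)
        )
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def direction_of_arrival(sig_a, sig_b, mic_spacing_m, sample_rate_hz):
    """Angle of the sound source in degrees.

    0° means straight ahead (equidistant from both microphones); positive
    angles mean the source is closer to microphone A.
    """
    # The delay can never exceed the time sound needs to cross the spacing.
    max_lag = int(mic_spacing_m / SPEED_OF_SOUND_M_S * sample_rate_hz) + 1
    delay_s = estimate_delay(sig_a, sig_b, max_lag) / sample_rate_hz
    # Extra path length to the farther microphone = spacing * sin(angle).
    ratio = delay_s * SPEED_OF_SOUND_M_S / mic_spacing_m
    ratio = max(-1.0, min(1.0, ratio))  # guard against rounding past ±1
    return math.degrees(math.asin(ratio))


# Synthetic "click" that reaches microphone B 5 samples after microphone A.
mic_a = [0.0] * 64
mic_b = [0.0] * 64
mic_a[20] = 1.0
mic_b[25] = 1.0
print(round(direction_of_arrival(mic_a, mic_b, 0.2, 16_000), 1))
```

With four microphones instead of two, the same pairwise delays can be combined to resolve the direction more robustly.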

But what really makes this device intelligent is its software. Kinect captures an enormous amount of data, always focusing on the things that move in its environment. By processing this data with artificial intelligence and machine learning algorithms, Kinect can interpret the visual data it obtains through its sensors.

The goal is to be able to detect human beings and understand what position each detected person is in.

Kinect Sensor Software

Once the sensors capture the information, it is immediately processed by artificial intelligence software, which can classify the different objects in a scene. It recognizes humans by their heads and limbs.

Kinect understands how a human being moves and assumes, for example, that we cannot turn our heads 360º or perform other impossible movements. This is obvious to us, but for a machine it requires a long learning period.

This type of learning is known as machine learning. It consists of analyzing a huge amount of real-life data to find patterns. In a way, this is also how human beings learn: by observing.
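The idea of learning what is physically possible from examples, rather than writing it down by hand, can be shown in miniature. In this sketch, which is only an analogy for what Kinect learns, the allowed range of each joint angle is estimated from recorded poses and then used to reject impossible ones; the joint names, the angles and the 5° safety margin are all made up for illustration.

```python
def learn_limits(observations, margin_deg=5.0):
    """Learn a plausible angle interval per joint from example poses.

    `observations` maps a joint name to the angles (in degrees) seen in
    recordings of real people.
    """
    return {
        joint: (min(angles) - margin_deg, max(angles) + margin_deg)
        for joint, angles in observations.items()
    }


def implausible_joints(pose, limits):
    """Return the joints of `pose` whose angle falls outside the learned limits."""
    return [
        joint
        for joint, angle in pose.items()
        if joint in limits and not (limits[joint][0] <= angle <= limits[joint][1])
    ]


# Hypothetical training data: head yaw and elbow flexion angles (degrees).
examples = {
    "head_yaw": [-75, -40, -10, 0, 12, 35, 60, 78],
    "elbow_flexion": [0, 15, 45, 90, 120, 140],
}
limits = learn_limits(examples)
print(implausible_joints({"head_yaw": 30, "elbow_flexion": 100}, limits))   # []
print(implausible_joints({"head_yaw": 180, "elbow_flexion": 100}, limits))  # ['head_yaw']
```

Real systems learn far richer models than a min/max range, but the principle is the same: the limits come from data, not from hand-written rules.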

Kinect captures people’s movement through more than 48 points of articulation. It does this with a fairly complex algorithm that takes many factors into account. So, how does it work?

- Build a depth image using the IR emitter and the depth camera. This is done by triangulation, just as astronomers use parallax to work out the distance to stars outside the solar system. (This applies to the original Kinect; Kinect v2 measures depth by time of flight instead.)

- Detect the parts of a person’s body. This is done with decision trees (a randomized decision forest), whose decision rules were learned during a training phase from more than a million examples.
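The two steps above can be sketched in a few lines. This is a toy under stated assumptions, not Microsoft’s implementation: depth comes from the textbook triangulation relation Z = f·b/d (focal length f, emitter-to-camera baseline b, observed pattern shift or disparity d) with illustrative values for f and b, and the body-part step uses depth-difference features of the kind described in Microsoft Research’s published work on Kinect pose estimation, evaluated by a single hand-built tree instead of a forest of trees learned from training data. The tiny scene, offsets and thresholds are invented.

```python
FOCAL_PX = 580.0     # illustrative IR camera focal length, in pixels (assumed)
BASELINE_M = 0.075   # illustrative emitter-to-camera distance, in metres (assumed)
OUTSIDE_M = 10.0     # depth returned for probes that land outside the image


def depth_from_disparity(disparity_px):
    """Step 1, triangulation: a pattern dot shifted by d pixels lies at Z = f*b/d."""
    return FOCAL_PX * BASELINE_M / disparity_px


def depth_at(depth, x, y):
    """Read a depth value, treating probes outside the image as far background."""
    if 0 <= y < len(depth) and 0 <= x < len(depth[0]):
        return depth[y][x]
    return OUTSIDE_M


def depth_feature(depth, x, y, u, v):
    """Depth-difference feature: compare the depth at two probe offsets u and v.

    Offsets are divided by the depth at (x, y), so the probes cover the same
    part of the body whether the person stands near or far.
    """
    d = depth_at(depth, x, y)
    probe_u = depth_at(depth, x + round(u[0] / d), y + round(u[1] / d))
    probe_v = depth_at(depth, x + round(v[0] / d), y + round(v[1] / d))
    return probe_u - probe_v


def classify_pixel(tree, depth, x, y):
    """Step 2: walk the tree from the root until reaching a body-part leaf."""
    node = tree
    while node[0] == "split":
        _, u, v, threshold, if_less, otherwise = node
        node = if_less if depth_feature(depth, x, y, u, v) < threshold else otherwise
    return node[1]


# Offsets in pixel-metres: at a depth of 2 m they reach one pixel away.
UP, LEFT, RIGHT, HERE = (0.0, -2.0), (-2.0, 0.0), (2.0, 0.0), (0.0, 0.0)

# Hand-built tree: a pixel is "head" if background lies above, left and right.
TREE = ("split", UP, HERE, 1.0,
        ("leaf", "body"),
        ("split", LEFT, HERE, 1.0,
         ("leaf", "body"),
         ("split", RIGHT, HERE, 1.0,
          ("leaf", "body"),
          ("leaf", "head"))))

# Disparities of a tiny scene (5 wide, 4 tall): wall at 4 m, person at 2 m.
FAR, NEAR = 10.875, 21.75
disparity = [
    [FAR, FAR, FAR, FAR, FAR],
    [FAR, FAR, NEAR, FAR, FAR],    # head
    [FAR, NEAR, NEAR, NEAR, FAR],  # shoulders
    [FAR, NEAR, NEAR, NEAR, FAR],  # torso
]
depth = [[depth_from_disparity(d) for d in row] for row in disparity]
print(classify_pixel(TREE, depth, 2, 1))  # head
print(classify_pixel(TREE, depth, 2, 3))  # body
```

Dividing the offsets by depth is the design choice that makes the feature work for people standing at different distances; in a learned forest, the offsets and thresholds at each node are chosen automatically from the training examples.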

This is a very simplified description of how the algorithm works. Behind it lies an extensive mathematical foundation: probability and statistics, multivariable calculus, linear algebra, complex analysis, graph algorithms, geometry, differential equations, topology and more.

Create your own project with Kinect

There are several options for using Kinect in our projects. The best one is to work with the SDK that Microsoft offers developers.

There are two SDKs, depending on the version of Kinect you have: version 1.8 for Kinect v1 and version 2.0 for Kinect v2.

You can develop Kinect applications in Visual Studio using Microsoft’s own languages, C# and Visual Basic.

There are other, “simpler” alternatives. One is to work with Processing, which requires setting up our machine and taking special care when installing the SDK and drivers. Another option is to program it in Python.

Real-life examples with Kinect

Kinect opens up a wide range of possibilities in many sectors.

Medicine: GestSure

In hospital operating rooms, magnetic resonance imaging (MRI) and computed tomography (CT) scans are among the most important resources available to physicians. Unfortunately for patients and surgeons, consulting these images during surgery can be slow and costly, because the surgeon must stay sterile and cannot simply touch a keyboard or mouse.

GestSure is a solution created by a North American company that lets surgeons browse these images with hand gestures, thanks to Kinect. This saves the surgeon valuable time while the patient is under anesthesia.
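Touchless browsing of this kind boils down to turning tracked hand positions into commands. The sketch below is a hypothetical illustration, not the product’s actual method: it assumes the skeleton tracker delivers the hand’s horizontal position in metres once per frame (positive x taken as the user’s right), and treats a movement of at least 30 cm within the last 15 frames as a swipe that steps to the next or previous scan. The thresholds and the viewer function are invented for the example.

```python
def detect_swipe(hand_x_m, min_distance_m=0.3, window=15):
    """Return "swipe_right", "swipe_left" or None from recent hand x positions."""
    recent = hand_x_m[-window:]
    if len(recent) < 2:
        return None
    displacement = recent[-1] - recent[0]
    if displacement >= min_distance_m:
        return "swipe_right"
    if displacement <= -min_distance_m:
        return "swipe_left"
    return None


def next_image_index(current, gesture, num_images):
    """Hypothetical image viewer: swipe right = next scan, swipe left = previous."""
    if gesture == "swipe_right":
        return min(current + 1, num_images - 1)
    if gesture == "swipe_left":
        return max(current - 1, 0)
    return current


# Hand moving right by 5 cm per frame over 10 frames.
track = [0.05 * i for i in range(10)]
gesture = detect_swipe(track)
print(gesture, next_image_index(3, gesture, 20))  # swipe_right 4
```

Requiring a minimum distance within a short window keeps slow, incidental hand movements during surgery from being read as commands.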

Industry: KinectFusion

This project lets you use a Kinect as a high-quality 3D scanner for both small and large objects. It is free software included in the SDK that Microsoft offers.

It was developed at Microsoft Research, Microsoft’s research division.
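The core idea that lets KinectFusion build a clean model from noisy depth frames is to average many measurements in a voxel grid that stores a truncated signed distance function (TSDF): each voxel records how far it is from the observed surface, and the surface is wherever that distance crosses zero. The sketch below reduces this to a single camera ray (so the grid is one-dimensional) and leaves out the camera tracking that the real system also performs; the 5 cm truncation distance, the voxel size and the readings are assumed values.

```python
TRUNCATION_M = 0.05  # truncation distance (assumed value)


class RayTSDF:
    """TSDF voxels sampled along one camera ray, a 1D slice of a 3D volume."""

    def __init__(self, z_start_m, z_end_m, voxel_m):
        count = int(round((z_end_m - z_start_m) / voxel_m)) + 1
        self.z = [z_start_m + i * voxel_m for i in range(count)]
        self.tsdf = [1.0] * count    # normalised distance, 1.0 = free space
        self.weight = [0.0] * count  # 0.0 = never observed

    def integrate(self, measured_depth_m, frame_weight=1.0):
        """Fuse one depth measurement into every voxel along the ray."""
        for i, z in enumerate(self.z):
            sdf = measured_depth_m - z  # positive in front of the surface
            if sdf < -TRUNCATION_M:
                continue  # well behind the surface: hidden, so no information
            f = min(1.0, sdf / TRUNCATION_M)  # truncate and normalise to [-1, 1]
            w = self.weight[i]
            # Weighted running average of all measurements seen so far.
            self.tsdf[i] = (w * self.tsdf[i] + frame_weight * f) / (w + frame_weight)
            self.weight[i] = w + frame_weight

    def surface_depth(self):
        """Return the depth of the zero crossing, found by linear interpolation."""
        for i in range(len(self.z) - 1):
            a, b = self.tsdf[i], self.tsdf[i + 1]
            if self.weight[i] > 0 and self.weight[i + 1] > 0 and a > 0 >= b:
                return self.z[i] + a / (a - b) * (self.z[i + 1] - self.z[i])
        return None


ray = RayTSDF(0.9, 1.1, 0.01)
for reading in (0.998, 1.002, 1.000):  # three noisy readings of a wall at 1 m
    ray.integrate(reading)
print(round(ray.surface_depth(), 4))  # 1.0
```

Because each new frame only nudges the running average, random noise in individual depth frames cancels out while the surface itself stays put.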

Integration: Kinect Sign Language Translator

Researchers from China, in collaboration with Microsoft Research, developed a sign language translator that enables communication between people who use sign language and those who do not. It works in both directions: it translates sign language into written text or speech and, conversely, uses an avatar to show in sign language what a hearing person is saying.
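One common way to recognize a sign from the hand trajectories a depth sensor tracks is to compare it with recorded examples using dynamic time warping (DTW), which tolerates signs performed faster or slower than the recording. This is a swapped-in textbook technique, not a description of how the translator above works, and the two-word vocabulary and 2D trajectories below are invented.

```python
import math


def dtw_distance(seq_a, seq_b):
    """Dynamic time warping distance between two sequences of (x, y) points."""
    n, m = len(seq_a), len(seq_b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = math.dist(seq_a[i - 1], seq_b[j - 1])
            # Extend the cheapest alignment: match both points, or repeat one
            # point of either sequence to absorb differences in speed.
            cost[i][j] = step + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])
    return cost[n][m]


def recognize(trajectory, templates):
    """Return the word whose recorded template is closest under DTW."""
    return min(templates, key=lambda word: dtw_distance(trajectory, templates[word]))


# Hypothetical templates: hand positions (metres) recorded for two signs.
templates = {
    "hello": [(0.0, 0.0), (0.1, 0.0), (0.2, 0.0), (0.3, 0.0)],
    "thanks": [(0.0, 0.0), (0.0, -0.1), (0.0, -0.2), (0.0, -0.3)],
}
# The same horizontal movement as "hello", performed more slowly.
observed = [(0.0, 0.0), (0.05, 0.0), (0.1, 0.0), (0.15, 0.0), (0.2, 0.0), (0.3, 0.0)]
print(recognize(observed, templates))  # hello
```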

Geology: Augmented Reality Sandbox

It is a prototype created at the University of California, Davis. It consists of a sandbox, a Kinect sensor and a projector. Thanks to the Kinect’s depth camera, we can model virtual terrains and landscapes as if they were real.

As we dig holes and build mountains, Kinect measures the shape of the sand and the projector displays the result onto the box itself. We can see the contour lines and even simulate the behavior of volcanoes, rivers, lakes and seas.
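The sandbox’s contour lines follow directly from the depth camera’s readings. Assuming the Kinect looks straight down from a fixed height and reports depth in whole millimetres, each reading can be turned into an elevation, assigned to a contour band, and compared with its neighbours. The sensor height, band spacing and water level below are hypothetical, and a working setup would also need noise filtering and projector calibration, which this sketch omits.

```python
SENSOR_HEIGHT_MM = 1000  # hypothetical height of the Kinect above the box floor
CONTOUR_STEP_MM = 50     # hypothetical spacing between contour lines
WATER_LEVEL_MM = 0       # hypothetical "sea level": lower sand is shown as water


def elevation_map(depth_mm):
    """Convert downward-looking depth readings into sand heights."""
    return [[SENSOR_HEIGHT_MM - d for d in row] for row in depth_mm]


def contour_bands(elevation_mm):
    """Index of the contour band each cell falls in (floor division)."""
    return [[e // CONTOUR_STEP_MM for e in row] for row in elevation_mm]


def contour_cells(bands):
    """Mark cells whose band differs from the neighbour to the right or below."""
    rows, cols = len(bands), len(bands[0])
    marks = [[False] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            right_differs = x + 1 < cols and bands[y][x] != bands[y][x + 1]
            below_differs = y + 1 < rows and bands[y][x] != bands[y + 1][x]
            marks[y][x] = right_differs or below_differs
    return marks


def water_cells(elevation_mm):
    """Cells dug below the water level, to be coloured as water."""
    return [[e < WATER_LEVEL_MM for e in row] for row in elevation_mm]


# A 3x3 patch of sand with a 10 cm mound in the middle and a 5 cm hole in a corner.
depth_mm = [
    [1000, 1000, 1000],
    [1000, 900, 1000],
    [1000, 1000, 1050],
]
elevation = elevation_map(depth_mm)
print(contour_bands(elevation))  # [[0, 0, 0], [0, 2, 0], [0, 0, -1]]
print(water_cells(elevation))
```

Working in integer millimetres keeps the band boundaries exact; with floating-point metres, a height of 0.1 m could land just below a band edge.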